Keywords

Computer Science and Digital Science

- A3.1.4. Uncertain data

- A3.1.10. Heterogeneous data

- A3.4.1. Supervised learning

- A3.4.2. Unsupervised learning

- A3.4.3. Reinforcement learning

- A3.4.4. Optimization and learning

- A3.4.5. Bayesian methods

- A3.4.6. Neural networks

- A3.4.8. Deep learning

- A5.1. Human-Computer Interaction

- A5.1.1. Engineering of interactive systems

- A5.1.2. Evaluation of interactive systems

- A5.1.5. Body-based interfaces

- A5.1.8. 3D User Interfaces

- A5.1.9. User and perceptual studies

- A5.2. Data visualization

- A5.3.5. Computational photography

- A5.4.4. 3D and spatio-temporal reconstruction

- A5.4.5. Object tracking and motion analysis

- A5.5. Computer graphics

- A5.5.1. Geometrical modeling

- A5.5.2. Rendering

- A5.5.3. Computational photography

- A5.5.4. Animation

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.9.1. Sampling, acquisition

- A5.9.3. Reconstruction, enhancement

- A6.1. Methods in mathematical modeling

- A6.2. Scientific computing, Numerical Analysis & Optimization

- A6.3.5. Uncertainty Quantification

- A8.3. Geometry, Topology

- A9.2. Machine learning

- A9.3. Signal analysis

Other Research Topics and Application Domains

- B3.2. Climate and meteorology

- B3.3.1. Earth and subsoil

- B3.3.2. Water: sea & ocean, lake & river

- B3.3.3. Nearshore

- B3.4.1. Natural risks

- B5. Industry of the future

- B5.2. Design and manufacturing

- B5.5. Materials

- B5.7. 3D printing

- B5.8. Learning and training

- B8. Smart Cities and Territories

- B8.3. Urbanism and urban planning

- B9. Society and Knowledge

- B9.1.2. Serious games

- B9.2. Art

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.5.1. Computer science

- B9.5.2. Mathematics

- B9.5.3. Physics

- B9.5.5. Mechanics

- B9.6. Humanities

- B9.6.6. Archeology, History

- B9.8. Reproducibility

- B9.11.1. Environmental risks

1 Team members, visitors, external collaborators

Research Scientists

- George Drettakis [Team leader, Inria, Senior Researcher, HDR]

- Adrien Bousseau [Inria, Senior Researcher, HDR]

- Guillaume Cordonnier [Inria, Researcher]

- Changjian Li [Inria, Starting Research Position, from Oct 2021]

Post-Doctoral Fellows

- Thomas Leimkühler [Inria, until Aug 2021]

- Julien Philip [Inria, until Jan 2021]

- Gilles Rainer [Inria]

PhD Students

- Stavros Diolatzis [Inria]

- Felix Hahnlein [Inria]

- David Jourdan [Inria]

- Georgios Kopanas [Inria]

- Siddhant Prakash [Inria]

- Nicolas Rosset [Inria, from Oct 2021]

- Emilie Yu [Inria]

Technical Staff

- Mahdi Benadel [Inria, Engineer, until Mar 2021]

Interns and Apprentices

- Ronan Cailleau [Inria, until Jul 2021]

- Stephane Chabrillac [Inria, from Mar 2021 until Aug 2021]

- Leo Heidelberger [Inria, from Apr 2021 until Sep 2021]

- Capucine Nghiem [Inria, from Feb 2021 until Jul 2021]

Administrative Assistant

- Sophie Honnorat [Inria]

External Collaborators

- Thomas Leimkühler [Max-Planck-Institut for Informatics, Researcher]

- Capucine Nghiem [Inria, ExSitu team at Inria Saclay, PhD Student]

2 Overall objectives

2.1 General Presentation

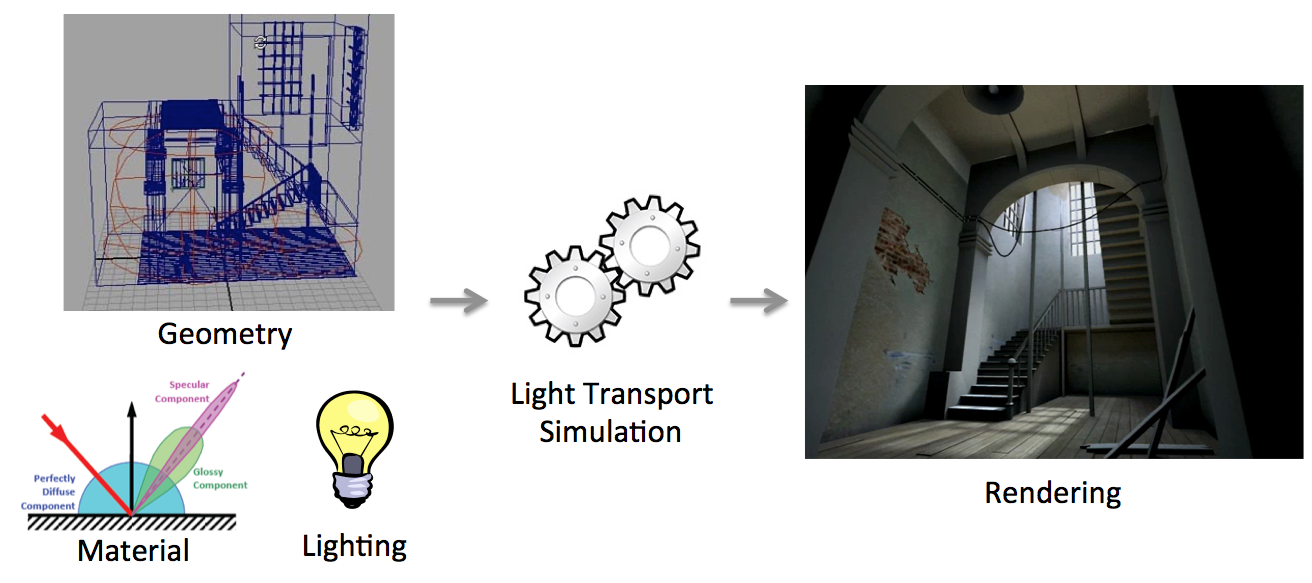

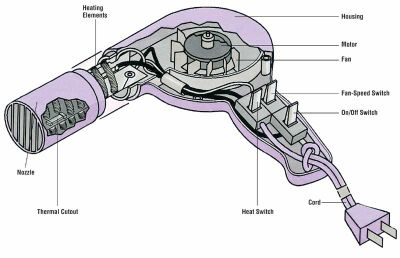

In traditional Computer Graphics (CG), input is accurately modeled by hand by artists. The artists first create the 3D geometry – i.e., the polygons and surfaces used to represent the 3D scene. They then need to assign colors, textures and more generally material properties to each piece of geometry in the scene. Finally, they also define the position, type and intensity of the lights. This modeling process is illustrated schematically in Fig. 1(left). Creating all this 3D content involves a high level of training and skills, and is reserved for a small minority of expert modelers. This tedious process is a significant distraction from creative exploration, during which artists and designers are primarily interested in obtaining compelling imagery and prototypes rather than in accurately specifying all the ingredients listed above. Designers also often want to explore many variations of a concept, which requires them to repeat the above steps multiple times.

Once the 3D elements are in place, a rendering algorithm is employed to generate a shaded, realistic image (Fig. 1(right)). Costly rendering algorithms are then required to simulate light transport (or global illumination) from the light sources to the camera, accounting for the complex interactions between light and materials and the visibility between objects. Such rendering algorithms only provide meaningful results if the input has been accurately modeled and is complete, which is prohibitive as discussed above.

A major recent development is that many alternative sources of 3D content are becoming available. Cheap depth sensors allow anyone to capture real objects but the resulting 3D models are often uncertain, since the reconstruction can be inaccurate and is most often incomplete. There have also been significant advances in casual content creation, e.g., sketch-based modeling tools. The resulting models are often approximate since people rarely draw accurate perspective and proportions. These models also often lack details, which can be seen as a form of uncertainty since a variety of refined models could correspond to the rough one. Finally, in recent years we have witnessed the emergence of new usage of 3D content for rapid prototyping, which aims at accelerating the transition from rough ideas to physical artifacts.

The inability to handle uncertainty in the data is a major shortcoming of CG today, as it prevents the direct use of cheap and casual sources of 3D content for the design and rendering of high-quality images. The abundance and ease of access to inaccurate, incomplete and heterogeneous 3D content imposes the need to rethink the foundations of 3D computer graphics so that uncertainty is treated inherently, from design all the way to rendering and prototyping.

The technological shifts we mention above, together with developments in computer vision, user-friendly sketch-based modeling, online tutorials, but also image, video and 3D model repositories and 3D printing represent a great opportunity for new imaging methods. There are several significant challenges to overcome before such visual content can become widely accessible.

In GraphDeco, we have identified two major scientific challenges of our field which we will address:

- First, the design pipeline needs to be revisited to explicitly account for the variability and uncertainty of a concept and its representations, from early sketches to 3D models and prototypes. Professional practice also needs to be adapted and facilitated to be accessible to all.

- Second, a new approach is required to develop computer graphics models and algorithms capable of handling uncertain and heterogeneous data as well as traditional synthetic content.

We next describe the context of our proposed research for these two challenges. Both directions address heterogeneous and uncertain input and (in some cases) output, and build on a set of common methodological tools.

3 Research program

3.1 Introduction

Our research program is oriented around two main axes: 1) Computer-Assisted Design with Heterogeneous Representations and 2) Graphics with Uncertainty and Heterogeneous Content. These two axes are governed by a set of common fundamental goals, share many common methodological tools and are deeply intertwined in the development of applications.

3.2 Computer-Assisted Design with Heterogeneous Representations

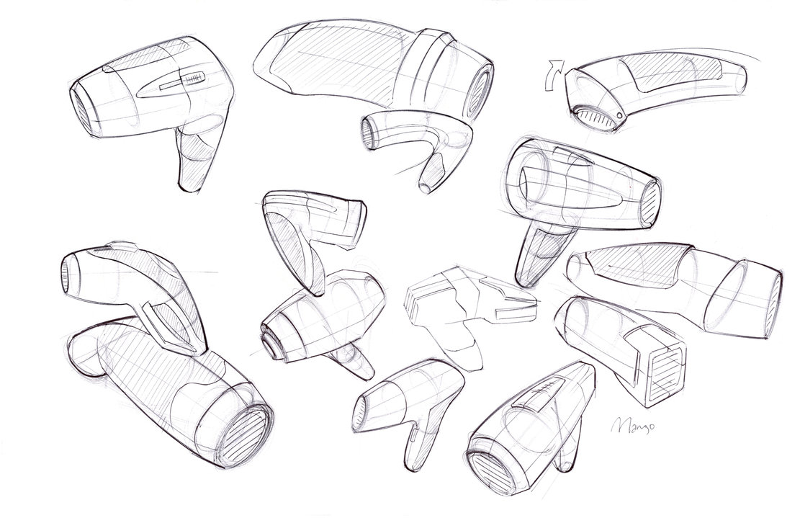

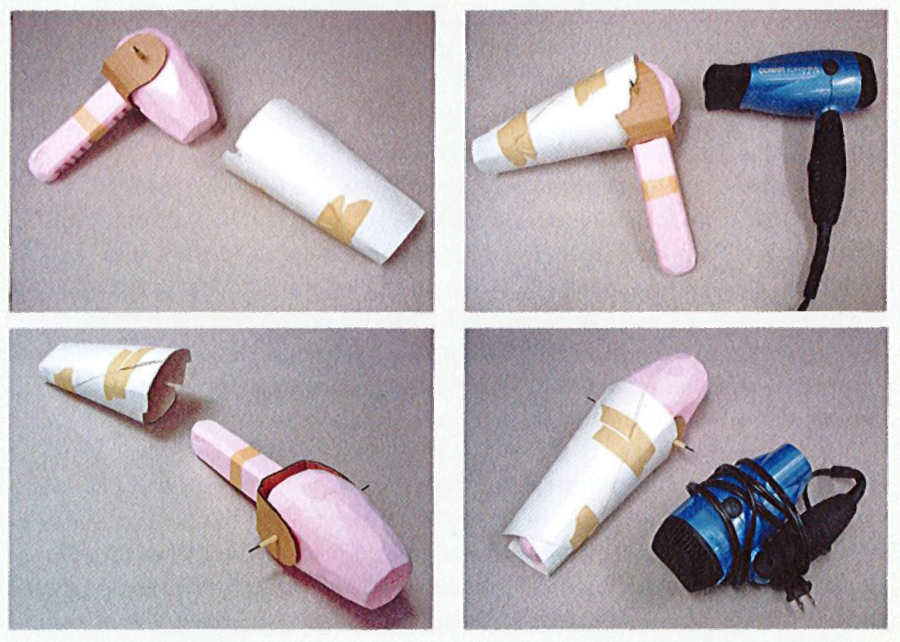

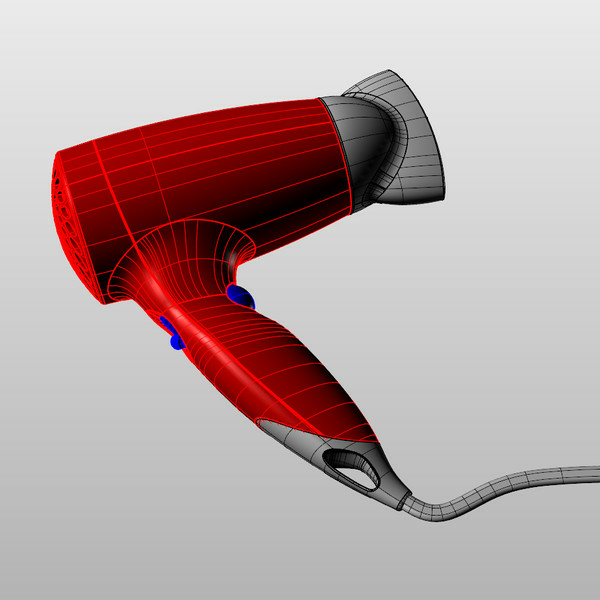

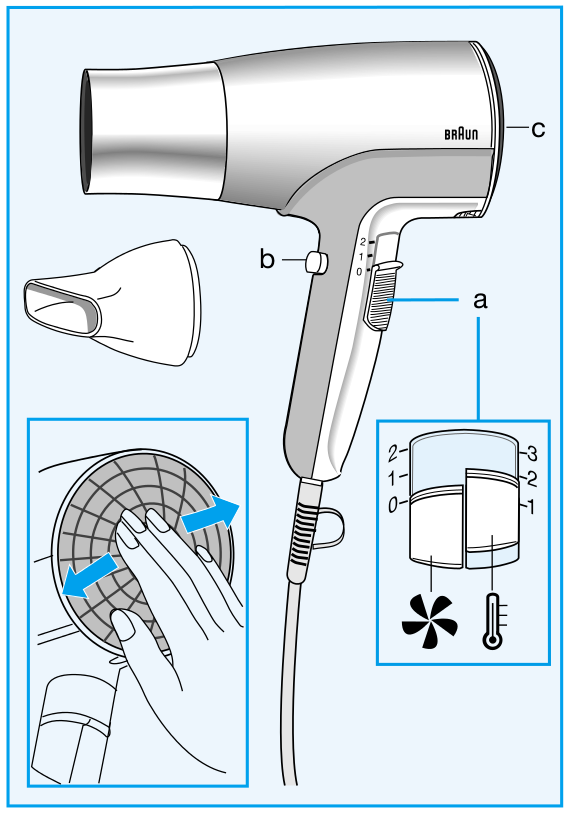

Designers use a variety of visual representations to explore and communicate about a concept. Fig. 2 illustrates some typical representations, including sketches, hand-made prototypes, 3D models, 3D printed prototypes or instructions.

Fig. 2 panels, top row: (a) Ideation sketch, (b) Presentation sketch, (c) Coarse prototype, (d) 3D model; bottom row: (f) Simulation, (e) 3D Printing, (g) Technical diagram, (h) Instructions.

The early representations of a concept, such as rough sketches and hand-made prototypes, help designers formulate their ideas and test the form and function of multiple design alternatives. These low-fidelity representations are meant to be cheap and fast to produce, to allow quick exploration of the design space of the concept. These representations are also often approximate to leave room for subjective interpretation and to stimulate imagination; in this sense, these representations can be considered uncertain. As the concept gets more finalized, time and effort are invested in the production of more detailed and accurate representations, such as high-fidelity 3D models suitable for simulation and fabrication. These detailed models can also be used to create didactic instructions for assembly and usage.

Producing these different representations of a concept requires specific skills in sketching, modeling, manufacturing and visual communication. For these reasons, professional studios often employ different experts to produce the different representations of the same concept, at the cost of extensive discussions and numerous iterations between the actors of this process. The complexity of the multi-disciplinary skills involved in the design process also hinders their adoption by laymen.

Existing solutions to facilitate design have focused on a subset of the representations used by designers. However, no solution considers all representations at once, for instance to directly convert a series of sketches into a set of physical prototypes. In addition, all existing methods assume that the concept is unique rather than ambiguous. As a result, rich information about the variability of the concept is lost during each conversion step.

We plan to facilitate design for professionals and laymen by addressing the following objectives:

- We want to assist designers in the exploration of the design space that captures the possible variations of a concept. By considering a concept as a distribution of shapes and functionalities rather than a single object, our goal is to help designers consider multiple design alternatives more quickly and effectively. Such a representation should also allow designers to preserve multiple alternatives along all steps of the design process rather than committing to a single solution early on and paying the price of this decision for all subsequent steps. We expect that preserving alternatives will facilitate communication with engineers, managers and clients, accelerate design iterations and even allow mass personalization by the end consumers.

- We want to support the various representations used by designers during concept development. While drawings and 3D models have received significant attention in past Computer Graphics research, we will also account for the various forms of rough physical prototypes made to evaluate the shape and functionality of a concept. Depending on the task at hand, our algorithms will either analyse these prototypes to generate a virtual concept, or assist the creation of these prototypes from a virtual model. We also want to develop methods capable of adapting to the different drawing and manufacturing techniques used to create sketches and prototypes. We envision design tools that conform to the habits of users rather than impose specific techniques on them.

- We want to make professional design techniques available to novices. Affordable software, hardware and online instructions are democratizing technology and design, allowing small businesses and individuals to compete with large companies. New manufacturing processes and online interfaces also allow customers to participate in the design of an object via mass personalization. However, similarly to what happened for desktop publishing thirty years ago, desktop manufacturing tools need to be simplified to account for the needs and skills of novice designers. We hope to support this trend by adapting the techniques of professionals and by automating the tasks that require significant expertise.

3.3 Graphics with Uncertainty and Heterogeneous Content

Our research is motivated by the observation that traditional CG algorithms have not been designed to account for uncertain data. For example, global illumination rendering assumes accurate virtual models of geometry, light and materials to simulate light transport. While these algorithms produce images of high realism, capturing effects such as shadows, reflections and interreflections, they are not applicable to the growing mass of uncertain data available nowadays.

The need to handle uncertainty in CG is timely and pressing, given the large number of heterogeneous sources of 3D content that have become available in recent years. These include data from cheap depth+image sensors (e.g., Kinect or the Tango), 3D reconstructions from image/video data, but also data from large 3D geometry databases, or casual 3D models created using simplified sketch-based modeling tools. Such alternate content has varying levels of uncertainty about the scene or objects being modelled. This includes uncertainty in geometry, but also in materials and/or lights – which are often not even available with such content. Since CG algorithms cannot be applied directly, visual effects artists spend hundreds of hours correcting inaccuracies and completing the captured data to make them useable in film and advertising.

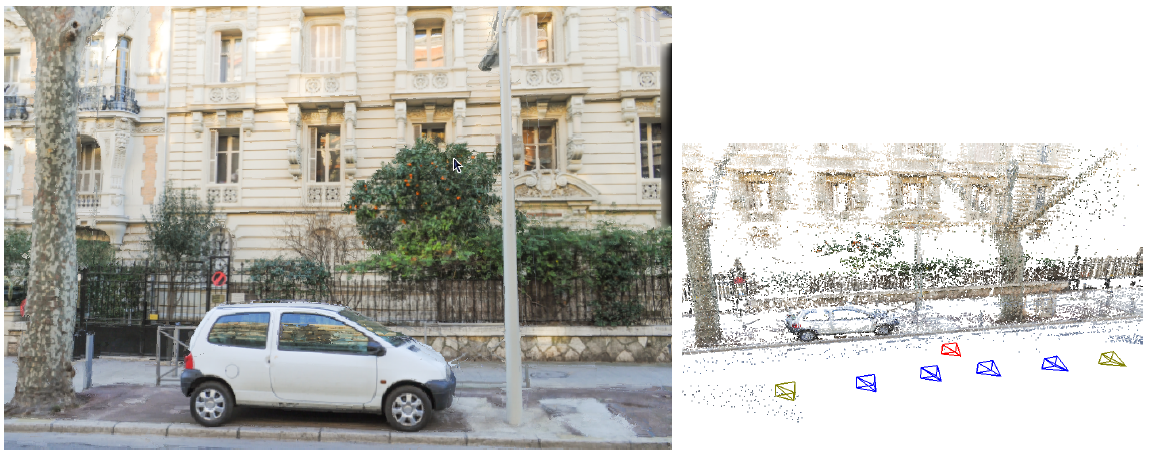


Image-Based Rendering (IBR) techniques use input photographs and approximate 3D to produce new synthetic views.

We identify a major scientific bottleneck which is the need to treat heterogeneous content, i.e., containing both (mostly captured) uncertain and perfect, traditional content. Our goal is to provide solutions to this bottleneck, by explicitly and formally modeling uncertainty in CG, and to develop new algorithms that are capable of mixed rendering for this content.

We strive to develop methods in which heterogeneous – and often uncertain – data can be handled automatically in CG with a principled methodology. Our main focus is on rendering in CG, including dynamic scenes (video/animations).

Given the above, we need to address the following challenges:

- Develop a theoretical model to handle uncertainty in computer graphics. We must define a new formalism that inherently incorporates uncertainty, and must be able to express traditional CG rendering, both physically accurate and approximate approaches. Most importantly, the new formulation must elegantly handle mixed rendering of perfect synthetic data and captured uncertain content. An important element of this goal is to incorporate cost in the choice of algorithm and the optimizations used to obtain results, e.g., preferring solutions which may be slightly less accurate, but cheaper in computation or memory.

- The development of rendering algorithms for heterogeneous content often requires preprocessing of image and video data, which sometimes also includes depth information. An example is the decomposition of images into intrinsic layers of reflectance and lighting, which is required to perform relighting. Such solutions are also useful as image-manipulation or computational photography techniques. The challenge will be to develop such “intermediate” algorithms for the uncertain and heterogeneous data we target.

- Develop efficient rendering algorithms for uncertain and heterogeneous content, reformulating rendering in a probabilistic setting where appropriate. Such methods should allow us to develop approximate rendering algorithms using our formulation in a well-grounded manner. The formalism should include probabilistic models of how the scene, the image and the data interact. These models should be data-driven, e.g., building on the abundance of online geometry and image databases, domain-driven, e.g., based on requirements of the rendering algorithms or perceptually guided, leading to plausible solutions based on limitations of perception.
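As an illustration of the intrinsic decomposition mentioned in the second challenge above, the following minimal sketch assumes the common multiplicative model in which each pixel is the product of a reflectance layer and a shading (lighting) layer. The function name, the `eps` guard and the assumption that a shading estimate is already available are illustrative choices only; this is not one of the team's algorithms.

```python
import numpy as np

def relight_with_intrinsics(image, shading, new_shading, eps=1e-6):
    """Relight an image under the assumed model image = reflectance * shading.

    `shading` is an estimate of the lighting layer of `image` (hypothetical
    input, e.g. produced by some intrinsic decomposition method), and
    `new_shading` is the lighting layer to apply instead.
    """
    image = np.asarray(image, dtype=float)
    shading = np.asarray(shading, dtype=float)
    new_shading = np.asarray(new_shading, dtype=float)
    # Recover the reflectance layer; eps guards against division by zero
    # in completely dark regions.
    reflectance = image / np.maximum(shading, eps)
    # Recombine the lighting-independent reflectance with the new lighting.
    return reflectance * new_shading
```

With uncertain captured data the shading estimate itself is unreliable, which is precisely why such intermediate steps are listed as a challenge.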

4 Application domains

Our research on design and computer graphics with heterogeneous data has the potential to change many different application domains. Such applications include:

Product design will be significantly accelerated and facilitated. Current industrial workflows separate 2D illustrators, 3D modelers and engineers who create physical prototypes, which results in a slow and complex process with frequent misunderstandings and corrective iterations between different people and different media. Our unified approach based on design principles could allow all processes to be done within a single framework, avoiding unnecessary iterations. This could significantly accelerate the design process (from months to weeks), result in much better communication between the different experts, or even create new types of experts who cross boundaries of disciplines today.

Mass customization will allow end customers to participate in the design of a product before buying it. In this context of “cloud-based design”, users of an e-commerce website will be provided with controls on the main variations of a product created by a professional designer. Intuitive modeling tools will also allow users to personalize the shape and appearance of the object while remaining within the bounds of the pre-defined design space.

Digital instructions for creating and repairing objects, in collaboration with other groups working in 3D fabrication, could have a significant impact on sustainable development and allow anyone to be a creator of things, not just a consumer, which is the motto of the maker movement.

Gaming experience individualization is an important emerging trend; using our results, players will also be able to integrate personal objects or environments (e.g., their homes, neighborhoods) into any realistic 3D game. The success of creative games where the player constructs their world illustrates the potential of such solutions. This approach also applies to serious gaming, with applications in medicine, education/learning, training etc. Such interactive experiences with high-quality images of heterogeneous 3D content will also be applicable to archeology (e.g., realistic presentation of different reconstruction hypotheses), urban planning and renovation where new elements can be realistically used with captured imagery.

Virtual training is today restricted to pre-defined virtual environments that are expensive and hard to create; with our solutions, on-site data can be seamlessly and realistically used together with the actual virtual training environment. With our results, any real site can be captured, and the synthetic elements for the interventions rendered with high levels of realism, thus greatly enhancing the quality of the training experience.

Another interesting novel use of heterogeneous graphics could be for news reports. Using our interactive tool, a news reporter can take on-site footage, and combine it with 3D mapping data. The reporter can design the 3D presentation allowing the reader to zoom from a map or satellite imagery and better situate the geographic location of a news event. Subsequently, the reader will be able to zoom into a pre-existing street-level 3D online map to see the newly added footage presented in a highly realistic manner. A key aspect of these presentations is the ability of the reader to interact with the scene and the data, while maintaining a fully realistic and immersive experience. The realism of the presentation and the interactivity will greatly enhance the reader's experience and improve comprehension of the news. The same advantages apply to enhanced personal photography/videography, resulting in much more engaging and lively memories.

Other applications may include scientific domains which use photogrammetric data (captured with various 3D scanners), such as geophysics and seismology. Note however that our goal is not to produce 3D data suitable for numerical simulations; our approaches can help in combining captured data with presentations and visualization of scientific information.

5 Highlights of the year

Adrien Bousseau, George Drettakis, and Guillaume Cordonnier, jointly with the TITANE team and I3S lab, organized JFIG 2021 at Inria Sophia Antipolis. This three-day conference is the annual meeting of the French association of computer graphics. It was one of the rare events that managed to be held in person this year.

5.1 Awards

- Emilie Yu received the Best Paper Honorable Mention at the ACM Conference on Human Factors in Computing Systems (CHI) for her paper on curve and surface sketching in immersive environments [20].

- Julien Philip received a Young Researcher Award during the event “Victoire de la recherche de la ville de Nice.”

6 New software and platforms

Let us describe new/updated software.

6.1 New software

6.1.1 SynDraw

- Keywords: Non-photorealistic rendering, Vector-based drawing, Geometry Processing

- Functional Description: The SynDraw library extracts occluding contours and sharp features over a 3D shape, computes all their intersections using a binary space partitioning algorithm, and finally performs a raycast to determine the visibility of each sub-contour. The resulting lines can then be exported as an SVG file for subsequent processing, for instance to stylize the drawing with different brush strokes. The library can also export various attributes for each line, such as its visibility and type. Finally, the library embeds tools allowing one to add noise into an SVG drawing, in order to generate multiple images from a single sketch. SynDraw is based on the geometry processing library libIGL.

- Release Contributions: This first version extracts occluding contours, boundaries, creases, ridges, valleys, suggestive contours and demarcating curves. Visibility is computed with a view graph structure. Lines can be aggregated and/or filtered. Labels and outputs include: line type, visibility, depth and aligned normal map.

- Authors: Adrien Bousseau, Bastien Wailly, Adele Saint-Denis

- Contact: Bastien Wailly
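To make the first step of the SynDraw description concrete, here is a minimal NumPy sketch of occluding contour extraction on a triangle mesh. It is not SynDraw's code (the library is built on libIGL): it assumes an orthographic view, counter-clockwise face winding and a closed manifold mesh, and the function name and arguments are hypothetical. An edge is reported when it is shared by one front-facing and one back-facing triangle; intersections and ray-cast visibility are not covered.

```python
import numpy as np

def occluding_contour_edges(vertices, faces, view_dir):
    """Return sorted (i, j) vertex-index pairs of mesh edges shared by
    a front-facing and a back-facing triangle.

    view_dir is the direction from the camera into the scene
    (orthographic projection is assumed).
    """
    v = np.asarray(vertices, dtype=float)
    f = np.asarray(faces, dtype=int)
    d = np.asarray(view_dir, dtype=float)
    # Unnormalised face normals; their sign is all we need.
    normals = np.cross(v[f[:, 1]] - v[f[:, 0]], v[f[:, 2]] - v[f[:, 0]])
    # A face looks at the camera when its normal opposes the view direction.
    front = normals @ d < 0.0
    # Map every undirected edge to the faces that contain it.
    edge_faces = {}
    for fi, (a, b, c) in enumerate(f):
        for i, j in ((a, b), (b, c), (c, a)):
            key = (int(min(i, j)), int(max(i, j)))
            edge_faces.setdefault(key, []).append(fi)
    # Keep edges where facing changes between the two adjacent faces.
    contour = [e for e, fs in edge_faces.items()
               if len(fs) == 2 and front[fs[0]] != front[fs[1]]]
    return sorted(contour)

# Usage: a tetrahedron seen from the (1, 1, 1) side.
tet_vertices = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
tet_faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
tet_contour = occluding_contour_edges(tet_vertices, tet_faces, (-1, -1, -1))
```

In this example only the slanted face is front-facing, so its three edges form the contour.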

6.1.2 DeepSketch

- Keywords: 3D modeling, Sketching, Deep learning

- Functional Description: DeepSketch is a sketch-based modeling system that runs in a web browser. It relies on deep learning to recognize geometric shapes in line drawings. The system follows a client/server architecture, based on the Node.js and WebGL technologies. The application's main targets are iPads or Android tablets equipped with a digital pen, but it can also be used on desktop computers.

- Release Contributions: This first version is built around a client/server Node.js application whose job is to transmit a drawing from the client's interface to the server where the deep networks are deployed, then transmit the results back to the client where the final shape is created and rendered in a WebGL 3D scene thanks to the THREE.js JavaScript framework. Moreover, the client is able to perform various camera transformations before drawing an object (change position, rotate in place, scale in place) by interacting with the touch screen. The user also has the ability to draw the shape's shadow to disambiguate depth/height. The deep networks are created, trained and deployed with the Caffe framework.

- Authors: Adrien Bousseau, Bastien Wailly

- Contact: Adrien Bousseau

6.1.3 sibr-core

- Name: System for Image-Based Rendering

- Keyword: Graphics

- Scientific Description: Core functionality to support Image-Based Rendering research. The core provides basic support for camera calibration, multi-view stereo meshes and basic image-based rendering functionality. Separate dependent repositories interface with the core for each research project. This library is an evolution of the previous SIBR software, but is now much more modular.

  sibr-core has been released as open source software, together with the code for several of our research papers and for papers from other authors, for comparison and benchmarking purposes.

  The corresponding gitlab is: https://gitlab.inria.fr/sibr/sibr_core

  The full documentation is at: https://sibr.gitlabpages.inria.fr

- Functional Description: sibr-core is a framework containing libraries and tools used internally for research projects based on Image-Based Rendering. It includes both preprocessing tools (computing data used for rendering) and rendering utilities and serves as the basis for many research projects in the group.

- Authors: Sebastien Bonopera, Jérôme Esnault, Siddhant Prakash, Simon Rodriguez, Théo Thonat, Gaurav Chaurasia, Julien Philip, George Drettakis, Mahdi Benadel

- Contact: George Drettakis

6.1.4 SGTDGP

- Name: Synthetic Ground Truth Data Generation Platform

- Keyword: Graphics

- Functional Description: The goal of this platform is to render large numbers of realistic synthetic images for use as ground truth to compare and validate image-based rendering algorithms and also to train deep neural networks developed in our team.

  This pipeline consists of three major elements:

  - Scene exporter

  - Assisted point of view generation

  - Distributed rendering on INRIA's high performance computing cluster

  The scene exporter is able to export scenes created in the widely-used commercial modeler 3DSMAX to the Mitsuba open source renderer format. It handles the conversion of complex materials and shade trees from 3DSMAX including materials made for VRay. The overall quality of the images produced from exported scenes has been improved thanks to a more accurate material conversion. The initial version of the exporter was extended and improved to provide better stability and to avoid any manual intervention.

  From each scene we can generate a large number of images by placing multiple cameras. Most of the time these points of view have to be placed coherently, a task that can be long and tedious. In the context of image-based rendering, cameras have to be placed in a row with a specific spacing. To simplify this process we have developed a set of tools to assist the placement of hundreds of cameras along a path.

  The rendering is performed with the open source renderer Mitsuba. The rendering pipeline is optimised to render a large number of points of view for a single scene. We use a path tracing algorithm to simulate the light interaction in the scene and produce high dynamic range images. It produces realistic images but it is computationally demanding. To speed up the process we set up an architecture that takes advantage of the INRIA cluster to distribute the rendering on hundreds of CPU cores.

  The scene data (geometry, textures, materials) and the cameras are automatically transferred to remote workers and HDR images are returned to the user.

  We have already used this pipeline to export tens of scenes and to generate several thousands of images, which have been used for machine learning and for ground-truth image production.

  We have recently integrated the platform with the sibr-core software library, allowing us to read Mitsuba scenes. We have written a tool to allow camera placement to be used for rendering and for reconstruction of synthetic scenes, including alignment of the exact and reconstructed version of the scenes. These dual-representation scenes can be used for learning and as ground truth. We can also perform various operations on the ground truth data within sibr-core, e.g., compute shadow maps of both exact and reconstructed representations.

- Authors: Laurent Boiron, Sébastien Morgenthaler, Georgios Kopanas, Julien Philip, George Drettakis

- Contact: George Drettakis
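The assisted placement of cameras "in a row with a specific spacing" described above can be pictured as resampling a path at uniform arc-length intervals. The sketch below is only an illustration under that assumption: the function name, the polyline input and the choice of orienting each camera along the path tangent are hypothetical and do not describe the platform's actual tools.

```python
import numpy as np

def place_cameras_along_path(path_points, spacing):
    """Place cameras every `spacing` units of arc length along a polyline.

    Returns (positions, forward), two arrays of shape (n, 3). Segments of
    zero length are assumed not to occur in `path_points`.
    """
    p = np.asarray(path_points, dtype=float)
    seg = np.diff(p, axis=0)                     # segment vectors
    seg_len = np.linalg.norm(seg, axis=1)        # segment lengths
    # Arc length accumulated at each path vertex.
    cum = np.concatenate(([0.0], np.cumsum(seg_len)))
    # Stations from the start of the path up to (and including) its end.
    s = np.arange(0.0, cum[-1] + 1e-9, spacing)
    # Index of the segment that contains each station.
    idx = np.clip(np.searchsorted(cum, s, side="right") - 1, 0, len(seg) - 1)
    # Linear interpolation inside the segment.
    t = (s - cum[idx]) / seg_len[idx]
    positions = p[idx] + t[:, None] * seg[idx]
    # Each camera looks along the local direction of travel.
    forward = seg[idx] / seg_len[idx][:, None]
    return positions, forward

# Usage: an L-shaped path of total length 7, one camera per unit length.
cam_pos, cam_fwd = place_cameras_along_path(
    [(0, 0, 0), (4, 0, 0), (4, 3, 0)], spacing=1.0)
```

The resulting positions and directions could then be written into whatever camera description the renderer expects; that export step is left out here.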

6.1.5 DeepRelighting

- Name: Deep Geometry-Aware Multi-View Relighting

- Keyword: Graphics

- Scientific Description: Implementation of the paper: Multi-view Relighting using a Geometry-Aware Network (https://hal.inria.fr/hal-02125095), based on the sibr-core library.

- Functional Description: Implementation of the paper: Multi-view Relighting using a Geometry-Aware Network (https://hal.inria.fr/hal-02125095), based on the sibr-core library.

- Publication:

- Contact: George Drettakis

- Participants: Julien Philip, George Drettakis

6.1.6 SingleDeepMat

- Name: Single-image deep material acquisition

- Keywords: Materials, 3D, Realistic rendering, Deep learning

- Scientific Description: Cook-Torrance SVBRDF parameter acquisition from a single image using deep learning

- Functional Description: Allows material acquisition from a single picture, to then be rendered in a virtual environment. Implementation of the paper https://hal.inria.fr/hal-01793826/

- Release Contributions: Based on the Pix2Pix implementation by AffineLayer (GitHub)

- URL:

- Publication:

- Contact: Adrien Bousseau

- Participants: Valentin Deschaintre, Miika Aittala, Frédo Durand, George Drettakis, Adrien Bousseau

- Partner: CSAIL, MIT

6.1.7 MultiDeepMat

- Name: Multi-image deep material acquisition

- Keywords: 3D, Materials, Deep learning

- Scientific Description: Allows material acquisition from multiple pictures, to then be rendered in a virtual environment. Implementation of the paper https://hal.inria.fr/hal-02164993

- Functional Description: Allows material acquisition from multiple pictures, to then be rendered in a virtual environment. Implementation of the paper https://hal.inria.fr/hal-02164993

- Release Contributions: Code fully rewritten since the SingleDeepMat project, but some functions are imported from it.

- URL:

- Publication:

- Contact: Adrien Bousseau

- Participants: Valentin Deschaintre, Miika Aittala, Frédo Durand, George Drettakis, Adrien Bousseau

6.1.8 GuidedDeepMat

- Name: Guided deep material acquisition

- Keywords: Materials, 3D, Deep learning

- Scientific Description: Deep large scale HD material acquisition guided by an example small scale SVBRDF

- Functional Description: Deep large scale HD material acquisition guided by an example small scale SVBRDF

- Release Contributions: Code based on the MultiDeepMat project code.

- Contact: Adrien Bousseau

- Participants: Valentin Deschaintre, George Drettakis, Adrien Bousseau

6.1.9 SemanticReflections

- Name: Image-Based Rendering of Cars with Reflections

- Keywords: Graphics, Realistic rendering, Multi-View reconstruction

- Scientific Description: Implementation of the paper: Image-Based Rendering of Cars using Semantic Labels and Approximate Reflection Flow (https://hal.inria.fr/hal-02533190, http://www-sop.inria.fr/reves/Basilic/2020/RPHD20/, https://gitlab.inria.fr/sibr/projects/semantic-reflections/semantic_reflections), based on the sibr-core library.

- Functional Description: Implementation of the paper: Image-Based Rendering of Cars using Semantic Labels and Approximate Reflection Flow (https://hal.inria.fr/hal-02533190, http://www-sop.inria.fr/reves/Basilic/2020/RPHD20/, https://gitlab.inria.fr/sibr/projects/semantic-reflections/semantic_reflections), based on the sibr-core library.

- Publication:

- Contact: George Drettakis

- Participants: Simon Rodriguez, George Drettakis, Siddhant Prakash, Lars Peter Hedman

6.1.10 GlossyProbes

-

Name:

Glossy Probe Reprojection for GI

-

Keywords:

Graphics, Realistic rendering, Real-time rendering

-

Scientific Description:

Implementation of the paper: Glossy Probe Reprojection for Interactive Global Illumination (https://hal.inria.fr/hal-02930925, http://www-sop.inria.fr/reves/Basilic/2020/RLPWSD20/, https://gitlab.inria.fr/sibr/projects/glossy-probes/synthetic_ibr), based on the sibr-core library.

-

Functional Description:

Implementation of the paper: Glossy Probe Reprojection for Interactive Global Illumination (https://hal.inria.fr/hal-02930925, http://www-sop.inria.fr/reves/Basilic/2020/RLPWSD20/, https://gitlab.inria.fr/sibr/projects/glossy-probes/synthetic_ibr), based on the sibr-core library.

-

Contact:

George Drettakis

-

Participants:

Simon Rodriguez, George Drettakis, Thomas Leimkühler, Siddhant Prakash

6.1.11 SpixelWarpandSelection

-

Name:

Superpixel warp and depth synthesis and Selective Rendering

-

Keywords:

3D, Graphics, Multi-View reconstruction

-

Scientific Description:

Implementation of the following two papers, as part of the sibr library:

- Depth Synthesis and Local Warps for Plausible Image-based Navigation, http://www-sop.inria.fr/reves/Basilic/2013/CDSD13/ (previous BIL entry: https://bil.inria.fr/fr/software/view/1802/tab)
- A Bayesian Approach for Selective Image-Based Rendering using Superpixels, http://www-sop.inria.fr/reves/Basilic/2015/ODD15/

Gitlab: https://gitlab.inria.fr/sibr/projects/spixelwarp

-

Functional Description:

Implementation of the following two papers, as part of the sibr library:

- Depth Synthesis and Local Warps for Plausible Image-based Navigation, http://www-sop.inria.fr/reves/Basilic/2013/CDSD13/ (previous BIL entry: https://bil.inria.fr/fr/software/view/1802/tab)
- A Bayesian Approach for Selective Image-Based Rendering using Superpixels, http://www-sop.inria.fr/reves/Basilic/2015/ODD15/

-

Release Contributions:

Integration into sibr framework and updated documentation

-

Contact:

George Drettakis

-

Participants:

George Drettakis, Gaurav Chaurasia, Olga Sorkine-Hornung, Sylvain François Duchene, Rodrigo Ortiz Cayon, Abdelaziz Djelouah, Siddhant Prakash

6.1.12 DeepBlending

-

Name:

Deep Blending for Image-Based Rendering

-

Keywords:

3D, Graphics

-

Scientific Description:

Implementations of two papers:

- Scalable Inside-Out Image-Based Rendering, http://www-sop.inria.fr/reves/Basilic/2016/HRDB16/ (with MVS input)
- Deep Blending for Free-Viewpoint Image-Based Rendering, http://www-sop.inria.fr/reves/Basilic/2018/HPPFDB18/

-

Functional Description:

Implementations of two papers:

- Scalable Inside-Out Image-Based Rendering, http://www-sop.inria.fr/reves/Basilic/2016/HRDB16/ (with MVS input)
- Deep Blending for Free-Viewpoint Image-Based Rendering, http://www-sop.inria.fr/reves/Basilic/2018/HPPFDB18/

Code: https://gitlab.inria.fr/sibr/projects/inside_out_deep_blending

-

Contact:

George Drettakis

-

Participants:

George Drettakis, Julien Philip, Lars Peter Hedman, Siddhant Prakash, Gabriel Brostow, Tobias Ritschel

6.1.13 IndoorRelighting

-

Name:

Free-viewpoint indoor neural relighting from multi-view stereo

-

Keywords:

3D rendering, Lighting simulation

-

Scientific Description:

Implementation of the paper Free-viewpoint indoor neural relighting from multi-view stereo (currently under review), as a module for sibr.

-

Functional Description:

Implementation of the paper Free-viewpoint indoor neural relighting from multi-view stereo (currently under review), as a module for sibr.

-

Contact:

George Drettakis

-

Participants:

George Drettakis, Julien Philip, Sébastien Morgenthaler, Michaël Gharbi

-

Partner:

Adobe

6.1.14 HybridIBR

-

Name:

Hybrid Image-Based Rendering

-

Keyword:

3D

-

Scientific Description:

Implementation of the paper "Hybrid Image-based Rendering for Free-view Synthesis", (https://hal.inria.fr/hal-03212598) based on the sibr-core library. Code available: https://gitlab.inria.fr/sibr/projects/hybrid_ibr

-

Functional Description:

Implementation of the paper "Hybrid Image-based Rendering for Free-view Synthesis", (https://hal.inria.fr/hal-03212598) based on the sibr-core library. Code available: https://gitlab.inria.fr/sibr/projects/hybrid_ibr

-

Contact:

George Drettakis

-

Participants:

Siddhant Prakash, Thomas Leimkühler, Simon Rodriguez, George Drettakis

6.1.15 FreeStyleGAN

-

Keywords:

Graphics, Generative Models

-

Functional Description:

This codebase contains all applications and tools to replicate and experiment with the ideas brought forward in the publication "FreeStyleGAN: Free-view Editable Portrait Rendering with the Camera Manifold", Leimkühler & Drettakis, ACM Transactions on Graphics (SIGGRAPH Asia) 2021.

-

Authors:

Thomas Leimkühler, George Drettakis

-

Contact:

George Drettakis

6.1.16 PBNR

-

Name:

Point-Based Neural Rendering

-

Keyword:

3D

-

Scientific Description:

Implementation of the method Point-Based Neural Rendering (https://repo-sam.inria.fr/fungraph/differentiable-multi-view/). This code includes the training module for a given scene provided as a multi-view set of calibrated images and a multi-view stereo mesh, as well as an interactive viewer built as part of SIBR.

-

Functional Description:

Implementation of the method Point-Based Neural Rendering (https://repo-sam.inria.fr/fungraph/differentiable-multi-view/)

-

Contact:

George Drettakis

6.1.17 CASSIE

-

Name:

CASSIE: Curve and Surface Sketching in Immersive Environments

-

Keywords:

Virtual reality, 3D modeling

-

Scientific Description:

This project is composed of:

- a VR sketching interface in Unity
- an optimisation method to enforce intersection and beautification constraints on an input 3D stroke
- a method to construct and maintain a curve network data structure while the sketch is created
- a method to locally look for intended surface patches in the curve network

-

Functional Description:

This system is a 3D conceptual modeling user interface in VR that leverages freehand mid-air sketching, and a novel 3D optimization framework to create connected curve network armatures, predictively surfaced using patches. Implementation of the paper https://hal.inria.fr/hal-03149000

-

Authors:

Emilie Yu, Rahul Arora, Tibor Stanko, Adrien Bousseau, Karan Singh, J. Andreas Bærentzen

-

Contact:

Emilie Yu

6.1.18 fabsim

-

Keywords:

3D, Graphics, Simulation

-

Scientific Description:

Implemented models include:

- Discrete Elastic Rods (both for individual rods and rod networks)
- Discrete Shells
- Saint-Venant Kirchhoff, neo-Hookean and incompressible neo-Hookean membrane energies
- Mass-spring system

-

Functional Description:

Static simulation of slender structures (rods, shells), implementing known models from computer graphics.

-

Contact:

David Jourdan

-

Participants:

Mélina Skouras, Etienne Vouga

-

Partners:

Etienne Vouga, Mélina Skouras
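As a rough illustration of what a static solve looks like for the simplest model listed for fabsim (the mass-spring system), the sketch below relaxes a tiny 2D spring chain to equilibrium by gradient descent on its elastic energy. It does not use fabsim's API: the function names (`spring_energy`, `spring_gradient`, `relax`), the unit stiffness, the step size and the iteration count are all assumptions made for this example.

```python
import math

def spring_energy(pos, springs, k=1.0):
    """Elastic energy: sum of 0.5 * k * (length - rest)^2 over all springs."""
    energy = 0.0
    for i, j, rest in springs:
        length = math.hypot(pos[i][0] - pos[j][0], pos[i][1] - pos[j][1])
        energy += 0.5 * k * (length - rest) ** 2
    return energy

def spring_gradient(pos, springs, k=1.0):
    """Gradient of the elastic energy with respect to every node position."""
    grad = [[0.0, 0.0] for _ in pos]
    for i, j, rest in springs:
        d = (pos[i][0] - pos[j][0], pos[i][1] - pos[j][1])
        length = math.hypot(*d)
        if length == 0.0:
            continue  # direction undefined for a collapsed spring
        coeff = k * (length - rest) / length
        for c in range(2):
            grad[i][c] += coeff * d[c]
            grad[j][c] -= coeff * d[c]
    return grad

def relax(pos, springs, fixed, step=0.1, iters=2000):
    """Fixed-step gradient descent; nodes listed in `fixed` stay pinned."""
    pos = [list(p) for p in pos]
    for _ in range(iters):
        grad = spring_gradient(pos, springs)
        for v in range(len(pos)):
            if v in fixed:
                continue
            for c in range(2):
                pos[v][c] -= step * grad[v][c]
    return pos

# Two springs of rest length 1 stretched between pins 3 units apart;
# the free middle node starts off-centre and settles at the midpoint.
start = [(0.0, 0.0), (1.2, 0.5), (3.0, 0.0)]
springs = [(0, 1, 1.0), (1, 2, 1.0)]
rest_pose = relax(start, springs, fixed={0, 2})
```

Production simulators typically replace fixed-step descent with Newton-type solvers and a line search; the fixed step is kept here only for brevity.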

7 New results

7.1 Computer-Assisted Design with Heterogeneous Representations

7.1.1 Fashion Transfer: Dressing 3D Characters from Stylized Fashion Sketches

Participants: Adrien Bousseau.

Fashion design often starts with hand-drawn, expressive sketches that communicate the essence of a garment over idealized human bodies. We propose an approach to automatically dress virtual characters from such input, previously complemented with user annotations. In contrast to prior work requiring users to draw garments with accurate proportions over each virtual character to be dressed, our method follows a style transfer strategy: the information extracted from a single, annotated fashion sketch can be used to inform the synthesis of one or more new garments with a similar style, yet different proportions. In particular, we define the style of a loose garment from its silhouette and folds, which we extract from the drawing. Key to our method is our strategy to extract both shape and repetitive patterns of folds from the 2D input. As our results show, each input sketch can be used to dress a variety of characters of different morphologies, from virtual humans to cartoon-style characters (Fig. 4).

This work is a collaboration with Amélie Fondevilla from Université de Toulouse, Stefanie Hahmann from Université Grenoble Alpes, and Damien Rohmer and Marie-Paule Cani from Ecole Polytechnique. It was published in Computer Graphics Forum [12].

7.1.2 CASSIE: Curve and Surface Sketching in Immersive Environments

Participants: Emilie Yu, Tibor Stanko, Adrien Bousseau.

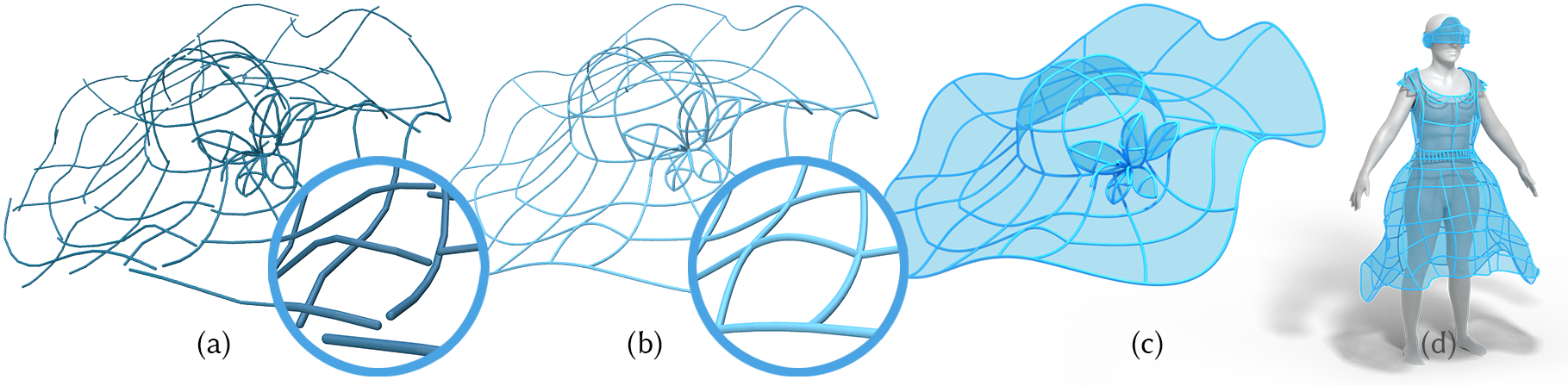

In this project, we develop CASSIE, a conceptual modeling system in VR that leverages freehand mid-air sketching, and a novel 3D optimization framework to create connected curve network armatures (Fig. 5b), predictively surfaced using patches with C0 continuity (Fig. 5c). This system provides a judicious balance of interactivity and automation, providing a homogeneous 3D drawing interface for a mix of freehand curves, curve networks, and surface patches. This encourages and aids users in drawing consistent networks of curves, easing the transition from freehand ideation to concept modeling. A comprehensive user study with professional designers as well as amateurs (N=12), and a diverse gallery of 3D models, show our armature and patch functionality to offer a user experience and expressivity on par with freehand ideation while creating sophisticated concept models for downstream applications.

This work is a collaboration with Rahul Arora and Karan Singh from the University of Toronto and J. Andreas Bærentzen from the Technical University of Denmark. It was published at ACM SIGCHI 2021 [20].

7.1.3 Piecewise-Smooth Surfacing of Unstructured 3D sketches

Participants: Emilie Yu, Adrien Bousseau.

We present a method to automatically surface unstructured sparse 3D sketches that faithfully recovers sharp features. Based on the insight that artists tend to depict piecewise-smooth objects by placing strokes at sharp features, we develop a method that recovers a piecewise-smooth surface that approximates all strokes and with discontinuities aligned with sharp feature strokes. We evaluate our method by surfacing more than 50 sketches from various sources – VR systems, sketch-based modeling interfaces – and by comparing our result to ground truth surfaces from which we generate synthetic sketches.

This work is a collaboration with Rahul Arora and Karan Singh from the University of Toronto and J. Andreas Bærentzen from the Technical University of Denmark.

7.1.4 Computational Design of Self-Actuated Surfaces by Printing Plastic Stripes on Stretched Fabric

Participants: David Jourdan, Adrien Bousseau.

In this project, we propose to solve the inverse problem of designing self-actuated structures that morph from a flat sheet to a curved 3D geometry by embedding plastic strips into a pre-stretched piece of fabric. Our inverse design tool takes as input a triangle mesh representing the desired target (deployed) surface shape, and simultaneously computes (1) a flattening of this surface into the plane, and (2) a stripe pattern on this planar domain, so that 3D-printing the stripe pattern onto the fabric with constant pre-stress, and cutting the fabric along the boundary of the planar domain, yields an assembly whose static shape deploys to match the target surface. Combined with a novel technique allowing us to print on both sides of the fabric, this algorithm allows us to reproduce a wide variety of shapes.

This ongoing work is a collaboration with Mélina Skouras from Inria Grenoble Rhône-Alpes and Etienne Vouga from UT Austin.

7.1.5 Bilayer shell simulation of self-shaping textiles

Participants: David Jourdan, Adrien Bousseau.

We propose a method that is capable of computing the final deployed shape of printed-on-fabric patterns of any complexity. To do so, we develop a shell simulation method taking into account both intrinsic behaviors such as metric frustration due to the printed rods having finite width, and extrinsic behaviors such as bilayer effects due to the layers of plastic and fabric having different reference geometries.

Our method is built on top of a physically accurate shell simulation model tailored to reproducing bilayer effects (such as those encountered when attaching a rigid material to a pre-stretched substrate). The geometry of the final deployed shape can be very sensitive to material properties, such as the Young's modulus of each material, and to geometric properties, such as the thickness of each layer. We therefore measured those properties accurately to calibrate the form-finding method.

This ongoing work is a collaboration with Mélina Skouras and Victor Romero from Inria Grenoble Rhône-Alpes and Etienne Vouga from UT Austin.

7.1.6 Symmetric Concept Sketches

Participants: Felix Hahnlein, Adrien Bousseau.

In this project, we leverage symmetry as a prior for reconstructing concept sketches into 3D. Reconstructing concept sketches is extremely challenging, notably due to the presence of construction lines. We observe that designers commonly use 2D construction techniques which yield symmetric lines. We are currently developing a method which makes use of an integer programming solver to identify symmetric pairs and deduce the resulting 3D reconstruction. To evaluate our method, we compare the results with those obtained by previous methods.

This ongoing work is a collaboration with Alla Sheffer from the University of British Columbia and Yulia Gryaditskaya from the University of Surrey.

7.1.7 Synthesizing Concept Sketches

Participants: Felix Hahnlein, Changjian Li, Adrien Bousseau.

Designers use construction lines to accurately sketch 3D shapes in perspective. In this project, we explore how to synthesize the most commonly used construction lines. Informed by observations from both sketching textbooks and statistics from real-world sketches, we develop a method which procedurally generates a set of construction lines from a given 3D design sequence. Then, we formulate a discrete optimization problem which selects the final construction lines by balancing design intent, sketching accuracy, and sketch readability, thereby mimicking a typical trade-off that a designer is faced with.

This work is a collaboration with Niloy Mitra from University College London and Adobe Research.

7.1.8 Predict and visualize 3D fluid flow around design drawings

Participants: Nicolas Rosset, Guillaume Cordonnier, Adrien Bousseau.

The design of aerodynamic objects (e.g., cars, planes) often requires several iterations between the designer and complex simulations. In this project, we build a design tool that sidesteps the simulation and informs the designer about aerodynamic flaws by annotating directly on top of the input sketch. Core to our method is a learned model that encodes the correspondences between user sketches and pre-computed flow simulations for a family of shapes (cars). At inference time, our system takes a user sketch as input and outputs physical variables such as velocity or pressure. Backpropagating gradients through the model will further allow us to propose corrections to the input sketch that improve the aerodynamic properties of the sketched object.

This work is done in collaboration with Regis Duvigneau from the Acumes team of Inria Sophia Antipolis.

7.1.9 SKetch2CAD++

Participants: Changjian Li, Adrien Bousseau.

In this project, we propose a sketch-based modeling system that effectively turns a complete drawing of a 3D shape into a sequence of CAD operations that reproduce the desired shape. We formulate this problem as a grouping task, for which we propose a novel neural network architecture for stroke grouping. We also propose an interleaved segmentation and fitting scheme that leverages 3D geometry to improve the grouping of strokes that represent CAD operations. Combining the two ingredients, our sketch-based system allows users to draw entire 3D shapes freehand without knowing how they should be decomposed into CAD operations.

This is a collaboration with Niloy J. Mitra from University College London and Adobe Research, and Hao Pan from Microsoft Research Asia.

7.1.10 Deep Reconstruction of 3D Smoke Densities from Artist Sketches

Participants: Guillaume Cordonnier.

Creative processes of artists often start with hand-drawn sketches illustrating an object. We explore a method to compute a 3D smoke density field directly from 2D artist sketches, bridging the gap between early-stage prototyping of smoke keyframes and pre-visualization. We introduce a differentiable sketcher, used in the end-to-end training, and as a key component to the generation of our dataset.

This project is a collaboration with Byungsoo Kim, Laura Wülfroth, Jingwei Tang, Markus Gross, and Barbara Solenthaler from ETH Zurich, and Xingchang Huan from the Max Planck Institute for Informatics.

7.1.11 Speech-Assisted Design Sketching

Participants: Adrien Bousseau, Capucine Nghiem.

We are interested in studying how speech recognition can enhance artistic sketching. As a first step, we collaborate with design teachers to develop a video processing tool that would convert online courses into interactive step-by-step tutorials. Our tool combines drawing analysis and speech recognition to identify important steps in the video.

This work is a collaboration with Capucine Nghiem and Theophanis Tsandilas from ExSitu at Inria Saclay, which was initiated during Capucine's master's thesis in our group. We also collaborate with Mark Sypesteyn and Jan Willem Hoftijzer from TU Delft and with Maneesh Agrawala from Stanford.

7.2 Graphics with Uncertainty and Heterogeneous Content

7.2.1 FreeStyleGAN: Free-view Editable Portrait Rendering with the Camera Manifold

Participants: Thomas Leimkühler, George Drettakis.

Current Generative Adversarial Networks (GANs) produce photorealistic renderings of portrait images. Embedding real images into the latent space of such models enables high-level image editing. While recent methods provide considerable semantic control over the (re-)generated images, they can only generate a limited set of viewpoints and cannot explicitly control the camera. Such 3D camera control is required for 3D virtual and mixed reality applications.

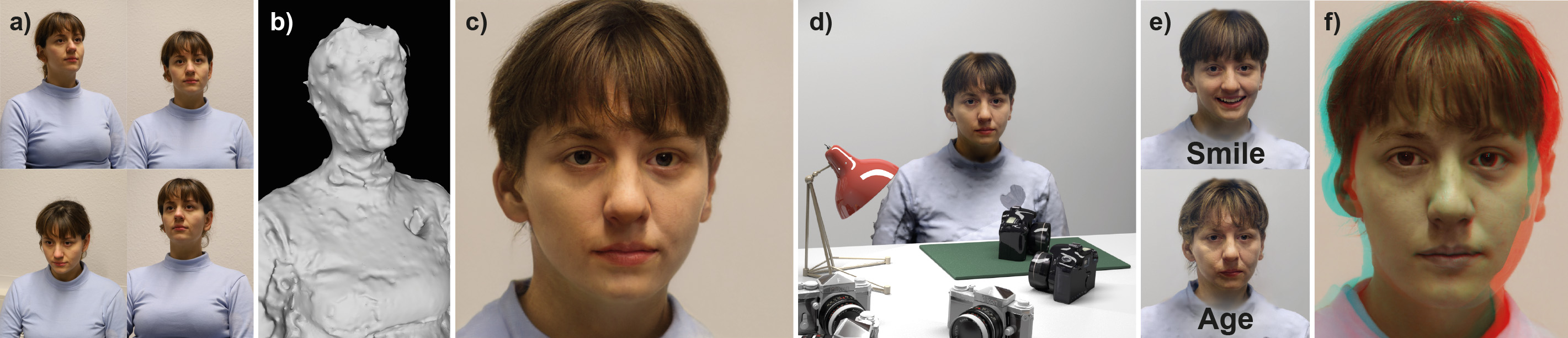

In our solution, we use a few images of a face (Fig. 6a) to perform 3D reconstruction (Fig. 6b), and we introduce the notion of the GAN camera manifold, the key element allowing us to precisely define the range of images that the GAN can reproduce in a stable manner. We train a small face-specific neural implicit representation network to map a captured face to this manifold (Fig. 6c) and complement it with a warping scheme to obtain free-viewpoint novel-view synthesis. We show how our approach – due to its precise camera control – enables the integration of a pre-trained StyleGAN into standard 3D rendering pipelines, allowing e.g., stereo rendering (Fig. 6f) or consistent insertion of faces in synthetic 3D environments (Fig. 6d). Our solution proposes the first truly free-viewpoint rendering of realistic faces at interactive rates, using only a small number of casual photos as input, while simultaneously allowing semantic editing, such as changes of facial expression or age (Fig. 6e).

This work was published in ACM Transactions on Graphics and presented at SIGGRAPH Asia 2021 [15].

7.2.2 Point-Based Neural Rendering with Per-View Optimization

Participants: Georgios Kopanas, Julien Philip, Thomas Leimkühler, George Drettakis.

There has recently been great interest in neural rendering methods. Some approaches use 3D geometry reconstructed with Multi-View Stereo (MVS) but cannot recover from the errors of this process, while others directly learn a volumetric neural representation, but suffer from expensive training and inference.

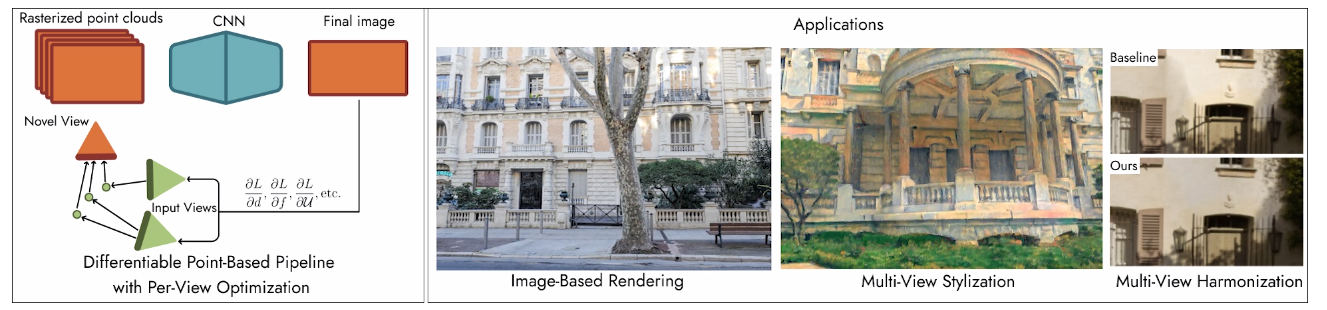

We introduce a general approach that is initialized with MVS, but allows further optimization of scene properties in the space of input views, including depth and reprojected features, resulting in improved novel-view synthesis. A key element of our approach is our new differentiable point-based pipeline, based on bi-directional Elliptical Weighted Average splatting, a probabilistic depth test, and effective camera selection. We use these elements together in our neural renderer, which outperforms all previous methods both in quality and speed in almost all scenes we tested. Our pipeline can be applied to multi-view harmonization and stylization in addition to novel-view synthesis.

This work was published in Computer Graphics Forum and presented at EGSR 2021 [14].

7.2.3 Hybrid Image-based Rendering for Free-view Synthesis

Participants: Siddhant Prakash, Thomas Leimkühler, Simon Rodriguez, George Drettakis.

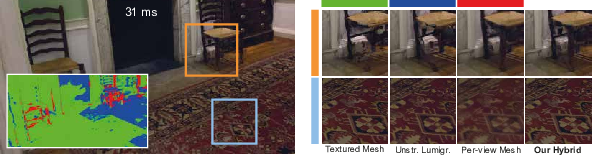

Image-based rendering (IBR) provides a rich toolset for free-viewpoint navigation in captured scenes. Many methods exist, usually with an emphasis either on image quality or rendering speed. We identify common IBR artifacts and combine the strengths of different algorithms to strike a good balance in the speed/quality tradeoff. First, we address the problem of visible color seams that arise from blending casually-captured input images by explicitly treating view-dependent effects. Second, we compensate for geometric reconstruction errors by refining per-view information using a novel clustering and filtering approach. Finally, we devise a practical hybrid IBR algorithm, which locally identifies and utilizes the rendering method best suited for an image region while retaining interactive rates. We compare our method against classical and modern (neural) approaches in indoor and outdoor scenes and demonstrate superiority in quality and/or speed (Fig. 8).

This work was published in Proceedings of the ACM on Computer Graphics and Interactive Techniques, and presented at the ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games [17].

7.2.4 Free-viewpoint Indoor Neural Relighting from Multi-view Stereo

Participants: Julien Philip, Sébastien Morgenthaler, George Drettakis.

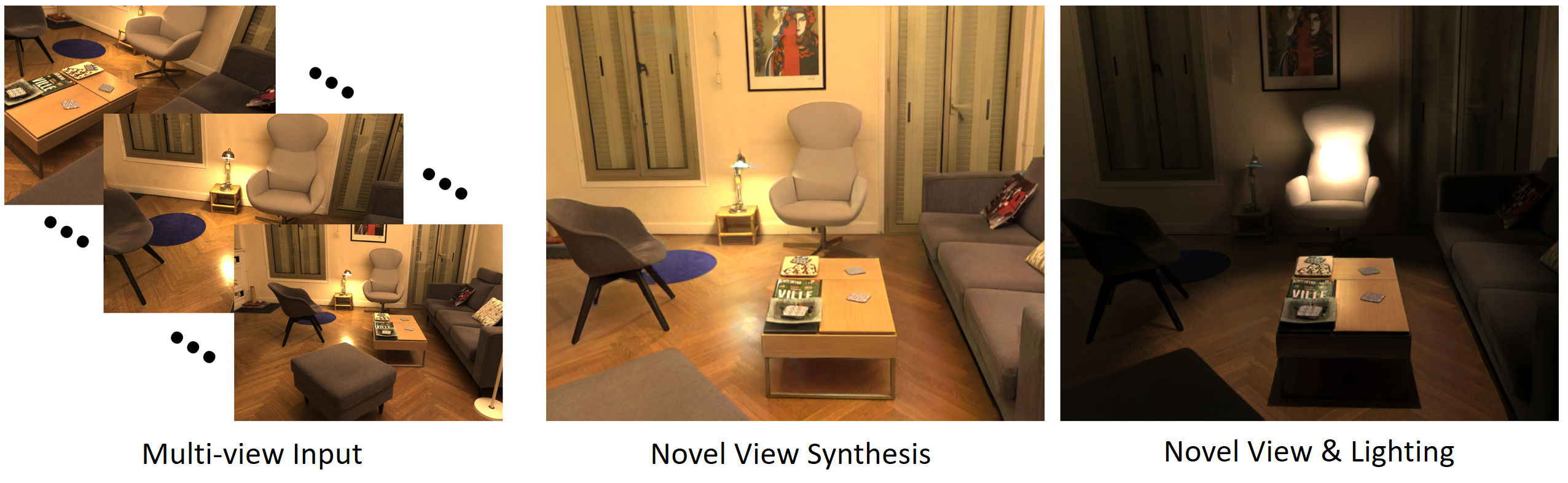

We introduce a neural relighting algorithm for captured indoor scenes that allows interactive free-viewpoint navigation. Our method allows illumination to be changed synthetically, while coherently rendering cast shadows and complex glossy materials. We start with multiple images of the scene and a 3D mesh obtained by multi-view stereo (MVS) reconstruction. We assume that lighting is well-explained as the sum of a view-independent diffuse component and a view-dependent glossy term concentrated around the mirror reflection direction. We design a convolutional network around input feature maps that facilitate learning of an implicit representation of scene materials and illumination, enabling both relighting and free-viewpoint navigation. We generate these input maps by exploiting the best elements of both image-based and physically-based rendering. We sample the input views to estimate diffuse scene irradiance, and compute the new illumination caused by user-specified light sources using path tracing. To facilitate the network's understanding of materials and synthesize plausible glossy reflections, we reproject the views and compute mirror images. We train the network on a synthetic dataset where each scene is also reconstructed with MVS. We show results of our algorithm relighting real indoor scenes and performing free-viewpoint navigation with complex and realistic glossy reflections, which so far remained out of reach for view-synthesis techniques (Fig. 9).
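The lighting assumption stated above (a view-independent diffuse component plus a view-dependent glossy term concentrated around the mirror direction) can be illustrated with a small analytic sketch. In the method itself the glossy behaviour is learned by the network; the Phong-style lobe used here, together with the names `mirror_direction` and `shade` and the parameters `diffuse`, `gloss` and `shininess`, are stand-in assumptions for illustration only.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def mirror_direction(light_dir, normal):
    """Reflect the unit direction towards the light about the unit normal."""
    d = dot(light_dir, normal)
    return tuple(2.0 * d * n - l for n, l in zip(normal, light_dir))

def shade(normal, light_dir, view_dir, diffuse=0.6, gloss=0.4, shininess=50.0):
    """Diffuse term (independent of view_dir) plus a lobe around the mirror direction."""
    n, l, v = normalize(normal), normalize(light_dir), normalize(view_dir)
    cos_light = max(dot(n, l), 0.0)
    diffuse_term = diffuse * cos_light
    glossy_term = 0.0
    if cos_light > 0.0:
        r = mirror_direction(l, n)
        glossy_term = gloss * max(dot(r, v), 0.0) ** shininess
    return diffuse_term + glossy_term

# Same surface point and light, seen from the mirror direction and from above.
at_mirror = shade((0, 0, 1), (1, 0, 1), (-1, 0, 1))
off_mirror = shade((0, 0, 1), (1, 0, 1), (0, 0, 1))
```

Only the glossy term changes between the two viewpoints, which is why the method supplies the network with reprojected views and mirror images as extra input.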

This work is a collaboration with Michaël Gharbi from Adobe Research. It was published in ACM Transactions on Graphics and will be presented at SIGGRAPH 2022 [16].

7.2.5 Video-Based Rendering of Dynamic Stationary Environments from Unsynchronized Inputs

Participants: Théo Thonat, George Drettakis.

Image-Based Rendering allows users to easily capture a scene using a single camera and then navigate freely with realistic results. However, the resulting renderings are completely static, and dynamic effects cannot be reproduced. We tackle the challenging problem of enabling free-viewpoint navigation including such stationary dynamic effects, while still maintaining the simplicity of casual capture. Using a single camera – instead of previous complex synchronized multi-camera setups – means that we have unsynchronized videos of the dynamic effect from multiple views, making it hard to blend them when synthesizing novel views. We present a solution that allows smooth free-viewpoint video-based rendering (VBR) of such scenes using a temporal Laplacian pyramid decomposition of the videos, enabling spatio-temporal blending. For effects such as fire and waterfalls, which are semi-transparent and occupy 3D space, we first estimate their spatial volume. This allows us to create per-video geometries and alpha-matte videos that we can blend using our frequency-dependent method. We show results on scenes containing fire, waterfalls, or rippling waves at the seaside, bringing these scenes to life (Fig. 10).
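To make the idea of a temporal Laplacian pyramid concrete, here is a minimal sketch that decomposes the intensity of a single pixel over time into frequency bands, blends two such signals band by band, and collapses the result. It is a generic 1D Laplacian pyramid, not the authors' implementation: the [1, 2, 1] / 4 kernel, the linear upsampling, and the per-band weights in `blend` are assumptions chosen for simplicity.

```python
def smooth(signal):
    """Blur with a [1, 2, 1] / 4 kernel, clamping indices at both ends."""
    n = len(signal)
    return [0.25 * signal[max(t - 1, 0)] + 0.5 * signal[t]
            + 0.25 * signal[min(t + 1, n - 1)] for t in range(n)]

def downsample(signal):
    """Blur, then keep every second time sample."""
    return smooth(signal)[::2]

def upsample(signal, length):
    """Linearly interpolate a coarse signal back to `length` samples."""
    last = len(signal) - 1
    out = []
    for t in range(length):
        i, odd = divmod(t, 2)
        a, b = signal[min(i, last)], signal[min(i + 1, last)]
        out.append(0.5 * (a + b) if odd else a)
    return out

def build_pyramid(signal, levels):
    """Return `levels` band-pass residuals followed by the low-pass remainder."""
    bands, current = [], list(signal)
    for _ in range(levels):
        coarse = downsample(current)
        predicted = upsample(coarse, len(current))
        bands.append([c - p for c, p in zip(current, predicted)])
        current = coarse
    bands.append(current)
    return bands

def collapse(bands):
    """Invert build_pyramid by upsampling and adding the bands back."""
    current = bands[-1]
    for band in reversed(bands[:-1]):
        up = upsample(current, len(band))
        current = [u + b for u, b in zip(up, band)]
    return current

def blend(bands_a, bands_b, weights):
    """Blend two pyramids; weights[k] is the share of A in band k."""
    return [[w * a + (1.0 - w) * b for a, b in zip(band_a, band_b)]
            for band_a, band_b, w in zip(bands_a, bands_b, weights)]
```

For example, `collapse(blend(build_pyramid(a, 3), build_pyramid(b, 3), [1.0, 1.0, 0.0, 0.0]))` keeps the fast temporal detail of `a` and the slow variation of `b`. Because each band is blended separately, misaligned high-frequency motion from unsynchronized videos can be weighted differently from the slowly varying content.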

This work is a collaboration with Sylvain Paris from Adobe Research, Miika Aittala from MIT CSAIL, and Yagiz Aksoy from ETH Zurich. It was published in Computer Graphics Forum and presented at EGSR 2021 [18].

7.2.6 Authoring Consistent Landscapes with Flora and Fauna

Participants: Guillaume Cordonnier.

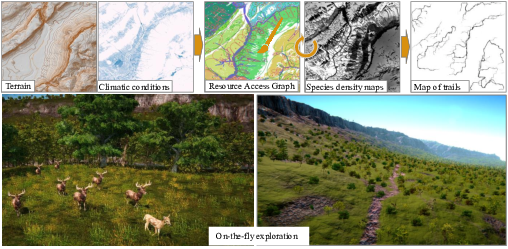

We develop a novel method for authoring landscapes with flora and fauna while considering their mutual interactions. Our algorithm outputs a steady-state ecosystem in the form of density maps for each species, their daily circuits, and a modified terrain with eroded trails from a terrain, climatic conditions, and species with related biological information. We introduce the Resource Access Graph, a new data structure that encodes both interactions between food chain levels and animals traveling between resources over the terrain. A novel competition algorithm operating on this data progressively computes a steady-state solution up the food chain, from plants to carnivores. The user can explore the resulting landscape, where plants and animals are instantiated on the fly, and interactively edit it by over-painting the maps. Our results show that our system enables the authoring of consistent landscapes where the impact of wildlife is visible through animated animals, clearings in the vegetation, and eroded trails (Fig. 11). We provide quantitative validation with existing ecosystems and a user study with expert paleontologist end-users, showing that our system enables them to author and compare different ecosystems illustrating climate changes over the same terrain while enabling relevant visual immersion into consistent landscapes.

This work is a collaboration with Pierre Ecormier-Nocca, Pooran Memari and Marie-Paule Cani from Ecole polytechnique, Philippe Carrez from Immersion Tools, Anne-Marie Moigne from the Muséum national d’Histoire naturelle, and Bedrich Benes from Purdue University. It was published in ACM TOG and presented at SIGGRAPH 2021 [11].

7.2.7 Deep learning speeds up ice flow modelling by several orders of magnitude

Participants: Guillaume Cordonnier.

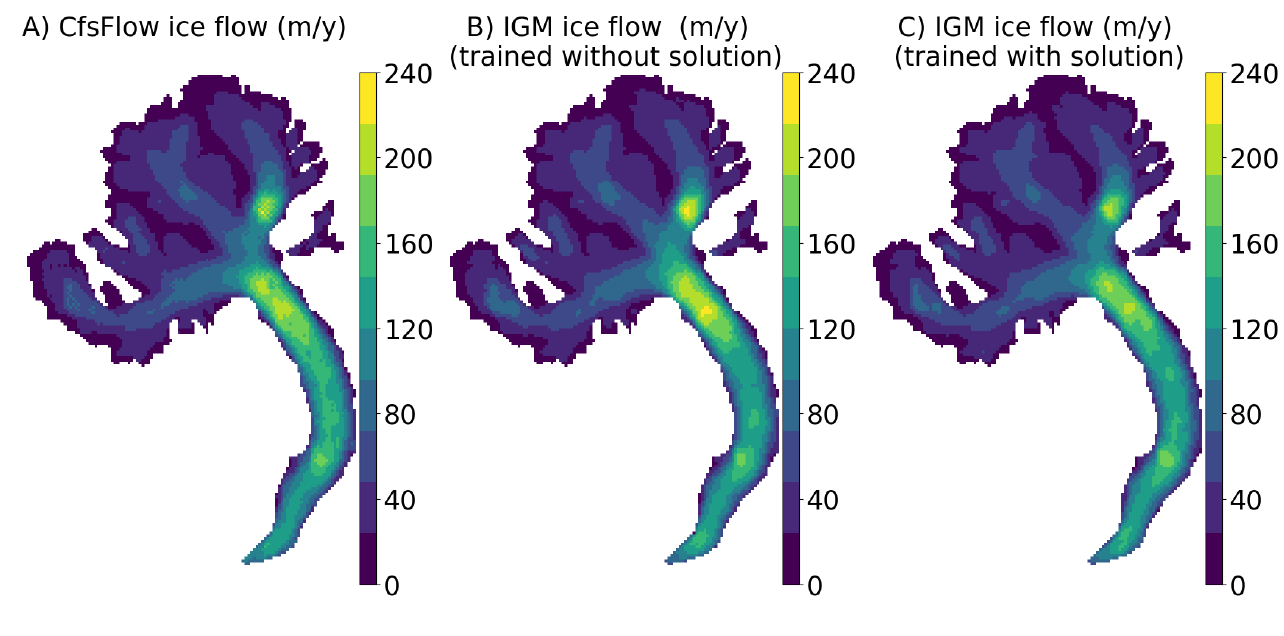

This project introduces the Instructed Glacier Model (IGM), a model that simulates ice dynamics, mass balance, and their coupling to predict the evolution of glaciers, icefields, or ice sheets. The novelty of IGM is that it models the ice flow with a convolutional neural network, which is trained on data generated with hybrid SIA+SSA or Stokes ice flow models. By doing so, the most computationally demanding model component is replaced by a cheap emulator. Once trained with representative data, IGM can model mountain glaciers up to 1000 times faster than Stokes models on CPU, with fidelity levels above 90% in terms of ice flow solutions, leading to nearly identical transient thickness evolution. Switching to the GPU often yields significant additional speed-ups, especially when emulating Stokes dynamics and/or modelling at high spatial resolution. IGM is an open-source Python code that handles two-dimensional gridded input and output data. Together with a companion library of trained ice flow emulators, IGM permits user-friendly, highly efficient, and mechanically state-of-the-art glacier and icefield simulations (Fig. 12).

This project was a collaboration with Guillaume Jouvet, Martin Lüthi and Andreas Vieli from the University of Zurich, Byungsoo Kim from ETH Zurich, and Andy Aschwanden from the University of Alaska Fairbanks. It was published in the Journal of Glaciology [13].

7.2.8 Indoor Scene Material Capture

Participants: Siddhant Prakash, Gilles Rainer, Adrien Bousseau, George Drettakis.

Material capture from a set of images has many applications in augmented and virtual reality. Recent work captures the appearance of flat patches and objects, but not of full scenes with multiple objects. We aim to develop a novel learning-based solution to capture the materials of indoor scenes. Our method takes a set of images of a scene and infers materials by observing surfaces from multiple viewpoints. Once trained, the network will generate high-quality per-view SVBRDF maps that are consistent across all observed views. The applications of our method include relighting, object insertion, and material manipulation in real and synthetic scenes.

7.2.9 Neural Precomputed Radiance Transfer

Participants: Gilles Rainer, Adrien Bousseau, George Drettakis.

Pre-computed Radiance Transfer (PRT) is a well-studied family of Computer Graphics techniques for real-time rendering. We study neural rendering in the same setting as PRT for global illumination of static scenes under dynamic environment lighting. We introduce four different network architectures and show that those based on knowledge of light transport models and PRT-inspired principles improve the quality of global illumination predictions at equal training time and network size, offering a lightweight alternative to real-time rendering with high-end ray-tracing hardware.
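For readers unfamiliar with the classical setting that these networks build on, the sketch below shows the core PRT idea: lighting is projected onto a small basis, a transfer vector is precomputed per surface point, and shading under new lighting reduces to a dot product. This is textbook unshadowed diffuse PRT with the first four real spherical harmonics, not one of the four architectures studied here; the function names and the example lighting are assumptions.

```python
import math

Y00 = 0.5 * math.sqrt(1.0 / math.pi)       # band-0 SH constant
Y1 = math.sqrt(3.0 / (4.0 * math.pi))      # band-1 SH constant

def sh_basis(direction):
    """First four real spherical harmonics at a unit direction (x, y, z)."""
    x, y, z = direction
    return [Y00, Y1 * y, Y1 * z, Y1 * x]

def diffuse_transfer(normal, albedo):
    """Precomputed transfer vector for an unshadowed diffuse point.

    The clamped cosine scales band 0 by pi and band 1 by 2*pi/3;
    the albedo / pi factor is the Lambertian BRDF.
    """
    band_scale = [math.pi] + [2.0 * math.pi / 3.0] * 3
    return [albedo / math.pi * a * y
            for a, y in zip(band_scale, sh_basis(normal))]

def shade(transfer, lighting):
    """Run-time shading: one dot product per surface point."""
    return sum(t * l for t, l in zip(transfer, lighting))

# SH coefficients of two simple environments (radiance as a function of direction):
constant_env = [4.0 * math.pi * Y00, 0.0, 0.0, 0.0]        # L = 1
sky_env = [4.0 * math.pi * Y00, 0.0, 4.0 * math.pi / 3.0 * Y1, 0.0]  # L = 1 + z

up = diffuse_transfer((0.0, 0.0, 1.0), albedo=0.8)
down = diffuse_transfer((0.0, 0.0, -1.0), albedo=0.8)
```

Changing the environment only changes the lighting vector, so `shade(up, sky_env)` and `shade(down, sky_env)` reuse the same precomputed transfer data. This is the property that makes PRT suited to static scenes under dynamic lighting.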

This paper is a collaboration with Tobias Ritschel from University College London.

7.2.10 Neural Global Illumination of Variable Scenes

Participants: Stavros Diolatzis, Julien Philip, George Drettakis.

We introduce a new method for the neural rendering of variable scenes at interactive framerates. Our method focuses on reducing the amount of data needed to train our neural generator compared to previous methods. In addition, we can handle hard light transport effects, such as specular-diffuse-specular paths and reflections, that denoising methods struggle with.

7.2.11 Meso-GAN

Participants: Stavros Diolatzis, George Drettakis.

We propose the use of a generative model to create neural textures of meso-scale materials such as fur, grass, moss, cloth, etc. A generative model allows us to create stochastic 3D textures and incorporate them in path tracing with artist-controllable parameters such as length, color, etc.

This project is a collaboration with Jonathan Granskog, Fabrice Rouselle and Jan Novak from Nvidia, and Ravi Ramamoorthi from the University of California, San Diego.

7.2.12 Learning Interactive Reflection Rendering

Participants: Ronan Cailleau, Gilles Rainer, Thomas Leimkühler, George Drettakis.

In this project, we investigate a machine learning method to compute reflection and refraction flows for synthetic scenes, based on a probe-based parameterization. We first extend a previous interactive reflection algorithm to handle simple cases of refraction and then investigate several different neural network architectures that would learn lookup and filtering of the reflection/refraction probes.

7.2.13 Multi-View Spatio-Temporal Sampling for Accelerated NeRF Training

Participants: Leo Heidelberger, Gilles Rainer, Thomas Leimkühler, George Drettakis.

In this project, we develop a new method to accelerate training times for Neural Radiance Fields (NeRF). The main ideas are to build probability density functions for sampling NeRF by storing and reusing samples during training and to reproject samples from a given input view into other views. The method accelerates training times significantly compared to a standard NeRF baseline.
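The sketch below illustrates the general mechanism of drawing ray samples from a probability density function built from stored weights, using inverse transform sampling over a piecewise-constant PDF. How the project stores, reuses and reprojects samples is not described here, so the per-bin `weights`, the `bin_edges` along the ray, and the function names are assumptions for illustration.

```python
import bisect
import random

def build_cdf(weights):
    """Cumulative distribution over bins from non-negative weights."""
    total = sum(weights)
    cdf, acc = [0.0], 0.0
    for w in weights:
        acc += w / total
        cdf.append(acc)
    cdf[-1] = 1.0  # guard against floating-point drift
    return cdf

def sample_depths(bin_edges, weights, count, rng):
    """Draw `count` depths along a ray, denser where the weights are large."""
    cdf = build_cdf(weights)
    depths = []
    for _ in range(count):
        u = rng.random()
        # Find the bin k with cdf[k] <= u < cdf[k + 1].
        k = min(bisect.bisect_right(cdf, u) - 1, len(weights) - 1)
        span = cdf[k + 1] - cdf[k]
        frac = (u - cdf[k]) / span if span > 0.0 else 0.5
        depths.append(bin_edges[k] + frac * (bin_edges[k + 1] - bin_edges[k]))
    return depths

# Hypothetical weights kept from earlier iterations: the ray hits a surface near depth 4.5.
bin_edges = [2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
weights = [0.0, 1.0, 8.0, 1.0, 0.0]
depths = sample_depths(bin_edges, weights, 2000, random.Random(0))
```

Concentrating samples where earlier iterations found density avoids spending network evaluations on empty space, which is one plausible source of the training speed-up.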

7.2.14 Interactive simulation of plume and pyroclastic volcanic ejections

Participants: Guillaume Cordonnier.

In this project, we propose an interactive animation method for the ejection of gas and ash mixtures in a volcanic eruption. Our layered solution combines a coarse-grain, physically-based simulation of the ejection dynamics with a consistent, procedural animation of multi-resolution details. We show that this layered model can be used to capture the two main types of ejection, namely ascending plume columns and pyroclastic flows.

This project is a collaboration with Maud Lastic, Damien Rohmer and Marie-Paule Cani from Ecole Polytechnique, Fabrice Neyret from CNRS and Université Grenoble Alpes, and Claude Jaupart from the Institut de Physique du Globe de Paris.

7.2.15 Landscape rendering

Participants: Stephane Chabrillac, Thomas Leimkühler, Guillaume Cordonnier, George Drettakis.

Several methods enable the generation or capture of terrain geometry, but the path from geometry to rendering is still challenging, as it requires texturing the terrain and instantiating countless objects from a variety of biomes. In this project, we propose an automatic approach to render a landscape directly from a digital elevation map, using a learning method inspired by the family of image-to-image translation models, augmented to support consistency with respect to view movement across a large range of scales.

This work is a collaboration with Marie-Paule Cani from Ecole polytechnique and Thomas Dewez and Simon Lopez from BRGM.

7.2.16 Neural Catacaustics

Participants: Georgios Kopanas, Thomas Leimkühler, Gilles Rainer, George Drettakis.

View-dependent effects such as reflections have always posed a substantial challenge for image-based rendering algorithms. Above all, non-planar reflectors are a particularly difficult case, as they lead to highly non-linear reflection flows. We employ a point-based representation to render novel views of scenes with non-planar specular and glossy reflectors from a set of casually-captured input photos. At the core of our method is a neural field that models the catacaustic surfaces of reflections, such that complex view-dependent effects can be rendered using efficient point splatting in conjunction with a neural renderer. We show that our approach leads to real-time and high-quality renderings with coherent (glossy) reflections, while naturally establishing correspondences between reflected objects across views and allowing intuitive material editing.

8 Bilateral contracts and grants with industry

8.1 Bilateral Grants with Industry

We have received regular donations from Adobe Research, thanks to our collaboration with J. Philip and the internship of F. Hähnlein.

9 Partnerships and cooperations

9.1 International research visitors

9.1.1 Visits of international scientists

Other international visits to the team

Miika Aittala

- Status: Researcher

- Institution of origin: Nvidia Research

- Country: Finland

- Dates: Sept. 16-17th

- Context of the visit: Invited talk (team retreat)

Mathieu Aubry

- Status: Researcher

- Institution of origin: Ponts ParisTech

- Country: France

- Dates: Sept. 16-17th

- Context of the visit: Invited talk (team retreat)

James Tompkin

- Status: Assistant professor

- Institution of origin: Brown University

- Country: USA

- Dates: Dec. 17th

- Context of the visit: Invited talk

9.1.2 Visits to international teams

Research stays abroad

Stavros Diolatzis

- Visited institution: Nvidia Research

- Country: Switzerland (remote)

- Dates: Sep 1st - Nov 30th

- Mobility program/type of mobility: Internship

Felix Hähnlein

- Visited institution: Adobe Research

- Country: UK (remote)

- Dates: Jun 1st - Aug 13th

- Mobility program/type of mobility: Internship

9.2 European initiatives

9.2.1 ERC D3: Drawing Interpretation for 3D Design

Participants: Adrien Bousseau, Changjian Li, Felix Hähnlein, David Jourdan, Emilie Yu.

Line drawing is a fundamental tool for designers to quickly visualize 3D concepts. The goal of this ERC project is to develop algorithms capable of understanding design drawings. This year, we continued progressing on the 3D reconstruction of 2D design drawings, including results on the challenging case of exaggerated fashion drawings [12]. We also contributed to the emerging topic of 3D drawing in Virtual Reality by describing a novel user interface to help designers draw curves and surfaces with this medium [20]. Finally, we presented our work on fabricated 3D freeform shapes at the conference Advances in Architectural Geometry [19].

9.2.2 ERC FunGraph

Participants: George Drettakis, Thomas Leimkühler, Julien Philip, Gilles Rainer, Mahdi Benadel, Stavros Diolatzis, Georgios Kopanas, Siddhant Prakash, Ronan Cailleau, Leo Heidelberger.

This year, we had several new results for FUNGRAPH. We developed a relighting and novel view synthesis algorithm for indoor scenes [16], a hybrid algorithm for Image-Based Rendering [17], a Point-based Neural Rendering algorithm [14], an algorithm that allows the use of true 3D camera information for Generative Adversarial Network renderings of faces [15], and a video-based rendering method for stochastic phenomena [18].

10 Dissemination

10.1 Promoting scientific activities

10.1.1 Scientific events: organisation

General chair, scientific chair

- Adrien Bousseau, Guillaume Cordonnier and George Drettakis participated in the organization of the Journées Françaises d'Informatique Graphique (JFIG) at the Inria Sophia Antipolis center.

Member of the organizing committees

- Adrien Bousseau chaired the SIGGRAPH Thesis Fast-Forward, which allows Ph.D. students to showcase their work in a short, 3-minute video.

- George Drettakis chairs the Eurographics Working Group on Rendering and the Steering Committee of the Eurographics Symposium on Rendering and was a member of the Papers Advisory Board for SIGGRAPH and SIGGRAPH Asia 2021.

10.1.2 Scientific events: selection

Chair of conference program committees

- Adrien Bousseau was program co-chair for the Eurographics Symposium on Rendering (EGSR).

Member of the conference program committees

- George Drettakis was a member of the program committee for SIGGRAPH.

- Guillaume Cordonnier was a member of the program committee for Motion, Interaction and Games (MIG).

- Thomas Leimkühler was a member of the program committees of Eurographics Short Papers and High Performance Graphics.

10.1.3 Journal

Member of the editorial boards

- George Drettakis is a member of the Editorial Board of Computational Visual Media (CVM).

- Adrien Bousseau is an associate editor for Computer Graphics Forum.

Reviewer - reviewing activities

- George Drettakis reviewed submissions to SIGGRAPH and EGSR.

- Adrien Bousseau reviewed submissions to SIGGRAPH, SIGGRAPH Asia, Eurographics, IEEE TVCG, ACM TOG, ICLR.

- Guillaume Cordonnier reviewed submissions to SIGGRAPH, IEEE TVCG, Eurographics, Graphical Models, Computers & Graphics, ACM TOG, ICLR.

- Gilles Rainer reviewed submissions to SIGGRAPH, ACM TOG, IEEE TVCG, Eurographics, Eurographics STAR.

- Thomas Leimkühler reviewed submissions to SIGGRAPH Asia, Eurographics, Pacific Graphics, The Visual Computer Journal.

10.1.4 Invited talks

- George Drettakis gave invited talks at Inria Grenoble (September) and at LIRIS Lyon (December); the latter was also a Eurographics French chapter webinar.

- Adrien Bousseau gave an invited talk at the University of Surrey (Center for Vision, Speech and Signal Processing) and at Delft University of Technology (Faculty of Industrial Design Engineering).

- Guillaume Cordonnier gave an invited talk at the LIX (Ecole Polytechnique).

- Thomas Leimkühler gave an invited talk in the Joint Lecture Series at MPI Informatik, Saarbrücken, Germany.

10.1.5 Leadership within the scientific community

- George Drettakis chairs the Eurographics (EG) working group on Rendering, and the steering committee of EG Symposium on Rendering.

- George Drettakis serves as chair of the ACM SIGGRAPH Papers Advisory Group (https://www.siggraph.org/papers-advisory-group/), which chooses the technical papers chairs of ACM SIGGRAPH conferences and is responsible for all issues related to the publication policy of our flagship conferences SIGGRAPH and SIGGRAPH Asia.

10.1.6 Scientific expertise

- George Drettakis is a member of the Ph.D. Award selection committee of the French GdR IG-RV, and was part of the Scientific Advisory Board for the Aalto University School of Science, which performed a remote evaluation this November.

- Adrien Bousseau reviewed a grant application to the ERC (Starting Grant), and participated in the assessment of candidate assistant professors for DTU (Denmark).

10.1.7 Research administration

- George Drettakis is an alternate member (suppléant) of the Inria Scientific Council, co-leads the Working Group on Rendering of the GT IGRV (https://gdr-igrv.fr/gts/gt-rendu/) with R. Vergne, and is a member of the Administrative Council of the Eurographics French Chapter (https://projet.liris.cnrs.fr/egfr/camembers/).

- Guillaume Cordonnier is a member of the direction committee of the GT IGRV CNRS working group (https://gdr-igrv.fr/).

10.2 Teaching - Supervision - Juries

10.2.1 Teaching

- Adrien Bousseau, Guillaume Cordonnier and George Drettakis taught the course "Graphics and Learning", 32h, M2 at the Ecole Normale Superieure de Lyon.

- Emilie Yu taught Object Oriented Conception and Programming, 27h (TD, L1), and Object Oriented Programming Methods, 36h (TD, L3), at Université Côte d'Azur.

- David Jourdan taught imperative programming for 33h (TD, L1) at Université Côte d'Azur.

10.2.2 Supervision

- Ph.D. in progress: Stavros Diolatzis, Guiding and Learning for Illumination Algorithms, since April 2019, George Drettakis.

- Ph.D. in progress: Georgios Kopanas, Neural Rendering with Uncertainty for Full Scenes, since September 2020, George Drettakis.

- Ph.D. in progress: Siddhant Prakash, Rendering with uncertain data with application to augmented reality, since November 2019, George Drettakis.

- Ph.D. in progress: David Jourdan, computational design of deformable structures, since October 2018, Adrien Bousseau.

- Ph.D. in progress: Felix Hähnlein, 3D reconstruction of design drawings, since September 2019, Adrien Bousseau.

- Ph.D. in progress: Emilie Yu, 3D drawing in Virtual Reality, since October 2020, Adrien Bousseau.

- Ph.D. in progress: Nicolas Rosset, sketch-based design of aerodynamic shapes, since October 2021, Adrien Bousseau and Guillaume Cordonnier.

10.2.3 Juries

- George Drettakis was a reviewer for the Ph.D. theses of M. Armando (Inria Grenoble), B. Fraboni (INSA Lyon) and a member of the Ph.D. committee of Y. Wang (Inria Sophia-Antipolis).

- Adrien Bousseau was a reviewer for the Ph.D. theses of Othman Sbai (ENPC), François Desrichard (Université Toulouse III Paul Sabatier), Beatrix-Emoke Fulop-Balogh (Université Claude Bernard - Lyon 1).

10.3 Popularization

10.3.1 Internal or external Inria responsibilities

- George Drettakis chairs the local “Jacques Morgenstern” Colloquium organizing committee.

11 Scientific production

11.1 Major publications

- [1] Single-Image SVBRDF Capture with a Rendering-Aware Deep Network. ACM Transactions on Graphics 37, 2018, 128-143.

- [2] Fidelity vs. Simplicity: a Global Approach to Line Drawing Vectorization. ACM Transactions on Graphics (SIGGRAPH Conference Proceedings), 2016. URL: http://www-sop.inria.fr/reves/Basilic/2016/FLB16

- [3] Lifting Freehand Concept Sketches into 3D. ACM Transactions on Graphics, November 2020.

- [4] OpenSketch: A Richly-Annotated Dataset of Product Design Sketches. ACM Transactions on Graphics, 2019.

- [5] Deep Blending for Free-Viewpoint Image-Based Rendering. ACM Transactions on Graphics (SIGGRAPH Asia Conference Proceedings) 37(6), November 2018. URL: http://www-sop.inria.fr/reves/Basilic/2018/HPPFDB18

- [6] Scalable Inside-Out Image-Based Rendering. ACM Transactions on Graphics (SIGGRAPH Asia Conference Proceedings) 35(6), December 2016. URL: http://www-sop.inria.fr/reves/Basilic/2016/HRDB16