Keywords

Computer Science and Digital Science

- A5.5.4. Animation

- A5.6.1. Virtual reality

- A5.6.3. Avatar simulation and embodiment

- A5.11.1. Human activity analysis and recognition

- A9.3. Signal analysis

Other Research Topics and Application Domains

- B1.2. Neuroscience and cognitive science

- B2.8. Sports, performance, motor skills

- B7.1.1. Pedestrian traffic and crowds

- B9.3. Medias

- B9.5.6. Data science

1 Team members, visitors, external collaborators

Research Scientists

- Julien Pettré [Team leader, INRIA, Senior Researcher, HDR]

- Samuel Boivin [INRIA, Researcher]

- Ludovic Hoyet [INRIA, Researcher, HDR]

Faculty Members

- Kadi Bouatouch [UNIV RENNES I, Emeritus, HDR]

- Marc Christie [UNIV RENNES I, Associate Professor]

- Anne-Hélène Olivier [UNIV RENNES II, Associate Professor, HDR]

Post-Doctoral Fellows

- Pratik Mullick [INRIA, until Sep 2022]

- Pierre Raimbaud [INRIA, until Aug 2022]

- Xi Wang [UNIV RENNES 1, from Jul 2022]

- Katja Zibrek [INRIA]

PhD Students

- Vicenzo Abichequer Sangalli [INRIA]

- Jean-Baptiste Bordier [UNIV RENNES I]

- Ludovic Burg [UNIV RENNES I]

- Thomas Chatagnon [INRIA]

- Adèle Colas [INRIA, until Oct 2022]

- Robin Courant [Ecole Polytechnique, from Nov 2022]

- Alexis Jensen [UNIV RENNES I, from Sep 2022]

- Hongda Jiang [Peking University]

- Alberto Jovane [INRIA]

- Jordan Martin [Université Laval, Québec, Canada, from Mar 2022 until Apr 2022]

- Maé Mavromatis [INRIA]

- Lucas Mourot [INTERDIGITAL]

- Yuliya Patotskaya [INRIA]

- Xiaoyuan Wang [ENS RENNES]

- Tairan Yin [INRIA]

Technical Staff

- Robin Adili [INRIA, Engineer, until Aug 2022]

- Rémi Cambuzat [INRIA, Engineer, from Dec 2022]

- Robin Courant [UNIV RENNES I, Engineer, until Oct 2022]

- Solenne Fortun [INRIA, until Oct 2022, Project manager]

- Nena Markovic [UNIV RENNES I, Engineer]

- Anthony Mirabile [UNIV RENNES I, Engineer]

- Adrien Reuzeau [INRIA, until Sep 2022]

Interns and Apprentices

- Philippe De Clermont Gallerande [INRIA, from Apr 2022 until Nov 2022]

- Victorien Desbois [UNIV RENNES I, Master SIF intern, from May 2022 until Aug 2022]

- Alexis Jensen [INRIA, from Feb 2022 until Aug 2022]

Administrative Assistant

- Gwenaëlle Lannec [INRIA]

Visiting Scientists

- Michael Cinelli [Wilfrid Laurier University, Canada, from May 2022 until Jun 2022]

- Bradford McFadyen [Université Laval, Québec, Canada, from Mar 2022 until Apr 2022]

2 Overall objectives

Numerical simulation is a tool at the disposal of the scientist for the understanding and the prediction of real phenomena. The simulation of complex physical behaviours is, for example, a perfectly integrated solution in the technological design of aircraft, cars or engineered structures in order to study their aerodynamics or mechanical resistance. The economic and technological impact of simulators is undeniable in terms of preventing design flaws and saving time in the development of increasingly complex systems.

Let us now imagine the impact of a simulator that would incorporate our digital alter-egos as simulated doubles that would be capable of reproducing real humans, in their behaviours, choices, attitudes, reactions, movements, or appearances. The simulation would not be limited to the identification of physical parameters such as the shape of an object to improve its mechanical properties, but would also extend to the identification of functional or interaction parameters and would significantly increase its scope of application. Also imagine that this simulation is immersive, that is to say that beyond the simulation of humans, we would enable real users to share the experience of a simulated situation with their digital alter-egos. We would then open up a wide field of possibilities towards studies accounting for psychological and sociological parameters and furthermore experiential, narrative or emotional dimensions. A revolution, but also a key challenge as putting the human being into equations, following all its aspects and dimensions, is extremely difficult. This is the challenge we propose to tackle by exploring the design and applications of immersive simulators for scenes populated by virtual humans.

This challenge is transdisciplinary by nature. The human being can be considered under the eye of Physics, Psychology, Sociology, Neurosciences, which all have triggered many research topics at the interface with Computer Science: biomechanical simulation, animation and graphics rendering of characters, artificial intelligence, computational neurosciences, etc. In such a context, our transversal activity aims to create a new generation of virtual, realistic, autonomous, expressive, reactive and interactive humans to populate virtual scenes. With the ambition of designing an immersive virtual population, our Inria project-team is called The Virtual Us (our virtual alter-egos), with the code name VirtUs. A strong ambition of VirtUs is to create and simulate immersive populated spaces (shortened to “VirtUs simulators”) where both virtual and real humans coexist, with a sufficient level of realism so that the experience lived virtually and its results can be transposed to reality. Achieving this goal allows us to use VirtUs simulators to better digitally replicate humans, to better interact with them, and thus to create a new kind of narrative experience as well as new ways to observe and understand human behaviours.

3 Research program

3.1 Scientific Framework and Challenges

Immersive simulation of populated environments is currently undergoing a revolution on several fronts, whose origins go well beyond the limits of this single theme. Immersive technologies are experiencing an industrial revolution, and are now available at low cost to a very wide range of users. Software solutions for simulation have also undergone an industrial revolution: generic solutions from the world of video games are now commonly available (e.g., Unity, Unreal) to design interactive and immersive virtual worlds in a simplified way. Beyond the technological aspects, simulation techniques, and in particular human-related processes, are undergoing the revolution of machine learning, with a radical shift in their paradigm: from a procedural approach that tends to reproduce the mechanisms by which humans make decisions and carry out actions, we are moving towards solutions that tend to directly reproduce the results of these mechanisms from large statistics of past behaviors. On a broad horizon, these revolutions radically change the interactions between digital systems and the real world including us, suddenly bringing them much closer to certain human capacities to interpret or predict the world, to act or react to it. These technological and scientific revolutions necessarily reposition the application framework of simulators, also opening up new uses, such as the use of immersive simulation as a learning ground for intelligent systems or for data collection on human-computer interaction.

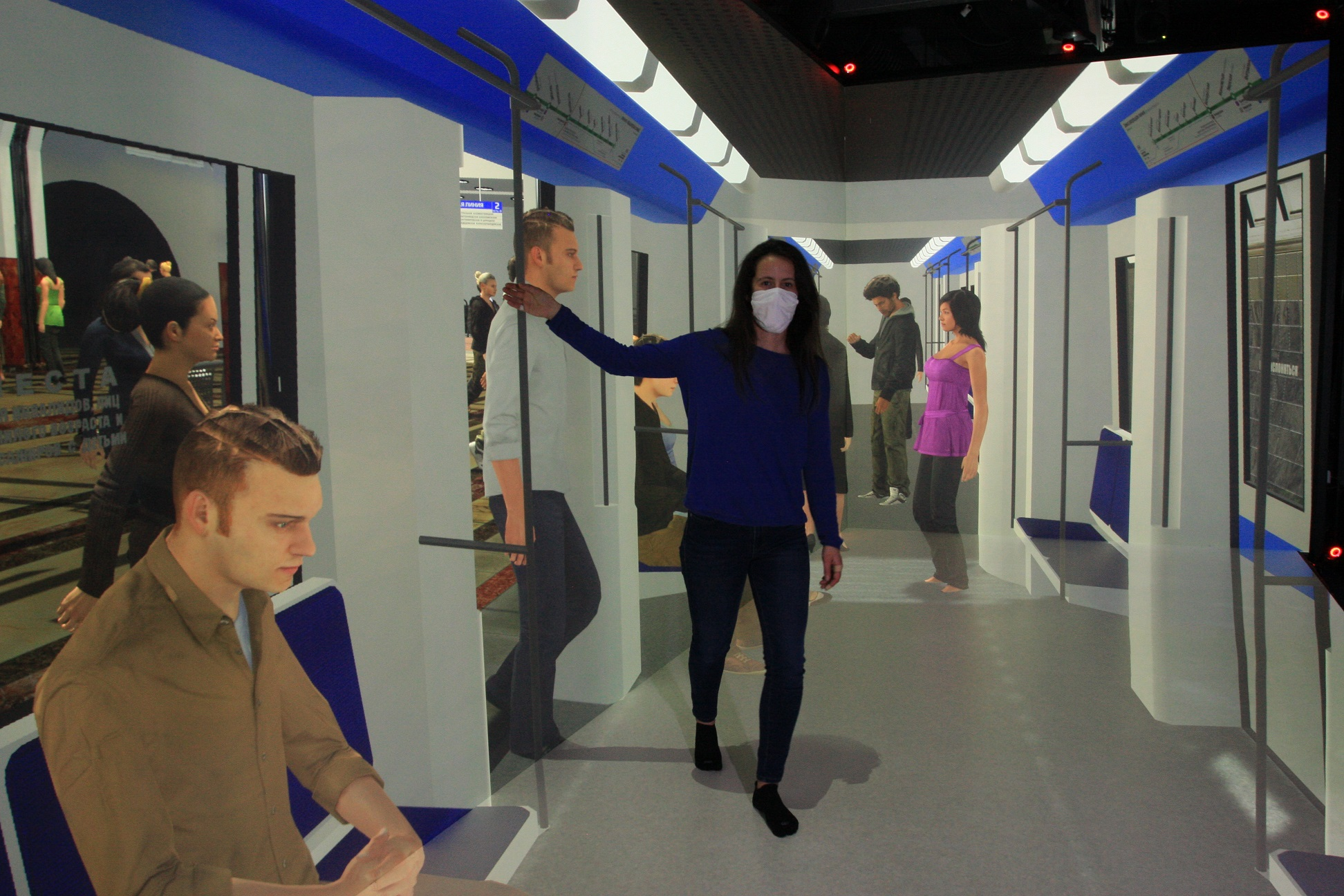

The VirtUs team's proposal is fully in line with this revolution. Typical uses of VirtUs simulators in a technological or scientific setting are, for instance, illustrated in Figure 1 through the following example scenarios:

- S1: We want to model collective behaviours from a microscopic perspective, i.e., to understand the mechanisms of local human interactions and how they result in emergent crowd behaviours. The VirtUs simulator is used to: immerse real users in specific social settings, expose them to controlled behaviours of their neighbours, gather behavioral data in controlled conditions, and as an application, help model decisions.

- S2: We want to evaluate and improve a robot's capabilities to navigate in a public space in proximity to humans. The VirtUs simulator is used to immerse a robot in a virtual public space to: generate an infinite number of test scenarios for it, generate automatically annotated sensor data for the learning of tasks related to its navigation, determine safety-critical density thresholds, observe the reactions of subjects also immersed, etc.

- S3: We want to evaluate the design of a transportation facility in terms of comfort and safety. The VirtUs simulator is used to immerse real users in virtual facilities to: study the positioning of information signs, measure the related gaze activity, evaluate users' personal experiences in changing conditions, evaluate safety procedures in specific crisis scenarios, evaluate reactions to specific events, etc.

These three scenarios allow us to detail the ambitions of VirtUs, and in particular to define the major challenges that the team wishes to take up:

- C1: Better capture the characteristics of human motion in complex and varied situations

- C2: Provide increased realism in individual and collective behaviors (from models gathered in C1)

- C3: Improve the immersion of users, not only to create new user experiences, but also to better capture and understand their behaviors

These scenarios and challenges also show that they cannot be addressed concretely without taking into account the uses made of VirtUs simulators. Scenario S2, for the synthesis of robot sensor data, requires that simulated scenes reflect the same characteristics as real scenes (for instance, training a robot to predict human movements requires that virtual characters indeed cover all the relevant postures and movements). Scenarios S1 and S3 require verifying that users have the same information guiding their behavior as in a real situation (for instance, that the salience of the virtual scene is consistent with reality and causes users to behave in the same way as in a real situation), etc. Thus, while the nature of the scientific questions that animate the team remains, they are addressed across the spectrum of applications of VirtUs simulators. VirtUs members explore some scientific applications that directly contribute to the VirtUs research program or to connected fields, such as: the study of crowd behaviours, the study of pedestrian behaviour, and virtual cinematography.

3.2 Research Axes

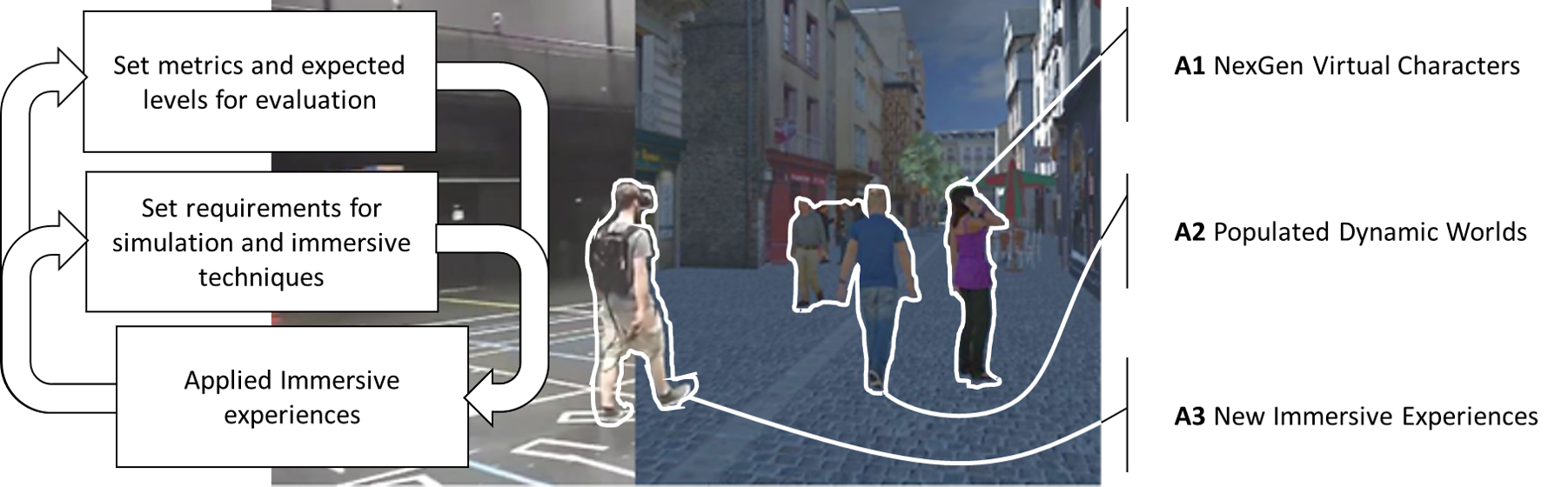

Figure 2 shows how we articulate the challenges taken up by the team, and how we identify 3 research axes to implement this schema, addressing problems at different scales: the individual virtual human one, the group-level one, and the whole simulator one:

3.2.1 Axis A1 - NextGen Virtual Characters

Summary:

At the individual level, we aim at developing the next generation of virtual humans whose capabilities enable them to act autonomously in immersive environments and to interact with immersed users in a natural and believable way.

Vision:

Technology exists today to generate virtual characters with a unique visual appearance (body shape, skin color, hairstyle, clothing, etc.). But it still requires a large programming or design effort to individualize their motion style, to give them traits based on the way they move, or to make them behave in a consistent way across a large set of situations. Our vision is that individualization of motion should be as easy as appearance tuning. This way, virtual characters could for example convey their personality or their emotional state by the way they move, or adapt to new scenarios. Unlike other approaches which rely on ever larger datasets to extend characters' motion capabilities, we prefer exploring procedural techniques to adjust existing motions.

Long term scientific objective:

Axis A1 addresses the challenge C1 to better capture the characteristics of human motion in varied situations, as well as C2 to increase the realism of individual behaviour, with the expectation of achieving a realistic immersive experience of the virtual world by setting the spotlight on the VirtUs characters that populate it. The objective is to bring characters to life, to be our digital alter-egos by the way they move and behave in their environment and by the way they interact with us. Our Grail is the perceived realism of characters' motion, which means that users can understand the meaning of characters' actions, interpret their expressions or predict their behaviors, just like they do with other real humans. In the long term, this objective raises the questions of characters' non-verbal communication capabilities, including multimodal interactions between characters and users - such as touch (haptic rendering of character contacts) or sound (character reaction to user sounds) - as well as adaptation of the character's behaviors to its morphology, physiology or psychology, allowing for example to vary the weight, age, height or emotional state of a character with all the adaptations that follow. Finally, our goal is to avoid the need for constant expansion of motion databases capturing these variations, which requires expensive hardware and effort to achieve, and instead bring them procedurally to existing motions.

3.2.2 Axis A2 - Populated Dynamic Worlds

Summary:

Populated Dynamic Worlds: at the group or crowd level, we aim at developing techniques to design populated environments (i.e., how to define and control the activity of large numbers of virtual humans) as well as to simulate them (i.e., enable them to autonomously interact together, perform group actions and collective behaviours)

Vision:

Designing immersive populated environments requires bringing many characters to life, basically meaning that thousands of animation trajectories must be generated, eventually in real time to ensure interaction capabilities. Our vision is that, while automating the animation process is obviously required here to manage complexity, there is also an absolute need for intelligent authoring tools that let designers control, script or devise the higher-level aspects of populated scenes: exhibited population behaviours, density, reactions to events, etc. Ten years from now, we aim for tools that combine the authoring and simulation aspects of our problem, and let designers work in the most intuitive manner. Typically working from a “palette” of examples of typical populations and behaviours, we are aiming for virtual population design processes which are as simple as “I would like to populate my virtual railway station in the style of the New York central station at rush hour (full of commuters), or in the style of Paris Montparnasse station on a week-end (with more families, and different kinds of shopping activities)”.

Long term scientific objective:

Axis A2 tackles the challenge of building and conveying lively, complex, realistic virtual environments populated by many characters. It addresses the challenges C1 and C2 (extending to complex and collective situations in comparison with the previous axis). Our objective is twofold. First, we want to simulate the motion of crowds of characters. Second, we want to deliver tools for authoring crowded scenes. At the interface of these two objectives, we also explore the question of animating crowds, i.e., generating full-body animations for crowd characters (crowd simulation works with abstraction levels where bodies are not represented). We target a high level of visual realism. To this end, our objective is to be capable of simulating and authoring virtual crowds so that they resemble real ones: e.g., working from examples of crowd behaviours and tuning simulation and animation processes accordingly. We also want to progressively erase the distinction that exists today between crowd simulation and crowd animation. This is critical in the treatment of complex, body-to-body interactions. By setting this objective, we keep the application field for our crowd simulation techniques wide open, as we satisfy both the need for visual realism of entertainment applications and the need for data assimilation capabilities of real crowd management applications.

3.2.3 Axis A3 - New Immersive Experiences

Summary:

New immersive experiences: in an application framework, we aim at devising relevant evaluation metrics and demonstrating the performance of VirtUs simulations, as well as the compliance and transferability of new immersive experiences to reality

Vision:

We have highlighted through some scenarios the high potential of VirtUs simulators to provide new immersive experiences, and to reach new horizons in terms of scientific or technological applications. Our vision is that new immersive capabilities, and especially new kinds of immersive interactions with realistic groups of virtual characters, can lead to radical changes in various domains, such as the experimental process to study human behaviour in fields like socio-psychology, or entertainment, to tell stories across new media. Ten years from now, immersive simulators like the VirtUs ones will have demonstrated their capacity to reach new levels of realism and open such possibilities, offering experiences where users can perceive the context in which immersive experiences take place, can understand and interpret the characters' actions happening around them, and can have their belief captured by the ongoing story conveyed by the simulated world.

Long term scientific objective:

Axis A3 addresses the challenge of designing novel immersive experiences, which builds on the innovations from the first two research axes to design VirtUs simulators placing real users in close interaction with virtual characters. We design our immersive experiences with two scientific objectives in mind. Our first objective is to observe users in a new generation of immersive experiences where they will move, behave and interact as in everyday life, so that observations made in VirtUs simulators will enable us to study increasingly complex and ecological situations. This could be a step change in the technologies to study human behaviours, which we apply to our own research objects developed in Axes 1 & 2. Our second objective is to benefit from this face-to-face interaction with VirtUs characters to evaluate their capabilities of presenting more subtle behaviors (e.g., reactivity, expressiveness), and to improve immersion protocols. But evaluation methodologies are yet to be invented. Long-term perspectives also encompass a better understanding of how immersive contents are perceived, not only at the low level of image saliency (to compose scenes and contents that are more visually perceptible), but also at higher levels related to cognition and emotion (to compose scenes and contents with a meaning and an emotional impact). By embracing a broader vision, we expect in the future the interactive design of more compelling and enjoyable user experiences.

4 Application domains

In this section we detail how each research axis of the VirtUs team contributes to different application areas. We have identified four application domains, which we detail in the subsections below.

4.1 Computer Graphics, Computer Animation and Virtual Reality

Our research program aims at increasing the action and reaction capabilities of the virtual characters that populate our immersive simulations. Therefore, we contribute to techniques that enable us to animate virtual characters, to develop their autonomous behaviour capabilities, to control their visual representations, but also, more related to immersive applications, to develop their interaction capabilities with a real user, and finally to adapt all these techniques to the limited computational time budget imposed by a real time, immersive and interactive application. These contributions are at the interface of computer graphics, computer animation and virtual reality.

Our research also aims at proposing tools to stage a set of characters in relation to a specific environment. Our contributions aim at making intuitive the tasks of scene creation while ensuring an excellent coherence between the visible behaviour of the characters and the universe in which they evolve. These contributions have applications in the field of computer animation.

4.2 Cinematography and Digital Storytelling

Our research targets the understanding of complex movie sequences, and more specifically how low- and high-level features are spatially and temporally orchestrated to create a coherent and aesthetic narrative. This understanding then feeds computational models (through probabilistic encodings but also by relying on learning techniques), which are designed with the capacity to regenerate new animated contents, through automated or interactive approaches, e.g., exploring stylistic variations. This finds applications in 1) the analysis of film/TV/Broadcast contents, augmenting the nature and range of knowledge potentially extracted from such contents, with interest from major content providers (Amazon, Netflix, Disney) and 2) the generation of animated contents, with interest from animation companies, film previsualisation, or game engines.

Furthermore, our research focusses on the extraction of lighting features from real or captured scenes, and the simulation of these lightings in new virtual or augmented reality contexts. The underlying scientific challenges are complex and relate to understanding, from images, where lights are, how they interact with the scene and how light propagates. Understanding this light staging and reproducing it in virtual environments finds many applications in the film and media industries, which want to seamlessly integrate virtual and real contents together through spatially and temporally coherent light setups.

4.3 Human motion, Crowd dynamics and Pedestrian behaviours

Our research program contributes to crowd modelling and simulation, including pedestrian simulation. Crowd simulation has various applications ranging from entertainment (visual effects for cinema, animated and interactive scenes for video games) to architecture and security (dimensioning of places intended to receive the public, flow management). Our research program also aims to immerse users in these simulations in order to study their behaviour, opening the way to applications for research on human behaviour, including human movement sciences, or the modeling of pedestrians and crowds.

4.4 Psychology and Perception

One important dimension of our research consists in identifying and measuring what users of our immersive simulators are able to perceive of the virtual situations. We are particularly interested in understanding how the content presented to them is interpreted, how they react to different situations, what are the elements that impact their reactions as well as their immersion in the virtual world, and how all these elements differ from real situations. Such challenges are directly related to the fields of Psychology and Perception, which are historically associated with Virtual Reality.

5 Highlights of the year

Official start of the VirtUs team

The year 2022 marks above all the official start of the VirtUs team, which was officially created on 1 July 2022.

5.1 Project activities

Final evaluation of the H2020 CrowdBot project

The CrowdBot project ended on 31 December 2021, and its final evaluation by the European Commission was carried out in 2022. The project was initiated and coordinated by Julien Pettré (implemented in the Inria Rainbow team). The evaluation, whose outcome was very positive, marks the end of an epic journey that has greatly influenced the research carried out within the team, in particular towards techniques for modelling and predicting pedestrian behaviour, the use of virtual reality to collect data on human-robot interaction, and the evaluation of the safety level of autonomous systems during such interactions.

Kick-off of the ANR OLICOW project

The team is a partner in the ANR OLICOW project which started on 1 December 2022. In this project, the VirtUs team will address the topics of behaviour prediction and calibration of simulation models by assimilation of real data.

End of project H2020 INVICTUS

The INVICTUS project, coordinated by M. Christie, reached its official end on 31 December 2022. The final evaluation of the project will take place at the beginning of 2023.

5.2 Awards

Etoile de l'Europe:

Julien Pettré was awarded an “Etoile de l'Europe” by the Ministère de l'enseignement supérieur et de la Recherche. Link to the Ministry's information website.

Eurographics - Best Paper Award, Honorable Mention:

Adèle Colas was awarded an Honorable Mention at Eurographics for her work Interaction Fields: Intuitive Sketch-based Steering Behaviors for Crowd Simulation.

IEEE Virtual Reality - TVCG Best Journal Papers Nominees:

Tairan Yin was nominated for the Best Journal Award for his work The One-Man-Crowd: Single User Generation of Crowd Motions Using Virtual Reality.

Outstanding Reviewer Award:

Ludovic Hoyet obtained the Outstanding Reviewer Award at the ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games 2022 conference.

6 New software and platforms

6.1 New software

6.1.1 AvatarReady

- Name: A unified platform for the next generation of our virtual selves in digital worlds

- Keywords: Avatars, Virtual reality, Augmented reality, Motion capture, 3D animation, Embodiment

- Scientific Description: AvatarReady is an open-source tool (AGPL) written in C#, providing a plugin for the Unity 3D software to facilitate the use of humanoid avatars for mixed reality applications. Due to the current complexity of semi-automatically configuring avatars coming from different origins, and using different interaction techniques and devices, AvatarReady aggregates several industrial solutions and results from the academic state of the art to propose a simple and fast way to use humanoid avatars in mixed reality in a seamless way. For example, it is possible to automatically configure avatars from different libraries (e.g., Rocketbox, Character Creator, Mixamo), as well as to easily use different avatar control methods (e.g., motion capture, inverse kinematics). AvatarReady is also organized in a modular way so that scientific advances can be progressively integrated into the framework. AvatarReady is furthermore accompanied by a utility to generate ready-to-use avatar packages that can be used on the fly, as well as a website to display them and offer them for download to users.

- Functional Description: AvatarReady is a Unity tool to facilitate the configuration and use of humanoid avatars for mixed reality applications. It comes with a utility to generate ready-to-use avatar packages and a website to display them and offer them for download.

- URL:

- Authors: Ludovic Hoyet, Fernando Argelaguet Sanz, Adrien Reuzeau

- Contact: Ludovic Hoyet

6.1.2 PyNimation

- Keywords: Moving bodies, 3D animation, Synthetic human

- Scientific Description: PyNimation is a Python-based open-source (AGPL) software for editing motion capture data. It was initiated because of a lack of open-source software able to process different types of motion capture data in a unified way, which typically forces animation pipelines to rely on several commercial software packages. For instance, motions are captured with one software package, retargeted using another, then edited using a third, etc. The goal of PyNimation is therefore to bridge the gap in the animation pipeline between motion capture software and final game engines, by handling different types of motion capture data in a unified way, providing standard and novel motion editing solutions, and exporting motion capture data in formats compatible with common 3D game engines (e.g., Unity, Unreal). Its goal is also to support our research efforts in this area, and it is therefore used, maintained, and extended to progressively include novel motion editing features, as well as to integrate the results of our research projects. In the short term, our goal is to further extend its capabilities and to share it more widely with the animation/research community.

- Functional Description: PyNimation is a framework for editing, visualizing and studying skeletal 3D animations; it was more particularly designed to process motion capture data. It stems from the wish to utilize Python’s data science capabilities and ease of use for human motion research.

  In its version 1.0, PyNimation offers the following functionalities, which are expected to evolve with the development of the tool:

  - Import / Export of FBX, BVH, and MVNX animation file formats

  - Access and modification of skeletal joint transformations, as well as a certain number of functionalities to manipulate these transformations

  - Basic features for human motion animation (under development, but including e.g. different methods of inverse kinematics, editing filters, etc.)

  - Interactive visualisation in OpenGL for animations and objects, including the possibility to animate skinned meshes

- URL:

- Authors: Ludovic Hoyet, Robin Adili, Benjamin Niay, Alberto Jovane

- Contact: Ludovic Hoyet
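
To give a concrete idea of what accessing and modifying skeletal joint transformations involves, the following minimal sketch composes local joint transforms into global ones along a hierarchy (forward kinematics). It uses NumPy only and does not reproduce the PyNimation API: the function names, the three-joint chain and its dimensions are assumptions made for illustration.

```python
import numpy as np

def rot_z(degrees):
    """Homogeneous 4x4 rotation about the Z axis."""
    a = np.radians(degrees)
    c, s = np.cos(a), np.sin(a)
    m = np.eye(4)
    m[:2, :2] = [[c, -s], [s, c]]
    return m

def translate(x, y, z):
    """Homogeneous 4x4 translation."""
    m = np.eye(4)
    m[:3, 3] = [x, y, z]
    return m

def global_transforms(parents, local):
    """Compose local joint transforms down the hierarchy.

    parents[i] is the index of the parent of joint i (-1 for the root);
    parents are assumed to be listed before their children.
    """
    world = [None] * len(parents)
    for i, p in enumerate(parents):
        world[i] = local[i] if p < 0 else world[p] @ local[i]
    return world

# Hypothetical chain: shoulder -> elbow -> wrist, each bone 1 unit long.
parents = [-1, 0, 1]
local = [
    rot_z(90),                          # shoulder rotated by 90 degrees
    translate(1, 0, 0) @ rot_z(-90),    # elbow offset, bent back by 90 degrees
    translate(1, 0, 0),                 # wrist offset
]
world = global_transforms(parents, local)
wrist_position = world[2][:3, 3]
```

Editing a motion then amounts to changing entries of `local` (for instance the elbow rotation) and recomposing the global transforms for each frame.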

7 New results

7.1 NextGen Virtual Characters

7.1.1 Warping Character Animations using Visual Motion Features

Participants: Julien Pettré [contact], Marc Christie, Ludovic Hoyet, Alberto Jovane, Anne-Hélène Olivier, Pierre Raimbaud, Katja Zibrek.

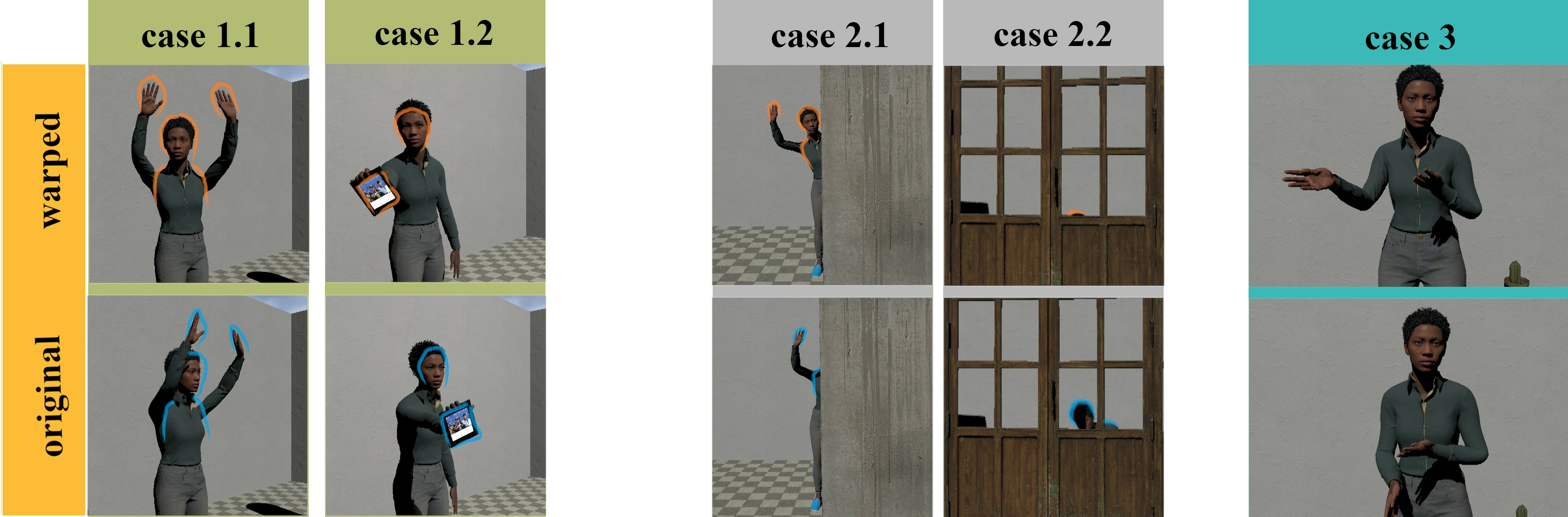

Despite the wide availability of large motion databases, and recent advances in exploiting them to create tailored contents, artists still spend tremendous efforts in editing and adapting existing character animations to new contexts. This motion editing problem is a well-addressed research topic. Yet only a few approaches have considered the influence of the camera angle, and the resulting visual features it yields, as a means to control and warp a character animation. This work [3] proposes the design of viewpoint-dependent motion warping units that perform subtle updates on animations through the specification of visual motion features such as visibility, or spatial extent. We express the problem as a specific case of visual servoing, where the warping of a given character motion is regulated by a number of visual features to enforce. Results demonstrate the relevance of the approach for different motion editing tasks and its potential to empower virtual characters with attention-aware communication abilities, as displayed in Figure 3. This work was performed in collaboration with Claudio Pacchierotti from the Rainbow team.
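
As an illustration of the visual servoing principle mentioned above, the sketch below applies the classical control law, in which an error between current and desired visual features is reduced through the pseudo-inverse of the feature Jacobian. It is a toy example under stated assumptions, not the warping units of the paper: the single feature (the image position of one point, e.g. a hand, under a pinhole camera), the gain, and all function names are hypothetical.

```python
import numpy as np

def project(point, focal=1.0):
    """Pinhole projection of a 3D point expressed in the camera frame (z > 0)."""
    return focal * point[:2] / point[2]

def numerical_jacobian(feature, q, eps=1e-6):
    """Finite-difference Jacobian of the visual feature with respect to q."""
    f0 = feature(q)
    jac = np.zeros((f0.size, q.size))
    for k in range(q.size):
        dq = np.zeros_like(q)
        dq[k] = eps
        jac[:, k] = (feature(q + dq) - f0) / eps
    return jac

def servo_step(feature, q, target, gain=0.5):
    """One iteration of q <- q - gain * pinv(J) @ (s - s*)."""
    error = feature(q) - target
    jac = numerical_jacobian(feature, q)
    return q - gain * np.linalg.pinv(jac) @ error

# Hypothetical setup: move a hand so that it appears at a desired image position.
hand = np.array([0.4, -0.2, 2.0])   # 3D position in the camera frame
target = np.array([0.0, 0.1])       # desired image coordinates
for _ in range(30):
    hand = servo_step(project, hand, target)
final_error = np.linalg.norm(project(hand) - target)
```

The pseudo-inverse yields the smallest change of the controlled parameters that reduces the feature error, which is consistent with the idea of performing subtle updates on an existing animation.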

7.1.2 UnderPressure: Deep Learning for Foot Contact Detection, Ground Reaction Force Estimation and Footskate Cleanup

Participants: Ludovic Hoyet [contact], Lucas Mourot.

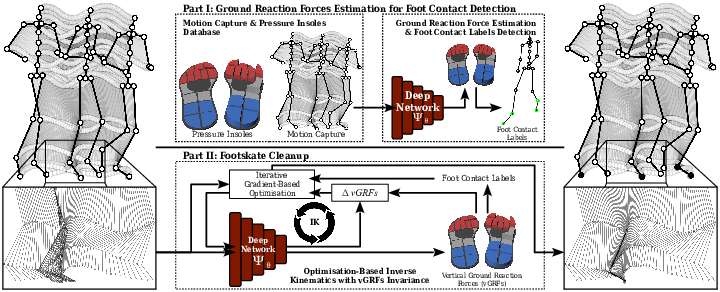

In this work, we leverage UnderPressure, a novel publicly available dataset of motion capture synchronized with pressure insoles data, to estimate vertical Ground Reaction Forces (vGRFs) from motion data with a deep neural network and derive foot contact labels. We further clean up footskate artefacts through an optimisation-based inverse kinematics algorithm while enforcing vGRFs invariance.

Human motion synthesis and editing are essential to many applications like video games, virtual reality, and film post-production. However, they often introduce artefacts in motion capture data, which can be detrimental to the perceived realism. In particular, footskating is a frequent and disturbing artefact, which requires knowledge of foot contacts to be cleaned up. Current approaches to obtain foot contact labels rely either on unreliable threshold-based heuristics or on tedious manual annotation. In this work [5], we address automatic foot contact label detection from motion capture data with a deep learning based method. To this end, we first publicly release UnderPressure, a novel motion capture database labelled with pressure insoles data serving as reliable knowledge of foot contact with the ground, which we used to design and train a deep neural network to estimate ground reaction forces exerted on the feet from motion data and derive accurate foot contact labels (Figure 4). The evaluation of our model shows that we significantly outperform heuristic approaches based on height and velocity thresholds and that our approach is much more robust when applied on motion sequences suffering from perturbations like noise or footskate. We further propose a fully automatic workflow for footskate cleanup: foot contact labels are first derived from estimated ground reaction forces. Then, footskate is removed by solving foot constraints through an optimisation-based inverse kinematics (IK) approach that ensures consistency with the estimated ground reaction forces. Beyond footskate cleanup, both the database and the method we propose could help to improve many approaches based on foot contact labels or ground reaction forces, including inverse dynamics problems like motion reconstruction and learning of deep motion models in motion synthesis or character animation. This work was performed in collaboration with Pierre Hellier and François Le Clerc from InterDigital.
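
The two ways of obtaining contact labels discussed above can be contrasted in a short sketch. The first function is the kind of height and velocity threshold heuristic used as a baseline; the second derives labels from vertical ground reaction forces by thresholding, which is one plausible reading and not necessarily the exact rule of the paper. All threshold values, units and sample data are assumptions chosen for illustration, and the deep network that estimates the forces is not reproduced here.

```python
import numpy as np

def contacts_from_thresholds(foot_height, foot_speed,
                             max_height=0.05, max_speed=0.2):
    """Baseline heuristic: a foot is in contact when it is both low and slow.

    Heights in metres and speeds in metres per second (assumed units).
    """
    foot_height = np.asarray(foot_height)
    foot_speed = np.asarray(foot_speed)
    return (foot_height < max_height) & (foot_speed < max_speed)

def contacts_from_vgrf(vgrf, body_weight, min_fraction=0.05):
    """Contact when the vertical force exceeds a fraction of body weight.

    vgrf and body_weight are in newtons; min_fraction is a hypothetical value.
    """
    return np.asarray(vgrf) > min_fraction * body_weight

# Four hypothetical frames for one foot.
height = [0.02, 0.03, 0.10, 0.04]
speed = [0.05, 0.50, 0.10, 0.10]
vgrf = [400.0, 20.0, 0.0, 350.0]

heuristic_labels = contacts_from_thresholds(height, speed)
force_labels = contacts_from_vgrf(vgrf, body_weight=700.0)
```

The heuristic depends directly on foot positions and velocities, so noise or footskate in the input motion easily flips its labels, which is the weakness that force-based labels are meant to address.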

7.1.3 A new framework for the evaluation of motion datasets through motion matching techniques

Participants: Julien Pettré [contact], Vicenzo Abichequer Sangalli, Marc Christie, Ludovic Hoyet.

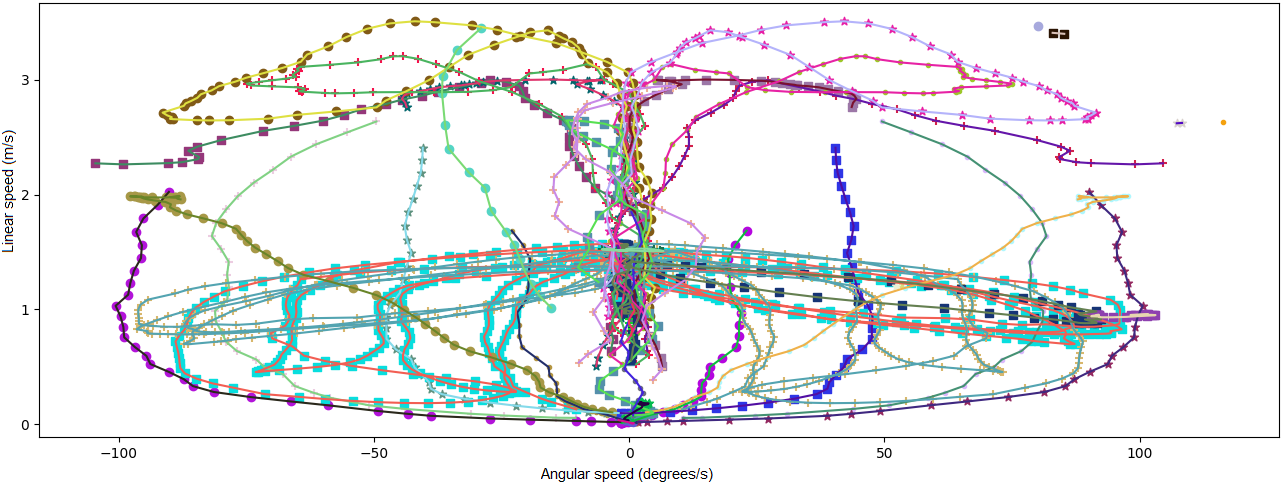

The many different motions that compose one of the datasets used in this work. Each motion clip is represented in a different color and symbol. Such representations are exploited to give the user an idea of how motions are distributed in the dataset.

Analyzing motion data is a critical step when building meaningful locomotive motion datasets. This can be done by labeling motion capture data and inspecting it, through a planned motion capture session or by carefully selecting locomotion clips from a public dataset. These analyses, however, have no clear definition of coverage, making it harder to diagnose when something goes wrong, such as a virtual character not being able to perform an action or not moving at a given speed. This issue is compounded by the large amount of information present in motion capture data, which poses a challenge when trying to interpret it. This work [9] provides a visualization and an optimization method to streamline the process of crafting locomotive motion datasets (Figure 5). It provides a more grounded approach towards locomotive motion analysis by calculating different quality metrics, such as: coverage in terms of both linear and angular speeds, frame use frequency in each animation clip, deviation from the planned path, number of transitions, number of used vs. unused animations, and transition cost. By using these metrics as a means of comparison between motion datasets, our approach provides a less subjective alternative for the modification and analysis of motion datasets, while improving interpretability.
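To make the notion of speed coverage more concrete, here is a minimal sketch of one possible way to quantify it: the (linear speed, angular speed) plane is divided into a grid, and coverage is the fraction of cells reached by at least one frame. This is an assumption made for illustration; the function name, the speed ranges and the grid resolution are hypothetical and are not the metric definition used in the work.

```python
def speed_coverage(samples, lin_max=3.0, ang_max=3.0, bins=6):
    """Fraction of (linear, angular) speed cells hit by at least one frame.

    samples: iterable of (linear_speed, angular_speed) pairs, e.g. in m/s and rad/s.
    lin_max, ang_max, bins: illustrative range and resolution (assumptions).
    """
    occupied = set()
    for lin, ang in samples:
        # Ignore frames outside the analysed range.
        if not (0.0 <= lin <= lin_max and -ang_max <= ang <= ang_max):
            continue
        i = min(int(lin / lin_max * bins), bins - 1)
        j = min(int((ang + ang_max) / (2 * ang_max) * bins), bins - 1)
        occupied.add((i, j))
    return len(occupied) / (bins * bins)
```

A low value then points to speed combinations that the dataset cannot reproduce, which is the kind of diagnosis the metrics above aim to support.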

7.1.4 Impact of Self-Contacts on Perceived Pose Equivalences

Participants: Ludovic Hoyet [contact].

Human characters with varying morphologies performing poses with several self-contacts. Characters with similar poses but different body shapes (columns) can have slightly different spatial relationships between body segments, in particular self-contacts (e.g., contact between the left arm and the flank appearing for the middle characters). In this work we explore which self-contacts are important to the meaning of the pose independently from other parameters such as body shape.

Defining equivalences between poses of different human characters is an important problem for imitation research, human pose recognition and deformation transfer. However, pose equivalence is subjective information that depends on context and on the morphology of the characters. A common hypothesis is that interactions between body surfaces, such as self-contacts, are important attributes of human poses, and are therefore consistently included in animation approaches aiming at retargeting human motions. However, some of these self-contacts are only present because of the morphology of the character and are not important to the pose, e.g. contacts between the upper arms and the torso during a standing A-pose. In this work [10], we conduct a first study towards the goal of understanding the impact of self-contacts between body surfaces on perceived pose equivalences. More specifically, we focus on contacts between the arms or hands and the upper body as displayed in Figure 6, which are frequent in everyday human poses. We conduct a study where we present to observers two models of a character mimicking the pose of a source character, one with the same self-contacts as the source, and one with one self-contact removed, and ask observers to select which model best mimics the source pose. We show that while poses with different self-contacts are considered different by observers in most cases, this effect is stronger for self-contacts involving the hands than for those involving the arms. This work was conducted in collaboration with Franck Multon of the MimeTIC team, and Jean Basset and Stefanie Wuhrer of the Morpheo team.

7.2 Populated Dynamic Worlds

7.2.1 A Survey on Reinforcement Learning Methods in Character Animation

Participants: Julien Pettré [contact].

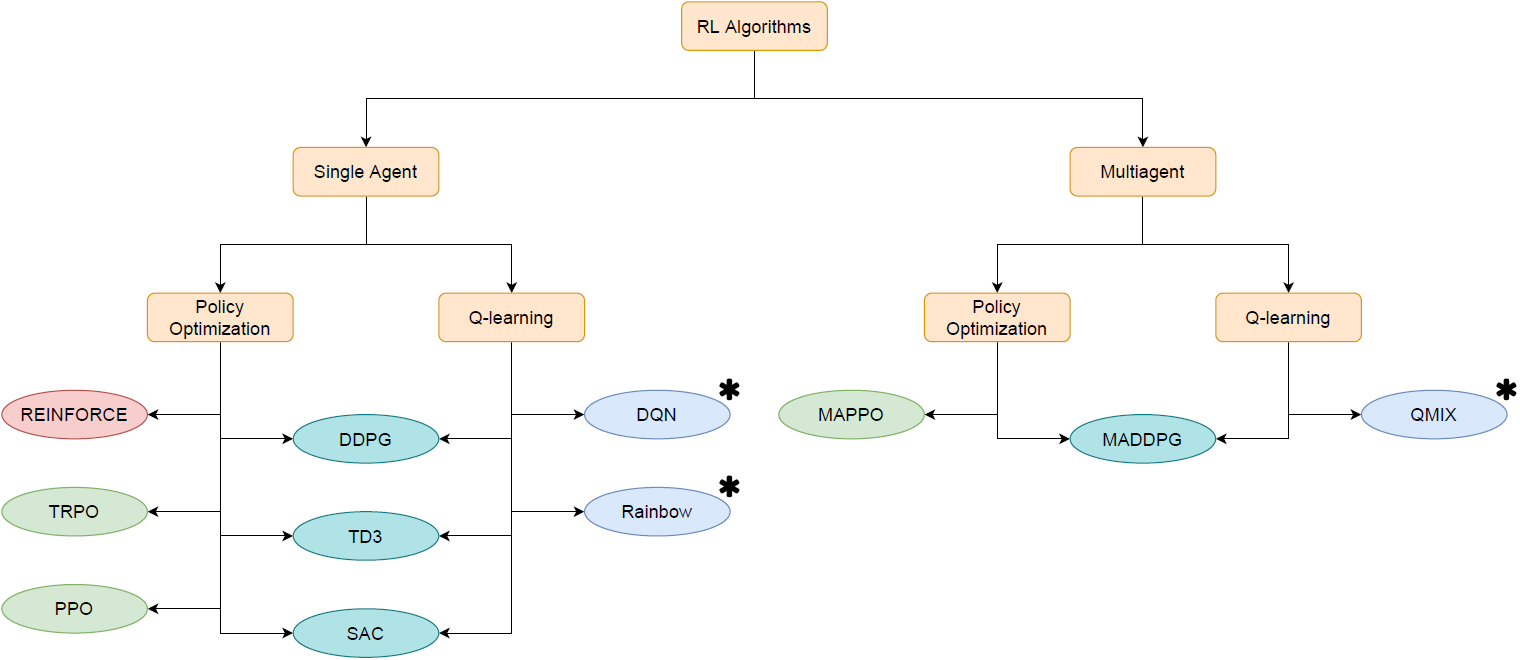

A diagram showing a taxonomy of the Reinforcement Learning algorithms described in this work. We focus on two divisions: single agent or multiagent, and policy-based or value-based. The colors of nodes correspond to whether the algorithm is on-policy (red), off-policy (blue), or in between (green). Algorithms marked with an asterisk (⁎) can only be used with discrete action spaces.

Reinforcement Learning is an area of Machine Learning focused on how agents can be trained to make sequential decisions and achieve a particular goal within an arbitrary environment. While learning, they repeatedly take actions based on their observation of the environment, and receive appropriate rewards which define the objective. This experience is then used to progressively improve the policy controlling the agent's behavior, typically represented by a neural network. This trained module can then be reused for similar problems, which makes this approach promising for the animation of autonomous, yet reactive characters in simulators, video games or virtual reality environments. We provide the community with a comprehensible survey [4] of modern Deep Reinforcement Learning (DRL) methods and discuss their possible applications in Character Animation, from skeletal control of a single, physically-based character to navigation controllers for individual agents and virtual crowds. The survey also describes the practical side of training DRL systems, comparing the different frameworks available to build such agents (Fig. 7).

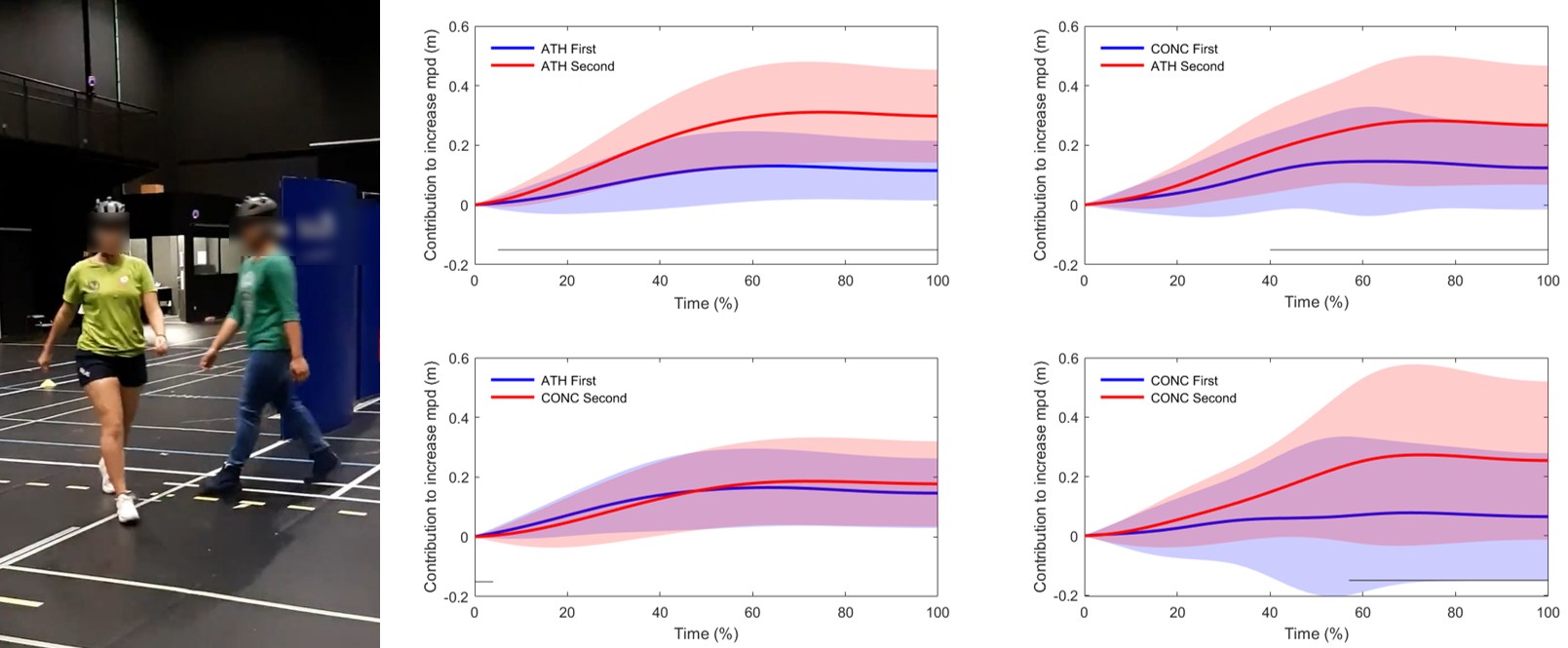

7.2.2 Analysis of emergent patterns in crossing flows of pedestrians reveals an invariant of ‘stripe’ formation in human data

Participants: Julien Pettré [contact], Pratik Mullick.

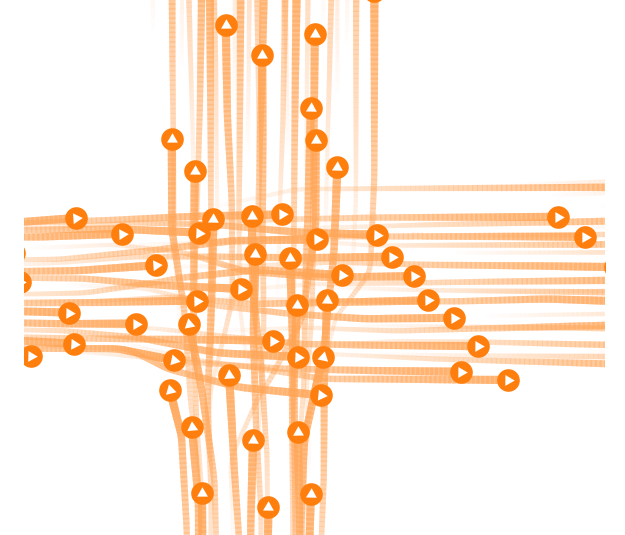

When two streams of pedestrians cross at an angle, striped patterns spontaneously emerge as a result of local pedestrian interactions. This clear case of self-organized pattern formation remains to be elucidated. In counterflows, with a crossing angle of 180 degrees, alternating lanes of traffic are commonly observed moving in opposite directions, whereas in crossing flows at an angle of 90 degrees, diagonal stripes have been reported. Naka (1977) hypothesized that stripe orientation is perpendicular to the bisector of the crossing angle. However, studies of crossing flows at acute and obtuse angles remain underdeveloped. We tested the bisector hypothesis in experiments on small groups (18-19 participants each) crossing at seven angles (30-degree intervals), and analyzed the geometric properties of stripes [8]. We present [6] two novel computational methods for analyzing striped patterns in pedestrian data: (i) an edge-cutting algorithm, which detects the dynamic formation of stripes and allows us to measure local properties of individual stripes; and (ii) a pattern-matching technique, based on the Gabor function, which allows us to estimate global properties (orientation and wavelength) of the striped pattern at a given time. We find an invariant property: stripes in the two groups are parallel and perpendicular to the bisector at all crossing angles. In contrast, other properties depend on the crossing angle: stripe spacing (wavelength), stripe size (number of pedestrians per stripe), and crossing time all decrease as the crossing angle increases from 30 degrees to 180 degrees, whereas the number of stripes increases with crossing angle. We also observe that the width of individual stripes is dynamically squeezed as the two groups cross each other. The findings thus support the bisector hypothesis at a wide range of crossing angles, although the theoretical reasons for this invariant remain unclear. The present results provide empirical constraints on theoretical studies and computational models of crossing flows.
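The bisector hypothesis can be turned into a one-line prediction. The sketch below is a worked illustration under an assumed convention, not the analysis code of the study: the first group is taken to walk along heading 0 degrees and the second along the crossing angle, so the bisector lies at half the crossing angle and the predicted stripes are perpendicular to it. The function name is hypothetical.

```python
def predicted_stripe_orientation(crossing_angle_deg):
    """Stripe orientation (degrees, modulo 180) predicted by the bisector hypothesis.

    Assumed convention: group 1 walks along heading 0, group 2 along
    heading `crossing_angle_deg`; orientations are undirected lines.
    """
    bisector = crossing_angle_deg / 2.0
    # Stripes are perpendicular to the bisector of the crossing angle.
    return (bisector + 90.0) % 180.0
```

Under this convention, a crossing angle of 90 degrees gives stripes at 135 degrees (diagonal stripes), and a counterflow at 180 degrees gives an orientation of 0 degrees, i.e. lanes parallel to the walking directions, which matches the two cases recalled above.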

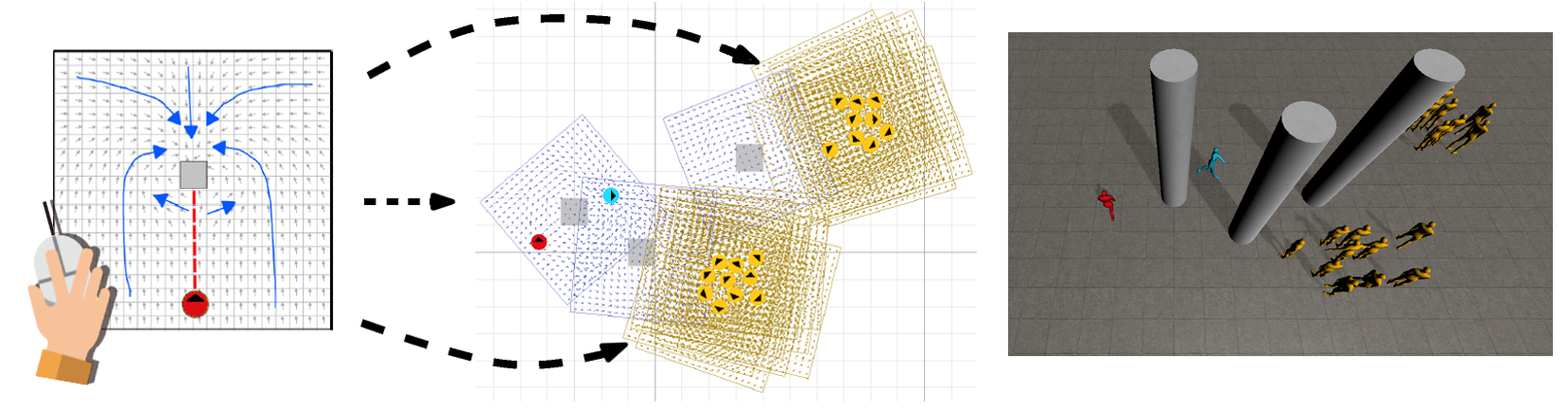

7.2.3 Interaction Fields: Intuitive Sketch-based Steering Behaviors for Crowd Simulation

Participants: Julien Pettré [contact], Ludovic Hoyet, Adèle Colas, Katja Zibrek, Anne-Hélène Olivier.

Photograph of our experimental set-up to study crossing flows. Agents participating in our experiment are shown in this photograph for a typical trial with crossing angle 120 degrees. The three stages of the trial are shown here, viz. (left) before crossing (middle) during crossing and (right) after crossing.

The real-time simulation of human crowds has many applications. In a typical crowd simulation, each person (“agent”) in the crowd moves towards a goal while adhering to local constraints. Many algorithms exist for specific local ‘steering’ tasks such as collision avoidance or group behavior. However, these do not easily extend to completely new types of behavior, such as circling around another agent or hiding behind an obstacle. They also tend to focus purely on an agent's velocity without explicitly controlling its orientation. Our paper [2] presents a novel sketch-based method for modelling and simulating many steering behaviors for agents in a crowd. Central to this is the concept of an interaction field (IF): a vector field that describes the velocities or orientations that agents should use around a given ‘source’ agent or obstacle. An IF can also change dynamically according to parameters, such as the walking speed of the source agent. IFs can be easily combined with other aspects of crowd simulation, such as collision avoidance. Using an implementation of IFs in a real-time crowd simulation framework, we could demonstrate the capabilities of IFs in various scenarios (e.g., hide and seek scenarios as illustrated in Figure 9). This includes game-like scenarios where the crowd responds to a user-controlled avatar. We also present an interactive tool that computes an IF based on input sketches. This IF editor lets users intuitively and quickly design new types of behavior, without the need for programming extra behavioral rules. We thoroughly evaluate the efficacy of the IF editor through a user study, which demonstrates that our method enables non-expert users to easily enrich any agent-based crowd simulation with new agent interactions.
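The idea of an interaction field can be illustrated with a toy example. In the sketch below, the field is given analytically rather than computed from a sketch as in the IF editor, and it encodes the "circling around another agent" behaviour mentioned above. All names (`circling_field`, `step`) and the Euler integration are assumptions made for illustration; they do not describe the actual implementation.

```python
import math


def circling_field(rel_x, rel_y, speed=1.0):
    """Velocity prescribed at a position given relative to the source agent.

    The returned vector is tangent to the circle centred on the source,
    so an agent following it orbits the source counter-clockwise.
    """
    r = math.hypot(rel_x, rel_y)
    if r == 0.0:
        return (0.0, 0.0)  # undefined direction exactly on the source
    return (-rel_y / r * speed, rel_x / r * speed)


def step(agent_pos, source_pos, field, dt):
    """Advance an agent by one time step using the velocity read from the field."""
    vx, vy = field(agent_pos[0] - source_pos[0], agent_pos[1] - source_pos[1])
    return (agent_pos[0] + vx * dt, agent_pos[1] + vy * dt)
```

Because the field is expressed relative to the source, the same definition keeps working when the source itself moves, which is what makes such fields easy to attach to a user-controlled avatar.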

7.2.4 Dynamic Combination of Crowd Steering Policies Based on Context

Participants: Ludovic Hoyet [contact].

[Figure 10 panels: PL, RVO, PL and RVO]

Simulating crowds requires controlling a very large number of trajectories of characters and is usually performed using crowd steering algorithms. The question of choosing the right algorithm with the right parameter values is of crucial importance given the large impact on the quality of results. In this work [1], we study the performance of a number of steering policies (i.e., simulation algorithm and its parameters) in a variety of contexts, resorting to an existing quality function able to automatically evaluate simulation results. This analysis allows us to map contexts to the performance of steering policies. Based on this mapping, we demonstrate that distributing the best performing policies among characters improves the resulting simulations. Furthermore, we also propose a solution to dynamically adjust the policies, for each agent independently and while the simulation is running, based on the local context each agent is currently in. We demonstrate significant improvements of simulation results compared to previous work that would optimize parameters once for the whole simulation, or pick an optimized, but unique and static, policy for a given global simulation context (Figure 10). This work was performed in collaboration with Julien Pettré from the Virtus team.
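The per-agent, context-dependent selection described above can be sketched as a lookup from a local context to the policy that performed best in that context. The example below is purely illustrative: the two context labels, the neighbour-count criterion, the policy names and the mapping itself are placeholders, not results or definitions from this work.

```python
import math

# Hypothetical mapping from context to best-performing policy (placeholder values).
BEST_POLICY_BY_CONTEXT = {"sparse": "policy_A", "dense": "policy_B"}


def local_context(agent_pos, other_positions, radius=2.0, dense_threshold=3):
    """Classify the surroundings of one agent from its number of close neighbours."""
    neighbours = sum(
        1 for p in other_positions if math.dist(agent_pos, p) <= radius
    )
    return "dense" if neighbours >= dense_threshold else "sparse"


def select_policy(agent_pos, other_positions):
    """Pick, for this agent and this time step, the policy mapped to its context."""
    return BEST_POLICY_BY_CONTEXT[local_context(agent_pos, other_positions)]
```

Calling `select_policy` for every agent at every simulation step yields a different policy per agent that changes as the agent moves from one context to another, as opposed to a single static choice for the whole simulation.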

7.2.5 Towards new models of collision avoidance for specific populations

Participants: Anne-Hélène Olivier [contact].

In this research topic, we are interested in identifying the interaction behavior of specific populations such as athletes who have sustained a previous concussion [7], persons with chronic low back pain [15, 16], as well as powered wheelchair users [14, 17, 13]. Understanding these specificities helps us adapt our crowd simulation models and provide more variety.

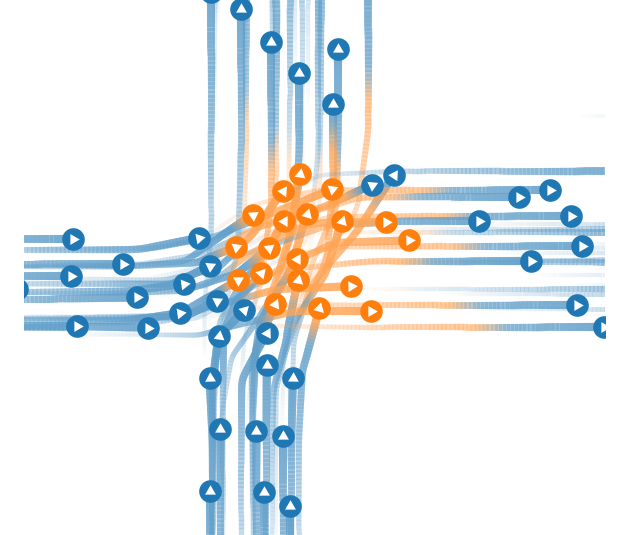

Left: Illustration of the real world image of two athletes interacting within the experiment to understand the effect of concussion on visuo-motor strategies. Right: Relative contributions to solve the collision depending on the group of athletes interacting. When 2 concussed athletes are involved in the interaction, the variability is increased.

Individuals who have sustained a concussion often display associated balance control deficits and visuomotor impairments despite being cleared by a physician to return to sport. Such visuomotor impairments can be highlighted in collision avoidance tasks that involve a mutual adaptation between two walkers. However, studies have yet to challenge athletes with a previous concussion during an everyday collision avoidance task, following return to sport. In this project, performed in the frame of the Inria BEAR Associate team, we investigated whether athletes with a previous concussion display associated behavioural changes during a 90 degree collision avoidance task with an approaching pedestrian (Cf. Figure 11, Left). To this end, thirteen athletes (ATH; 9 females, 23 ± 4 years) and 13 athletes with a previous concussion (CONC; 9 females, 22 ± 3 years, concussion 6 months) walked at a comfortable walking speed along a 12.6 m pathway while avoiding another athlete on a 90 degree collision course. Each participant randomly interacted with individuals from the same group 20 times and interacted with individuals from the opposite group 21 times. Minimum predicted distance (mpd) was used to examine collision avoidance behaviours between ATH and CONC groups. The overall progression of mpd(t) did not differ between groups (p>.05). During the collision avoidance task, previously concussed athletes contributed less when passing second compared to their peers (p<.001). When two previously concussed athletes were on a collision course, there was a greater amount of variability resulting in inappropriate adaptive behaviours (Cf. Figure 11, Right). Although successful at avoiding a collision with an approaching athlete, previously concussed athletes exhibit behavioural changes manifesting in riskier behaviours. The current findings suggest that previously concussed athletes exhibit behavioural changes even after being cleared to return to sport, which may increase their risk of a subsequent injury when playing.
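As a reminder of what the mpd variable measures, the sketch below computes a minimum predicted distance at a given instant under the usual assumption that both walkers keep their current velocity. It is a generic illustration of this kind of metric, not the processing pipeline of the study; the function name and argument layout are hypothetical, and restricting the prediction to future times is an assumption.

```python
import math


def minimum_predicted_distance(p1, v1, p2, v2):
    """Closest future distance between two walkers moving at constant velocity.

    p1, p2: current 2D positions (m); v1, v2: current 2D velocities (m/s).
    """
    dpx, dpy = p2[0] - p1[0], p2[1] - p1[1]   # relative position
    dvx, dvy = v2[0] - v1[0], v2[1] - v1[1]   # relative velocity
    dv2 = dvx * dvx + dvy * dvy
    # Time of closest approach, clamped so that only the future is considered.
    tau = 0.0 if dv2 == 0.0 else max(0.0, -(dpx * dvx + dpy * dvy) / dv2)
    return math.hypot(dpx + dvx * tau, dpy + dvy * tau)
```

Evaluating this quantity at every time step gives the mpd(t) curve: a value close to zero means that a collision would occur without adaptation, and an increase over time reflects the avoidance manoeuvres performed by the walkers.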

We have also conducted experiments with persons suffering from chronic non-specific low back pain (cNSLBP) (Cf. Figure 12, Left). cNSLBP is characterized by symptoms without clear patho-anatomical causes and has been identified as one of the leading global causes of disability. The majority of clinical trials assess cNSLBP using scales or questionnaires reporting an influence of cognitive, emotional and behavioral factors. Previous studies have evaluated the motor consequences of cNSLBP using biomechanical analysis, showing alterations in the kinematics and dynamics of locomotion. However, few studies have evaluated cNSLBP participants in ecological situations, such as walking with obstacles or avoidance, allowing a simultaneous evaluation of cognitive, perceptual and motor components in a situation closer to the one encountered in daily life. In this context, using a horizontal aperture crossing paradigm, we aimed at (1) understanding the effect of cNSLBP on action strategies and (2) identifying factors that may influence these decisions. Healthy adults (N=15) and cNSLBP adults (N=15) performed a walking protocol composed of 3 random blocks of 19 trials with apertures ranging from 0.9 to 1.8 times shoulder width. The obstacle dimension that causes an individual to change their actions (shoulder rotation, velocity, trunk stability) is referred to as the Critical Point (CP). Results showed that the average CP in the healthy group was significantly larger than that of the cNSLBP group. This analysis of shoulder data is a first attempt to investigate the influence of cNSLBP on motor behavior and decisions in an ecological paradigm. Results show a slightly riskier behavior in patients, who have a smaller CP. Future work is required to study the walking speed profile and to relate behavioral data to variables that can influence pain perception (intensity, duration, kinesiophobia, fear avoidance beliefs, catastrophism and psychological inflexibility).

Finally, we studied the interaction behaviour when a wheelchair user interacts with other pedestrians, both in the lab and in a museum (Musée des Beaux Arts and Musée de Bretagne of Rennes) (Cf. Figure 12, Middle and Right). In the museum study, we also investigated the efficacy of a driving assistance system on the users' experience. This work is performed in collaboration with Marie Babel and Emilie Leblong in the Rainbow team as well as with the Pôle Saint-Hélier. Preliminary results showed the positive effect of the driving assistance system on various dimensions such as usability, acceptability, learning and social influence. In addition, observation data showed the importance of the social context in the regulation of proximity.

As a short note, we also took an interest in the effect of ageing on the body segment strategies used to initiate walking motion during street-crossing [18].

7.3 New Immersive Experiences

7.3.1 The Stare-in-the-Crowd Effect in Virtual Reality

Participants: Anne-Hélène Olivier [contact], Marc Christie, Ludovic Hoyet, Alberto Jovane, Julien Pettré, Pierre Raimbaud, Katja Zibrek.

Nonverbal cues are paramount in real-world interactions. Among these cues, gaze has received much attention in the literature. In particular, previous work has shown a search asymmetry between directed and averted gaze towards the observer using photographic stimuli, with faster detection and longer fixation towards directed gaze by the observer. This is known as the stare-in-the-crowd effect. In this work [12], we investigate whether the stare-in-the-crowd effect is preserved in Virtual Reality (VR). To this end, we designed a within-subject experiment where 30 human users were immersed in a virtual environment in front of an audience of 11 virtual agents (Figure 13), following 4 different gaze behaviours. We analysed the user's gaze behaviour when observing the audience, computing fixations and dwell time. We also collected the users' social anxiety score using a post-experiment questionnaire to control for some potential influencing factors. Results show that the stare-in-the-crowd effect is preserved in VR, as demonstrated by the significant differences between gaze behaviours, similarly to what was found in previous studies using photographic stimuli. Additionally, we found a negative correlation between dwell time towards directed gazes and users’ social anxiety scores. Such results are encouraging for the development of expressive and reactive virtual humans, which can be animated to express natural interactive behaviour. This work was performed in collaboration with Julien Pettré from the Virtus team and Claudio Pacchierotti from the Rainbow team.

7.3.2 The One-Man-Crowd: Single User Generation of Crowd Motions Using Virtual Reality

Participants: Julien Pettré [contact], Marc Christie, Ludovic Hoyet, Tairan Yin.

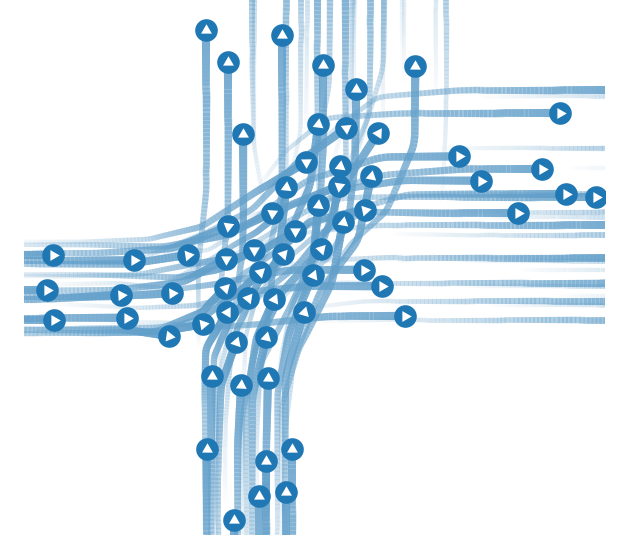

Crowd motion data is fundamental for understanding and simulating realistic crowd behaviours. Such data is usually collected through controlled experiments to ensure that both desired individual interactions and collective behaviours can be observed. It is however scarce, due to ethical concerns and logistical difficulties involved in its gathering, and only covers a few typical crowd scenarios. In this work [8], we propose and evaluate a novel Virtual Reality based approach lifting the limitations of real-world experiments for the acquisition of crowd motion data. Our approach immerses a single user in virtual scenarios where he/she successively acts as each crowd member. By recording the past trajectories and body movements of the user, and displaying them on virtual characters, the user progressively builds the overall crowd behaviour by him/herself. We validate the feasibility of our approach by replicating three real experiments (Figure 14), and compare both the resulting emergent phenomena and the individual interactions to existing real datasets. Our results suggest that realistic collective behaviours can naturally emerge from virtual crowd data generated using our approach, even though the variety in behaviours is lower than in real situations. These results provide valuable insights for building virtual crowd experiences, and reveal key directions for further improvements. This work was performed in collaboration with Julien Pettré from the Virtus team and Marie-Paule Cani from LIX.

7.3.3 Proximity in VR: The Importance of Character Attractiveness and Participant Gender

Participants: Katja Zibrek [contact], Ludovic Hoyet, Anne-Hélène Olivier, Julien Pettré.

In this work, we expand upon recent evidence that the motion of virtual characters affects the proximity of users who are immersed with them in virtual reality (VR). Attractive motions decrease proximity, but no effect of character gender on proximity was found. We therefore designed a similar experiment where users observed walking motions in VR which were displayed on male and female virtual characters [19]. Our results show patterns similar to those found in previous studies, while some differences related to the model of the character emerged.

7.3.4 Smart Motion Trails for Animating in VR

Participants: Marc Christie [contact], Robin Courant, Jean-Baptiste Bordier, Anthony Mirabile.

The artistic crafting of 3D animations by designers is a complex and iterative process. While classical animation tools have brought significant improvements in creating and manipulating shapes over time, most approaches rely on classical 2D input devices to create 3D contents. With the advent of virtual reality technologies and their ability to immerse users in 3D worlds and to precisely track devices in 6 dimensions (position and orientation), a number of VR creative tools have emerged such as Quill, AnimVR, Tvori, Tiltbrush or MasterPieceVR. While these tools provide intuitive means to directly design in the 3D space by exploiting both the 6D tracking capacity of the hand devices and the stereoscopic perception by the user, the animation capacities of such tools remain strongly limited, and often reproduce classical 2D manipulators in VR. In this work, we propose the design of smart interactive manipulators which leverage the specificity of VR to animate poly-articulated animations. We then perform a user study to evaluate the benefits of such manipulators over traditional 2D tools for three groups of users: beginner, intermediate, and professional artists. We build on this user study to discuss how smart tools (e.g., using a variety of AI techniques) can be coupled with VR technologies to improve content creation. More details can be found in [11].

8 Bilateral contracts and grants with industry

8.1 Bilateral contracts with industry

Cifre InterDigital - Learning-Based Human Character Animation Synthesis for Content Production

Participants: Ludovic Hoyet [contact], Lucas Mourot.

The overall objective of the PhD thesis of Lucas Mourot, which started in June 2020, is to adapt and improve the state of the art in video animation and human motion modelling to develop a semi-automated framework for human animation synthesis that brings real benefits to artists in the movie and advertising industry. In particular, one objective is to leverage novel learning-based approaches to propose skeleton-based animation representations, as well as editing tools, that improve the resolution and accuracy of the produced animations, so that automatically synthesized animations might become usable in an interactive way by animation artists.

8.2 Bilateral Grants with Industry

Unreal MegaGrant

Participants: Marc Christie [contact], Anthony Mirabile.

The objective of the Unreal MegaGrant (70k€) is to initiate the development of Augmented Reality techniques as animation authoring tools. While we have demonstrated the benefits of VR as a relevant authoring tool for artists, we would like to use this funding to explore the animation capacities of augmented reality techniques. The underlying challenges pertain to precisely estimating hand poses for fine animation tasks, and to exploring high-level authoring tools such as gesturing techniques.

9 Partnerships and cooperations

9.1 International initiatives

9.1.1 Inria associate team not involved in an IIL or an international program

BEAR

- Title: from BEhavioral Analysis to modeling and simulation of interactions between walkeRs

- Duration: 2019 -> 2022

- Coordinator: Michael Cinelli (mcinelli@wlu.ca)

- Partners: Wilfrid Laurier University

- Inria contact: Anne-Hélène Olivier

- VirtUs participants: Anne-Hélène Olivier, Julien Pettré

- Summary: BEAR project (from BEhavioral Analysis to modeling and simulation of interactions between walkeRs) is a collaborative project between France (Inria Rennes) and Canada (Wilfrid Laurier University and Waterloo University), dedicated to the simulation of human behavior during interactions between pedestrians. In this context, the project aims at providing more realistic models and simulation by considering the strong coupling between pedestrians’ visual perception and their locomotor adaptations.

9.2 International research visitors

9.2.1 Visits of international scientists

Other international visits to the team

Bradford McFadyen

- Status: Professor

- Institution of origin: Dept. Rehabilitation, Université Laval; Centre for Interdisciplinary Research in Rehabilitation and Social Integration (Cirris), Québec

- Country: Canada

- Dates: March 2nd to April 30th, 2022

- Context of the visit: To develop a collaborative research project aiming at studying the influence of a brain injury on the control of locomotion in the context of a navigation task with other walkers. To do so, we propose a methodology combining Movement Sciences and Digital Sciences that will allow us to study the locomotor behavior and the gaze behavior of patients in interaction with a virtual crowd.

- Mobility program/type of mobility: sabbatical

Michael Cinelli

- Status: Professor

- Institution of origin: Wilfrid Laurier University, Waterloo

- Country: Canada

- Dates: May 29th to June 12th, 2022

- Context of the visit: This stay is part of the associated team BEAR. The goal of this project is to study the influence of ageing on locomotion control in a navigation task in interaction with other walkers, in particular the role of appearance vs. movement. During this stay an experimental campaign was conducted.

- Mobility program/type of mobility: BEAR Inria Associate Team

Sheryl Bourgaize

- Status: PhD Student

- Institution of origin: Wilfrid Laurier University, Waterloo

- Country: Canada

- Dates: May 29th to June 12th, 2022

- Context of the visit: This stay is part of the associated team BEAR. The goal of this project is to study the influence of advancing age on locomotion control in a navigation task in interaction with other walkers, in particular the role of appearance vs. movement. During this stay an experimental campaign was conducted.

- Mobility program/type of mobility: BEAR Inria Associate Team

Brooke Thompson

- Status: Master Student

- Institution of origin: Wilfrid Laurier University, Waterloo

- Country: Canada

- Dates: May 1st to July 24th, 2022

- Context of the visit: This stay is part of the associated team BEAR. The goal of this project is to study the influence of ageing on locomotion control in a navigation task in interaction with other walkers, in particular the role of initial crossing order in the interaction. During this stay an experimental campaign was conducted.

- Mobility program/type of mobility: MITACS

Gabriel Massarroto

- Status: Master Student

- Institution of origin: Wilfrid Laurier University, Waterloo

- Country: Canada

- Dates: May 1st to July 24th, 2022

- Context of the visit: This stay is part of the associated team BEAR. The goal of this project is to study the influence of ageing on locomotion control in a navigation task in interaction with other walkers, in particular the role of initial crossing order in the interaction. During this stay an experimental campaign was conducted.

- Mobility program/type of mobility: MITACS

Megan Hammill

- Status: Master Student

- Institution of origin: Wilfrid Laurier University, Waterloo

- Country: Canada

- Dates: May 1st to July 24th, 2022

- Context of the visit: This stay is part of the associated team BEAR. The goal of this project is to study the influence of ageing on locomotion control in a navigation task in interaction with other walkers, in particular the role of initial crossing order in the interaction. During this stay an experimental campaign was conducted.

- Mobility program/type of mobility: MITACS

9.3 European initiatives

9.3.1 H2020 projects

H2020 MSCA ITN CLIPE

Participants: Julien Pettré [contact], Vicenzo Abichequer Sangalli, Marc Christie, Ludovic Hoyet, Tairan Yin.

- Title: Creating Lively Interactive Populated Environments

- Duration: 2020 - 2024

- Coordinator: University of Cyprus

- Partners:

  - University of Cyprus (CY)

  - Universitat Politecnica de Catalunya (ES)

  - INRIA (FR)

  - University College London (UK)

  - Trinity College Dublin (IE)

  - Max Planck Institute for Intelligent Systems (DE)

  - KTH Royal Institute of Technology, Stockholm (SE)

  - Ecole Polytechnique (FR)

  - Silversky3d (CY)

- Inria contact: Julien Pettré

- Summary: CLIPE is an Innovative Training Network (ITN) funded by the Marie Skłodowska-Curie program of the European Commission. The primary objective of CLIPE is to train a generation of innovators and researchers in the field of virtual characters simulation and animation. Advances in technology are pushing towards making VR/AR worlds a daily experience. Whilst virtual characters are an important component of these worlds, bringing them to life and giving them interaction and communication abilities requires highly specialized programming combined with artistic skills, and considerable investments: millions spent on countless coders and designers to develop video-games is a typical example. The research objective of CLIPE is to design the next-generation of VR-ready characters. CLIPE is addressing the most important current aspects of the problem, making the characters capable of: behaving more naturally; interacting with real users sharing a virtual experience with them; being more intuitively and extensively controllable for virtual worlds designers. To meet our objectives, the CLIPE consortium gathers some of the main European actors in the field of VR/AR, computer graphics, computer animation, psychology and perception. CLIPE also extends its partnership to key industrial actors of populated virtual worlds, giving students the ability to explore new application fields and start collaborations beyond academia.

- Website:

H2020 FET-Open CrowdDNA

Participants: Julien Pettré [contact], Thomas Chatagnon, Ludovic Hoyet, Anne-Hélène Olivier.

- Title: CrowdDNA

- Duration: 2020 - 2024

- Coordinator: Inria

- Partners:

  - Inria (FR)

  - ONHYS (FR)

  - University of Leeds (UK)

  - Crowd Dynamics (UK)

  - Universidad Rey Juan Carlos (ES)

  - Forschungszentrum Jülich (DE)

  - Universität Ulm (DE)

- Inria contact: Julien Pettré

- Summary: This project aims to enable a new generation of “crowd technologies”, i.e., a system that can prevent deaths, minimize discomfort and maximize efficiency in the management of crowds. It performs an analysis of crowd behaviour to estimate the characteristics essential to understand its current state and predict its evolution. CrowdDNA is particularly concerned with the dangers and discomforts associated with very high-density crowds such as those that occur at cultural or sporting events or public transport systems. The main idea behind CrowdDNA is that the analysis of a new kind of macroscopic features of a crowd, such as the apparent motion field that can be efficiently measured in real mass events, can reveal valuable information about its internal structure, provide a precise estimate of a crowd state at the microscopic level, and more importantly, predict its potential to generate dangerous crowd movements. This way of understanding low-level states from high-level observations is similar to the way humans can tell a lot about the physical properties of a given object just by looking at it, without touching it. CrowdDNA challenges the existing paradigms which rely on simulation technologies to analyse and predict crowds, and also require complex estimations of many features such as density, counting or individual features to calibrate simulations. This vision raises one main scientific challenge, which can be summarized as the need for a deep understanding of the numerical relations between the local (microscopic) scale of crowd behaviours (e.g., contact and pushes at the limb scale) and the global (macroscopic) scale, i.e. the entire crowd.

- Website:

H2020 ICT RIA PRESENT

Participants: Julien Pettré [contact], Marc Christie, Adèle Colas, Ludovic Hoyet, Alberto Jovane, Anne-Hélène Olivier, Pierre Raimbaud, Katja Zibrek.

-

Title:

Photoreal REaltime Sentient ENTity

-

Duration:

2019 - 2023

-

Coordinator:

Universitat Pompeu Fabra

-

Partners:

- Framestore (UK)

- Brainstorm (ES)

- Cubic Motion (UK)

- InfoCert (IT)

- Universitat Pompeu Fabra (ES)

- Universität Augsburg (DE)

- Inria (FR)

- CREW (BE)

-

Inria contact:

Julien Pettré

-

Summary:

PRESENT is a three-year Research and Innovation project to create virtual digital companions (embodied agents) that look entirely naturalistic, demonstrate emotional sensitivity, can establish meaningful dialogue, add sense to the experience, and act as trustworthy guardians and guides in the interfaces for AR, VR and more traditional forms of media. There is no higher quality interaction than the human experience, when we use all our senses together with language and cognition to understand our surroundings and, above all, to interact with other people. We interact with today’s ‘Intelligent Personal Assistants’ primarily by voice; communication is episodic, based on a request-response model. The user does not see the assistant, which does not take advantage of visual and emotional cues or evolve over time. However, advances in the real-time creation of photorealistic computer-generated characters, coupled with emotion recognition and behaviour, and natural language technologies, allow us to envisage virtual agents that are realistic in both looks and behaviour; that can interact with users through vision, sound, touch and movement as they navigate rich and complex environments; converse in a natural manner; respond to moods and emotional states; and evolve in response to user behaviour. PRESENT will create and demonstrate a set of practical tools, a pipeline and APIs for creating realistic embodied agents and incorporating them in interfaces for a wide range of applications in entertainment, media and advertising.

- Website:

H2020 ICT IA INVICTUS

Participants: Marc Christie [contact], Anthony Mirabile, Xi Wang, Robin Courant, Jean-Baptiste Bordier.

-

Title:

Innovative Volumetric Capture and Editing Tools for Ubiquitous Storytelling

-

Duration:

2020 - 2022

-

Coordinator:

University of Rennes 1

-

Partners:

- HHI, FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V. (Germany)

- INTERDIGITAL (France)

- VOLOGRAMS (Ireland)

- UNIVERSITE DE RENNES I (France)

-

Inria contact:

Marc Christie

-

Summary:

The world of animation, the art of making inanimate objects appear to move, has come a long way over the hundred or so years since the first animated films were produced. In the digital age, avatars have become ubiquitous. These numerical representations of real human forms burst on the scene in modern video games and are now used in feature films as well as virtual reality and augmented reality entertainment. Given the huge market for avatar-based digital entertainment, the EU-funded INVICTUS project is developing digital design tools based on volumetric capture to help authors create and edit avatars and their associated story components (decors and layouts) by reducing manual labour, speeding up development and spurring innovation. These innovative authoring tools target the creation of a new generation of high-fidelity avatars and the integration of these avatars in interactive and non-interactive narratives (movies, games, AR+VR immersive productions).

- Website:

9.4 National initiatives

ANR JCJC Per2

Participants: Ludovic Hoyet [contact], Anne-Hélène Olivier, Julien Pettré, Katja Zibrek.

Per2 is a 42-month ANR JCJC project (2018-2022) entitled Perception-based Human Motion Personalisation (Budget: 280kE). The objective of this project is to study how viewers perceive motion variations, in order to automatically produce natural motion personalisation that accounts for inter-individual variations. In short, our goal is to automate the creation of motion variations that represent given individuals according to their own characteristics, and to produce natural variations that are perceived and identified as such by users. The challenges addressed in this project consist in (i) understanding and quantifying what makes the motions of individuals perceptually different, (ii) synthesising motion variations based on these identified relevant perceptual features, according to given individual characteristics, and (iii) pushing the synthesis of motion variations further by exploring their creation for interactive large-scale scenarios where both performance and realism are critical.

Défi Avatar

Participants: Ludovic Hoyet [contact], Maé Mavromatis.

This project aims at designing avatars (i.e., the user’s representation in virtual environments) that are better embodied, more interactive and more social, by improving the whole avatar pipeline, from acquisition and simulation to the design of novel interaction paradigms and multi-sensory feedback. It involves 6 other Inria teams (GraphDeco, Hybrid, Loki, MimeTIC, Morpheo, Potioc), Prof. Mel Slater (Uni. Barcelona), and 2 industrial partners (InterDigital and Faurecia).

Défi Ys.AI

Participants: Ludovic Hoyet.

With the recent announcements of massive investments in metaverses, which are seen as the future of social and professional immersive communication for the emergent AI-based e-society, there is a need to develop dedicated metaverse technologies and associated representation formats. In this context, the objective of this joint project between Inria and InterDigital is to focus on the representation formats of digital avatars and their behavior in a digital and responsive environment. In particular, the primary challenge tackled in this project consists in solving the uncanny valley effect to provide users with a natural and lifelike social interaction between real and virtual actors, leading to full engagement in those future metaverse experiences.

9.5 Regional initiatives

SAD Reactive funding

The SAD REACTIVE funding allowed the recruitment of post-doctoral researcher Pierre Raimbaud for the period from 1 October 2020 to 31 August 2022. During his post-doctoral stay at Inria in Rennes, his research focused on the study of user behaviour with virtual agents in immersive environments (virtual reality, via headsets). The project stems from the need in virtual reality for expressive and reactive behaviours in virtual agents, so that users experience a sense of social presence that is adapted to their own actions when interacting with these agents.

SAD Attract funding

The SAD ATTRACT project ran from 1 November 2020 to 30 April 2022 and funded a postdoctoral stay for Mr Pratik Mullick, a PhD physicist of Indian origin. This stay was extended by 6 months with our own funds, which allowed Pratik to remain in our laboratory until he obtained a position of Senior Lecturer in Poland, which he took up in October 2022. This is a very satisfactory conclusion to the project.

10 Dissemination

Participants: Marc Christie, Ludovic Hoyet, Anne-Hélène Olivier, Julien Pettré, Katja Zibrek.

10.1 Promoting scientific activities

10.1.1 Scientific events: organisation

General chair, scientific chair

- Julien Pettré was co-conference chair for the ACM SIGGRAPH / Eurographics Symposium on Computer Animation 2022 in Durham, U.K. (ACM SCA 2022).

Member of the organizing committees

- Marc Christie: co-organizer of the 2022 WICED event (satellite event of Eurographics 2022): Workshop on Intelligent Cinematography and Editing.

- Anne-Hélène Olivier, Julien Pettré and Katja Zibrek: organization of the Virtual Humans and Crowds in Immersive Environments workshop, held as part of the IEEE VR 2022 conference.

10.1.2 Scientific events: selection

Member of the conference program committees

- Ludovic Hoyet: ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games 2022, ACM Symposium on Applied Perception 2022, ACM SIGGRAPH Asia 2022 (Technical Papers COI), IEEE International Conference on Artificial Intelligence & Virtual Reality 2022, Journées Françaises de l'Informatique Graphique 2022

- Anne-Hélène Olivier: IEEE VR 2022, EuroVR 2022, SAP 2022

- Julien Pettré: Eurographics Short Papers 2022, Eurographics STAR 2023, Associate Editor IROS 2022

- Marc Christie: ACM Multimedia 2022, IEEE AIVR 2022, International Conference on Interactive Digital Storytelling 2022, Computer Animation and Social Agents 2022

Reviewer

- Ludovic Hoyet: ACM Siggraph 2022, ACM CHI Conference on Human Factors in Computing Systems 2023, Eurographics 2022

- Anne-Hélène Olivier: CEIG 2022, Eurographics 2022, ISMAR 2022

- Julien Pettré: IEEE VR 2023

- Marc Christie: ACM Siggraph 2022, ACM CHI Conference on Human Factors in Computing Systems 2023, ACM Siggraph Asia 2022, International Conference on Interactive Digital Storytelling 2022, Computer Animation and Social Agents 2022

10.1.3 Journal

Member of the editorial boards

- Julien Pettré is Associate Editor for Computer Graphics Forum, Computer Animation and Virtual Worksl, and Collective Dynamics

Reviewer - reviewing activities

- Ludovic Hoyet: International Journal of Human-Computer Studies

- Anne-Hélène Olivier: Gait and Posture, Human Movement Science, PLOS ONE, IEEE RAS

- Julien Pettré: Safety Science, Elsevier Physica A, Transportation Research part C, Royal Society Open Science, Elsevier Computers and Graphics

- Marc Christie: Transactions on Visualization and Computer Graphics, Transactions on Robotics, Computer Vision and Image Understanding

10.1.4 Invited talks

- Anne-Hélène Olivier: Interactions between pedestrians: from real to virtual studies. Wilfrid Laurier Graduate Seminar, Waterloo, Canada, November 2022.

- Anne-Hélène Olivier: VR to study interactions between pedestrians: validation, applications and future directions. 3rd Training Workshop of the H2020 ITN CLIPE project, Current trends in Virtual Humans, Barcelona, Spain, September 2022.

- Julien Pettré. Colloque des Sciences Appliquées au Sapeur Pompier “Simulation de Foules”

- Julien Pettré. Transmusicales / French Touch “Musique live et mouvements de foule lors du Hellfest”

- Julien Pettré. Journées de la matière condensée 2022 “Crowd simulation: scales, algorithms and data”

- Julien Pettré. Graphyz 2 “Keynote in Duet: Crowds”

10.1.5 Scientific expertise

- Julien Pettré: ANRT (CIFRE application), NWO (Dutch research funding agency)

- Marc Christie: Member of the CE33 for the ANR