2024 Activity report: Team AYANA

Inria teams are typically groups of researchers working on the definition of a common project and common objectives, with the goal of creating a project-team. Such project-teams may include other partners (universities or research institutions).

- Research center: Inria Centre at Université Côte d'Azur

- Team name: AI and Remote Sensing on board for the New Space

- Domain: Perception, Cognition and Interaction

- Theme: Vision, perception and multimedia interpretation

Keywords

Computer Science and Digital Science

- A2.3.1. Embedded systems

- A3.4.1. Supervised learning

- A3.4.2. Unsupervised learning

- A3.4.5. Bayesian methods

- A3.4.6. Neural networks

- A3.4.8. Deep learning

- A5.4.1. Object recognition

- A5.4.5. Object tracking and motion analysis

- A5.4.6. Object localization

- A5.9.2. Estimation, modeling

- A5.9.4. Signal processing over graphs

- A5.9.6. Optimization tools

- A9.3. Signal analysis

Other Research Topics and Application Domains

- B3.3.1. Earth and subsoil

- B3.3.2. Water: sea & ocean, lake & river

- B3.3.3. Nearshore

- B3.4. Risks

- B3.5. Agronomy

- B3.6. Ecology

- B8.3. Urbanism and urban planning

- B8.4. Security and personal assistance

1 Team members, visitors, external collaborators

Research Scientist

- Josiane Zerubia [Team leader, Inria, Senior Researcher]

Post-Doctoral Fellow

- Martina Pastorino [Genoa University (Italy), DITEN, Post-Doctoral Fellow]

PhD Students

- Louis Hauseux [Université Côte d'Azur, Collaboration NEO (K. Avrachenkov) — AYANA (J. Zerubia)]

- Jules Mabon [Inria, until Jan 2024]

- Priscilla Indira Osa [Genoa University (Italy), DITEN, from Mar 2024]

Administrative Assistant

- Nathalie Nordmann [Inria]

External Collaborators

- Pierre Charbonnier [CEREMA Strasbourg (France), Head of the ENDSUM team, HDR]

- Zoltan Kato [University of Szeged (Hungary), Institute of Informatics, full Professor, HDR]

- Gabriele Moser [Genoa University (Italy), DITEN, full Professor, HDR]

- Sebastiano Serpico [Genoa University (Italy), DITEN, full Professor, HDR]

2 Overall objectives

The AYANA AEx is an interdisciplinary project drawing on knowledge in stochastic modeling, image processing, artificial intelligence, remote sensing and embedded electronics/computing. The aerospace sector is expanding and changing ("New Space"). It is currently undergoing a great many changes, both in terms of sensors, at the spectral level (uncooled IRT, far ultraviolet, etc.) and at the hardware level (e.g., the arrival of nanotechnologies or the new generation of "Systems on Chips" (SoCs)), and in terms of the platforms carrying these sensors: high-resolution geostationary satellites, LEO (low Earth orbit) satellites, and mini-satellites and industrial CubeSats in constellations. AYANA will work on large amounts of data, consisting of very large images with widely varying resolutions and spectral components, and forming time series at frequencies of 1 to 60 Hz. For the embedded electronics/computing part, AYANA will work in close collaboration with specialists in the field located in Europe, working at space agencies and/or for industrial contractors.

3 Research program

3.1 FAULTS R GEMS: Properties of faults, a key to realistic generic earthquake modeling and hazard simulation

Decades of research on earthquakes have yielded meager prospects for earthquake predictability: we cannot predict the time, location and magnitude of a forthcoming earthquake with sufficient accuracy for immediate societal value. Therefore, the best we can do is to mitigate their impact by anticipating the most “destructive properties” of the largest earthquakes to come: the longest extent of rupture zones, the largest magnitudes, and the amplitudes of displacements and ground accelerations. This topic has motivated many studies in recent decades. Yet, despite these efforts, major discrepancies remain between available model outputs and natural earthquake behaviors. An important source of discrepancy is the incomplete integration of the actual geometrical and mechanical properties of earthquake causative faults into existing rupture models. Our first aim is to document the compliance of rocks in natural permanent damage zones; these data, key to earthquake modeling, are presently lacking. A second objective is to introduce the observed macroscopic fault properties (compliant permanent damage, segmentation, maturity) into 3D dynamic earthquake models. A third objective is to conduct a pilot study examining the benefit of prior knowledge of fault properties and rupture scenarios for Earthquake Early Warning (EEW). This research project is partially funded by the ANR Fault R Gems, whose PI is Prof. I. Manighetti from Geoazur. Two successive postdocs (Barham Jafrasteh and Bilel Kanoun), funded by UCA-Jedi, have worked on this research topic.

3.2 Probabilistic models on graphs and machine learning in remote sensing applied to natural disaster response

We are currently developing novel probabilistic graphical models combined with machine learning to help manage natural disasters such as earthquakes, floods and fires. The first model will introduce a semantic component into the graph at the current scale of the hierarchical graph, and will require a new probabilistic graph model: the quad-tree proposed by Ihsen Hedhli in the AYIN team in 2016 is no longer suited to this problem 13. Applications to urban reconstruction or reforestation after natural disasters will be carried out on images from the Pléiades optical satellites (provided by the French Space Agency, CNES) and the COSMO-SkyMed radar satellites (provided by the Italian Space Agency, ASI). This project is conducted in partnership with the University of Genoa (Prof. G. Moser and Prof. S. Serpico) through the co-supervision of a PhD student funded by the Italian government. The PhD student, Martina Pastorino, worked with Josiane Zerubia and Gabriele Moser in 2020 during her double Master's degree at the University of Genoa and IMT Atlantique. She was a PhD student co-supervised by the University of Genoa, DITEN (Prof. Moser) and Inria (Prof. Zerubia) from November 2020 until December 2023, and then a postdoc in 2024.

3.3 Marked point process models for object detection and tracking in temporal series of high resolution images

The model proposed in Paula Craciun's PhD thesis (2015, AYIN team) assumed that the speed of the tracked objects of interest in a series of satellite images was quasi-constant between two frames. However, this hypothesis is very limiting, particularly when objects of interest undergo strong and sudden acceleration or deceleration, in which case the model proposed within the AYIN team is no longer viable. Two solutions will be considered within the AYANA team: either a general marked point process (MPP) model, assuming an unknown and variable velocity, or a multi-model of MPPs, each simpler with regard to velocity, which can take only a very limited number of values (i.e., a quasi-constant velocity for each MPP). The whole model will have to be redesigned, and will be tested on data from a constellation of small satellites, where the objects of interest can be, for instance, "speed-boats" or "go-fast cars". Comparisons with deep learning-based methods belonging to Airbus Defense and Space (Airbus DS) are planned at Airbus DS. This new model should then be ported to various platforms (ground-based or on-board). The modeling and ground-based application part related to object detection has been studied within the PhD thesis of Jules Mabon. Furthermore, object-tracking methods have been proposed by AYANA as part of a postdoctoral project (Camilo Aguilar-Herrera). Finally, the on-board version will be developed by Airbus DS, with the company Erems providing the on-board hardware. This project is funded by Bpifrance within the LiChIE contract. This research project started in 2020 for a duration of 6 years.
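To make the second option more concrete, the toy sketch below shows how a small bank of quasi-constant velocities can be used to explain each object's displacement between two frames, so that a sudden braking is absorbed by switching to another model in the bank. It is not the team's MPP model: the velocity bank, the `best_velocity_model` helper and the Euclidean prediction error are hypothetical choices made only for illustration.

```python
import math

# Hypothetical bank of candidate velocities (pixels per frame). In a multi-model
# setting, each MPP would assume one of these quasi-constant velocities.
VELOCITY_BANK = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (4.0, 0.0), (-2.0, 0.0)]


def predict(position, velocity, dt=1.0):
    """Constant-velocity prediction: x_t = x_{t-1} + v * dt."""
    return (position[0] + velocity[0] * dt, position[1] + velocity[1] * dt)


def best_velocity_model(prev_pos, curr_pos, bank=VELOCITY_BANK):
    """Return (index, error) of the velocity model that best explains the displacement.

    The error is the Euclidean distance between predicted and observed positions.
    """
    errors = [math.dist(predict(prev_pos, v), curr_pos) for v in bank]
    best = min(range(len(bank)), key=errors.__getitem__)
    return best, errors[best]


if __name__ == "__main__":
    # Object cruising at about 4 px/frame, then braking sharply: one
    # constant-velocity model would fail, but another model in the bank fits.
    print(best_velocity_model((10.0, 10.0), (14.0, 10.0)))  # (3, 0.0)
    print(best_velocity_model((14.0, 10.0), (14.5, 10.0)))  # (0, 0.5)
```

A real tracker would of course estimate the velocity jointly with the detections and their uncertainty; the point here is only that a discrete set of simple motion models can cover abrupt speed changes.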

4 Application domains

Our research is applied across Earth observation domains such as urban planning, precision farming, natural disaster management, geological feature detection, geospatial mapping, and security management.

5 Highlights of the year

- Louis Hauseux won the 2nd prize at the ACM SIGMETRICS Student Research Competition, Venice, June 2024. This award led to his receiving the Médaille d'excellence de l'Université Côte d'Azur 2024.

- Priscilla Indira Osa received both the Best Poster Award and the Best Project Award at EURASIP - IEEE SPS Summer School on Remote Sensing and Microscopy Image Processing, August 2024.

- Martina Pastorino received the Prix de thèse de la parité, Ecole Doctorale STIC, Université Côte d'Azur, 2024.

- Martina Pastorino received the Best Project Award at the EURASIP - IEEE SPS Summer School on Remote Sensing and Microscopy Image Processing, August 2024.

- Martina Pastorino was highlighted as “Femme inspirante” by Femmes & Sciences association, see the article.

- Josiane Zerubia defended her IRIS Master's diploma (analyste en stratégie internationale, option défense, sécurité et gestion de crise), supported by Inria, before General Patrick Charaix (2S), in September 2024.

6 New software, platforms, open data

6.1 New software

6.1.1 MPP & CNN for object detection in remotely sensed images

- Name: Marked Point Processes and Convolutional Neural Networks for object detection in remotely sensed images

- Keywords: Detection, Satellite imagery

- Functional Description: Implementation of the work presented in: "CNN-based energy learning for MPP object detection in satellite images", Jules Mabon, Mathias Ortner, Josiane Zerubia, in Proc. 2022 IEEE International Workshop on Machine Learning for Signal Processing (MLSP), and "Point process and CNN for small objects detection in satellite images", Jules Mabon, Mathias Ortner, Josiane Zerubia, in Proc. 2022 SPIE Image and Signal Processing for Remote Sensing XXVIII.

- URL:

- Contact: Jules Mabon

- Partner: Airbus Defense and Space

6.1.2 FCN and Fully Connected NN for Remote Sensing Image Classification

- Name: Fully Convolutional Network and Fully Connected Neural Network for Remote Sensing Image Classification

- Keywords: Satellite imagery, Classification, Image segmentation

- Functional Description: Code related to the paper: M. Pastorino, G. Moser, S. B. Serpico, and J. Zerubia, "Fully convolutional and feedforward networks for the semantic segmentation of remotely sensed images," 2022 IEEE International Conference on Image Processing, 2022.

- URL:

- Contact: Josiane Zerubia

- Partner: University of Genoa, DITEN, Italy

6.1.3 Stabilizer for Satellite Videos

- Keywords: Satellite imagery, Video sequences

- Functional Description: A Python implementation of a stabilizer for satellite videos. This code was used to produce the object tracking results shown in: C. Aguilar, M. Ortner and J. Zerubia, "Adaptive Birth for the GLMB Filter for object tracking in satellite videos," 2022 IEEE 32nd International Workshop on Machine Learning for Signal Processing (MLSP), 2022, pp. 1-6.

- URL:

- Contact: Josiane Zerubia

- Partner: Airbus Defense and Space

6.1.4 GLMB filter with History-based Birth

- Name: Python GLMB filter with History-based Birth

- Keywords: Multi-Object Tracking, Object detection

- Functional Description: Implementation of the work presented in: C. Aguilar, M. Ortner and J. Zerubia, "Adaptive Birth for the GLMB Filter for object tracking in satellite videos," 2022 IEEE 32nd International Workshop on Machine Learning for Signal Processing (MLSP), 2022, pp. 1-6.

- URL:

- Contact: Josiane Zerubia

- Partner: Airbus Defense and Space

6.1.5 Automatic fault mapping using CNN

- Keyword: Detection

- Functional Description: Implementation of the work published in: Bilel Kanoun, Mohamed Abderrazak Cherif, Isabelle Manighetti, Yuliya Tarabalka, Josiane Zerubia. An enhanced deep learning approach for tectonic fault and fracture extraction in very high resolution optical images. ICASSP 2022 - IEEE International Conference on Acoustics, Speech, & Signal Processing, IEEE, May 2022, Singapore/Hybrid, Singapore.

- URL:

- Contact: Josiane Zerubia

- Partner: Géoazur

6.1.6 RFS-filters for Satellite Videos

- Keywords: Detection, Target tracking

- Functional Description: Implementation of the work published in: Camilo Aguilar, Mathias Ortner, Josiane Zerubia. Enhanced GM-PHD filter for real time satellite multi-target tracking. ICASSP 2023 – IEEE International Conference on Acoustics, Speech, and Signal Processing, Jun 2023, Rhodes, Greece.

- URL:

- Contact: Camilo Aguilar Herrera

- Partner: Airbus Defense and Space

6.1.7 AYANet

- Name: AYANet: A Gabor Wavelet-based and CNN-based Double Encoder for Building Change Detection in Remote Sensing

- Keywords: Gabor wavelet, Convolutional Neural Network, Building Change Detection, Remote Sensing

- Functional Description: The official implementation of AYANet: A Gabor Wavelet-based and CNN-based Double Encoder for Building Change Detection in Remote Sensing (ICPR 2024). Link to the paper: https://hal.science/hal-04675243

- URL:

- Contact: Josiane Zerubia

6.1.8 GabFormer

- Name: Gabor Feature Network for Transformer-based Building Change Detection Model in Remote Sensing

- Keywords: Transformer, Gabor wavelet, Building Change Detection, Remote Sensing, Image analysis

- Functional Description: The official implementation of Gabor Feature Network for Transformer-based Building Change Detection Model in Remote Sensing (ICIP 2024). Link to the paper: https://hal.science/hal-04619245

- URL:

- Contact: Josiane Zerubia

6.1.9 PP-EBM

- Name: Combining Convolutional Neural Networks and Point Process for object detection

- Keywords: Convolutional Neural Network, Point Process, Energy based model, Remote Sensing

- Functional Description: This code was used to produce the results shown in: Mabon, J., Ortner, M., Zerubia, J. Learning Point Processes and Convolutional Neural Networks for Object Detection in Satellite Images. Remote Sens. 2024, 16, 1019. https://doi.org/10.3390/rs16061019

- URL:

- Contact: Josiane Zerubia

- Partner: Airbus Defense and Space

7 New results

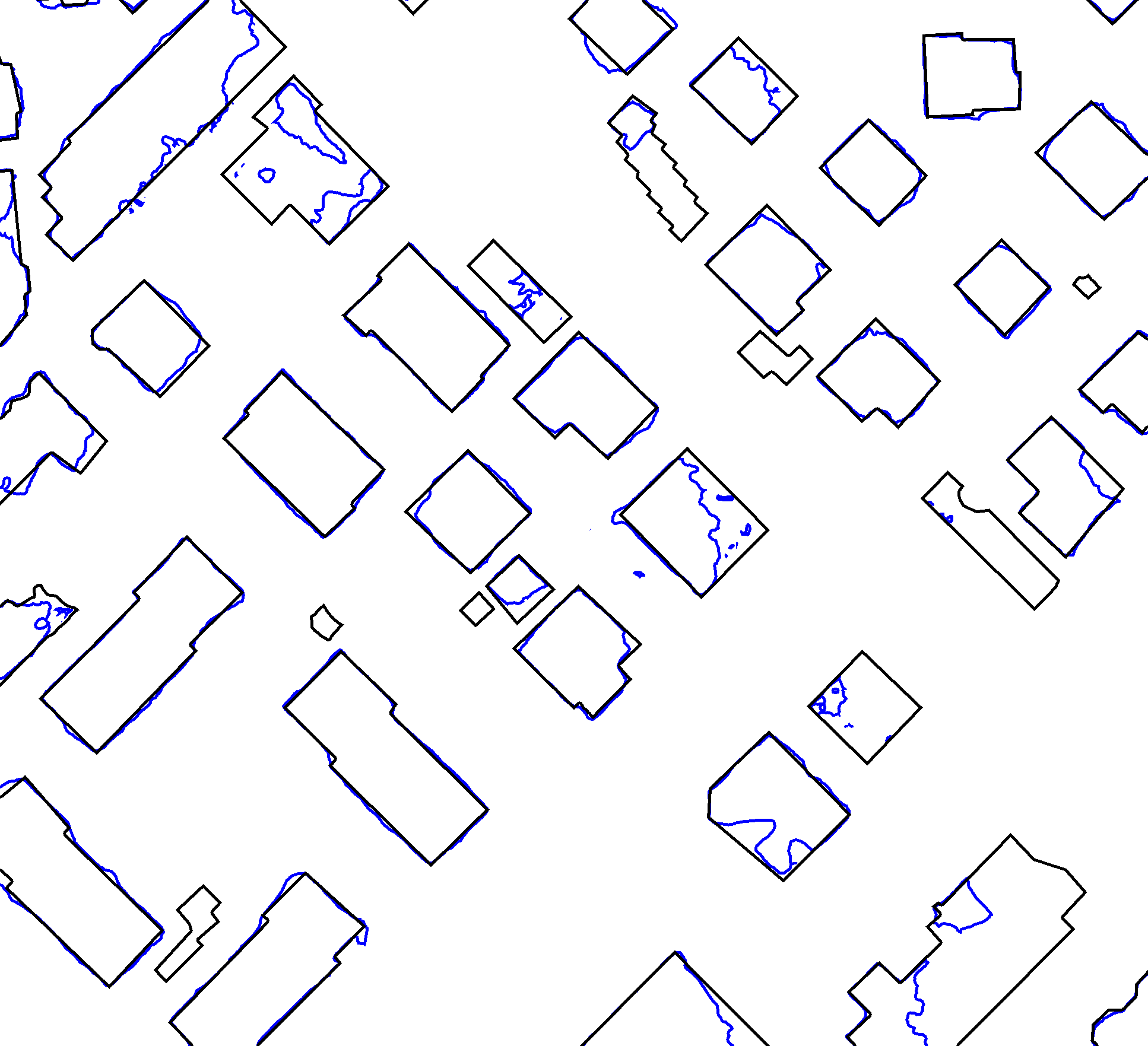

7.1 Hierarchical probabilistic graphical models and fully convolutional networks for the semantic segmentation of multimodal SAR images

Participants: Martina Pastorino, Josiane Zerubia.

External collaborators: Gabriele Moser [University of Genoa, DITEN dept., Professor], Sebastiano Serpico [University of Genoa, DITEN dept., Professor].

Keywords: image processing, stochastic models, deep learning, fully convolutional networks, multimodal radar images.

Due to the heterogeneity of remote sensing imagery (active and passive sensors, multiple spatial resolutions, distinct acquisition geometries, etc.), a challenging problem is the development of image classifiers that can exploit information from multimodal input observations. The research activity has focused on the development of a multimodal framework capable of taking advantage of data associated with different physical characteristics and of exploiting information at different scales.

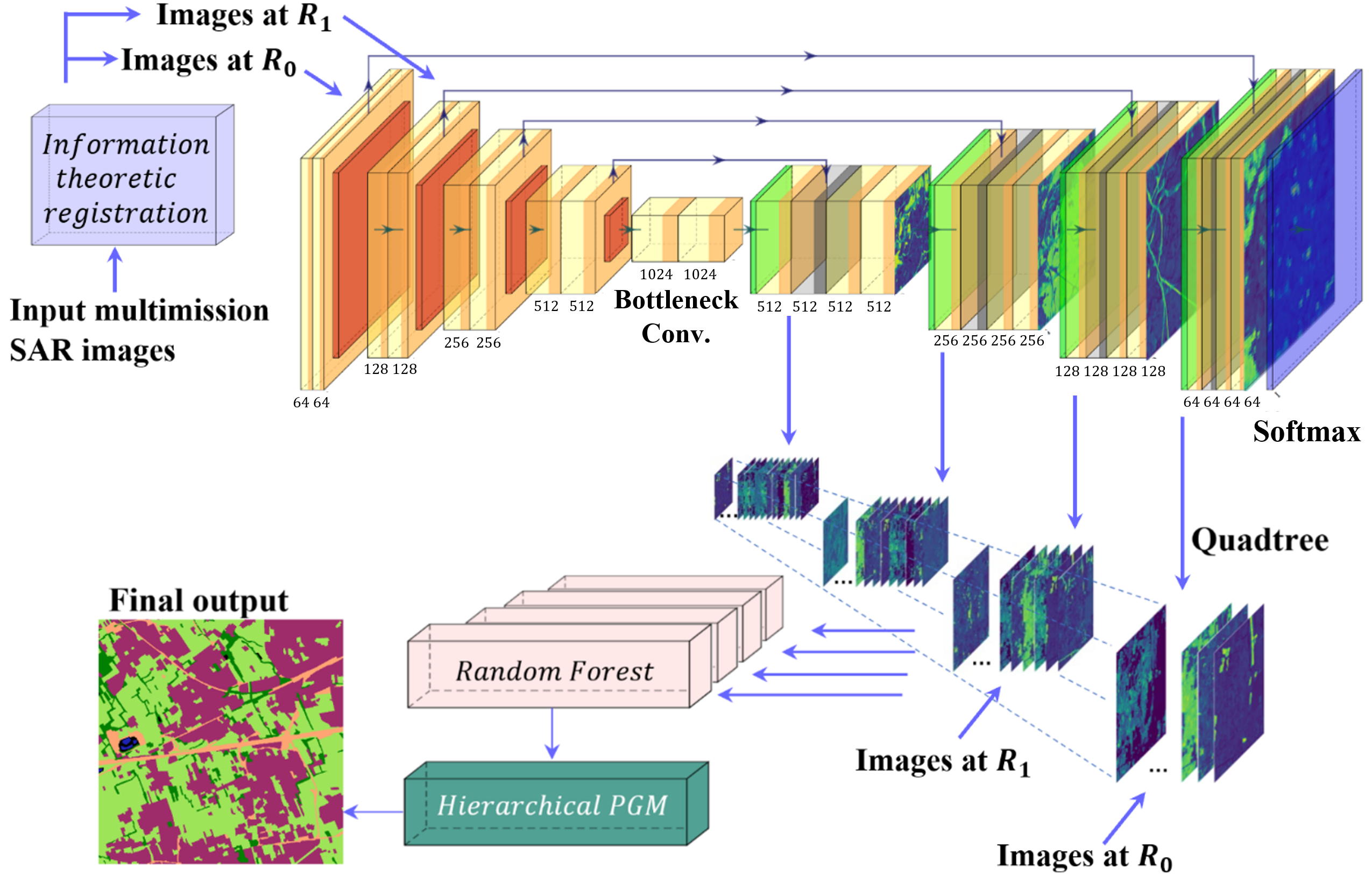

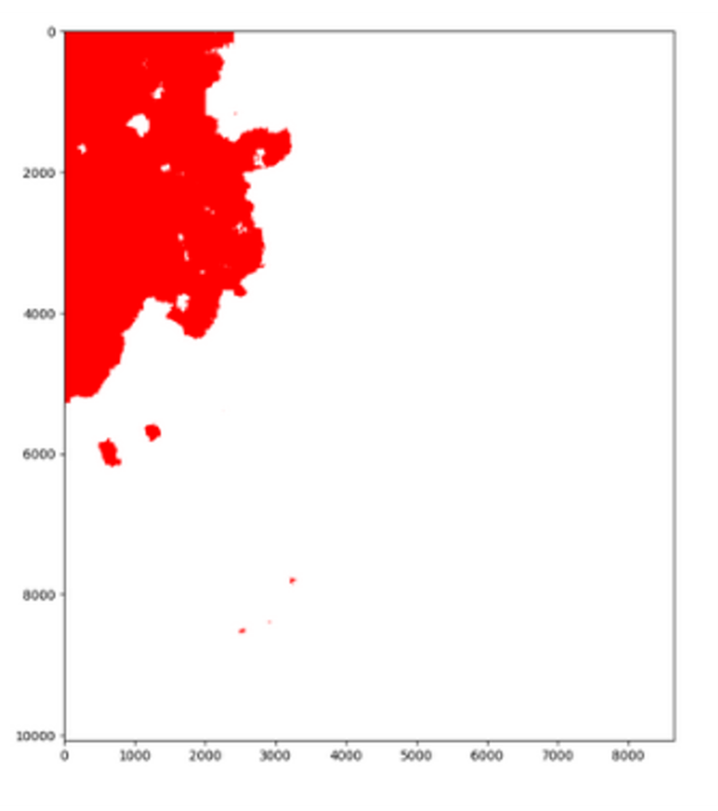

An approach to the semantic segmentation of multiresolution and multisensor SAR (synthetic aperture radar) images was proposed (see Fig. 1), based on hierarchical probabilistic graphical models (PGMs) 1, and in particular on a novel hierarchical latent causal Markov model combined with a multiscale deep learning (DL) model for the classification of multimission satellite SAR images 4. It extends the method proposed in 31 for the classification of very high resolution optical images in the case of scarce ground truth maps (also published in the book chapter 14), within a research project funded by the Italian Space Agency (ASI). The methodological properties of the proposed hierarchical latent Markov model, in terms of causality and of inference formulation, have been analytically proven. The accuracy and effectiveness of the proposed approach have been experimentally validated with multifrequency SAR data collected by the COSMO-SkyMed (X-band), SAOCOM (L-band), and Sentinel-1 (C-band) space missions. The experiments confirmed the effectiveness of this approach, especially when the training maps used to learn the deep networks are severely scarce.

The results have been published in IEEE GRSL 4.

7.2 Learning CRF potentials through fully convolutional networks for satellite image semantic segmentation

Participants: Martina Pastorino, Josiane Zerubia.

External collaborators: Gabriele Moser [University of Genoa, DITEN dept., Professor], Sebastiano Serpico [University of Genoa, DITEN dept., Professor].

Keywords: image processing, stochastic models, deep learning, fully convolutional networks, multiscale analysis.

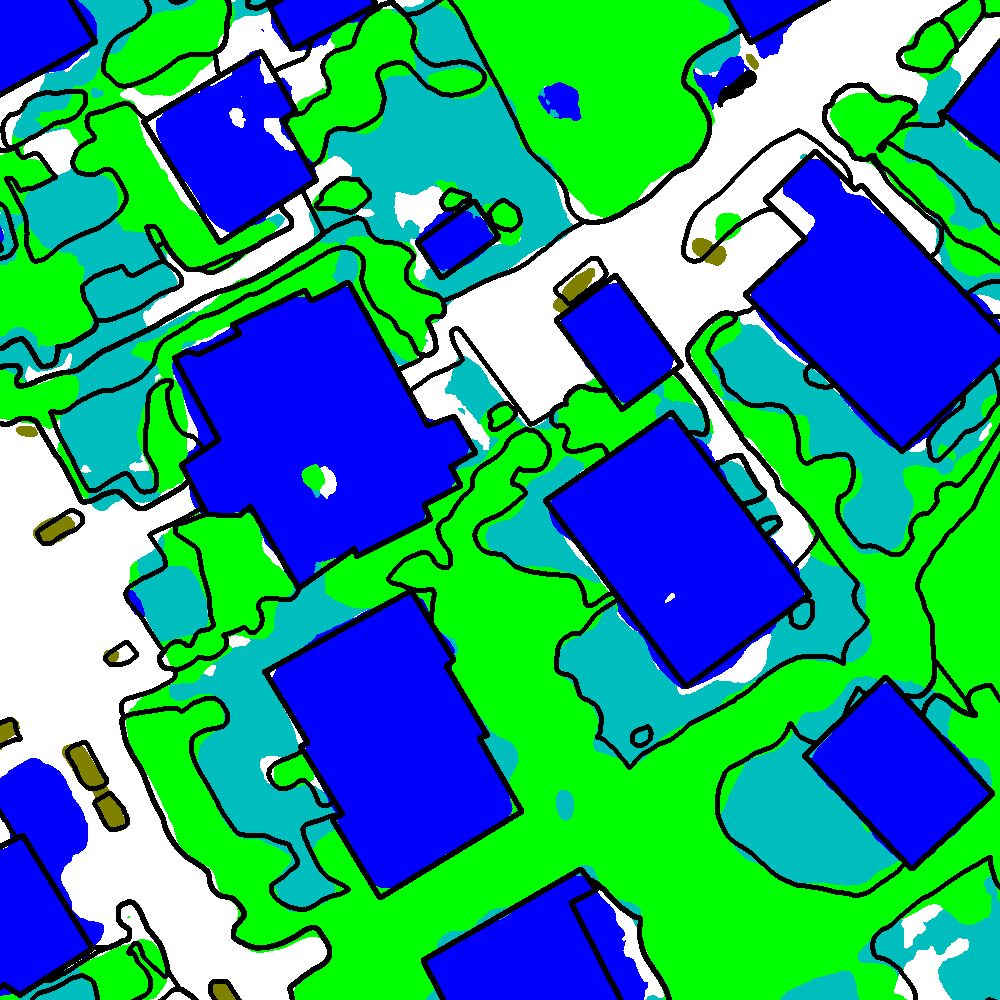

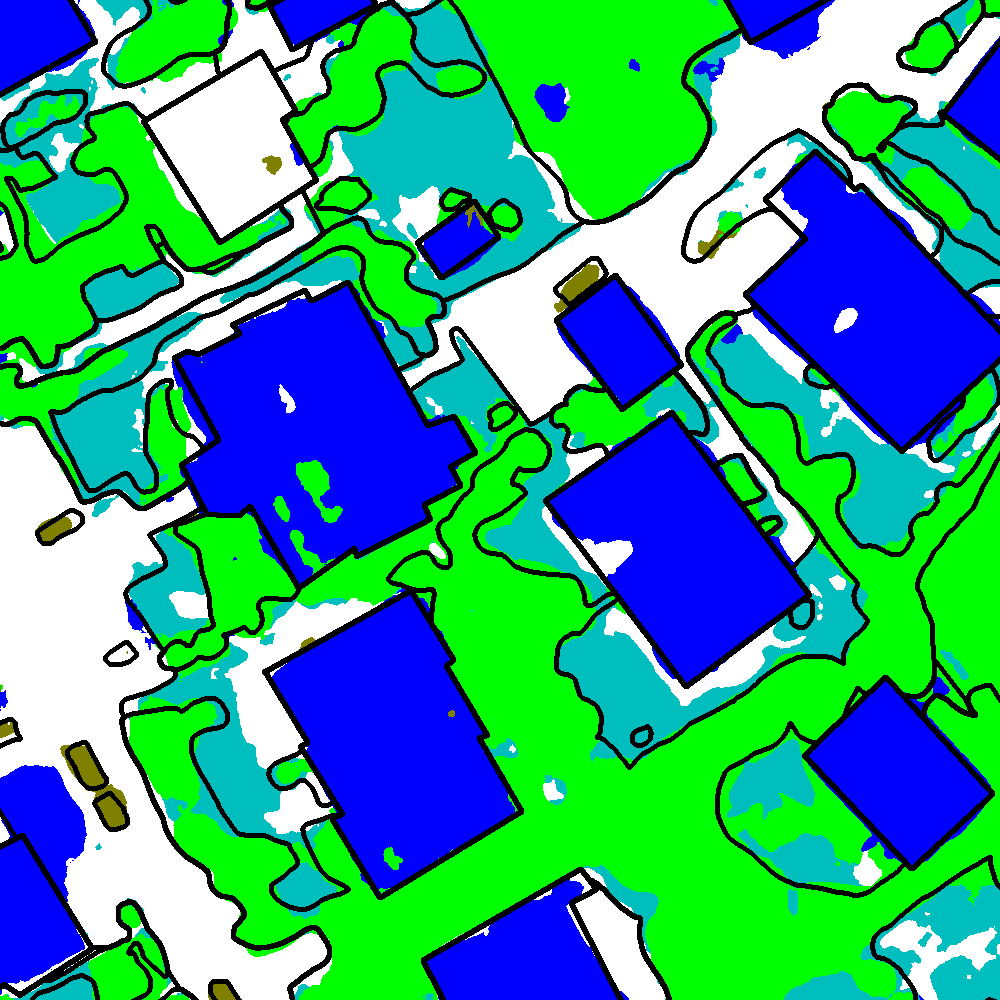

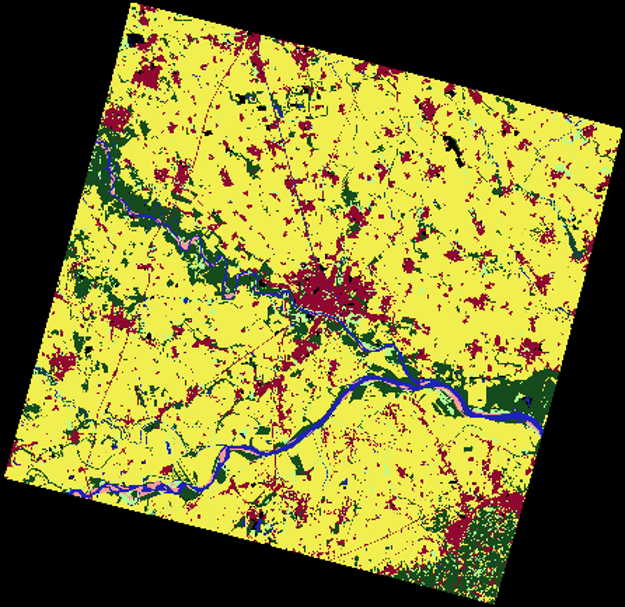

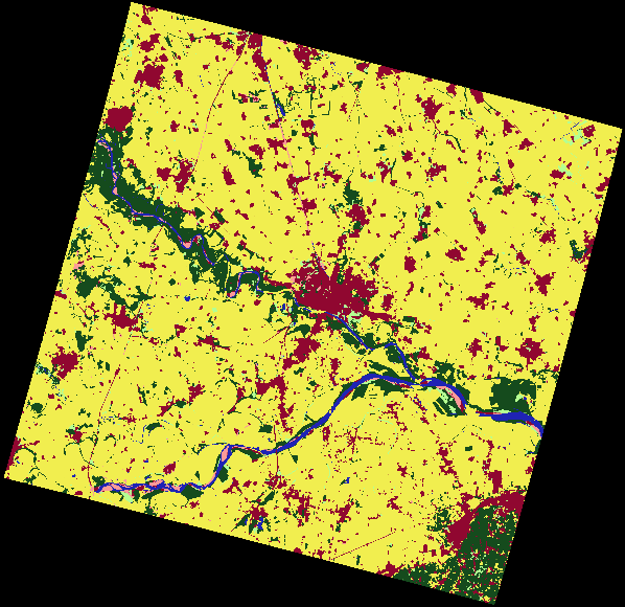

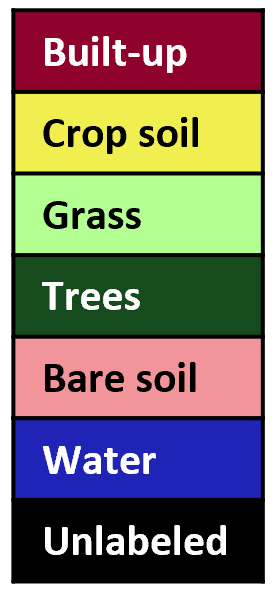

While addressing the problem of semantic segmentation of remote sensing images with scarce input ground truth, a new technique has been developed in which the unary and pairwise potentials of a conditional random field (CRF) are automatically learned through a fully convolutional network, in order to mine the diverse semantics typical of remote sensing images 3. As the methodology is entirely based on DL architectures, it is non-parametric and capable of directly learning the statistics of the stochastic model from the input data. The family of CRF models that can be learned through the proposed approach is broad and flexible, as it includes all CRFs with up to pairwise potentials and a local smoothing condition, without restriction to any parametric family. In this work, the equivalence between the formulation of the proposed network and the local probability distribution predicted by a CRF is analytically proven. The model also explicitly takes multiresolution information into account, by manipulating the features extracted by the neural network at different resolutions in a global-to-local information pyramid that captures both coarse-scale semantic knowledge and fine-scale details. The experiments with extremely high-resolution aerial imagery have confirmed the effectiveness of the developed approach and its advantage over benchmark DL models, especially in the case of scarce input training maps. The method was tested on the ISPRS 2D Semantic Labeling Challenge Vaihingen and Potsdam datasets and on the IEEE GRSS 2015 DFC Zeebruges dataset, after modifying the ground truths to approximate those found in realistic remote sensing applications, which are characterized by scarce and spatially non-exhaustive annotations. The results (see Fig. 2) confirm the effectiveness of the proposed technique for the semantic segmentation of remote sensing images. This method was published in IEEE TGRS 3.

Legend (Fig. 2): Buildings (blue), Impervious surfaces, Low vegetation, Trees (green), Cars, Unlabeled.

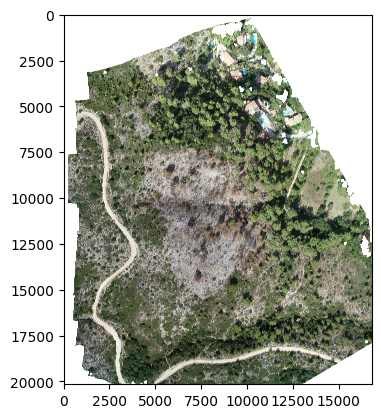

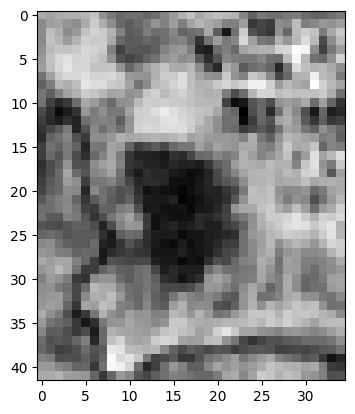

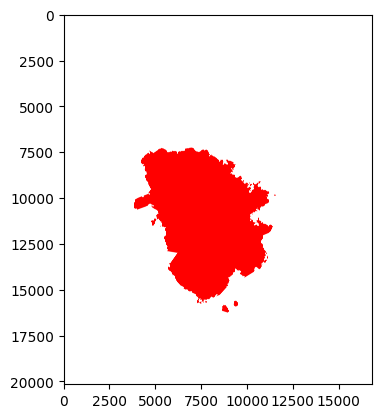

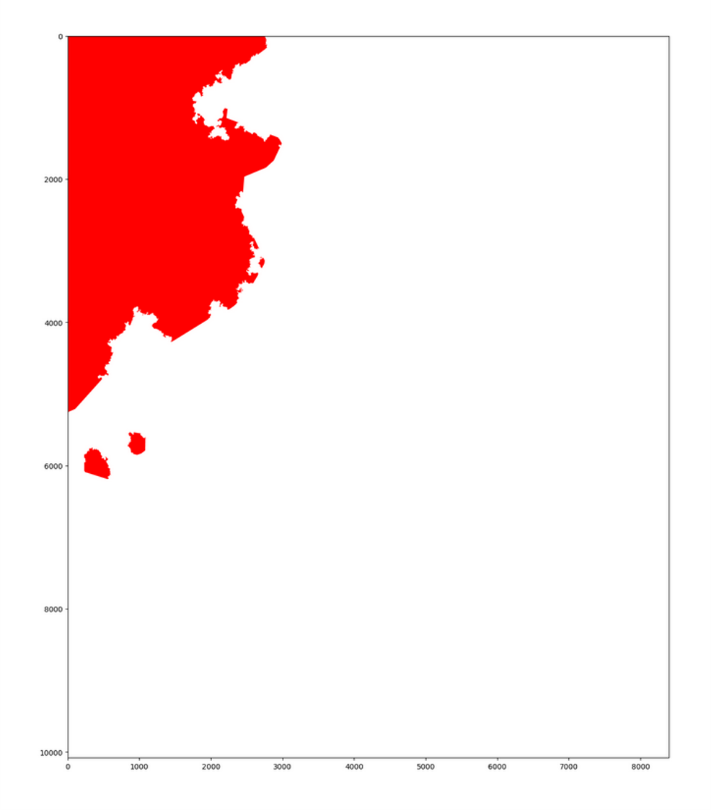
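The pairwise-CRF formulation described above can be illustrated by the local conditional distribution of one pixel on a 4-connected grid, P(x_i = c | neighbors, data) ∝ exp(-U_i(c) - sum_j V(c, x_j)). In the team's method both U and V are learned by the FCN; in this minimal sketch they are fixed NumPy arrays, and the Potts-type pairwise table, the grid neighborhood and the `local_conditional` helper are assumptions made for illustration.

```python
import numpy as np


def local_conditional(unary, labels, pairwise, i, j):
    """Local CRF conditional P(x_ij = c | 4-neighbours, data) for every class c.

    unary:    (H, W, C) unary potentials U_ij(c) (lower = more likely)
    labels:   (H, W) int array with the current labels of all pixels
    pairwise: (C, C) pairwise potentials V(c, c') between neighbouring labels
    """
    H, W, _ = unary.shape
    energy = unary[i, j].astype(float)  # astype returns a copy
    for di, dj in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        ni, nj = i + di, j + dj
        if 0 <= ni < H and 0 <= nj < W:
            # Column V(., x_neighbour): cost of each candidate label c
            energy += pairwise[:, labels[ni, nj]]
    # Gibbs form p(c) ∝ exp(-energy(c)); subtract the minimum for stability
    p = np.exp(-(energy - energy.min()))
    return p / p.sum()


if __name__ == "__main__":
    unary = np.zeros((3, 3, 2))               # uninformative data term
    labels = np.ones((3, 3), dtype=int)       # all neighbours currently in class 1
    potts = np.array([[0.0, 1.0], [1.0, 0.0]])
    print(local_conditional(unary, labels, potts, 1, 1))  # class 1 strongly favoured
```

Replacing the hand-set `unary` and `pairwise` arrays with network outputs is, in spirit, what learning the potentials through an FCN amounts to.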

7.3 A Probabilistic Fusion Framework for Burnt zones mapping from multimodal satellite and UAV imagery

Participants: Martina Pastorino, Josiane Zerubia.

External collaborators: Gabriele Moser [University of Genoa, DITEN dept., Professor], Sebastiano Serpico [University of Genoa, DITEN dept., Professor], Fabien Guerra [INRAE, RECOVER, Aix-Marseille University].

Keywords: image processing, stochastic models, deep learning, satellite images, UAV imagery.

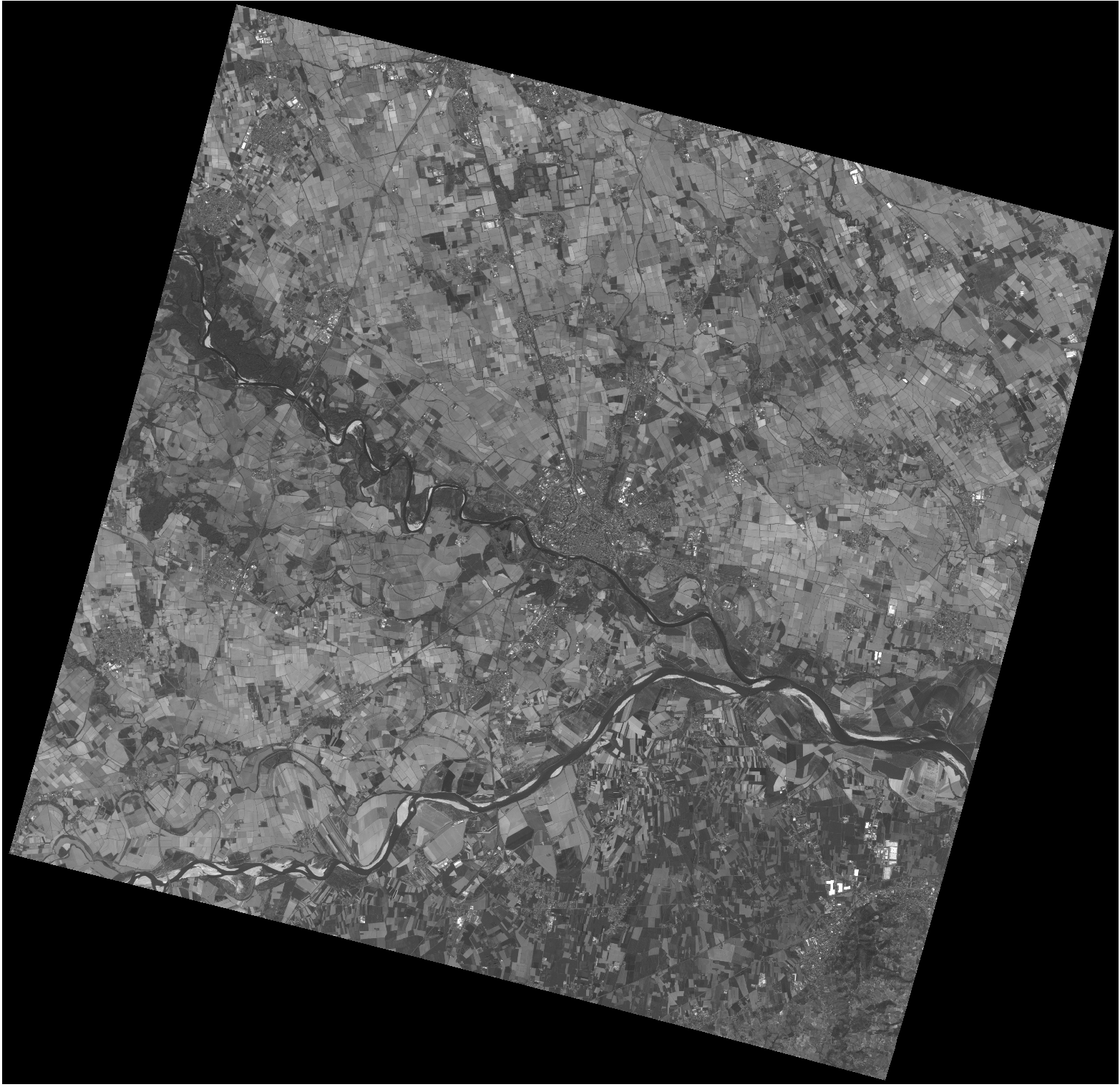

Semantic segmentation has also been addressed in the framework of natural disaster management applications, specifically to detect zones affected by forest fires. In this context, on the one hand, satellite images provide a synoptic view, although with limited spatial detail and possibly affected by haze and smoke. On the other hand, images collected by unmanned aerial vehicles (UAVs, drones) achieve spatial resolutions of a few centimeters and are mostly unaffected by smoke and haze, but intrinsically cover a smaller geographical area. The proposed approach addresses the fusion of these two data modalities for fire scar mapping. The resulting multiresolution fusion task is especially challenging because the ratio between the involved spatial resolutions is extremely large (e.g., 10 m for Sentinel-2 vs. 5 cm for a UAV), a situation that is normally not addressed by State-of-the-Art multiresolution schemes. Two novel Bayesian approaches combining deep fully convolutional networks, ensemble learning, decision fusion, and hierarchical PGMs have been proposed. The experimental validation, conducted in collaboration with forest science experts from INRAE (Institut national de recherche pour l'agriculture, l'alimentation et l'environnement) on two case studies associated with wildfires in Provence (see Fig. 3), suggested that the developed approaches can benefit from both remote sensing image modalities to achieve high detection performance.

The proposed methods have been published at the IGARSS'24 11 and ICPR'24 12 conferences; a journal paper has been submitted to IEEE TGRS 29.
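The resolution gap quoted above is easy to quantify: 10 m / 5 cm = 200, so a single Sentinel-2 pixel covers 200 × 200 = 40,000 UAV pixels. The sketch below shows one elementary way to bring the two modalities onto a common grid and fuse their class posteriors: block-averaging the UAV posteriors onto the satellite grid, then applying a product rule under equal class priors and conditional independence of the sources. This is a textbook decision-fusion baseline chosen for illustration (requires NumPy), not the Bayesian and hierarchical-PGM methods proposed in the papers; the function names are hypothetical.

```python
import numpy as np

SENTINEL2_RES_M = 10.0
UAV_RES_M = 0.05
RATIO = round(SENTINEL2_RES_M / UAV_RES_M)  # 200 UAV pixels per Sentinel-2 pixel side


def block_average(post_fine, factor):
    """Average (H*factor, W*factor, C) fine-scale posteriors over factor x factor blocks."""
    Hf, Wf, C = post_fine.shape
    H, W = Hf // factor, Wf // factor
    blocks = post_fine[:H * factor, :W * factor].reshape(H, factor, W, factor, C)
    return blocks.mean(axis=(1, 3))


def product_rule(post_a, post_b, eps=1e-12):
    """Fuse two posteriors: p(c | a, b) proportional to p(c | a) * p(c | b).

    Valid when the sources are conditionally independent given the class and
    the class priors are equal; eps avoids an all-zero product.
    """
    fused = (post_a + eps) * (post_b + eps)
    return fused / fused.sum(axis=-1, keepdims=True)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy example with a factor of 4 instead of RATIO to keep arrays small.
    uav = rng.dirichlet([1.0, 1.0], size=(8, 8))  # (8, 8, 2) burnt / unburnt posteriors
    sat = rng.dirichlet([1.0, 1.0], size=(2, 2))  # (2, 2, 2)
    fused = product_rule(sat, block_average(uav, 4))
    print(fused.shape)  # (2, 2, 2)
```

Block-averaging discards most of the UAV detail, which is precisely why such a naive scheme is a weak baseline at a 200:1 ratio.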

7.4 Multiresolution Fusion and Segmentation of Hyperspectral and Panchromatic Remote Sensing Images with deep learning and probabilistic graphical models

Participants: Martina Pastorino, Josiane Zerubia.

External collaborators: Gabriele Moser [University of Genoa, DITEN dept., Professor], Sebastiano Serpico [University of Genoa, DITEN dept., Professor].

Keywords: image processing, stochastic models, deep learning, satellite images, hyperspectral imagery.

This work proposes a supervised method for the joint classification and fusion of multiresolution panchromatic and hyperspectral data based on the combination of probabilistic graphical models (PGMs) and deep learning methods.

The idea is to exploit the spatial and spectral information contained in panchromatic and hyperspectral images at different resolutions with the aim to generate a classification map at the spatial resolution of the panchromatic channel, while exploiting the richness of the spectral information provided by the hyperspectral channels.

The proposed technique is based on deep learning, with FCN-type architectures, and PGMs, through the definition of a conditional random field (CRF) model approximating the behavior of the ideal fully connected CRF in a computationally tractable manner. The neural architecture aims to integrate hyperspectral and panchromatic data at the corresponding spatial resolution and generate posterior probability estimates, while the CRF incorporates information associated with not only local but also long-distance spatio-spectral relationships.

The algorithm has been experimentally validated with PRISMA data in the framework of a project with the Italian Space Agency with promising results (see Fig. 4).

The proposed method has been accepted for the GeoCV Workshop at WACV'25 30.
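For reference, the "ideal" fully connected CRF mentioned above couples every pair of pixels. The naive mean-field update sketched below (Potts compatibility and a single Gaussian kernel on per-pixel feature vectors, in the spirit of the classical dense-CRF formulation) makes the quadratic cost explicit: the N × N kernel matrix is exactly what a computationally tractable approximation must avoid. The kernel form, its parameters `w` and `theta`, and the feature choice are assumptions; this is not the method proposed in the paper.

```python
import numpy as np


def dense_crf_mean_field(unary, feats, w=1.0, theta=1.0, n_iter=5):
    """Naive mean-field inference for a fully connected CRF with Potts compatibility.

    unary: (N, C) unary potentials (lower = more likely)
    feats: (N, D) per-pixel feature vectors (e.g. position and spectral values)
    Time and memory are O(N^2) because every pixel pair interacts.
    """
    d2 = ((feats[:, None, :] - feats[None, :, :]) ** 2).sum(-1)  # (N, N) squared distances
    K = w * np.exp(-d2 / (2.0 * theta ** 2))                     # Gaussian pairwise kernel
    np.fill_diagonal(K, 0.0)                                     # no self-interaction

    def normalize(energy):
        q = np.exp(-(energy - energy.min(axis=1, keepdims=True)))
        return q / q.sum(axis=1, keepdims=True)

    Q = normalize(unary)
    for _ in range(n_iter):
        msg = K @ Q  # msg[i, c] = sum_j k_ij Q_j(c)
        # Potts: label c at i pays k_ij whenever j takes another label,
        # i.e. sum_j k_ij (1 - Q_j(c)) = sum_j k_ij - msg[i, c]
        energy = unary + K.sum(axis=1, keepdims=True) - msg
        Q = normalize(energy)
    return Q


if __name__ == "__main__":
    unary = np.array([[0.0, 3.0], [0.0, 3.0], [0.0, 0.0]])  # last pixel undecided
    feats = np.array([[0.0], [0.1], [0.2]])
    print(dense_crf_mean_field(unary, feats))  # last pixel pulled towards class 0
```

With `w=0` the pairwise term vanishes and the output reduces to a per-pixel softmax of the negated unaries.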

7.5 Learning stochastic geometry models and convolutional neural networks: Application to multiple object detection in aerospatial data sets.

Participants: Jules Mabon, Josiane Zerubia.

External collaborators: Mathias Ortner [Airbus Defense and Space, Senior Data Scientist].

Keywords: object detection, deep learning, fully convolutional network, stochastic models, energy based models.

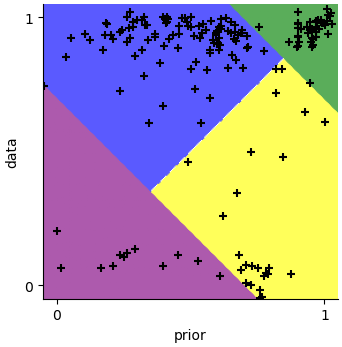

Convolutional neural networks (CNNs) have shown strong results on object-detection tasks by learning texture- and pattern-extraction filters. However, object-level interactions are harder to capture without increasing the complexity of the architectures. Point Process models, on the other hand, address the detection of the configuration of objects as a whole, which makes it possible to account for both the image data and the objects' prior interactions. In our work, we propose to combine the information extracted by a CNN with priors on objects within a Markov Marked Point Process framework. We also propose a method to learn the parameters of this Energy-Based Model. The Point Process makes it possible to build a lightweight interaction model, while the CNN efficiently extracts meaningful information from the image, in a context where interaction priors can complement the limited visual information. More specifically, we present matching parameter-estimation and scoring procedures that take object interactions into account (see Fig. 5). The method provides good results both on benchmark data and on data provided by Airbus Defense and Space, along with a degree of interpretability of the output.

This work has been published in Remote Sensing 2. See also the EUSIPCO'24 paper 8.

Figure 5: Inferred configuration on an Airbus Defense and Space data sample (a), with detections colored according to their prior and data scores (b). Each point in (b) corresponds to one detection in the prior/data score space (log values, rescaled).
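A minimal sketch of the kind of energy minimized by the Markov Marked Point Process model described above is given below: each object (here a disc) carries a data term standing in for the CNN output, and each pair of objects pays an overlap penalty acting as the interaction prior. The disc shape, the linear overlap penalty, the `Disc` class and the fixed weight `w_prior` are illustrative assumptions; in the published work the parameters of the energy are learned from data rather than set by hand.

```python
import math
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Disc:
    x: float
    y: float
    r: float
    data_term: float  # hypothetical CNN-derived energy: lower = better supported by the image


def overlap_penalty(a, b):
    """Interaction prior: 0 for disjoint discs, rising linearly to 1 as centers coincide."""
    d = math.hypot(a.x - b.x, a.y - b.y)
    return max(0.0, 1.0 - d / (a.r + b.r))


def configuration_energy(objects, w_prior=1.0):
    """Energy of a whole configuration = sum of data terms + weighted pairwise priors."""
    data = sum(o.data_term for o in objects)
    prior = sum(overlap_penalty(a, b) for a, b in combinations(objects, 2))
    return data + w_prior * prior


if __name__ == "__main__":
    car = Disc(0.0, 0.0, 1.0, -1.0)
    duplicate = Disc(0.0, 0.0, 1.0, -1.0)
    neighbour = Disc(5.0, 0.0, 1.0, -1.0)
    print(configuration_energy([car], w_prior=2.0))             # -1.0
    print(configuration_energy([car, duplicate], w_prior=2.0))  # 0.0: duplicate rejected
    print(configuration_energy([car, neighbour], w_prior=2.0))  # -2.0: both kept
```

The example shows why a configuration-level energy helps: a duplicated detection has good image support on its own, yet the interaction prior makes the configuration containing it worse than the one without it.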

7.6 Unsupervised Winter/Summer Crop Classification Methods based on FPAR Image Time Series for Drought Risk Monitoring

Participants: Priscilla Indira Osa, Josiane Zerubia.

External collaborators: Gabriele Moser [University of Genoa, DITEN dept., Professor], Sebastiano Serpico [University of Genoa, DITEN dept., Professor].

Keywords: clustering, unsupervised crop classification, FPAR, drought monitoring.

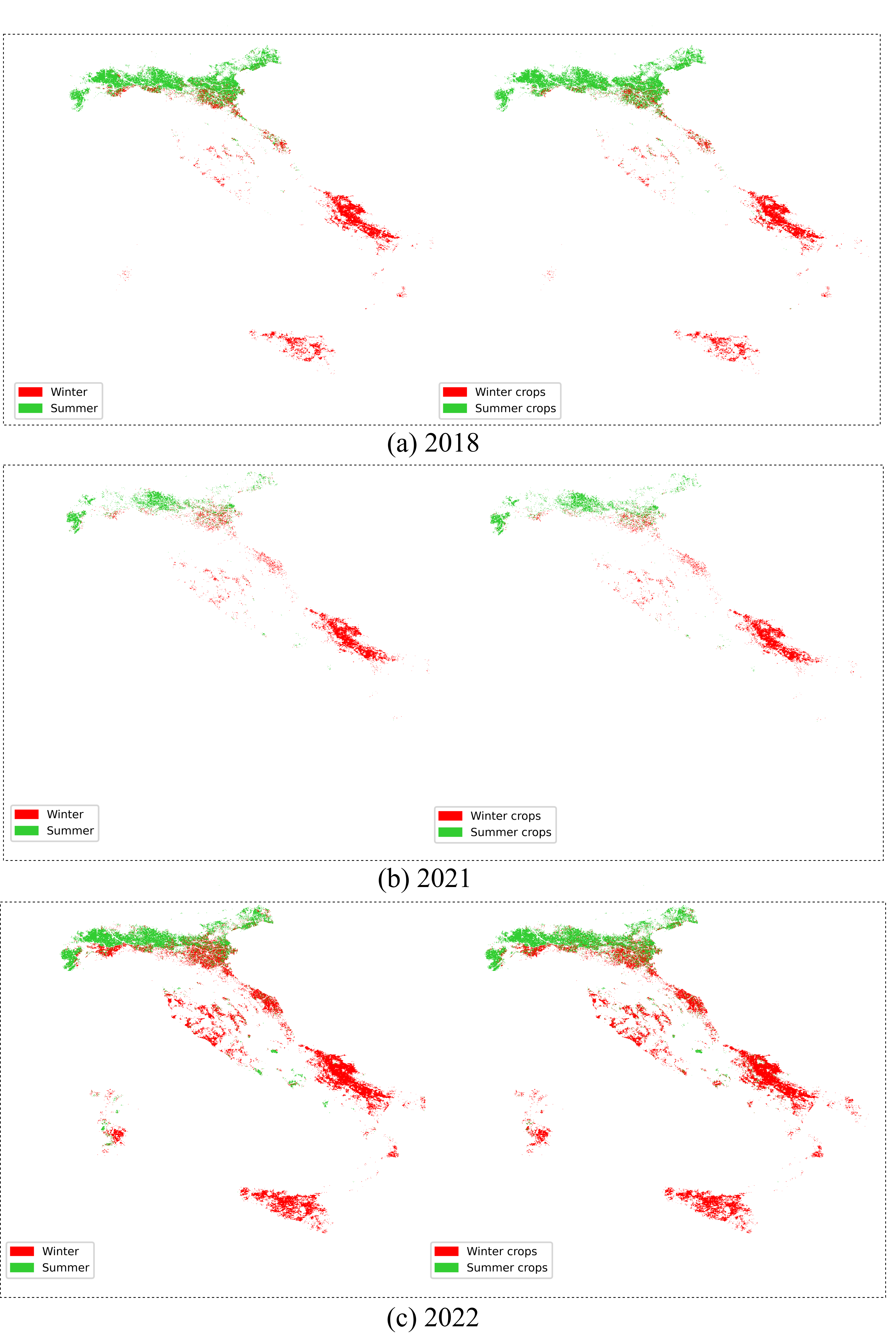

Crops are one of the drought risk indicators, as the phenological cycle and health of crops reflect the availability of water in the soil. This work examines different unsupervised crop classification methods for remote sensing images in the context of drought risk monitoring. The classification task is framed as a clustering task whose aim is to assign the image pixels to two categories: winter and summer crops. The input data is a vegetation index called Fraction of Photosynthetically Active Radiation (FPAR), which is stacked to form annual image time series. We chose several clustering methods, ranging from classical clustering algorithms, such as k-means and the Gaussian Mixture Model (GMM), to deep clustering models based on Autoencoders (AE), Variational Autoencoders (VAE), and Recurrent Neural Networks (RNN). The crop classification task is run over the whole of Italy, and the performance of all algorithms is validated using three different publicly available crop maps 39, 36, 38 for three different years (2018, 2021, and 2022). Fig. 6 compares, for each year, the validation map (left) with the clustering map (right); the clustering maps shown are those of the best-performing algorithm in each year. The results indicate that clustering methods based on FPAR time series are good candidates for drought risk monitoring.

The study was conducted within the RETURN Extended Partnership and received funding from the European Union NextGenerationEU. This research work has been submitted to the IEEE International Geoscience and Remote Sensing Symposium (IGARSS) 2025.
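As an illustration of the simplest baseline in this comparison, the sketch below runs k-means with k = 2 on per-pixel FPAR time series (requires NumPy). The monthly sampling, the synthetic bell-shaped winter and summer profiles, the farthest-point initialization and the reading of "earlier peak = winter crops" are assumptions made for the example; they are not the data or settings of the study, which also evaluates GMM and AE-, VAE- and RNN-based deep clustering.

```python
import numpy as np


def kmeans(X, k=2, n_iter=100):
    """Plain k-means (Lloyd iterations) with deterministic farthest-point initialization.

    X: (n_pixels, n_dates) array, one FPAR time series per pixel.
    """
    centers = [X[0]]
    for _ in range(1, k):
        d = np.min([((X - c) ** 2).sum(1) for c in centers], axis=0)
        centers.append(X[d.argmax()])  # next center: point farthest from current centers
    centers = np.array(centers, dtype=float)
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(-1)
        labels = dist.argmin(1)
        new = np.array([X[labels == c].mean(0) if np.any(labels == c) else centers[c]
                        for c in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels, centers


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    months = np.arange(12)
    winter = 0.7 * np.exp(-(months - 3) ** 2 / (2 * 1.5 ** 2))  # hypothetical spring peak
    summer = 0.7 * np.exp(-(months - 7) ** 2 / (2 * 1.5 ** 2))  # hypothetical summer peak
    X = np.vstack([winter + 0.03 * rng.standard_normal((20, 12)),
                   summer + 0.03 * rng.standard_normal((20, 12))])
    labels, centers = kmeans(X, k=2)
    # Heuristic naming: the cluster whose mean profile peaks earlier ~ winter crops
    print(labels, centers.argmax(1))
```

Since clustering labels are arbitrary, some rule such as the timing of the mean profile's peak is needed to name the clusters before comparing them with a reference crop map.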

7.7 Gabor Feature Network for Transformer-based Building Change Detection Model in Remote Sensing

Participants: Priscilla Indira Osa, Josiane Zerubia.

External collaborators: Zoltan Kato [University of Szeged, Institute of Informatics, Professor].

Keywords: transformer, Gabor feature, building change detection, remote sensing, image analysis.

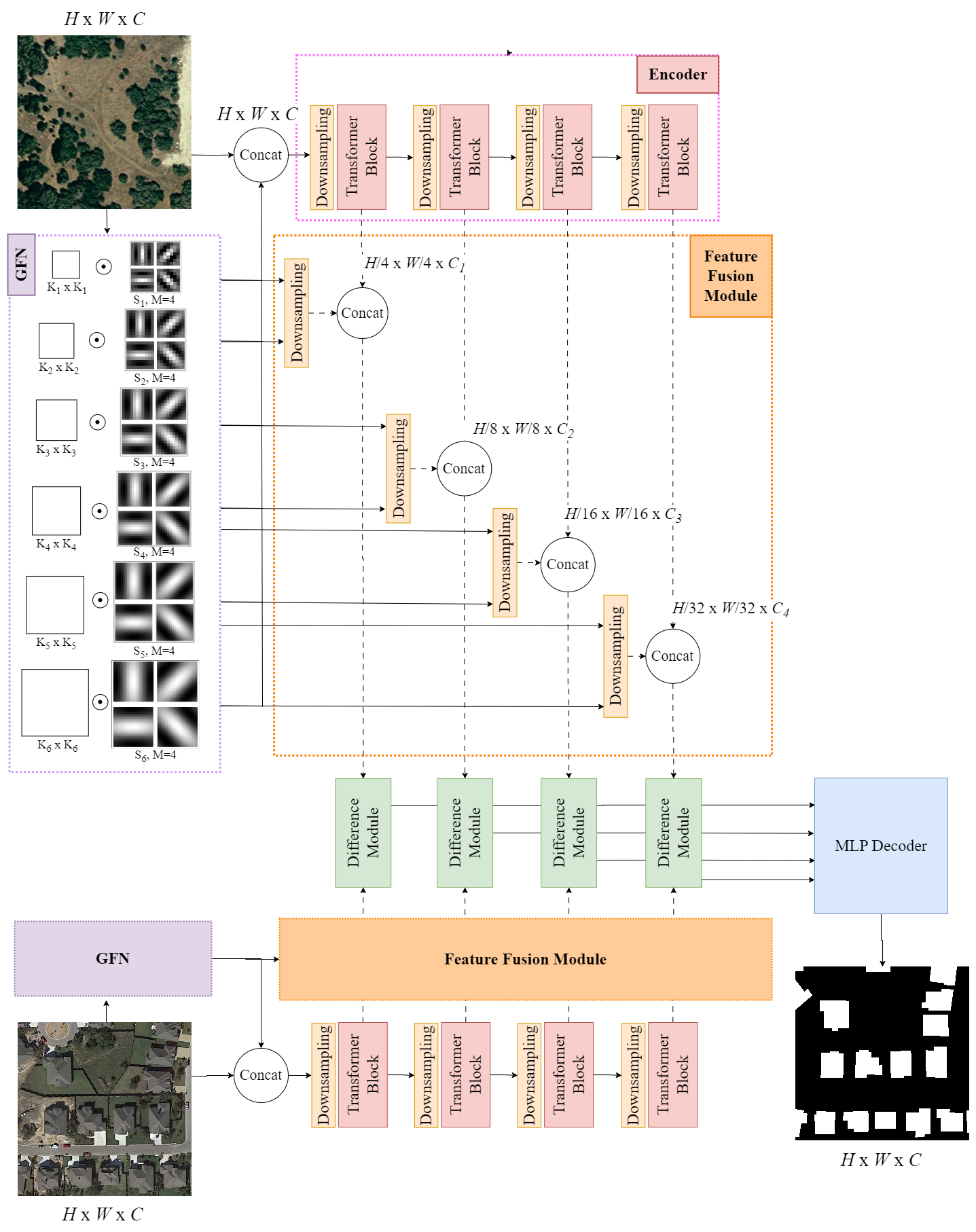

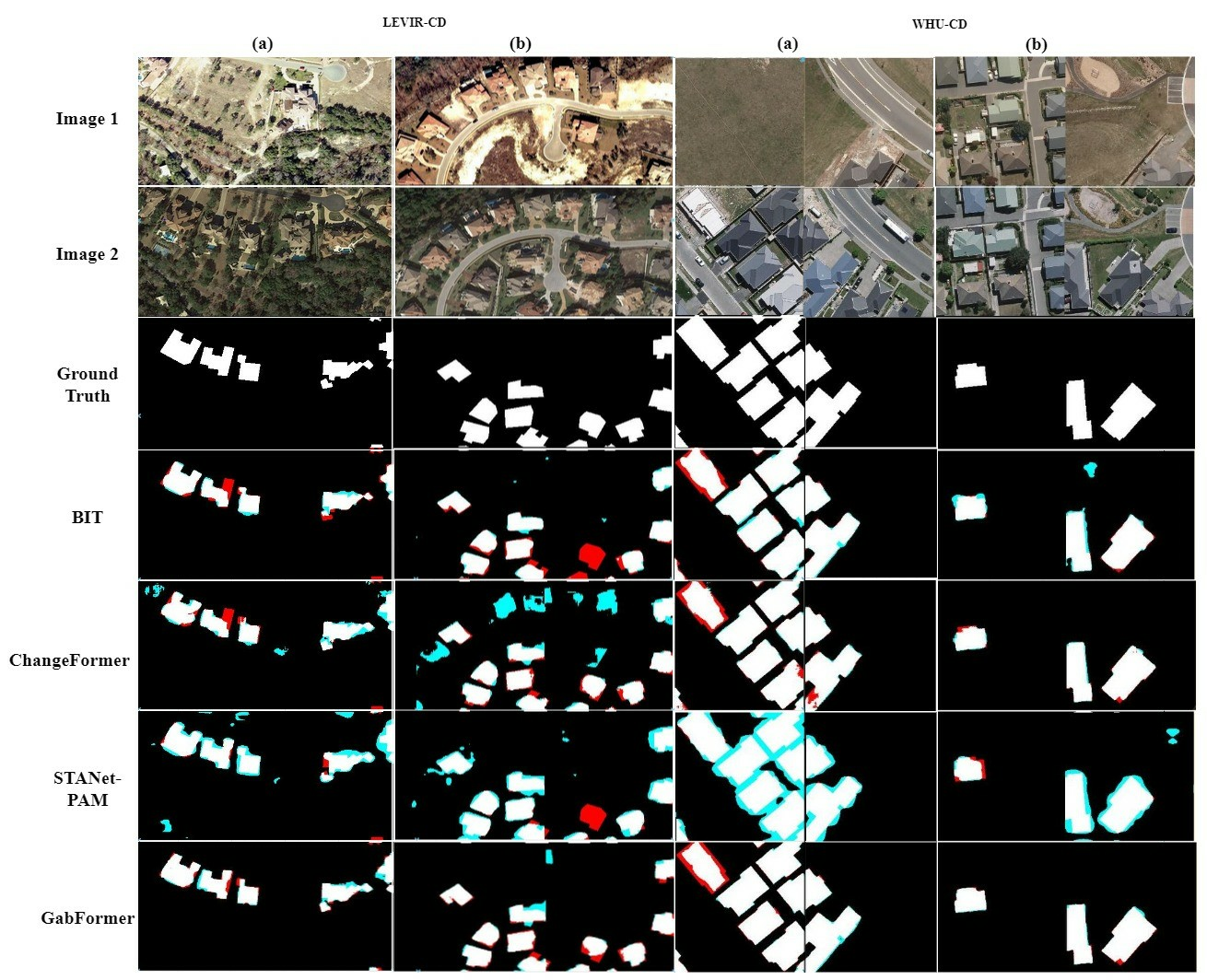

One of the characteristics of buildings in remote sensing (RS) imagery is their texture, which is relatively repetitive compared to other objects or land cover types. Using this prior knowledge, a Gabor Feature Network (GFN) was proposed and integrated into a Transformer-based architecture for the Building Change Detection (BCD) task. GFN is based on Gabor filters 23 used to modulate learnable convolutional filters, which combines the excellent texture-extraction ability of Gabor filters with the learning capability of deep networks 27. The incorporation of GFN into a Transformer-based BCD model is expected to bring two benefits: 1) to strengthen the model's ability to extract building texture features, which also addresses one of the challenges of the BCD task, i.e., highlighting changes in buildings while ignoring those caused by other objects or by sensing conditions; and 2) to help the Transformer-based network learn from RS data, which generally comprise fewer images than common computer vision datasets, since Transformers may fail to learn some particular features when training data are insufficient 32.

Fig. 7 shows the overall architecture of the model. GFN performs feature extraction over several scales and orientations, and the Feature Fusion Module combines the features extracted by GFN and by the Transformer-based encoder at different resolutions. The model was evaluated on two benchmark BCD datasets: LEVIR-CD 18 and WHU-CD 25. Fig. 8 shows that the proposed model produces better output change maps than other State-of-the-Art (SOTA) models: STANet-PAM 18, BIT 17, and ChangeFormer 15.

This research work was presented at the IEEE International Conference on Image Processing (ICIP) 2024 in Abu Dhabi, UAE 10.
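The texture prior described above relies on the classical real-valued Gabor kernel g(x, y) = exp(-(x'² + γ² y'²) / (2σ²)) · cos(2π x'/λ + ψ), with x' = x cos θ + y sin θ and y' = -x sin θ + y cos θ. The sketch below builds such kernels with NumPy and, following our reading of the Gabor-modulated convolution of 27, multiplies a learnable k × k filter element-wise by Gabor kernels at several orientations. The parameter values and the `gabor_modulate` helper are assumptions; the exact parameterization used in GFN may differ.

```python
import numpy as np


def gabor_kernel(size, sigma, theta, lambd, gamma=0.5, psi=0.0):
    """Real part of a 2-D Gabor filter sampled on a size x size grid (size odd)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_t = x * np.cos(theta) + y * np.sin(theta)   # coordinates rotated by theta
    y_t = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_t ** 2 + gamma ** 2 * y_t ** 2) / (2 * sigma ** 2))
    carrier = np.cos(2 * np.pi * x_t / lambd + psi)
    return envelope * carrier


def gabor_modulate(weight, n_orient=4, sigma=2.0, lambd=4.0):
    """Element-wise modulation of one learnable k x k filter by Gabor kernels at
    orientations theta = pi * u / n_orient, giving n_orient oriented filters."""
    k = weight.shape[-1]
    bank = np.stack([gabor_kernel(k, sigma, np.pi * u / n_orient, lambd)
                     for u in range(n_orient)])
    return weight[None, :, :] * bank


if __name__ == "__main__":
    learnable = np.random.default_rng(0).standard_normal((7, 7))  # stands in for trained weights
    oriented = gabor_modulate(learnable, n_orient=4)
    print(oriented.shape)  # (4, 7, 7)
```

In a network, only `learnable` would be updated by back-propagation, while the Gabor bank injects a fixed multi-orientation texture prior; covering several scales would simply mean repeating this with different `sigma` and `lambd`.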

7.8 AYANet: A Gabor Wavelet-based and CNN-based Double Encoder for Building Change Detection in Remote Sensing

Participants: Priscilla Indira Osa, Josiane Zerubia.

External collaborators: Zoltan Kato [University of Szeged, Institute of Informatics, Professor].

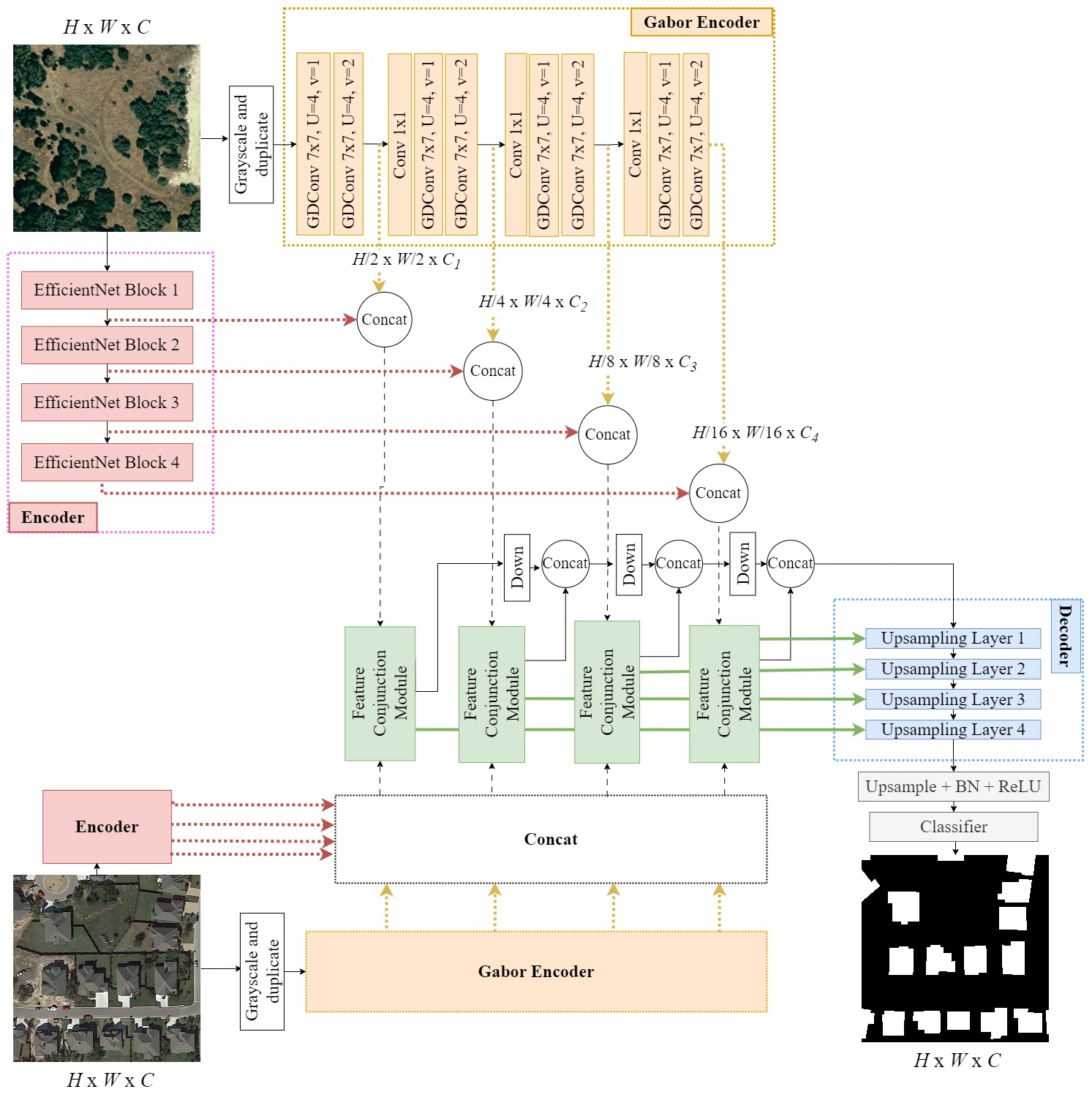

Keywords: Gabor wavelet, convolutional neural network, building change detection, remote sensing.

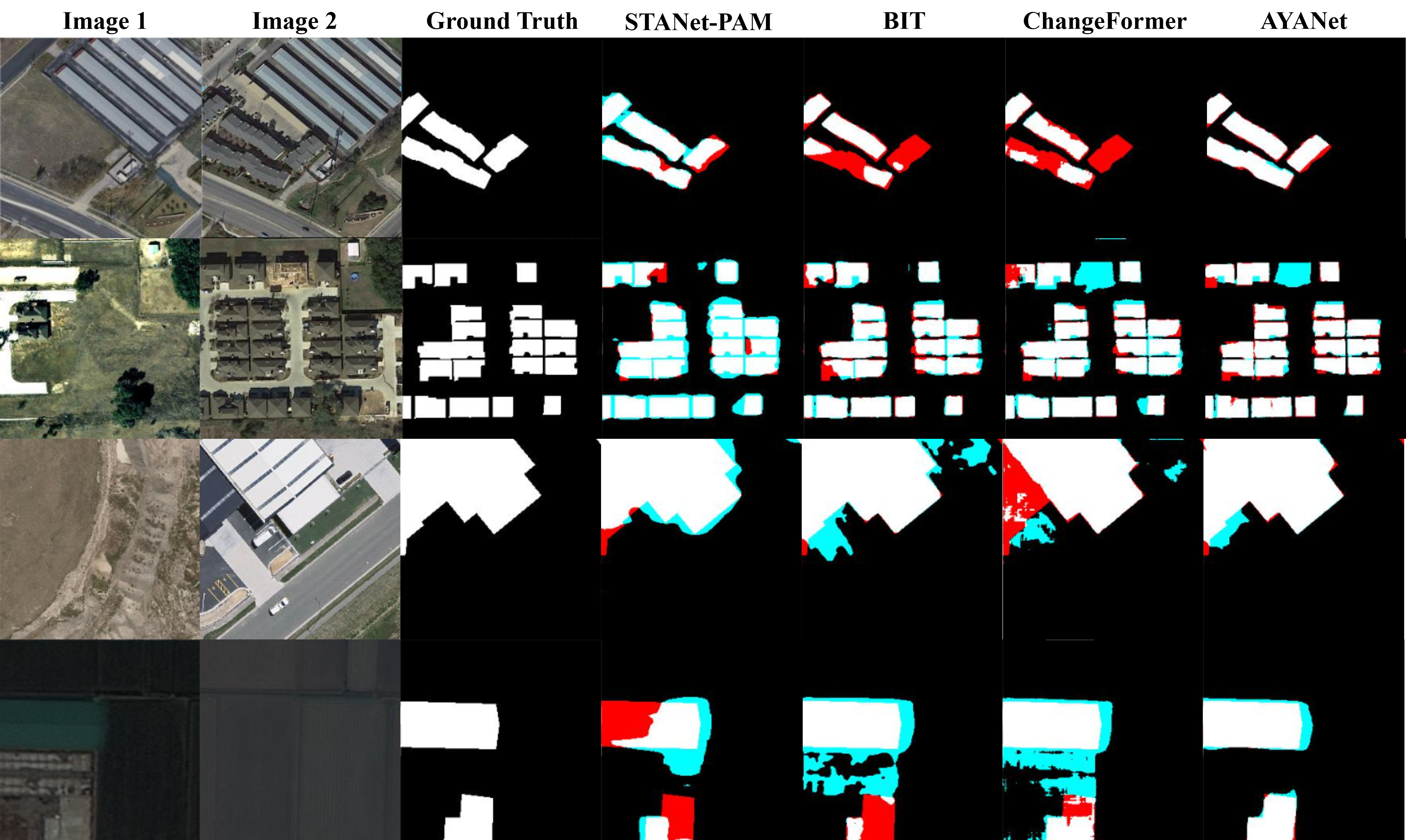

In this work, we proposed a bi-temporal BCD model with a double-encoder architecture as the feature extractor. Fig. 9 shows the overall design of AYANet, the proposed BCD model. The double encoder consists of 1) a Gabor Encoder, which aims to extract the texture of buildings, as buildings are distinguishable from other objects in RS images by their repetitive features when observed from a bird's-eye view, and 2) a CNN-based Encoder, made of building blocks of EfficientNet-B7 35, whose task is to extract other high-level features. The Gabor Encoder is built on Gabor filters integrated into CNN filters 27, but the standard convolution is replaced by depthwise convolution 19, forming what is denoted GDConv in Fig. 9. GDConv layers are stacked so that the Gabor Encoder extracts multi-resolution features at different scales and orientations. Furthermore, a Feature Conjunction Module was proposed to emphasize the differences between the features of the image pair.

The performance of the proposed model was compared with several SOTA methods on three BCD datasets (LEVIR-CD 18, WHU-CD 25, and S2Looking 34). Fig. 10 shows samples of the qualitative comparison between AYANet and three SOTA models chosen to represent pure CNN models (STANet-PAM 18), hybrid CNN-Transformer methods (BIT 17), and pure Transformer models (ChangeFormer 15). The results suggest that the proposed model captures changes in buildings better than the SOTA models considered in the study.

This work was presented at the International Conference on Pattern Recognition (ICPR) 2024 in Kolkata, India 9.
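Depthwise convolution, used in GDConv, filters each channel with its own kernel instead of mixing all input channels, which sharply reduces the number of weights: with 64 channels and 7 × 7 kernels, a standard convolution has 64 × 64 × 49 = 200,704 weights, against 64 × 49 = 3,136 for a depthwise one. The sketch below is a plain NumPy depthwise layer into which Gabor (or Gabor-modulated) kernels could be plugged; the channel count, kernel size and function names are illustrative assumptions, not AYANet's actual configuration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def depthwise_conv2d(x, kernels):
    """'Valid' depthwise 2-D cross-correlation: channel c of x is filtered only by kernels[c].

    x: (C, H, W) feature maps; kernels: (C, k, k) -> output (C, H - k + 1, W - k + 1).
    """
    k = kernels.shape[-1]
    windows = sliding_window_view(x, (k, k), axis=(1, 2))  # (C, H-k+1, W-k+1, k, k)
    return np.einsum("chwij,cij->chw", windows, kernels)


def param_counts(channels, k):
    """Weights of a standard conv (C_in = C_out = channels) vs. a depthwise conv, no biases."""
    return channels * channels * k * k, channels * k * k


if __name__ == "__main__":
    x = np.random.default_rng(0).standard_normal((3, 32, 32))
    kernels = np.random.default_rng(1).standard_normal((3, 7, 7))
    print(depthwise_conv2d(x, kernels).shape)  # (3, 26, 26)
    print(param_counts(64, 7))                 # (200704, 3136)
```

Because no information flows between channels in a depthwise layer, such layers are usually followed by a 1 × 1 (pointwise) convolution when channel mixing is needed.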

7.9 Generalization of Single-Linkage with higher-order interactions

Participants: Louis Hauseux, Konstantin Avrachenkov, Josiane Zerubia.

Keywords: hierarchical clustering, density-based clustering, geometric graphs, high-order interactions, simplicial complexes, percolation.

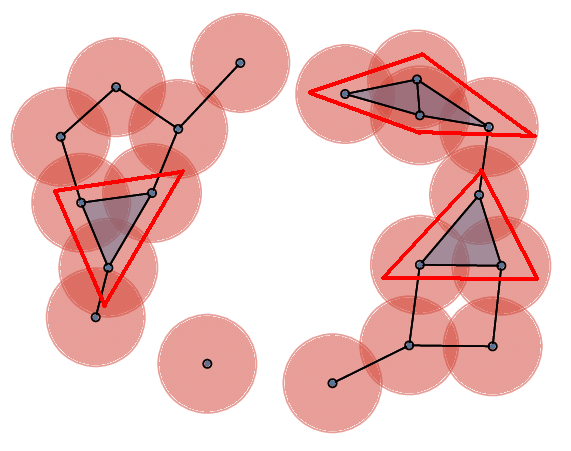

Single-Linkage 21, 24 is a classical clustering algorithm. It is usually defined as follows: it performs hierarchical clustering by iteratively merging the two closest clusters. In practice, this is equivalent to extracting the Minimum Spanning Tree (MST) of the data: the merge heights of the resulting dendrogram are the MST edge lengths.

Since its introduction, the Single-Linkage algorithm has flourished, and numerous improvements have been proposed. The State of the Art in clustering today is held by HDBSCAN 16, 28, which is essentially based on the Robust version of Single-Linkage.

It is worth noting that Single-Linkage can also be seen from two alternative points of view: 1) it performs persistent analysis on geometric graphs built on the data; 2) in doing so, it also computes the high-density clusters of the data for the 1-Nearest Neighbor density estimator.
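The second point of view can be checked directly. Assuming the usual 1-NN estimator f(x) = 1 / (n V_d d_1(x)^d), where d_1(x) is the distance from x to its nearest sample and V_d the volume of the unit ball in R^d, the level set {f ≥ λ} is the union of balls of radius ρ = (n V_d λ)^(-1/d) centred on the samples. Two such balls intersect exactly when their centres are at most 2ρ apart, so the high-density clusters at level λ are the connected components of the geometric graph with threshold 2ρ, i.e. a Single-Linkage cut. The Python sketch below (hypothetical names, quadratic cost) follows this reasoning.

```python
import math


def ball_volume(d):
    """Volume V_d of the unit ball in R^d."""
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1)


def level_set_radius(lam, n, d):
    """For f(x) = 1 / (n * V_d * d_1(x)**d), {f >= lam} is the union of the
    balls of this radius centred on the n samples."""
    return (1.0 / (n * ball_volume(d) * lam)) ** (1.0 / d)


def graph_components(points, threshold):
    """Component labels of the graph linking points at distance <= threshold."""
    n = len(points)
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(points[i], points[j]) <= threshold:
                parent[find(j)] = find(i)
    return [find(i) for i in range(n)]


def one_nn_clusters(points, lam):
    """High-density clusters of the 1-NN estimator at level lam: balls of
    radius rho overlap iff their centres are at most 2 * rho apart."""
    n, d = len(points), len(points[0])
    rho = level_set_radius(lam, n, d)
    return graph_components(points, 2 * rho)


if __name__ == "__main__":
    pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11)]
    for lam in (1.0, 0.05, 1e-4):
        print(lam, one_nn_clusters(pts, lam))
```

Lowering `lam` increases ρ, so components can only merge as the level decreases: this is the persistent analysis of point 1) seen through the density estimator.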

With these three equivalent points of view in mind, we found a natural way to generalize Single-Linkage with higher-order interactions. We used hypergraphs rather than geometric graphs, namely Čech complexes, together with a more restrictive notion of connected component, which we call a k-polyhedron. We then proved that k-polyhedra correspond to the high-density clusters of the k-Nearest Neighbor density estimator on the dataset. In practice, our algorithm can be implemented by identifying a certain minimum k-tree on the data.
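For reference, the k-Nearest Neighbor density estimator is commonly written f_k(x) = k / (n V_d r_k(x)^d), where r_k(x) is the distance from x to its k-th nearest sample; k = 1 gives back the 1-NN estimator associated with Single-Linkage. The short Python sketch below evaluates it at a query point. It only illustrates the estimator, not the construction of k-polyhedra or of the minimum k-tree, and the function names are hypothetical.

```python
import math


def unit_ball_volume(d):
    """Volume V_d of the unit ball in R^d."""
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1)


def knn_density(samples, x, k):
    """k-NN density estimate f_k(x) = k / (n * V_d * r_k(x)**d).

    r_k(x) is the distance from x to its k-th nearest sample; x should not
    coincide with a sample when k = 1, otherwise r_k(x) = 0.
    """
    n, d = len(samples), len(x)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of samples")
    r_k = sorted(math.dist(x, s) for s in samples)[k - 1]
    return k / (n * unit_ball_volume(d) * r_k**d)
```

Larger k smooths the estimate, because r_k(x) depends on more samples than the single nearest one.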

The case k = 2 corresponds to “triangle-connectivity” (Fig. 11). We developed geometric optimizations to compute this 2-generalization of Single-Linkage in low-dimensional Euclidean spaces. We show that k = 2 is already sufficient to outperform State of the Art methods, such as HDBSCAN, on several synthetic and real datasets.
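In a Čech complex built with balls of radius r, a triangle (2-simplex) is present when the three balls share a common point, which happens exactly when the minimum enclosing circle of the three centres has radius at most r. The Python sketch below computes this filtration value for a triangle in the plane. It is a generic geometric helper given for illustration, not the team's optimized implementation, and the function names are hypothetical.

```python
import math


def cech_triangle_radius(a, b, c):
    """Smallest r at which the closed disks of radius r centred at a, b and c
    have a common point, i.e. the radius of the minimum enclosing circle."""
    ab, bc, ca = math.dist(a, b), math.dist(b, c), math.dist(c, a)
    s0, s1, s2 = sorted((ab, bc, ca))
    # Right, obtuse or degenerate triangle: the longest side is a diameter
    # of the minimum enclosing circle.
    if s2 * s2 >= s0 * s0 + s1 * s1:
        return s2 / 2
    # Acute triangle: the minimum enclosing circle is the circumcircle,
    # with radius R = (product of the sides) / (4 * area).
    area = abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])) / 2
    return ab * bc * ca / (4 * area)


def triangle_in_cech(a, b, c, r):
    """True if the triangle abc belongs to the Čech complex of radius r."""
    return cech_triangle_radius(a, b, c) <= r
```

For comparison, a Vietoris-Rips complex would admit the same triangle as soon as r reaches half of its longest side, which is never later than the Čech value; the two coincide for right and obtuse triangles.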

These results were presented at the European Signal Processing Conference (EUSIPCO, Lyon) 5 and an article has been submitted to a journal.

7.10 Percolation: The key phenomenon in a family of clustering algorithms

Participants: Louis Hauseux, Konstantin Avrachenkov, Josiane Zerubia.

Keywords: hierarchical clustering, density-based clustering, geometric graphs, high-order interactions, simplicial complexes, percolation.

In Section 7.9, we explained how to properly generalize the Single-Linkage algorithm.

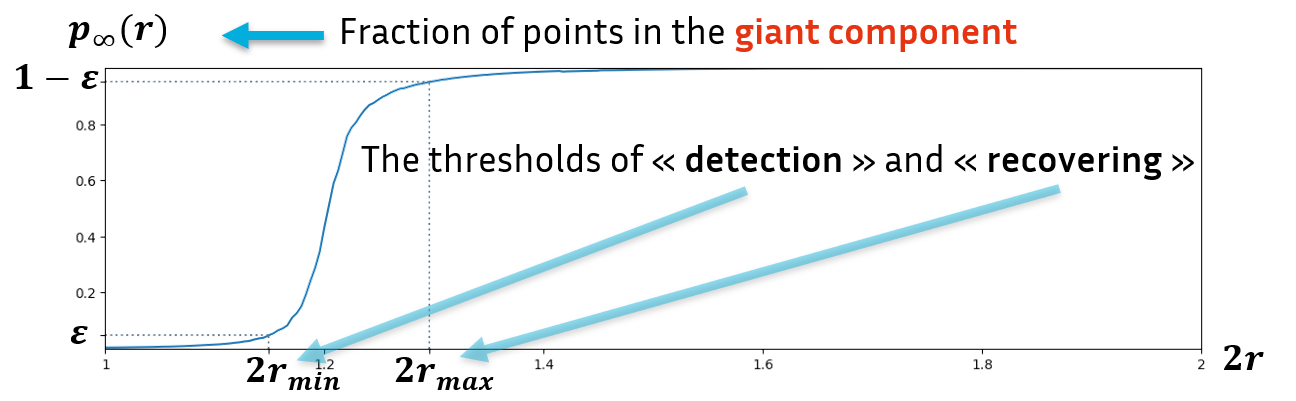

The Single-Linkage algorithm, its robust version, HDBSCAN (the State of the Art among clustering algorithms) and many other algorithms belong to a large family of clustering algorithms 33. These algorithms all proceed in the same way: they build objects on the data (density level sets, graphs, hypergraphs, etc.) that depend on a parameter (a density level, a radius, etc.). As this parameter varies, structures appear (high-density clusters, connected components, etc.) and then merge until a single giant structure encompasses all the data. We call this method “persistent analysis”, by analogy with persistent homology in Topological Data Analysis (see the illustration in Fig. 12). The mathematical phenomenon at work is percolation 20, and it is directly linked to the performance of this family of algorithms.
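Percolation can be observed on the simplest objects of this family, geometric graphs on random points: as the radius r grows, the largest connected component goes from a handful of points to almost the whole dataset over a narrow range of r. The Python sketch below (standard library only, hypothetical names) measures the fraction of points in the largest component of the geometric graph G_r for uniform points in the unit square. It illustrates the phenomenon only and does not compute the percolation rate introduced below.

```python
import math
import random
from collections import Counter


def largest_component_fraction(points, r):
    """Fraction of points in the largest connected component of the
    geometric graph G_r (edges between points at distance <= r)."""
    n = len(points)
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(points[i], points[j]) <= r:
                parent[find(j)] = find(i)
    sizes = Counter(find(i) for i in range(n))
    return max(sizes.values()) / n


if __name__ == "__main__":
    rng = random.Random(0)
    pts = [(rng.random(), rng.random()) for _ in range(300)]
    for r in (0.02, 0.04, 0.06, 0.08, 0.10, 0.12):
        frac = largest_component_fraction(pts, r)
        print(f"r = {r:.2f}: largest component holds {frac:.0%} of the points")
```

Since G_r only gains edges as r grows, the fraction is non-decreasing in r; the interesting part is how abruptly it rises.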

The speed of percolation depends on the type of objects considered and on how structures are defined. The faster the percolation, the better the algorithm can distinguish neighboring high-density clusters.

First, we can measure the theoretical performance of hierarchical clustering algorithms in this family with an index we named the percolation rate. Second, we show that the k-polyhedra of Section 7.9 give better results than the State of the Art k-Robust Single-Linkage components (used by HDBSCAN). Some results are given in Tab. 1.

| Clustering | k-Robust Single-Linkage | k-polyhedra |
|---|---|---|
|  | 0.64 | 0.62 |
|  | 0.61 | 0.73 |
|  | 0.60 | 0.76 |

This work was presented by Louis Hauseux at the ACM SIGMETRICS Student Research Competition 7 (Venice, June 2024), where he won second prize, and at the Complex Networks conference 6 (Istanbul, December 2024).

7.11 Progressive crack extraction on optical or LiDAR images. Generalization of the Frangi filter

Participants: Louis Hauseux, Josiane Zerubia.

External collaborators: Pierre Charbonnier [CEREMA, Research scientist], Philippe Foucher [CEREMA, Research scientist], Raphaël Antoine [CEREMA, Research scientist].

Keywords: generalized Frangi filter, crack extraction, optical image, LiDAR.

Our aim is to detect anomalies, such as cracks, in data consisting of a spatially localized point cloud and a signal defined on these points.

For example, the point cloud is the grid of pixels and the signal is an image.

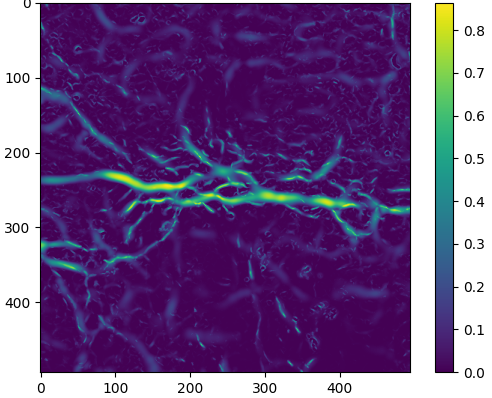

The Frangi filter 22 was designed to enhance tubular structures in an image using the eigenvalues of the Hessian matrix of the signal. Fig. 13 shows an example of Frangi response.
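As a point of reference for the generalization described next, here is a minimal single-scale sketch of the standard 2D Frangi vesselness measure 22, written with NumPy only. Assumptions: Gaussian smoothing followed by finite-difference second derivatives, scale normalization by σ², the commonly used default β = 0.5, and c set to half of the maximum Hessian norm when not given. Because cracks are usually darker than their surroundings, `dark_ridges=True` keeps only pixels whose largest-magnitude Hessian eigenvalue is positive. Function names are hypothetical, and this is not the team's pairwise response.

```python
import numpy as np


def gaussian_smooth(img, sigma):
    """Separable Gaussian blur with reflected borders; output has img's shape."""
    radius = int(3 * sigma + 0.5)
    t = np.arange(-radius, radius + 1)
    g = np.exp(-(t**2) / (2 * sigma**2))
    g /= g.sum()
    padded = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(np.convolve, 1, padded, g, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, g, mode="valid")


def frangi_2d(img, sigma, beta=0.5, c=None, dark_ridges=True):
    """Single-scale 2D Frangi vesselness in [0, 1).

    dark_ridges=True targets thin structures darker than their surroundings
    (e.g. cracks); use False for bright vessels on a dark background.
    """
    s = gaussian_smooth(np.asarray(img, dtype=float), sigma)
    dy, dx = np.gradient(s)  # first derivatives along rows (y) and columns (x)
    dyy, dyx = np.gradient(dy)
    dxy, dxx = np.gradient(dx)
    # Scale-normalised Hessian entries (second derivatives times sigma^2).
    hxx, hyy = sigma**2 * dxx, sigma**2 * dyy
    hxy = sigma**2 * (dxy + dyx) / 2
    # Eigenvalues of the symmetric 2x2 Hessian, ordered so that |l1| <= |l2|.
    root = np.sqrt((hxx - hyy) ** 2 + 4 * hxy**2)
    mu_a = (hxx + hyy + root) / 2
    mu_b = (hxx + hyy - root) / 2
    a_smaller = np.abs(mu_a) < np.abs(mu_b)
    l1 = np.where(a_smaller, mu_a, mu_b)
    l2 = np.where(a_smaller, mu_b, mu_a)
    rb2 = (l1 / np.where(l2 == 0, 1.0, l2)) ** 2  # blobness R_B^2
    s2 = l1**2 + l2**2  # second-order structureness S^2
    if c is None:
        peak = np.sqrt(s2.max())
        c = 0.5 * peak if peak > 0 else 1.0
    v = np.exp(-rb2 / (2 * beta**2)) * (1.0 - np.exp(-s2 / (2 * c**2)))
    # Keep one polarity only: dark ridges have l2 > 0, bright ones l2 < 0.
    v[(l2 <= 0) if dark_ridges else (l2 >= 0)] = 0.0
    return v


if __name__ == "__main__":
    img = np.ones((64, 64))
    img[:, 30] = 0.0  # synthetic one-pixel-wide dark "crack"
    response = np.max([frangi_2d(img, s) for s in (1.0, 2.0, 3.0)], axis=0)
    print(response[32, 28:33].round(2))
```

Taking the pixelwise maximum over several values of σ, as in the usage lines, is the usual way to turn this single-scale response into a multi-scale one.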

The Frangi filter is a pixelwise response measuring the degree to which a pixel lies in a tubular structure. We generalized the Frangi filter to obtain a response on pairs of pixels. The response is thus much more precise for clustering: we can now measure whether two pixels belong to the same tubular structure. As with the simple Frangi response, the shape of the tubular structure in which a pixel is located, and the signal intensity at that pixel, can be observed at different scales. By looking at a pair of pixels, we can also observe the angle formed by the tubular structures at the two pixels, and thus measure their alignment.
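The pairwise response itself is not detailed here. Purely as an illustration of the alignment idea, the hypothetical Python sketch below takes the local orientation of a tubular structure as the Hessian eigenvector with the smallest absolute eigenvalue, and scores a pair of pixels by how parallel their orientations are to each other and to the segment joining them. This score is an assumption made for the example, not the generalized Frangi filter developed by the team.

```python
import numpy as np


def ridge_direction(hxx, hxy, hyy):
    """Unit vector (x, y) along a tubular structure: the Hessian eigenvector
    with the smallest absolute eigenvalue (direction of least curvature)."""
    vals, vecs = np.linalg.eigh(np.array([[hxx, hxy], [hxy, hyy]], dtype=float))
    return vecs[:, np.argmin(np.abs(vals))]


def pair_alignment(p, q, dir_p, dir_q):
    """Hypothetical pairwise score in [0, 1]: close to 1 when the structures
    at p and q are parallel to each other and to the segment from p to q."""
    seg = np.asarray(q, dtype=float) - np.asarray(p, dtype=float)
    seg /= np.linalg.norm(seg)
    # abs() because an eigenvector is only defined up to its sign.
    return abs(dir_p @ dir_q) * abs(dir_p @ seg) * abs(dir_q @ seg)
```

A score of this kind could weight the edges of the pixel (hyper)graph, so that two pixels on the same straight crack are linked more strongly than two pixels on parallel but distinct cracks.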

With these responses on pixel pairs, we obtain a (hyper)graph on which we can run the generalized Single-Linkage algorithm presented in Section 7.9. Figure 14 shows our first results.

This research work is in progress. We plan to submit this work to a conference in 2025.

8 Bilateral contracts and grants with industry

8.1 Bilateral contracts with industry

8.1.1 LiChIE contract with Airbus Defense and Space funded by Bpifrance

Participants: Louis Hauseux, Jules Mabon, Josiane Zerubia.

External collaborators: Mathias Ortner [Airbus Defense and Space, Senior Data Scientist].

This contract addressed automatic object detection and tracking in sequences of images acquired by various satellite constellations. It covered one PhD (Jules Mabon, from October 2020 until January 2024), one postdoc (Camilo Aguilar Herrera, from January 2021 until September 2022), and one research engineer (Louis Hauseux, from February until April 2023).

9 Partnerships and cooperations

9.1 International initiatives

9.1.1 Visits to international teams

Research stays abroad

Josiane Zerubia

- Visited institution: Durham University, Department of Mathematical Sciences

- Country: United Kingdom

- Dates: 2 July 2024 - 17 July 2024

- Context of the visit: Josiane Zerubia was invited by Professor Ian Jermyn to visit his laboratory in order to prepare a research proposal and to give a seminar on 11 July entitled “Learning Stochastic geometry models and Convolutional Neural Networks. Application to multiple object detection in aerospatial data sets”.

- Mobility program/type of mobility: Funded by Prof. Ian Jermyn through his research contracts.

9.2 National initiatives

ANR FAULTS R GEMS

Participants: Josiane Zerubia.

External collaborators: Isabelle Manighetti [OCA, Géoazur, Senior Physicist].

The AYANA team is part of the ANR project FAULTS R GEMS (2017-2026, PI Geoazur), dedicated to realistic generic earthquake modeling and hazard simulation.

10 Dissemination

Participants: Josiane Zerubia, Martina Pastorino, Priscilla Osa, Louis Hauseux.

10.1 Promoting scientific activities

- Josiane Zerubia is part of the organizing committee of the ICASSP'28 proposal in Washington DC, USA

10.1.1 Scientific events: selection

Member of the conference program committees

- Josiane Zerubia was a member of the conference program committee of SPIE Artificial Intelligence and Image and Signal Processing for Remote Sensing'24, Edinburgh (United Kingdom).

Reviewer

- Martina Pastorino reviewed for the following international conferences: IEEE IGARSS'24, and IAPR-ICPR'24.

- Josiane Zerubia reviewed for the following international conferences: IEEE ICASSP'24, IEEE IGARSS'24, IEEE ICIP'24, IEEE-EURASIP EUSIPCO'24, IAPR-ICPR'24, SPIE Artificial Intelligence and Image and Signal Processing for Remote Sensing'24.

10.1.2 Journal

Member of the editorial boards

- Josiane Zerubia has been a member of the editorial board of Foundations and Trends in Signal Processing (2007- ).

Reviewer - reviewing activities

- Martina Pastorino reviewed for the following international journals: IEEE TGRS, IEEE TIP, IEEE GRSL, IEEE JSTARS, and MDPI Remote Sensing.

10.1.3 Invited talks

- Josiane Zerubia presented AYANA research work at Airbus DS and CNES Data Campus, Toulouse, February 2024.

- Louis Hauseux gave a seminar at DITEN, invited by Professor Gabriele Moser, Genoa, Italy, April 2024.

- Martina Pastorino gave a seminar to the EVERGREEN team, invited by Professor Dino Ienco, Inria, Montpellier, France, May 2024.

- Martina Pastorino gave a seminar to the THOTH team, invited by Professor Jocelyn Chanussot, Inria, Grenoble, France, November 2024.

- Josiane Zerubia participated in the “Rencontres Techniques & Numériques” organized by CNES in Toulouse, November 2024.

- Josiane Zerubia presented AYANA research work, as well as the activities of Inria Defense and Security mission, during her visit to both the French Space Command (CDE) and Airbus DS in Toulouse, November 2024.

10.1.4 Leadership within the scientific community

- Josiane Zerubia is a Fellow of IEEE (2002- ), EURASIP (2019- ) and IAPR (2020-).

- Josiane Zerubia is a member of the Teaching Board of the Doctoral School STIET at University of Genoa, Italy (2018- ).

- Josiane Zerubia is a senior member of the IEEE WISP Committee (2024-2025).

10.1.5 Scientific expertise

- Josiane Zerubia did consulting for CAC (Cosmeto Azur Consulting), November 2024.

- Josiane Zerubia presented AYANA's work to the Vice-Dean for Research of West University of Timisoara during his visit to Université Côte d'Azur and Inria, November 2024.

10.1.6 Research administration

- Josiane Zerubia is a member of Comité des Equipes Projets (CEP) at Inria-Centre d’Université Côte d’Azur.

- Josiane Zerubia is in charge of the aerospace research field for the Defense and Security mission at Inria, headed by Frédérique Segond since January 2023. She was invited to EMA-Balard (État-Major des Armées) in Paris in April 2024.

10.2 Teaching - Supervision - Juries

10.2.1 Teaching

- Josiane Zerubia taught a master course on Remote Sensing (9h of lectures, 13.5h eq. TD), Master RISKS, Université Côte d'Azur, France (2019-2024).

- Martina Pastorino co-taught (official teaching appointment) the Remote Sensing for Hydrography course (6h of lectures, 9h eq. TD), Master in Geomatics, Istituto Idrografico della Marina, University of Genoa, Italy (2023-2024).

- Martina Pastorino co-taught (official teaching appointment) the Remote Sensing Elements for Infrastructure Monitoring course (6h of lectures, 9h eq. TD), Master in Management of the Security of Networks and Transportation Systems, University of Genoa, Italy (2023-2024).

- Martina Pastorino taught support activities (laboratories, 25h TD) for the Signals and Systems for Telecommunications course, University of Genoa (2024-2025).

- Louis Hauseux, with Konstantin Avrachenkov, taught the master course “Statistical Analysis of Networks” (12h of lectures, 18h eq. TD, and 12h of TD), M2 Data Science and Artificial Intelligence, Université Côte d'Azur (2024).

- Louis Hauseux gave the practicals (30h TD) of the master course “Statistical Inference”, taught by Vincent Vandewalle, M1 Data Science and Artificial Intelligence, Université Côte d'Azur (2024).

10.2.2 Supervision

- Priscilla Indira Osa co-supervised a Master student in 2024 for the Master in Engineering for Natural Risk Management, University of Genoa.

- Josiane Zerubia supervised three PhD students (Louis Hauseux, Jules Mabon and Priscilla Indira Osa) and one post-doctoral fellow (Martina Pastorino) within the AYANA team.

10.2.3 Juries

- Josiane Zerubia was part of the Defence Committee as external reviewer of 2 Master students of Prof. Simon Pun at Chinese University of Hong Kong in Shenzhen, June 2024.

10.3 Popularization

- Josiane Zerubia participated, as a member of Inria/Terra Numerica/Femmes&Sciences, in the “Forum des métiers” at the Lycée technique Hutinel in Cannes, February 2024.

- Josiane Zerubia was a member of the panel for the IEEE WISP luncheon at ICIP'24 in Abu Dhabi, with three other women role models, November 2024.

10.3.1 Productions (articles, videos, podcasts, serious games, ...)

- The AYANA team was highlighted in the Inria news “Ayana, an exploratory action to support the future of space exploration”, July 2024.

11 Scientific production

11.1 Major publications

- 1 Probabilistic graphical models and deep learning methods for remote sensing image analysis. Thesis, Université Côte d'Azur; Università degli studi (Genoa, Italy), December 2023.

11.2 Publications of the year

International journals

International peer-reviewed conferences

Scientific book chapters

11.3 Cited publications

- 15 A Transformer-Based Siamese Network for Change Detection. IEEE International Geoscience and Remote Sensing Symposium (IGARSS), 2022, 207-210.

- 16 Density-Based clustering based on hierarchical density estimates. Advances in Knowledge Discovery and Data Mining, Berlin, Heidelberg: Springer, 2013, 160-172.

- 17 Remote Sensing Image Change Detection With Transformers. IEEE Transactions on Geoscience and Remote Sensing, 60, 2022, 1-14.

- 18 A Spatial-Temporal Attention-Based Method and a New Dataset for Remote Sensing Image Change Detection. Remote Sensing, 12, 2020, 1662.

- 19 Xception: Deep Learning with Depthwise Separable Convolutions. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1800-1807. URL: https://api.semanticscholar.org/CorpusID:2375110

- 20 Sixty years of percolation. Proceedings of the International Congress of Mathematicians, Rio de Janeiro, World Scientific, 2018, 2829-2856.

- 21 Sur la liaison et la division des points d'un ensemble fini. Colloquium Mathematicum, 2(3-4), 1951, 282-285.

- 22 Multiscale vessel enhancement filtering. Medical Image Computing and Computer-Assisted Intervention (MICCAI'98), Springer Berlin Heidelberg, 1998.

- 23 Theory of Communication. Journal of the Institution of Electrical Engineers, 93(3), 1946, 429-457.

- 24 Minimum Spanning Trees and Single Linkage Cluster Analysis. Journal of the Royal Statistical Society. Series C (Applied Statistics), 18(1), 1969, 54-64.

- 25 Fully Convolutional Networks for Multisource Building Extraction From an Open Aerial and Satellite Imagery Data Set. IEEE Transactions on Geoscience and Remote Sensing, 57(1), 2019, 574-586.

- 26 Contraintes géométriques et topologiques pour la segmentation d’images médicales : approches hybrides variationnelles et par apprentissage profond. PhD thesis supervised by Carole Le Guyader and Caroline Petitjean, Applied Mathematics and Computer Science, Normandie, 2022. URL: http://www.theses.fr/2022NORMIR30

- 27 Gabor Convolutional Networks. IEEE Transactions on Image Processing, 27(9), 2018, 4357-4366.

- 28 Accelerated Hierarchical Density Based Clustering. IEEE International Conference on Data Mining Workshops (ICDMW), 2017, 33-42.

- 29 Probabilistic Fusion Framework Based on Fully Convolutional Networks and Graphical Models for Burnt Area Detection from Multiresolution Satellite and UAV Imagery. IEEE Transactions on Geoscience and Remote Sensing, submitted.

- 30 Multiresolution Fusion and Classification of Hyperspectral and Panchromatic Remote Sensing Images. 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) Workshop (GeoCV), Tucson, Arizona, submitted.

- 31 Semantic Segmentation of Remote-Sensing Images Through Fully Convolutional Neural Networks and Hierarchical Probabilistic Graphical Models. IEEE Transactions on Geoscience and Remote Sensing, 60, 5407116, 2022, 1-16.

- 32 Do Vision Transformers See Like Convolutional Neural Networks? Neural Information Processing Systems, 2021. URL: https://api.semanticscholar.org/CorpusID:237213700

- 33 Stable and consistent density-based clustering. 2023.

- 34 S2Looking: A Satellite Side-Looking Dataset for Building Change Detection. Remote Sensing, 13(24), 2021. URL: https://www.mdpi.com/2072-4292/13/24/5094

- 35 EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. Proceedings of the 36th International Conference on Machine Learning, Proceedings of Machine Learning Research, vol. 97, PMLR, 9-15 Jun 2019, 6105-6114. URL: https://proceedings.mlr.press/v97/tan19a.html

- 36 WorldCereal: a dynamic open-source system for global-scale, seasonal, and reproducible crop and irrigation mapping. Earth System Science Data, 15(12), 2023, 5491-5515. URL: https://essd.copernicus.org/articles/15/5491/2023/

- 37 Conditional Random Fields as Recurrent Neural Networks. 2015 IEEE International Conference on Computer Vision (ICCV), 2015, 1529-1537.

- 38 Advances in LUCAS Copernicus 2022: enhancing Earth observations with comprehensive in situ data on EU land cover and use. Earth System Science Data, 16(12), 2024, 5723-5735. URL: https://essd.copernicus.org/articles/16/5723/2024/

- 39 From parcel to continental scale – A first European crop type map based on Sentinel-1 and LUCAS Copernicus in-situ observations. Remote Sensing of Environment, 266, 2021, 112708. URL: https://www.sciencedirect.com/science/article/pii/S0034425721004284