2024Activity reportProject-TeamSTARS

RNSR: 201221015V- Research center Inria Centre at Université Côte d'Azur

- Team name: Spatio-Temporal Activity Recognition of Social interactions

- Domain:Perception, Cognition and Interaction

- Theme:Vision, perception and multimedia interpretation

Keywords

Computer Science and Digital Science

- A5.3. Image processing and analysis

- A5.3.3. Pattern recognition

- A5.4. Computer vision

- A5.4.2. Activity recognition

- A5.4.4. 3D and spatio-temporal reconstruction

- A5.4.5. Object tracking and motion analysis

- A9. Artificial intelligence

- A9.1. Knowledge

- A9.2. Machine learning

- A9.3. Signal analysis

- A9.8. Reasoning

Other Research Topics and Application Domains

- B1. Life sciences

- B1.2. Neuroscience and cognitive science

- B1.2.2. Cognitive science

- B2. Health

- B2.1. Well being

- B7. Transport and logistics

- B7.1.1. Pedestrian traffic and crowds

- B8. Smart Cities and Territories

- B8.4. Security and personal assistance

1 Team members, visitors, external collaborators

Research Scientists

- François Brémond [Team leader, INRIA, Senior Researcher]

- Michal Balazia [INRIA, ISFP]

- Antitza Dantcheva [INRIA, Senior Researcher, from Oct 2024]

- Antitza Dantcheva [INRIA, Researcher, until Sep 2024]

- Monique Thonnat [INRIA, Emeritus, from Jun 2024]

- Monique Thonnat [INRIA, Senior Researcher, until May 2024]

Post-Doctoral Fellows

- Baptiste Chopin [INRIA, Post-Doctoral Fellow]

- Olivier Huynh [INRIA, Post-Doctoral Fellow]

PhD Students

- Tanay Agrawal [INRIA]

- Abid Ali [UNIV COTE D'AZUR]

- Yuan Gao [INRAE]

- Mohammed Guermal [INRIA, until Jul 2024]

- Snehashis Majhi [INRIA]

- Tomasz Stanczyk [INRIA]

- Valeriya Strizhkova [INRIA]

- Charbel Yahchouchi [Supponor, CIFRE, from May 2024 until Oct 2024]

- Di Yang [INRIA, until Feb 2024]

- Seongro Yoon [INRIA, from Oct 2024]

Technical Staff

- Mahmoud Ali [INRIA, Engineer]

- Ezem Sura Ekmekci [INRIA, Engineer, from Nov 2024]

- Ezem Sura Ekmekci [INRIA, Engineer, until Sep 2024]

- Yoann Torrado [INRIA, Engineer]

Interns and Apprentices

- Maheswar Bora [INRIA, Intern, from Jul 2024]

- Junuk Cha [INRIA, from Sep 2024 until Nov 2024]

- Antonio Cimino [INRIA, Intern, from May 2024 until Aug 2024]

- Anil Egin [INRIA, Intern, from Nov 2024]

- Lucas Ferez [INRIA, Intern, from Apr 2024 until May 2024]

- Rui-Han Lee [INRIA, Intern, until Jan 2024]

- Cyprien Michel-Deletie [ENS DE LYON, Intern, until Mar 2024]

- Dorval De Celestin Mobendza [INRIA, Intern, from May 2024 until Aug 2024]

- David Prigodin [UNIV COTE D'AZUR, Intern, from Mar 2024 until Apr 2024]

- Nabyl Quignon [INRIA, from Dec 2024]

- Dominick Reilly [INRIA, Intern, until May 2024]

- Aglind Reka [UNIV COTE D'AZUR, Intern, from May 2024 until Oct 2024]

- Sanya Sinha [INRIA, Intern, until Jun 2024]

- Sujith Sai Sripadam [INRIA, Intern, from Jul 2024 until Aug 2024]

- Utkarsh Tiwari [INRIA, Intern, from Jul 2024 until Aug 2024]

Administrative Assistant

- Sandrine Boute [INRIA]

Visiting Scientists

- Diana Borza [Babeș Bolyai University Cluj-Napoca, from May 2024 until Jul 2024]

- Giacomo D'Amicantonio [TU Eindhoven, from Aug 2024]

- Salvatore Fiorilla [UNIV BOLOGNA, from Sep 2024]

- Sabine Moisan [Retired, Emeritus]

- Jean-Paul Rigault [Retired]

External Collaborators

- Nibras Abo Alzahab [UNIV COTE D'AZUR, until Jun 2024]

- David Anghelone [BANQUE LOMBARD ODIER, until Jun 2024]

- Laura Ferrari [Scuola Superiore Sant'Anna]

- Rachid Guerchouche [CoBTeK]

- Alexandra Konig [CHU NICE, from Mar 2024]

- Hali Lindsay [KIT - ALLEMAGNE, from Jun 2024]

- Philippe Robert [CoBTeK]

- Yaohui Wang [Shanghai AI Lab]

2 Overall objectives

2.1 Presentation

The STARS (Spatio-Temporal Activity Recognition Systems) team focuses on the design of cognitive vision systems for Activity Recognition. More precisely, we are interested in the real-time semantic interpretation of dynamic scenes observed by video cameras and other sensors. We study long-term spatio-temporal activities performed by agents such as human beings, animals or vehicles in the physical world. The major issue in semantic interpretation of dynamic scenes is to bridge the gap between the subjective interpretation of data and the objective measures provided by sensors. To address this problem Stars develops new techniques in the field of computer vision, machine learning and cognitive systems for physical object detection, activity understanding, activity learning, vision system design and evaluation. We focus on two principal application domains: visual surveillance and healthcare monitoring.

2.2 Research Themes

Stars is focused on the design of cognitive systems for Activity Recognition. We aim at endowing cognitive systems with perceptual capabilities to reason about an observed environment, to provide a variety of services to people living in this environment while preserving their privacy. In today's world, a huge amount of new sensors and new hardware devices are currently available, addressing potentially new needs of the modern society. However, the lack of automated processes (with no human interaction) able to extract a meaningful and accurate information (i.e. a correct understanding of the situation) has often generated frustrations among the society and especially among older people. Therefore, Stars objective is to propose novel autonomous systems for the real-time semantic interpretation of dynamic scenes observed by sensors. We study long-term spatio-temporal activities performed by several interacting agents such as human beings, animals and vehicles in the physical world. Such systems also raise fundamental software engineering problems to specify them as well as to adapt them at run time.

We propose new techniques at the frontier between computer vision, knowledge engineering, machine learning and software engineering. The major challenge in semantic interpretation of dynamic scenes is to bridge the gap between the task dependent interpretation of data and the flood of measures provided by sensors. The problems we address range from physical object detection, activity understanding, activity learning to vision system design and evaluation. The two principal classes of human activities we focus on, are assistance to older adults and video analytics.

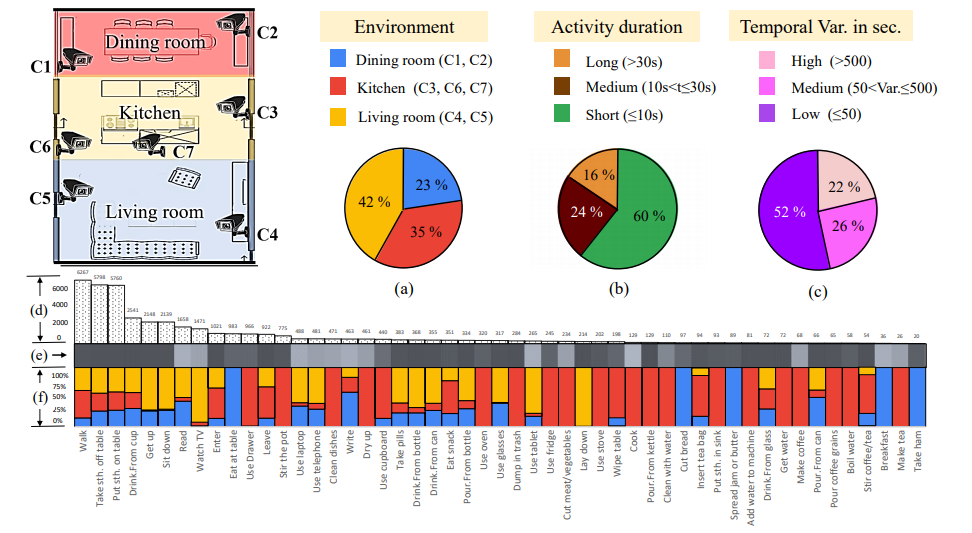

Typical examples of complex activity are shown in Figure 1 and Figure 2 for a homecare application (See Toyota Smarthome Dataset here). In this example, the duration of the monitoring of an older person apartment could last several months. The activities involve interactions between the observed person and several pieces of equipment. The application goal is to recognize the everyday activities at home through formal activity models (as shown in Figure 3) and data captured by a network of sensors embedded in the apartment. Here typical services include an objective assessment of the frailty level of the observed person to be able to provide a more personalized care and to monitor the effectiveness of a prescribed therapy. The assessment of the frailty level is performed by an Activity Recognition System which transmits a textual report (containing only meta-data) to the general practitioner who follows the older person. Thanks to the recognized activities, the quality of life of the observed people can thus be improved and their personal information can be preserved.

.png)

| Activity (PrepareMeal, | |

| PhysicalObjects( | (p : Person), (z : Zone), (eq : Equipment)) |

| Components( | (s_inside : InsideKitchen(p, z)) |

| (s_close : CloseToCountertop(p, eq)) | |

| (s_stand : PersonStandingInKitchen(p, z))) | |

| Constraints( | (z->Name = Kitchen) |

| (eq->Name = Countertop) | |

| (s_close->Duration >= 100) | |

| (s_stand->Duration >= 100)) | |

| Annotation( | AText("prepare meal"))) |

The ultimate goal is for cognitive systems to perceive and understand their environment to be able to provide appropriate services to a potential user. An important step is to propose a computational representation of people activities to adapt these services to them. Up to now, the most effective sensors have been video cameras due to the rich information they can provide on the observed environment. These sensors are currently perceived as intrusive ones. A key issue is to capture the pertinent raw data for adapting the services to the people while preserving their privacy. We plan to study different solutions including of course the local processing of the data without transmission of images and the utilization of new compact sensors developed for interaction (also called RGB-Depth sensors, an example being the Kinect) or networks of small non-visual sensors.

2.3 International and Industrial Cooperation

Our work has been applied in the context of more than 10 European projects such as COFRIEND, ADVISOR, SERKET, CARETAKER, VANAHEIM, SUPPORT, DEM@CARE, VICOMO, EIT Health.

We had or have industrial collaborations in several domains: transportation (CCI Airport Toulouse Blagnac, SNCF, Inrets, Alstom, Ratp, Toyota, GTT (Italy), Turin GTT (Italy)), banking (Crédit Agricole Bank Corporation, Eurotelis and Ciel), security (Thales R&T FR, Thales Security Syst, EADS, Sagem, Bertin, Alcatel, Keeneo), multimedia (Thales Communications), civil engineering (Centre Scientifique et Technique du Bâtiment (CSTB)), computer industry (BULL), software industry (AKKA), hardware industry (ST-Microelectronics) and health industry (Philips, Link Care Services, Vistek).

We have international cooperations with research centers such as Reading University (UK), ENSI Tunis (Tunisia), Idiap (Switzerland), Multitel (Belgium), National Cheng Kung University, National Taiwan University (Taiwan), MICA (Vietnam), IPAL, I2R (Singapore), University of Southern California, University of South Florida (USA), Michigan State University (USA), Chinese Academy of Sciences (China), IIIT Delhi (India), Hochschule Darmstadt (Germany), Fraunhofer Institute for Computer Graphics Research IGD (Germany).

3 Research program

3.1 Introduction

Stars follows three main research directions: perception for activity recognition, action recognition and semantic activity recognition. These three research directions are organized following the workflow of activity recognition systems: First, the perception and the action recognition directions provide new techniques to extract powerful features, whereas the semantic activity recognition research direction provides new paradigms to match these features with concrete video analytics and healthcare applications.

Transversely, we consider a new research axis in machine learning, combining a priori knowledge and learning techniques, to set up the various models of an activity recognition system. A major objective is to automate model building or model enrichment at the perception level and at the understanding level.

3.2 Perception for Activity Recognition

Participants: François Brémond, Antitza Dantcheva, Michal Balazia, Monique Thonnat.

Keywords: Activity Recognition, Scene Understanding, Machine Learning, Computer Vision, Cognitive Vision Systems, Software Engineering.

3.2.1 Introduction

Our main goal in perception is to develop vision algorithms able to address the large variety of conditions characterizing real world scenes in terms of sensor conditions, hardware requirements, lighting conditions, physical objects, and application objectives. We have also several issues related to perception which combine machine learning and perception techniques: learning people appearance, parameters for system control and shape statistics.

3.2.2 Appearance Models and People Tracking

An important issue is to detect in real-time physical objects from perceptual features and predefined 3D models. It requires finding a good balance between efficient methods and precise spatio-temporal models. Many improvements and analysis need to be performed in order to tackle the large range of people detection scenarios.

Appearance models. In particular, we study the temporal variation of the features characterizing the appearance of a human. This task could be achieved by clustering potential candidates depending on their position and their reliability. This task can provide any people tracking algorithms with reliable features allowing for instance to (1) better track people or their body parts during occlusion, or to (2) model people appearance for re-identification purposes in mono and multi-camera networks, which is still an open issue. The underlying challenge of the person re-identification problem arises from significant differences in illumination, pose and camera parameters. The re-identification approaches have two aspects: (1) establishing correspondences between body parts and (2) generating signatures that are invariant to different color responses. As we have already several descriptors which are color invariant, we now focus more on aligning two people detection and on finding their corresponding body parts. Having detected body parts, the approach can handle pose variations. Further, different body parts might have different influence on finding the correct match among a whole gallery dataset. Thus, the re-identification approaches have to search for matching strategies. As the results of the re-identification are always given as the ranking list, re-identification focuses on learning to rank. "Learning to rank" is a type of machine learning problem, in which the goal is to automatically construct a ranking model from a training data.

Therefore, we work on information fusion to handle perceptual features coming from various sensors (several cameras covering a large-scale area or heterogeneous sensors capturing more or less precise and rich information). New 3D RGB-D sensors are also investigated, to help in getting an accurate segmentation for specific scene conditions.

Long-term tracking. For activity recognition we need robust and coherent object tracking over long periods of time (often several hours in video surveillance and several days in healthcare). To guarantee the long-term coherence of tracked objects, spatio-temporal reasoning is required. Modeling and managing the uncertainty of these processes is also an open issue. In Stars we propose to add a reasoning layer to a classical Bayesian framework modeling the uncertainty of the tracked objects. This reasoning layer can take into account the a priori knowledge of the scene for outlier elimination and long-term coherency checking.

Controlling system parameters. Another research direction is to manage a library of video processing programs. We are building a perception library by selecting robust algorithms for feature extraction, by ensuring they work efficiently with real time constraints and by formalizing their conditions of use within a program supervision model. In the case of video cameras, at least two problems are still open: robust image segmentation and meaningful feature extraction. For these issues, we are developing new learning techniques.

3.3 Action Recognition

Participants: François Brémond, Antitza Dantcheva, Michal Balazia, Monique Thonnat.

Keywords: Machine Learning, Computer Vision, Cognitive Vision Systems.

3.3.1 Introduction

Due to the recent development of high processing units, such as GPU, it is now possible to extract meaningful features directly from videos (e.g. video volume) to recognize reliably short actions. Action Recognition benefits also greatly from the huge progress made recently in Machine Learning (e.g. Deep Learning), especially for the study of human behavior. For instance, Action Recognition enables to measure objectively the behavior of humans by extracting powerful features characterizing their everyday activities, their emotion, eating habits and lifestyle, by learning models from a large amount of data from a variety of sensors, to improve and optimize for example, the quality of life of people suffering from behavior disorders. However, Smart Homes and Partner Robots have been well advertized but remain laboratory prototypes, due to the poor capability of automated systems to perceive and reason about their environment. A hard problem is for an automated system to cope 24/7 with the variety and complexity of the real world. Another challenge is to extract people fine gestures and subtle facial expressions to better analyze behavior disorders, such as anxiety or apathy. Taking advantage of what is currently studied for self-driving cars or smart retails, there is a large avenue to design ambitious approaches for the healthcare domain. In particular, the advance made with Deep Learning algorithms has already enabled to recognize complex activities, such as cooking interactions with instruments, and from this analysis to differentiate healthy people from the ones suffering from dementia.

To address these issues, we propose to tackle several challenges which are detailed in the following subsections:

3.3.2 Action recognition in the wild

The current Deep Learning techniques are mostly developed to work on few clipped videos, which have been recorded with students performing a limited set of predefined actions in front of a camera with high resolution. However, real life scenarios include actions performed in a spontaneous manner by older people (including people interactions with their environment or with other people), from different viewpoints, with varying framerate, partially occluded by furniture at different locations within an apartment depicted through long untrimmed videos. Therefore, a new dedicated dataset should be collected in a real-world setting to become a public benchmark video dataset and to design novel algorithms for Activities of Daily Living (ADL) activity recognition. A special attention should be taken to anonymize the videos.

3.3.3 Attention mechanisms for action recognition

ADL and video-surveillance activities are different from internet activities (e.g. Sports, Movies, YouTube), as they may have very similar context (e.g. same background kitchen) with high intra-variation (different people performing the same action in different manners), but in the same time low inter-variation, similar ways to perform two different actions (e.g. eating and drinking a glass of water). Consequently, fine-grained actions are badly recognized. So, we will design novel attention mechanisms for action recognition, for the algorithm being able to focus on a discriminative part of the person conducting the action. For instance, we will study attention algorithms, which could focus on the most appropriate body parts (e.g. full body, right hand). In particular, we plan to design a soft mechanism, learning the attention weights directly on the feature map of a 3DconvNet, a powerful convolutional network, which takes as input a batch of videos.

3.3.4 Action detection for untrimmed videos

Many approaches have been proposed to solve the problem of action recognition in short clipped 2D videos, which achieved impressive results with hand-crafted and deep features. However, these approaches cannot address real life situations, where cameras provide online and continuous video streams in applications such as robotics, video surveillance, and smart-homes. Here comes the importance of action detection to help recognizing and localizing each action happening in long videos. Action detection can be defined as the ability to localize starting and ending of each human action happening in the video, in addition to recognizing each action label. There have been few action detection algorithms designed for untrimmed videos, which are based on either sliding window, temporal pooling or frame-based labeling. However, their performance is too low to address real-word datasets. A first task consists in benchmarking the already published approaches to study their limitations on novel untrimmed video datasets, recorded following real-world settings. A second task could be to propose a new mechanism to improve either 1) the temporal pooling directly from the 3DconvNet architecture using for instance Temporal Convolution Networks (TCNs) or 2) frame-based labeling with a clustering technique (e.g. using Fisher Vectors) to discover the sub-activities of interest.

3.3.5 View invariant action recognition

The performance of current approaches strongly relies on the used camera angle: enforcing that the camera angle used in testing is the same (or extremely close to) as the camera angle used in training, is necessary for the approach to perform well. On the contrary, the performance drops when a different camera view-point is used. Therefore, we aim at improving the performance of action recognition algorithms by relying on 3D human pose information. For the extraction of the 3D pose information, several open-source algorithms can be used, such as openpose or videopose3D (from CMU or Facebook research, click here). Also, other algorithms extracting 3d meshes can be used. To generate extra views, Generative Adversial Network (GAN) can be used together with the 3D human pose information to complete the training dataset from the missing view.

3.3.6 Uncertainty and action recognition

Another challenge is to combine the short-term actions recognized by powerful Deep Learning techniques with long-term activities defined by constraint-based descriptions and linked to user interest. To realize this objective, we have to compute the uncertainty (i.e. likelihood or confidence), with which the short-term actions are inferred. This research direction is linked to the next one, to Semantic Activity Recognition.

3.4 Semantic Activity Recognition

Participants: François Brémond, Monique Thonnat.

Keywords: Activity Recognition, Scene Understanding, Computer Vision.

3.4.1 Introduction

Semantic activity recognition is a complex process where information is abstracted through four levels: signal (e.g. pixel, sound), perceptual features, physical objects and activities. The signal and the feature levels are characterized by strong noise, ambiguous, corrupted and missing data. The whole process of scene understanding consists in analyzing this information to bring forth pertinent insight of the scene and its dynamics while handling the low-level noise. Moreover, to obtain a semantic abstraction, building activity models is a crucial point. A still open issue consists in determining whether these models should be given a priori or learned. Another challenge consists in organizing this knowledge in order to capitalize experience, share it with others and update it along with experimentation. To face this challenge, tools in knowledge engineering such as machine learning or ontology are needed.

Thus, we work along the following research axes: high level understanding (to recognize the activities of physical objects based on high level activity models), learning (how to learn the models needed for activity recognition) and activity recognition and discrete event systems.

3.4.2 High Level Understanding

A challenging research axis is to recognize subjective activities of physical objects (i.e. human beings, animals, vehicles) based on a priori models and objective perceptual measures (e.g. robust and coherent object tracks).

To reach this goal, we have defined original activity recognition algorithms and activity models. Activity recognition algorithms include the computation of spatio-temporal relationships between physical objects. All the possible relationships may correspond to activities of interest and all have to be explored in an efficient way. The variety of these activities, generally called video events, is huge and depends on their spatial and temporal granularity, on the number of physical objects involved in the events, and on the event complexity (number of components constituting the event).

Concerning the modeling of activities, we are working towards two directions: the uncertainty management for representing probability distributions and knowledge acquisition facilities based on ontological engineering techniques. For the first direction, we are investigating classical statistical techniques and logical approaches. For the second direction, we built a language for video event modeling and a visual concept ontology (including color, texture and spatial concepts) to be extended with temporal concepts (motion, trajectories, events ...) and other perceptual concepts (physiological sensor concepts ...).

3.4.3 Learning for Activity Recognition

Given the difficulty of building an activity recognition system with a priori knowledge for a new application, we study how machine learning techniques can automate building or completing models at the perception level and at the understanding level.

At the understanding level, we are learning primitive event detectors. This can be done for example by learning visual concept detectors using SVMs (Support Vector Machines) with perceptual feature samples. An open question is how far can we go in weakly supervised learning for each type of perceptual concept (i.e. leveraging the human annotation task). A second direction is to learn typical composite event models for frequent activities using trajectory clustering or data mining techniques. We name composite event a particular combination of several primitive events.

3.4.4 Activity Recognition and Discrete Event Systems

The previous research axes are unavoidable to cope with the semantic interpretations. However, they tend to let aside the pure event driven aspects of scenario recognition. These aspects have been studied for a long time at a theoretical level and led to methods and tools that may bring extra value to activity recognition, the most important being the possibility of formal analysis, verification and validation.

We have thus started to specify a formal model to define, analyze, simulate, and prove scenarios. This model deals with both absolute time (to be realistic and efficient in the analysis phase) and logical time (to benefit from well-known mathematical models providing re-usability, easy extension, and verification). Our purpose is to offer a generic tool to express and recognize activities associated with a concrete language to specify activities in the form of a set of scenarios with temporal constraints. The theoretical foundations and the tools being shared with Software Engineering aspects.

The results of the research performed in perception and semantic activity recognition (first and second research directions) produce new techniques for scene understanding and contribute to specify the needs for new software architectures (third research direction).

4 Application domains

4.1 Introduction

While in our research the focus is to develop techniques, models and platforms that are generic and reusable, we also make efforts in the development of real applications. The motivation is twofold. The first is to validate the new ideas and approaches we introduce. The second is to demonstrate how to build working systems for real applications of various domains based on the techniques and tools developed. Indeed, Stars focuses on two main domains: video analytic and healthcare monitoring.

Domain: Video Analytics

Our experience in video analytic (also referred to as visual surveillance) is a strong basis which ensures both a precise view of the research topics to develop and a network of industrial partners ranging from end-users, integrators and software editors to provide data, objectives, evaluation and funding.

For instance, the Keeneo start-up was created in July 2005 for the industrialization and exploitation of Orion and Pulsar results in video analytic (VSIP library, which was a previous version of SUP). Keeneo has been bought by Digital Barriers in August 2011 and is now independent from Inria. However, Stars continues to maintain a close cooperation with Keeneo for impact analysis of SUP and for exploitation of new results.

Moreover, new challenges are arising from the visual surveillance community. For instance, people detection and tracking in a crowded environment are still open issues despite the high competition on these topics. Also detecting abnormal activities may require to discover rare events from very large video data bases often characterized by noise or incomplete data.

Domain: Healthcare Monitoring

Since 2011, we have initiated a strategic partnership (called CobTek) with Nice hospital (CHU Nice, Prof P. Robert) to start ambitious research activities dedicated to healthcare monitoring and to assistive technologies. These new studies address the analysis of more complex spatio-temporal activities (e.g. complex interactions, long term activities).

4.1.1 Research

To achieve this objective, several topics need to be tackled. These topics can be summarized within two points: finer activity description and longitudinal experimentation. Finer activity description is needed for instance, to discriminate the activities (e.g. sitting, walking, eating) of Alzheimer patients from the ones of healthy older people. It is essential to be able to pre-diagnose dementia and to provide a better and more specialized care. Longer analysis is required when people monitoring aims at measuring the evolution of patient behavioral disorders. Setting up such long experimentation with dementia people has never been tried before but is necessary to have real-world validation. This is one of the challenges of the European FP7 project Dem@Care where several patient homes should be monitored over several months.

For this domain, a goal for Stars is to allow people with dementia to continue living in a self-sufficient manner in their own homes or residential centers, away from a hospital, as well as to allow clinicians and caregivers remotely provide effective care and management. For all this to become possible, comprehensive monitoring of the daily life of the person with dementia is deemed necessary, since caregivers and clinicians will need a comprehensive view of the person's daily activities, behavioral patterns, lifestyle, as well as changes in them, indicating the progression of their condition.

4.1.2 Ethical and Acceptability Issues

The development and ultimate use of novel assistive technologies by a vulnerable user group such as individuals with dementia, and the assessment methodologies planned by Stars are not free of ethical, or even legal concerns, even if many studies have shown how these Information and Communication Technologies (ICT) can be useful and well accepted by older people with or without impairments. Thus, one goal of Stars team is to design the right technologies that can provide the appropriate information to the medical carers while preserving people privacy. Moreover, Stars will pay particular attention to ethical, acceptability, legal and privacy concerns that may arise, addressing them in a professional way following the corresponding established EU and national laws and regulations, especially when outside France. Now, Stars can benefit from the support of the COERLE (Comité Opérationnel d'Evaluation des Risques Légaux et Ethiques) to help it to respect ethical policies in its applications.

As presented in 2, Stars aims at designing cognitive vision systems with perceptual capabilities to monitor efficiently people activities. As a matter of fact, vision sensors can be seen as intrusive ones, even if no images are acquired or transmitted (only meta-data describing activities need to be collected). Therefore, new communication paradigms and other sensors (e.g. accelerometers, RFID (Radio Frequency Identification), and new sensors to come in the future) are also envisaged to provide the most appropriate services to the observed people, while preserving their privacy. To better understand ethical issues, Stars members are already involved in several ethical organizations. For instance, F. Brémond has been a member of the ODEGAM - “Commission Ethique et Droit” (a local association in Nice area for ethical issues related to older people) from 2010 to 2011 and a member of the French scientific council for the national seminar on “La maladie d'Alzheimer et les nouvelles technologies - Enjeux éthiques et questions de société” in 2011. This council has in particular proposed a chart and guidelines for conducting researches with dementia patients.

For addressing the acceptability issues, focus groups and HMI (Human Machine Interaction) experts, are consulted on the most adequate range of mechanisms to interact and display information to older people.

5 Social and environmental responsibility

5.1 Footprint of research activities

We have limited our travels by reducing our physical participation to conferences and to international collaborations.

5.2 Impact of research results

We have been involved for many years in promoting public transportation by improving safety onboard and in station. Moreover, we have been working on pedestrian detection for self-driving cars, which will help also reducing the number of individual cars.

6 Highlights of the year

- We have created a new research team in November 2024 after the Spatio-Temporal Activity Recognition Systems (STARS) team reached its 12 years duration at the end of 2024.

- The new Spatio-Temporal Activity Recognition of Social Interactions (STARS) research project-team focuses on the design of computer vision methods for real-time understanding of social interactions observed by sensors. Our objective is to propose new algorithms to analyze the behavior of people suffering from behavioral disorders, in order to improve their quality of life. We will study long-term spatio-temporal interactions performed by humans in their natural environment. We will address this challenge by proposing novel deep-learning architectures to model behavioral traits such as facial expression, gaze, gestures, body behavior, and body language. To cope with the limited amount of available data and the privacy issues of medical data, we propose data generation for data augmentation and anonymization. Another important challenge is to make the link between collected data, medical diagnosis, and ultimately treatments. To validate our research, we will work closely with our clinical partners, in particular those of the Nice Hospital.

- Antitza Dantcheva was promoted to Directrice de Recherche (DR2).

7 New software, platforms, open data

7.1 Open data

We have provided two benchmark datasets.

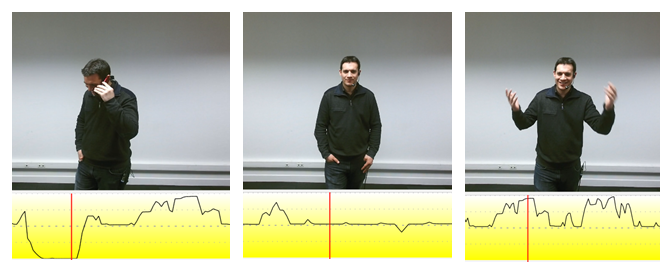

Stress ID Dataset: a Multimodal Dataset for Stress Identification

-

Contributors:

Hava Chaptoukaev, Valeriya Strizhkova, Michele Panariello, Bianca Dalpaos, Aglind Reka, Valeria Manera, Susanne Thummler, Esma Ismailova, Nicholas W., Francois Bremond, Massimiliano Todisco, Maria A Zuluaga and Laura M. Ferrari

-

Description:

It contains RGB facial video, audio and physiological signals (ECG, EDA, Respiration). Different stress-inducing stimuli are used: emotional video-clips, cognitive tasks and public speaking. The total dataset consists of recordings from 65 participants that performed 11 tasks. Each task is labeled by the subjects in terms of stress, relaxation, arousal, and valence. The experimental set-up ensures synchronized, high-quality, and low noise data.

- Project link:

-

Publications:

https://nips.cc/virtual/2023/poster/73454

-

Contact:

stressid.dataset@inria.fr

-

Release contributions:

The Dataset is licensed for non-commercial scientific research purposes.

Toyota Smarthome Datasets: Real-World Activities of Daily Living.

-

Contributors:

R. Dai, S. Das, S. Sharma, L. Minciullo, L. Garattoni, F. Bremond and G. Francesca

-

Description:

Smarthome has been recorded in an apartment equipped with 7 Kinect v1 cameras. It contains the common daily living activities of 18 subjects. The subjects are senior people in the age range 60-80 years old. The dataset has a resolution of 640×480 and offers 3 modalities: RGB + Depth + 3D Skeleton. The 3D skeleton joints were extracted from RGB. For privacy-preserving reasons, the face of the subjects is blurred. Currently, two versions of the dataset are provided: Toyota Smarthome Trimmed and Toyota Smarthome Untrimmed.

-

Dataset DOI:

10.1109/TPAMI.2022.3169976

- Project link:

-

Publications:

Toyota Smarthome Untrimmed: Real-World Untrimmed Videos for Activity Detection, PAMI 2022.

-

Contact:

toyotasmarthome@inria.fr

-

Release contributions:

The Dataset is licensed for non-commercial scientific research purposes.

8 New results

8.1 Introduction

This year Stars has proposed new results related to its three main research axes: (i) perception for activity recognition, (ii) action recognition and (iii) semantic activity recognition.

Perception for Activity Recognition

Participants: François Brémond, Antitza Dantcheva, Michal Balazia, Baptiste Chopin, Di Yang, Abid Ali, Olivier Huynh, Tomasz Stanczyk, Sanya Sinha.

The new results for perception for activity recognition are:

- Identifying Surgical Instruments in Pedagogical Cataract Surgery Videos through an Optimized Aggregation Network (see 8.2)

- Temporally Propagated Masks as an Association Cue for Multi-Object Tracking (see 8.3)

- Re-Evaluating Re-ID in Multi-Object Tracking (see 8.4)

- Anti-forgetting adaptation for unsupervised person re-identification (see 8.5)

- Enhancing age estimation by regularizing with look-alike references and ensuring ordinal continuity (see 8.6)

- P-Age: Pexels Dataset for Robust Spatio-Temporal Apparent Age Classification (see 8.7)

- Synthetic data in human analysis (see 8.8)

- LEO: Generative latent image animator for human video synthesis (see 8.9)

- LIA: Latent image animator (see 8.10)

- Local Distributional Smoothing for Noise-invariant Fingerprint Restoration (see 8.11)

- DiffTV: Identity-Preserved Thermal-to-Visible Face Translation via Feature Alignment and Dual-Stage Conditions (see 8.12)

- GHNeRF: Learning Generalizable Human Features with Efficient Neural Radiance Fields (see 8.13)

- HFNeRF: Learning Human Biomechanical Features with Neural Radiance Fields (see 8.14)

- Bipartite Graph Diffusion Model for Human Interaction Generation (see 8.15)

- Dimitra: Audio-driven Diffusion model for Expressive Talking Head Generation (see 8.16)

Action Recognition

Participants: François Brémond, Antitza Dantcheva, Michal Balazia, Monique Thonnat, Mohammed Guermal, Tanay Agrawal, Abid Ali, Di Yang, Aglind Reka, Valeriya Strizhkova.

The new results for action recognition are:

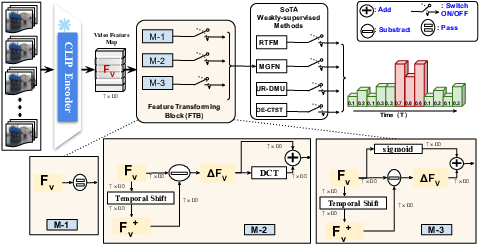

- Learning Effective Video Representations for Action Recognition (see 8.17)

- CM3T: Framework for Efficient Multimodal Learning for Inhomogeneous Interaction Datasets (see 8.18)

- Scaling Action Detection: AdaTAD++ with Transformer-Enhanced Temporal-Spatial Adaptation (see 8.19)

- AM Flow: Adapters for Temporal Processing in Action Recognition (see 8.20)

- View-invariant Skeleton Action Representation Learning via Motion Retargeting (see 8.21)

- Human Activity Recognition in Videos (see 8.22)

- Introducing Gating and Context into Temporal Action Detection (see 8.23)

- MAURA: Video Representation Learning for Conversational Facial Expression Recognition Guided by Multiple View Reconstruction (see 8.24)

- MVP: Multimodal Emotion Recognition based on Video and Physiological Signals (see 8.25)

Semantic Activity Recognition

Participants: François Brémond, Monique Thonnat, Snehashis Majhi, Mohammed Guermal, Mahmoud Ali, Abid Ali, Alexandra Konig, Rachid Guerchouche, Michal Balazia, Utkarsh Tiwari, Yoann Torrado.

For this research axis, the contributions are:

- MultiMediate'24: Multimodal Behavior Analysis for Artificial Mediation (see 8.26)

- OE-CTST: Outlier-Embedded Cross Temporal Scale Transformer for Weakly-supervised Video Anomaly Detection (see 8.27)

- Guess Future Anomalies from Normalcy: Forecasting Abnormal Behavior in Real-World Videos (see 8.28)

- What Matters in Autonomous Driving Anomaly Detection: A Weakly Supervised Horizon (see 8.29)

- Classification of Rare Diseases on Facial Videos (see 8.30)

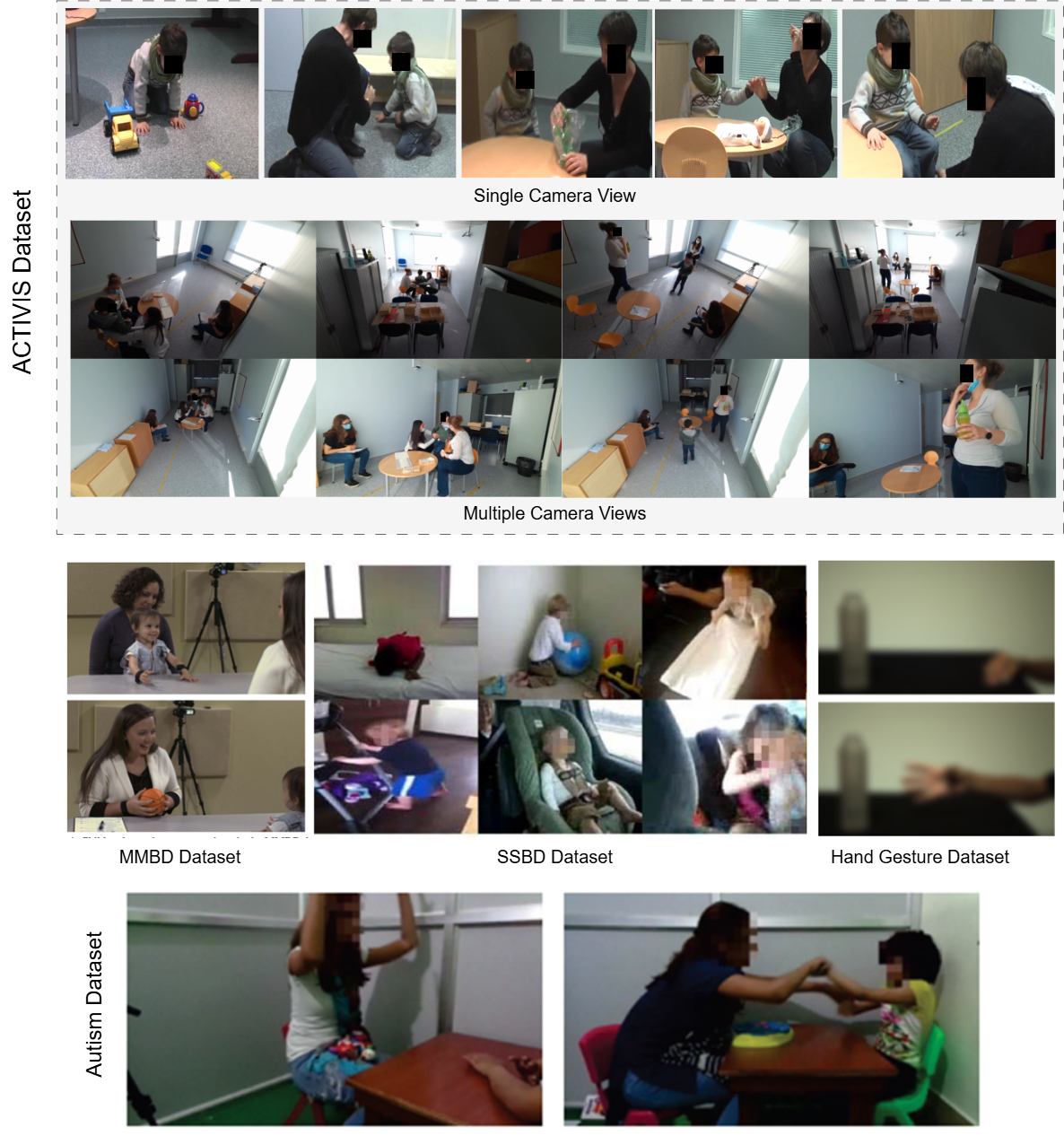

- Weakly-supervised Autism Severity Assessment in Long Videos (see 8.31)

- Video Analysis Using Deep Neural Networks: An Application for Autism (see 8.32)

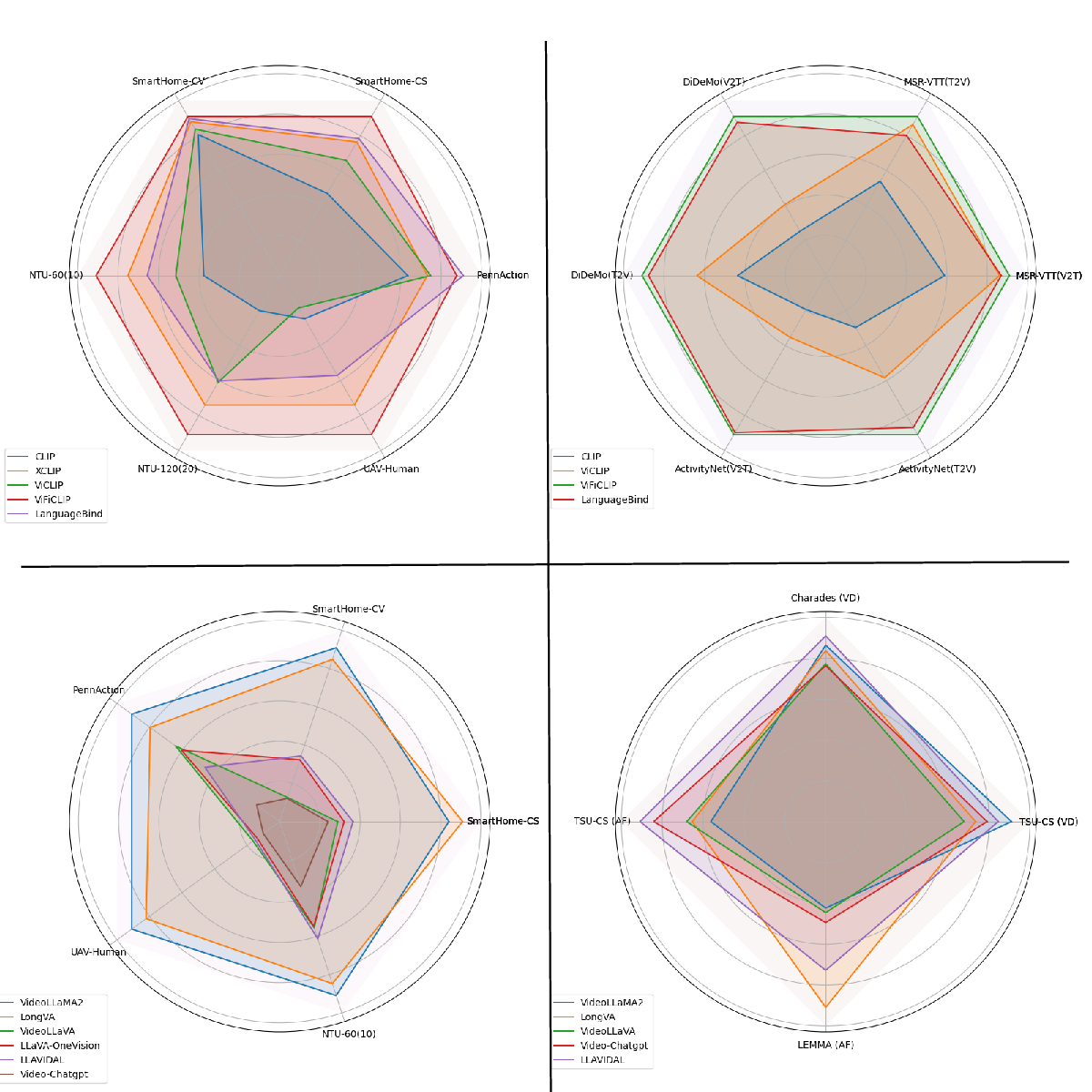

- Quo Vadis, Video Understanding with Vision-Language Foundation Models? (see 8.33)

8.2 Identifying Surgical Instruments in Pedagogical Cataract Surgery Videos through an Optimized Aggregation Network

Participants: Sanya Sinha, Michal Balazia, Francois Bremond.

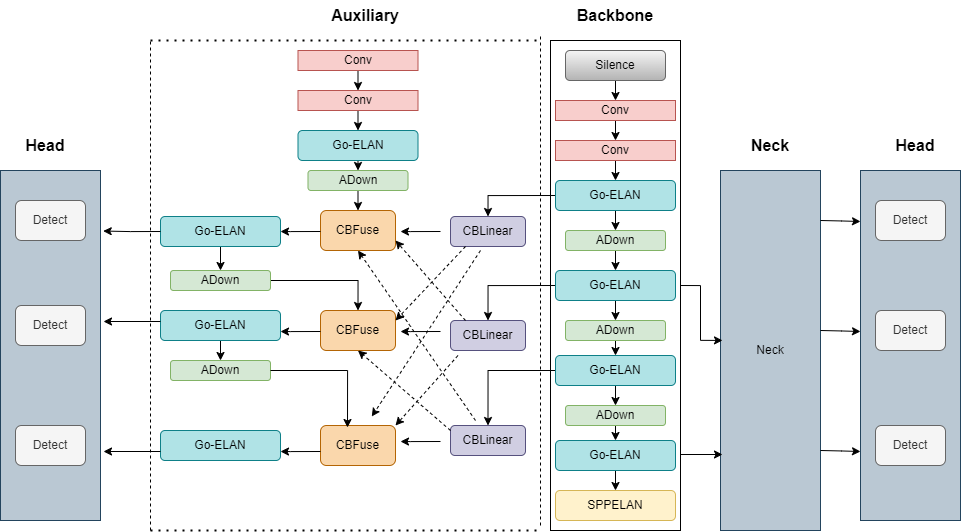

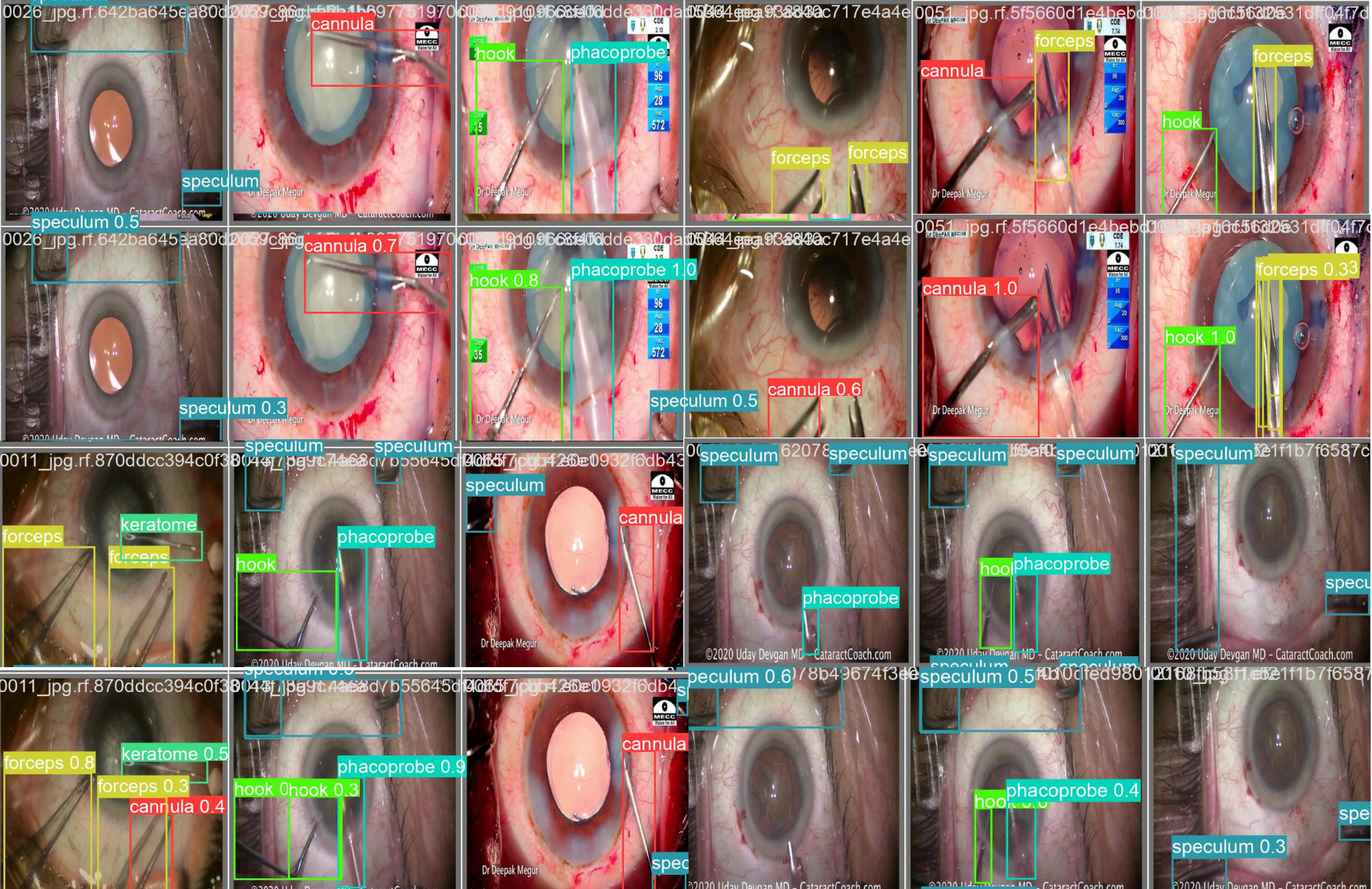

Instructional cataract surgery videos are crucial for ophthalmologists and trainees to observe surgical details repeatedly. In 35, we present a deep learning model for real-time identification of surgical instruments in these videos, using a custom dataset scraped from open-access sources. Inspired by the architecture of YOLOv9, the model employs a Programmable Gradient Information (PGI) mechanism and a novel Generally-Optimized Efficient Layer Aggregation Network (Go-ELAN) to address the information bottleneck problem, enhancing Minimum Average Precision (mAP) at higher Non-Maximum Suppression Intersection over Union (NMS IoU) scores.

Go-ELAN YOLOv9 Architecture (see Figure 4) contains an auxiliary block which works on the Programmable Gradient Information (PGI) concept by creating an auxiliary reverse branch for enabling reliable gradient calculation by avoiding potential semantic loss. The GELAN block in the backbone feature extractor is replaced by the Go-ELAN block proposed in this paper. The Spatial Pyramid Pooling block SPPELAN removes the fixed size limitation of the backbone. The ADown block downsamples the generated feature maps to target sizes. The CBLinear blocks extract higher level features from the images, and the CBFuse block fuses these extracted features. The Neck combines the acquired features and the Head predicts the final bounding bound outputs with their respective probabilities.

Our Go-ELAN YOLOv9 model, evaluated against YOLO v5, v7, v8, v9 vanilla, Laptool and DETR, achieves a superior mAP of 73.74 at IoU 0.5 on a dataset of 615 images with 10 instrument classes, demonstrating the effectiveness of the proposed model. To illustrate the visual and qualitative superiority of our model, we have compared 12 ground-truth images with their respective model predictions in Figure 5.

8.3 Temporally Propagated Masks as an Association Cue for Multi-Object Tracking

Participants: Tomasz Stanczyk, Francois Bremond.

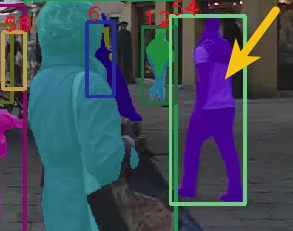

The second contribution involved the development of McByte, a novel tracking-by-detection algorithm that introduces temporally propagated segmentation masks as a robust association cue. Traditional MOT methods often rely solely on bounding boxes, which can struggle under conditions such as heavy occlusion or abrupt motion. McByte addresses these challenges by combining bounding box information with the additional guidance provided by temporally propagated masks, improving object association accuracy and generalizability.

McByte eliminates the need for per-sequence tuning, a common limitation in many existing methods, making it more practical and adaptable across diverse datasets. Evaluations on DanceTrack, MOT17, SoccerNet, and KITTI-tracking demonstrated its consistent superiority over state-of-the-art approaches. The algorithm showed significant improvements in critical tracking metrics, such as HOTA and IDF1, while effectively handling complex scenarios like occlusion and tracking objects across extended sequences. These results highlight McByte's potential as a robust solution for diverse applications, including surveillance, autonomous driving, and sports analytics. Visual example of our designed tracker utilizing the temporally propagated mask as an association cue improving over the baseline algorithm are presented in Figure 6.

|

|

| Frame 319 (baseline) | Frame 401 (baseline) |

|

|

| Frame 319 (McByte) | Frame 401 (McByte) |

8.4 Re-Evaluating Re-ID in Multi-Object Tracking

Participants: Tomasz Stanczyk, Francois Bremond.

Re-identification (Re-ID) algorithms are widely used in tracking-by-detection pipelines for multi-object tracking (MOT) to maintain consistent object identities across frames. A detailed study was conducted to evaluate the effectiveness of Re-ID when integrated with BoT-SORT, focusing on its impact across diverse datasets and conditions. The analysis revealed that, while Re-ID can provide performance gains in controlled scenarios, its overall impact is inconsistent and highly dependent on factors such as dataset characteristics, detection quality, and the extent of fine-tuning.

In several cases, particularly with datasets featuring low-quality detections or a lack of parameter optimization, Re-ID integration negatively affected performance. This study highlighted that the benefits of Re-ID are not universally applicable and emphasized the importance of tailoring Re-ID strategies to specific datasets. These findings serve as a caution for over-reliance on Re-ID and encourage more rigorous evaluation when incorporating it into MOT systems.

8.5 Anti-forgetting adaptation for unsupervised person re-identification

Participants: Francois Bremond.

Regular unsupervised domain adaptive person re-identification (ReID) focuses on adapting a model from a source domain to a fixed target domain. However, an adapted ReID model can hardly retain previously-acquired knowledge and generalize to unseen data. In this work 16, we propose a Dual-level Joint Adaptation and Anti-forgetting (DJAA) framework, which incrementally adapts a model to new domains without forgetting source domain and each adapted target domain. We explore the possibility of using prototype and instance-level consistency to mitigate the forgetting during the adaptation. Specifically, we store a small number of representative image samples and corresponding cluster prototypes in a memory buffer, which is updated at each adaptation step. With the buffered images and prototypes, we regularize the image-to-image similarity and image-to-prototype similarity to rehearse old knowledge. After the multi-step adaptation, the model is tested on all seen domains and several unseen domains to validate the generalization ability of our method. Extensive experiments demonstrate that our proposed method significantly improves the anti-forgetting, generalization and backward-compatible ability of an unsupervised person ReID model.

8.6 Enhancing age estimation by regularizing with look-alike references and ensuring ordinal continuity

Participants: Olivier Huynh, Francois Bremond.

Age estimation is a classic problem in computer vision and can be used by Law Enforcement Agencies (LEAs) to detect minors in Child Sexual Abuse Material (CSAM). We present and aim to address two essential challenges in age estimation: the continuity of the aging process and the proper regularization of the latent space. The latter is particularly hindered by the imbalance of publicly available datasets, which is recognized as a challenge in the field.

To improve prediction results, we propose that the model leverage contextual information related to a cross-age sequence of a similar reference individual. This approach implicitly groups people of the same ethnicity, gender, etc., and provides insight into how a particular person ages. One associated challenge is to indicate to the system the labels of these references. We propose adding them as embeddings to our tokens while simultaneously training an image embedding estimator for the queried image, implemented with self-attention. We also explore the possibility of simulating this embedding across all ages and learning the target label with cross-entropy loss. Regarding regularization, we studied several ways to separate trajectories of distinct individuals using objectives such as ArcFace (CVPR'19) or triplet losses. Since our association criterion is based on look-alike features and to ensure high-quality references, we have manually annotated in FGNET and CAF datasets the association of each image from a trajectory to the images of a look-alike person. We also conducted experiments to automatically enrich our sequences with an unconstrained dataset (APPA-Real) by using a policy trained with the REINFORCE algorithm.

Simultaneously, related to the continuity of the aging process, we propose a novel constraint objective based on the reconstruction of the representation of the queried image from the reference sequence. We found that reconstruction could either collapse if poorly placed in the network or lead to learning difficulties. The optimal and most effective level is to work on the feature map given directly by the encoder, preserving a local feature scale (e.g., wrinkles).

Finally, to showcase the research work and as part of the European project HEROES, a demo tool was developed that integrates face detection and tracking. Experiments were conducted on adult content data, and the tool was used by LEAs on CSAM data with good performance.

8.7 P-Age: Pexels Dataset for Robust Spatio-Temporal Apparent Age Classification

Participants: Abid Ali.

Age estimation is a challenging task that has numerous applications. In this work 23, we propose a new direction for age classification that utilizes a video-based model to address challenges such as occlusions, low-resolution, and lighting conditions. To address these challenges, we propose AgeFormer which utilizes spatio-temporal information on the dynamics of the entire body dominating face-based methods for age classification. Our novel two-stream architecture uses TimeSformer and EfficientNet as backbones, to effectively capture both facial and body dynamics information for efficient and accurate age estimation in videos. Furthermore, to fill the gap in predicting age in real-world situations from videos, we construct a video dataset called Pexels Age (P-Age) for age classification. The proposed method achieves superior results compared to existing face-based age estimation methods and is evaluated in situations where the face is highly occluded, blurred, or masked. The method is also cross-tested on a variety of challenging video datasets such as Charades, Smarthome, and Thumos-14. The code and dataset is available on GitHub.

8.8 Synthetic data in human analysis

Participants: Francois Bremond, Antitza Dantcheva.

Deep neural networks have become prevalent in human analysis, boosting the performance of applications, such as biometric recognition, action recognition, as well as person re-identification. However, the performance of such networks scales with the available training data. In human analysis, the demand for large-scale datasets poses a severe challenge, as data collection is tedious, time-expensive, costly and must comply with data protection laws. Current research investigates the generation of synthetic data as an efficient and privacy-ensuring alternative to collecting real data in the field. This survey 17 introduces the basic definitions and methodologies, essential when generating and employing synthetic data for human analysis. We summarize current state-of-the-art methods and the main benefits of using synthetic data. We also provide an overview of publicly available synthetic datasets and generation models. Finally, we discuss limitations, as well as open research problems in this field. This survey is intended for researchers and practitioners in the field of human analysis.

8.9 LEO: Generative latent image animator for human video synthesis

Participants: Yaohui Wang, Antitza Dantcheva.

Spatio-temporal coherency is a major challenge in synthesizing high-quality videos, particularly in synthesizing human videos that contain rich global and local deformations. To resolve this challenge, previous approaches have resorted to different features in the generation process aimed at representing appearance and motion. However, in the absence of strict mechanisms to guarantee such disentanglement, a separation of motion from appearance has remained challenging, resulting in spatial distortions and temporal jittering that break the spatio-temporal coherency. Motivated by this, we here propose LEO 18, a novel framework for human video synthesis, placing emphasis on spatio-temporal coherency. Our key idea is to represent motion as a sequence of flow maps in the generation process, which inherently isolate motion from appearance. We implement this idea via a flow-based image animator and a Latent Motion Diffusion Model (LMDM). The former bridges a space of motion codes with the space of flow maps, and synthesizes video frames in a warp-and-inpaint manner. LMDM learns to capture motion prior in the training data by synthesizing sequences of motion codes. Extensive quantitative and qualitative analysis suggests that LEO significantly improves coherent synthesis of human videos over previous methods on the datasets TaichiHD, FaceForensics and CelebV-HQ. In addition, the effective disentanglement of appearance and motion in LEO allows for two additional tasks, namely infinite-length human video synthesis, as well as content-preserving video editing.

8.10 LIA: Latent image animator

Participants: Yaohui Wang, Francois Bremond, Antitza Dantcheva.

Previous animation techniques mainly focus on leveraging explicit structure representations (e.g., meshes or keypoints) for transferring motion from driving videos to source images. However, such methods are challenged with large appearance variations between source and driving data, as well as require complex additional modules to respectively model appearance and motion. Towards addressing these issues, we introduce the Latent Image Animator (LIA) 19, streamlined to animate high-resolution images. LIA is designed as a simple autoencoder that does not rely on explicit representations. Motion transfer in the pixel space is modeled as linear navigation of motion codes in the latent space. Specifically such navigation is represented as an orthogonal motion dictionary learned in a self-supervised manner based on proposed Linear Motion Decomposition (LMD). Extensive experimental results demonstrate that LIA outperforms state-of-the-art on VoxCeleb, TaichiHD, and TED-talk datasets with respect to video quality and spatio-temporal consistency. In addition, LIA is well equipped for zero-shot high-resolution image animation. Code, models, and demo video are available on GitHub.

8.11 Local Distributional Smoothing for Noise-invariant Fingerprint Restoration

Participants: Antitza Dantcheva.

Existing fingerprint restoration models fail to generalize on severely noisy fingerprint regions. To achieve noise-invariant fingerprint restoration, this work 31 proposes to regularize the fingerprint restoration model by enforcing local distributional smoothing by generating similar output for clean and perturbed fingerprints. Notably, the perturbations are learnt by virtual adversarial training so as to generate the most difficult noise patterns for the fingerprint restoration model. Improved generalization on noisy fingerprints is obtained by the proposed method on two publicly available databases of noisy fingerprints.

8.12 DiffTV: Identity-Preserved Thermal-to-Visible Face Translation via Feature Alignment and Dual-Stage Conditions

Participants: Antitza Dantcheva.

The thermal-to-visible (T2V) face translation task is essential for enabling face verification in low-light or dark conditions by converting thermal infrared faces into their visible counterparts. However, this task faces two primary challenges. First, the inherent differences between the modalities hinder the effective use of thermal information to guide RGB face reconstruction. Second, translated RGB faces often lack the identity details of the corresponding visible faces, such as skin color. To tackle these challenges, we introduce DiffTV 39, the first Latent Diffusion Model (LDM) specifically designed for T2V facial image translation with a focus on preserving identity. Our approach proposes a novel heterogeneous feature alignment strategy that bridges the modal gap and extracts both coarse- and fine-grained identity features consistent with visible images. Furthermore, a dual-stage condition injection strategy introduces control information to guide identity-preserved translation. Experimental results demonstrate the superior performance of DiffTV, particularly in scenarios where maintaining identity integrity is critical.

8.13 GHNeRF: Learning Generalizable Human Features with Efficient Neural Radiance Fields

Participants: Di Yang, Antitza Dantcheva.

Recent advances in Neural Radiance Fields (NeRF) have demonstrated promising results in 3D scene representations, including 3D human representations. However, these representations often lack crucial information on the underlying human pose and structure, which is crucial for AR/VR applications and games. In this work 28, we introduce a novel approach, termed GHNeRF, designed to address these limitations by learning 2D/3D joint locations of human subjects with NeRF representation. GHNeRF uses a pre-trained 2D encoder streamlined to extract essential human features from 2D images, which are then incorporated into the NeRF framework in order to encode human biomechanical features. This allows our network to simultaneously learn biomechanical features, such as joint locations, along with human geometry and texture. To assess the effectiveness of our method, we conduct a comprehensive comparison with state-of-the-art human NeRF techniques and joint estimation algorithms. Our results show that GHNeRF can achieve state-of-the-art results in near real-time.

8.14 HFNeRF: Learning Human Biomechanical Features with Neural Radiance Fields

Participants: Di Yang, Antitza Dantcheva.

In recent advancements in novel view synthesis, generalizable Neural Radiance Fields (NeRF) based methods applied to human subjects have shown remarkable results in generating novel views from few images. However, this generalization ability cannot capture the underlying structural features of the skeleton shared across all instances. Building upon this, we introduce HFNeRF 29: a novel generalizable human feature NeRF aimed at generating human biomechanical features using a pre-trained image encoder. While previous human NeRF methods have shown promising results in the generation of photorealistic virtual avatars, such methods lack underlying human structure or biomechanical features such as skeleton or joint information that are crucial for downstream applications including Augmented Reality (AR)/Virtual Reality (VR). HFNeRF leverages 2D pre-trained foundation models toward learning human features in 3D using neural rendering, and then volume rendering towards generating 2D feature maps. We evaluate HFNeRF in the skeleton estimation task by predicting heatmaps as features. The proposed method is fully differentiable, allowing to successfully learn color, geometry, and human skeleton in a simultaneous manner. This paper presents preliminary results of HFNeRF, illustrating its potential in generating realistic virtual avatars with biomechanical features using NeRF.

8.15 Bipartite Graph Diffusion Model for Human Interaction Generation

Participants: Baptiste Chopin.

The generation of natural human motion interactions is a hot topic in computer vision and computer animation. It is a challenging task due to the diversity of possible human motion interactions. Diffusion models, which have already shown remarkable generative capabilities in other domains, are a good candidate for this task. In this work 27, we introduce a novel bipartite graph diffusion method (BiGraphDiff) to generate human motion interactions between two persons. Specifically, bipartite node sets are constructed to model the inherent geometric constraints between skeleton nodes during interactions. The interaction graph diffusion model is transformer-based, combining some state-of-the-art motion methods. We show that the proposed method achieves new state-of-the-art results on leading benchmarks for the human interaction generation task.

8.16 Dimitra: Audio-driven Diffusion model for Expressive Talking Head Generation

Participants: Baptiste Chopin, Antitza Dantcheva.

We propose Dimitra, a novel framework for audio-driven talking head generation, streamlined to learn lip motion, facial expression, as well as head pose motion. Specifically, we train a conditional Motion Diffusion Transformer (cMDT) by modeling facial motion sequences with 3D representation. We condition the cMDT with only two input signals, an audio- sequence, as well as a reference facial image. By extracting additional features directly from audio, Dimitra is able to increase quality and realism of generated videos. In particular, phoneme sequences contribute to the realism of lip motion, whereas text transcript to facial expression and head pose realism. Quantitative and qualitative experiments on two widely employed datasets, VoxCeleb2 and HDTF, showcase that Dimitra is able to outperform existing approaches for generating realistic talking heads imparting lip motion, facial expression, and head pose. This work was submitted to FG 2025. We extend Dimitra by minimizing the number of features extracted from the audio. Instead of text, phoneme and deep features from wav2vec, we only use deep features from Wavlm. The high-quality features from Wavlm are able to better encode the audio resulting in features that contains information about the phonemes and the semantics. This leads to better results quantitatively and qualitatively. Additionally we modify our training scheme by training on the HDTF dataset and finetuning on the LRW dataset. The LRW dataset contains a large number of samples representing 500 common english word, allowing Dimitra to learn better representation for the lips motion of these words increasing the overall quality of the generated videos. Finally, in an effort to propose an alternative to the widely used, but inherently flawed, syncnet based metric for lips synchronization evaluation, we propose a new way to evaluate lips synchronization. This new evaluation consists of several metrics, each evaluating an aspect of lips synchronization (e.g., the lips do not move during silence, the correct mouth shape are being generated). To extend this work beyond talking head generation, we are looking into co-speech gesture generation using 3D meshes. With this we can generate both face and full body motion related to speech resulting in more realistic videos.

8.17 Learning Effective Video Representations for Action Recognition

Participants: Di Yang, Francois Bremond.

Human action recognition is an active research field with significant contributions to applications such as home-care monitoring, human-computer interaction, and game control. However, recognizing human activities in real-world videos remains challenging, especially when learning effective video representations with a high expressive power to represent human spatio-temporal motion, view-invariant actions, complex composable actions, etc. To address this challenge, this thesis 42 makes three contributions toward learning effective video representations that can be applied and evaluated in real-world human action classification and segmentation tasks by transfer learning.

The first contribution is to improve the generalizability of human skeleton motion representation models. We propose a unified framework for real-world skeleton human action recognition. The framework includes a novel skeleton model that not only effectively learns spatio-temporal features on human skeleton sequences but also generalizes across datasets.

The second contribution extends the proposed framework by introducing two novel joint skeleton action generation and representation learning frameworks for different downstream tasks. The first is a self-supervised framework for learning from synthesized composable motions for skeleton-based action segmentation. The second is a View-invariant model for self-supervised skeleton action representation learning that can deal with large variations across subjects and camera viewpoints.

The third contribution targets general RGB-based video action recognition. Specifically, a time-parameterized contrastive learning strategy is proposed. It captures time-aware motions to improve the performance of action classification in fine-grained and human-oriented tasks. Experimental results on benchmark datasets demonstrate that the proposed approaches achieve state-of-the-art performance in action classification and segmentation tasks. The proposed frameworks improve the accuracy and interpretability of human activity recognition and provide insights into the underlying structure and dynamics of human actions in videos.

Overall, this thesis contributes to the field of video understanding by proposing novel methods for skeleton-based action representation learning, and general RGB video representation learning. Such representations benefit both action classification and segmentation tasks.

8.18 CM3T: Framework for Efficient Multimodal Learning for Inhomogeneous Interaction Datasets

Participants: Tanay Agrawal, Mohammed Guermal, Michal Balazia, Francois Bremond.

(Accepted for WACV 2025)

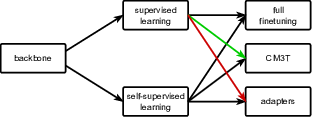

Challenges in cross-learning involve inhomogeneous or even inadequate amount of training data and lack of resources for retraining large pretrained models. Inspired by transfer learning techniques in NLP, adapters and prefix tuning, this work presents a new model-agnostic plugin architecture for cross-learning, called CM3T, that adapts transformer-based models to new or missing information. We introduce two adapter blocks 21: multi-head vision adapters for transfer learning and cross-attention adapters for multimodal learning. Training becomes substantially efficient as the backbone and other plugins do not need to be finetuned along with these additions. Comparative and ablation studies on three datasets Epic-Kitchens-100, MPIIGroupInteraction and UDIVA v0.5 show efficacy of this framework on different recording settings and tasks. With only 12.8% trainable parameters compared to the backbone to process video input and only 22.3% trainable parameters for two additional modalities, we achieve comparable and even better results than the state-of-the-art. CM3T has no specific requirements for training or pretraining and is a step towards bridging the gap between a general model and specific practical applications of video classification. Figure 8 illustrates a representation of the main problem CM3T aims to solve.

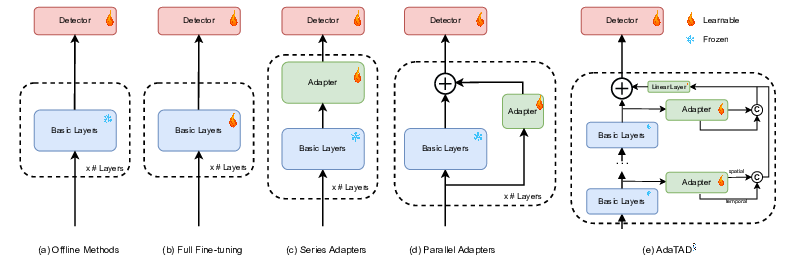

8.19 Scaling Action Detection: AdaTAD++ with Transformer-Enhanced Temporal-Spatial Adaptation

Participants: Tanay Agrawal, Abid Ali, Francois Bremond.

Temporal Action Detection (TAD) is essential for understanding long-form videos by detecting and segmenting actions within untrimmed sequences. Although recent innovations, such as Temporal Informative Adapters (TIA), have advanced the scalability of TAD, memory constraints persist, specifically during training, hindering the handling of larger video inputs. This paper presents an enhanced TIA framework that introduces independently trainable temporal and spatial adapters. Our two-step training protocol initially trains low spatial and high temporal resolution, followed by high spatial and low temporal resolution, allowing higher input resolutions during inference. We further replace the 1D convolutional layers with a transformer encoder for temporal feature extraction to capture long-range dependencies more effectively. Extensive experiments on ActivityNet-1.3, THUMOS14, and EPIC-Kitchens 100 datasets demonstrate our approach's ability to balance performance gains with computational feasibility, achieving state-of-the-art results in both detection accuracy and scalability, see Figure 9. This work provides a pathway for future TAD models to scale efficiently, leveraging high-resolution data while minimizing memory overhead.

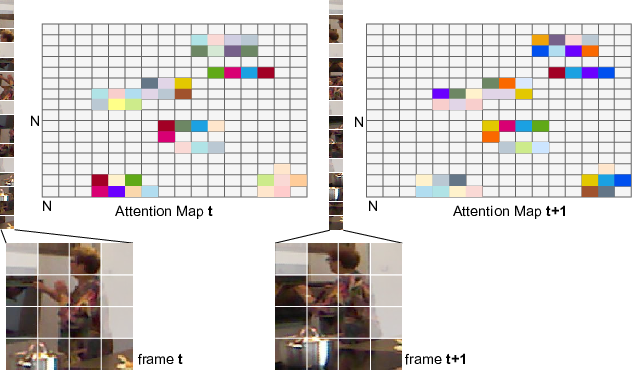

8.20 AM Flow: Adapters for Temporal Processing in Action Recognition

Participants: Tanay Agrawal, Abid Ali, Antitza Dantcheva, Francois Bremond.

Deep learning models, in particular image models, have recently gained generalizability and robustness. In this work, we propose to exploit such advances in the realm of video classification. Video foundation models suffer from the requirement of extensive pretraining and a large training time. Towards mitigating such limitations, we propose "Attention Map (AM) Flow" for image models, a method for identifying pixels relevant to motion in each input video frame. In this context, we propose two methods to compute AM flow, depending on camera motion. AM flow allows the separation of spatial and temporal processing, while providing improved results over combined spatio-temporal processing (as in video models). Adapters, one of the popular techniques in parameter efficient transfer learning, facilitate the incorporation of AM flow into pretrained image models, mitigating the need for full-finetuning. We extend adapters to "temporal processing adapters" by incorporating a temporal processing unit into the adapters, see Figure 10. Our work achieves faster convergence, therefore reducing the number of epochs needed for training. Moreover, we endow an image model with the ability to achieve state-of-the-art results on popular action recognition datasets. This reduces training time and simplifies pretraining. We present experiments on Kinetics-400, Something-Something v2, and Toyota Smarthome datasets, showcasing state-of-the-art or comparable results.

8.21 View-invariant Skeleton Action Representation Learning via Motion Retargeting

Participants: Di Yang, Antitza Dantcheva, Francois Bremond.

Current self-supervised approaches for skeleton action representation learning often focus on constrained scenarios, where videos and skeleton data are recorded in laboratory settings. When dealing with estimated skeleton data in real-world videos, such methods perform poorly due to the large variations across subjects and camera viewpoints. To address this issue, we introduce ViA 20, a novel View-Invariant Autoencoder for self-supervised skeleton action representation learning. ViA leverages motion retargeting between different human performers as a pretext task, in order to disentangle the latent action-specific `Motion' features on top of the visual representation of a 2D or 3D skeleton sequence. Such `Motion' features are invariant to skeleton geometry and camera view and allow ViA to facilitate both, cross-subject and cross-view action classification tasks. We conduct a study focusing on transfer-learning for skeleton-based action recognition with self-supervised pre-training on real-world data (e.g., Posetics). Our results showcase that skeleton representations learned from ViA are generic enough to improve upon state-of-the-art action classification accuracy, not only on 3D laboratory datasets such as NTU-RGB+D 60 and NTU-RGB+D 120, but also on real-world datasets where only 2D data are accurately estimated, e.g., Toyota Smarthome, UAV-Human and Penn Action.

8.22 Human Activity Recognition in Videos

Participants: Mohammed Guermal, Francois Bremond.

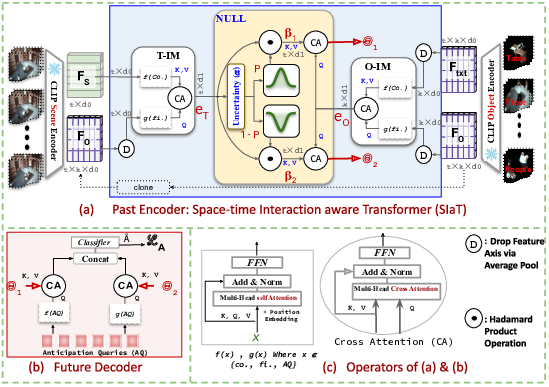

Understanding actions in videos is a pivotal aspect of computer vision with profound implications across various domains. As our reliance on visual data continues to surge, the ability to comprehend and interpret human actions in videos is necessary for advancing technologies in surveillance, healthcare, autonomous systems, and human-computer interaction. Moreover, there is an unprecedented economic and societal demand for robots that can assist humans in their industrial work and daily life activities. Hence, understanding human behavior and their activities would be very helpful and would facilitate the development of such robots. The accurate interpretation of actions in videos serves as a cornerstone for the development of intelligent systems that can navigate and respond effectively to the complexities of the real world. Computer Vision has made huge progress with the rise of deep learning methods such as convolutional neural networks (CNNs) and more lately transformers. However, when it comes to video processing, it is still limited compared to static images. In this thesis 41, we focus on action understanding and we divide it into two main parts: action recognition and action detection. Mainly, action understanding algorithms face the following challenges: 1) temporal and spatial analysis, 2) fine grained actions, and 3) temporal modeling.

In this thesis, we introduce in more detail the different aspects and key challenges of action understanding. After that, we introduce our contributions and solutions on how to deal with these challenges. We focus mainly on recognizing fine-grained actions using spatio-temporal object semantics and their dependencies in space and time. We tackle also action detection in real-time and anticipation by introducing a new joint model of action anticipation and online action detection for real-life scenarios applications of action detection. Finally, we discuss some ongoing and future works. All our contributions were extensively evaluated on challenging benchmarks and outperformed previous works.

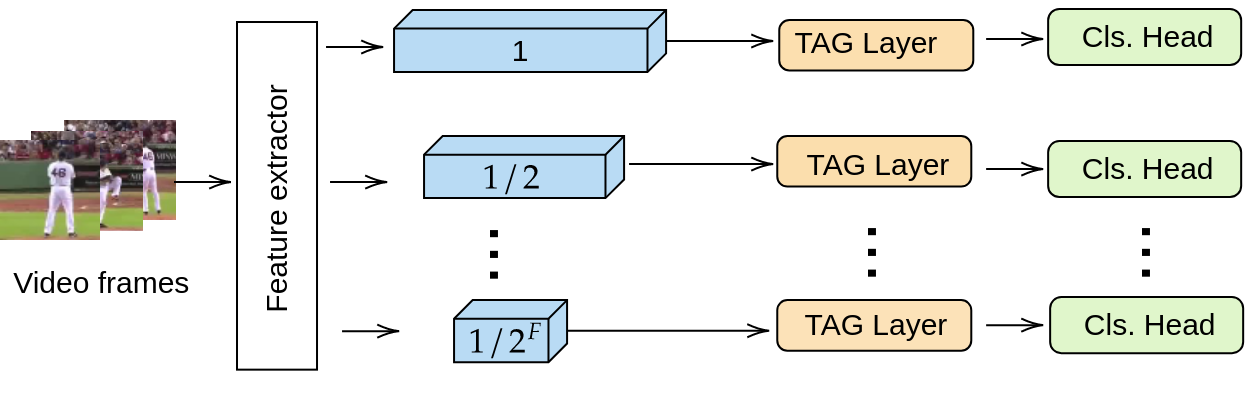

8.23 Introducing Gating and Context into Temporal Action Detection

Participants: Aglind Reka, Diana Borza, Michal Balazia, Francois Bremond.

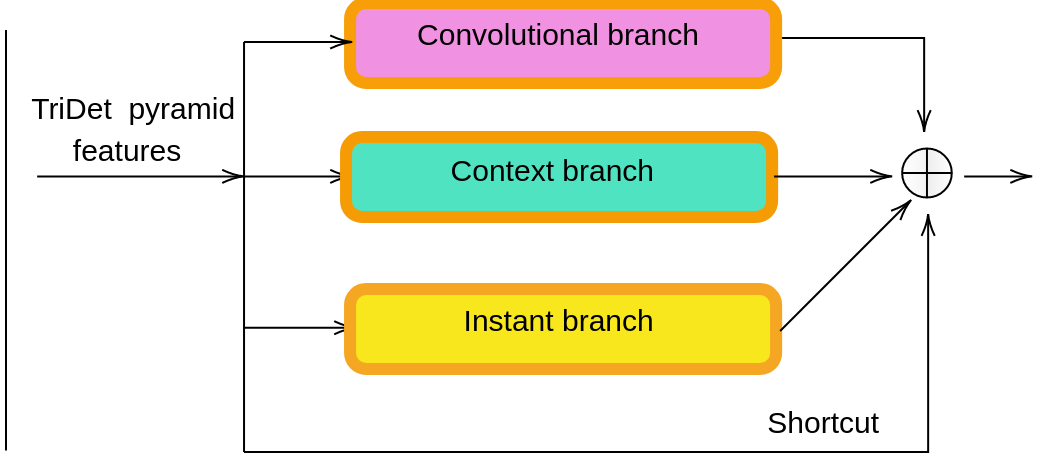

Temporal Action Detection (TAD), the task of localizing and classifying actions in untrimmed video, remains challenging due to action overlaps and variable action durations. Recent findings suggest that TAD performance is dependent on the structural design of transformers rather than on the self-attention mechanism. Building on this insight, we propose a refined feature extraction process through lightweight, yet effective operations. First, we employ a local branch that employs parallel convolutions with varying window sizes to capture both fine-grained and coarse-grained temporal features. This branch incorporates a gating mechanism to select the most relevant features. Second, we introduce a context branch that uses boundary frames as key-value pairs to analyze their relationship with the central frame through cross-attention. The proposed method 34 captures temporal dependencies and improves contextual understanding.

This work introduces a one-stage TAD model, built on top of the TriDet architecture. As its predecessor, the model comprises three modules: a video feature extractor, a feature pyramid extractor that progressively down-samples the video features to effectively handle actions of different lengths, and a boundary-oriented Trident-head for action localization and classification. Figure 11 shows the model consisting of a video feature extractor, a feature pyramid extractor, and a boundary-oriented head for action localization and classification. Figure 11 also depicts the structure of the proposed Temporal Attention Gating layer.

Conducted on two TAD benchmarks, EPIC-KITCHEN 100 and THUMOS14, our experiments confirm the efficacy of our proposed model. The results demonstrate an improvement in detection performance compared to existing methods, underlining the benefits of the proposed architectural design.

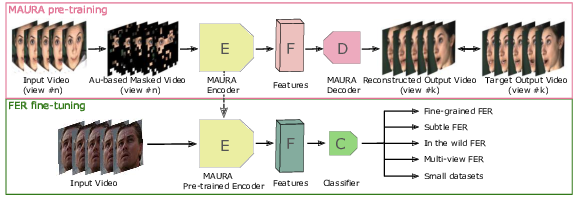

8.24 MAURA: Video Representation Learning for Conversational Facial Expression Recognition Guided by Multiple View Reconstruction

Participants: Valeriya Strizhkova, Antitza Dantcheva, Francois Bremond.

The paper 36 introduces MAURA (Masking Action Units and Reconstructing Multiple Angles), a novel self-supervised pre-training method for conversational facial expression recognition (cFER), see Figure 12. MAURA addresses challenges such as fine-grained emotional expressions, low-intensity emotions, extreme face angles, and limited datasets. Unlike existing methods, MAURA incorporates an innovative masking and reconstruction strategy that focuses on active facial muscle movements (Action Units) and reconstructs synchronized multi-view videos to capture dependencies between muscle movements.

The MAURA framework uses an asymmetric encoder-decoder architecture, where the encoder processes videos with masked Action Units, and the decoder reconstructs videos from different angles. This approach enables the model to learn robust, transferable video representations, capturing subtle emotional expressions even in challenging conditions.

The method demonstrates superior performance over state-of-the-art techniques in various tasks, including low-intensity, fine-grained, multi-view, and in-the-wild facial expression recognition. Experiments on datasets such as MEAD, DFEW, CMU-MOSEI, and MFA highlight MAURA’s capability to improve emotion classification accuracy, particularly in cases with limited training data and complex visual conditions.

MAURA’s contributions include a novel masking strategy, multi-view representation learning, and significant improvements in emotion recognition tasks. The study concludes with future directions, including integrating additional modalities (e.g., audio and language) and exploring other applications like lip synchronization and DeepFake detection.

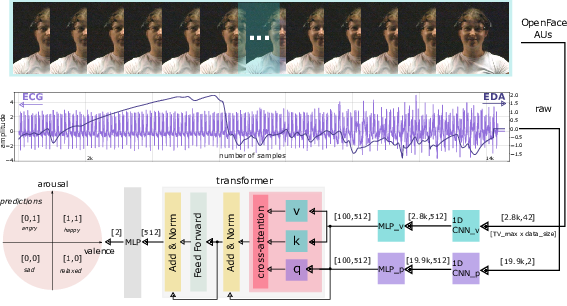

8.25 MVP: Multimodal Emotion Recognition based on Video and Physiological Signals

Participants: Valeriya Strizhkova, Michal Balazia, Antitza Dantcheva, Francois Bremond.

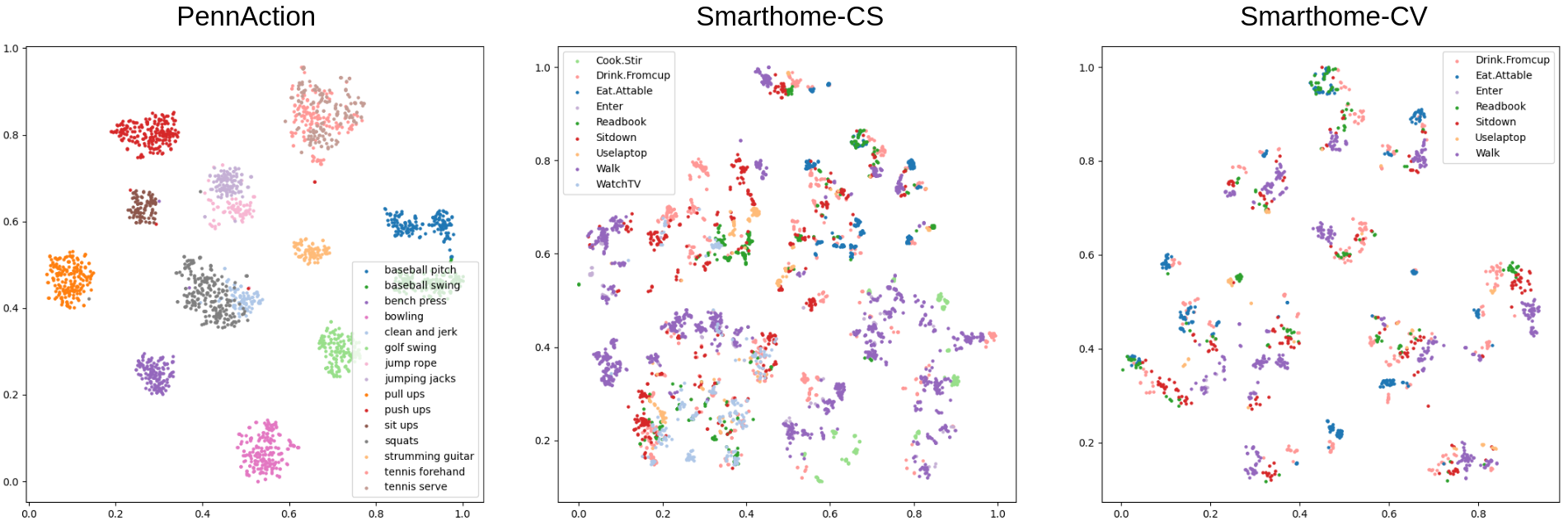

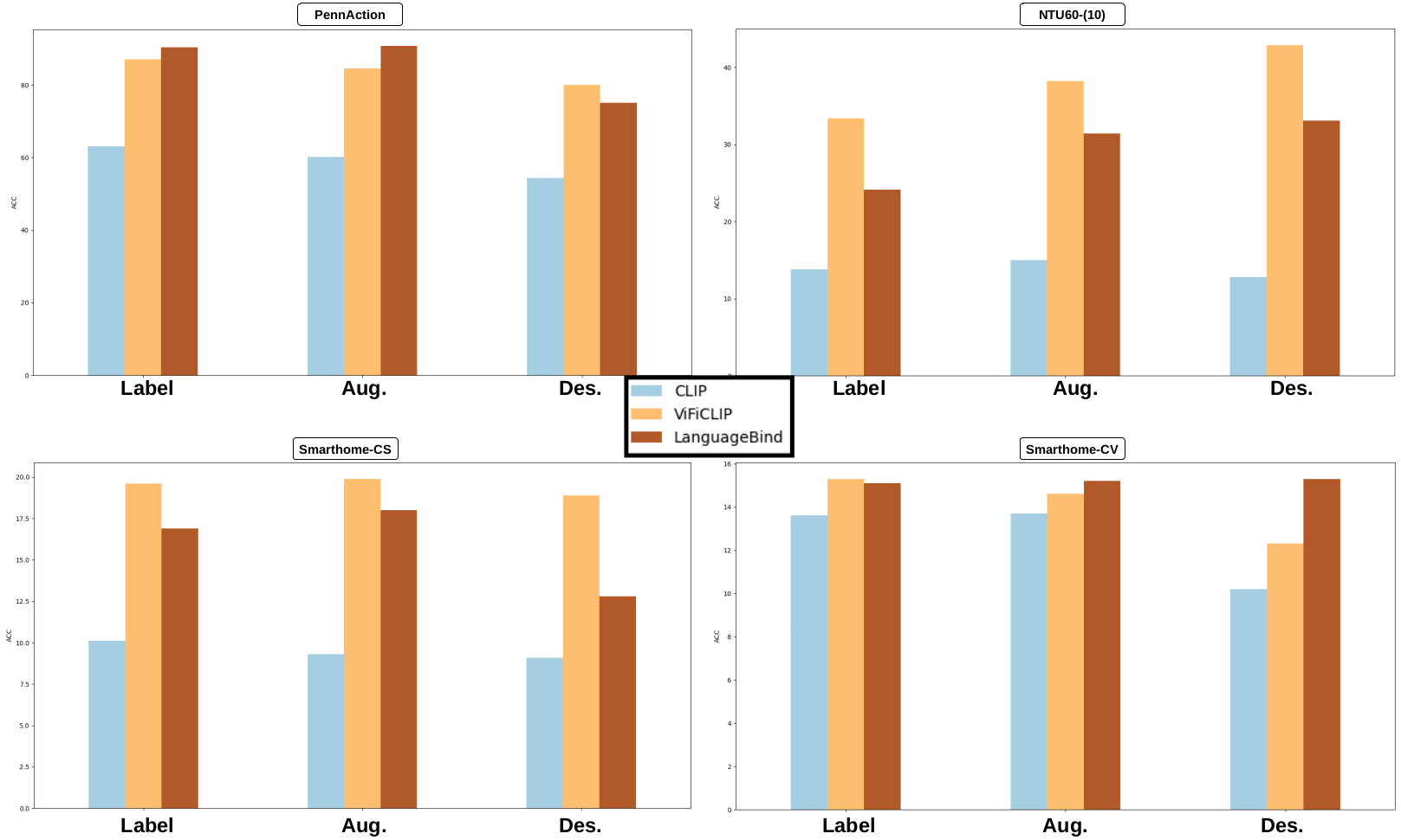

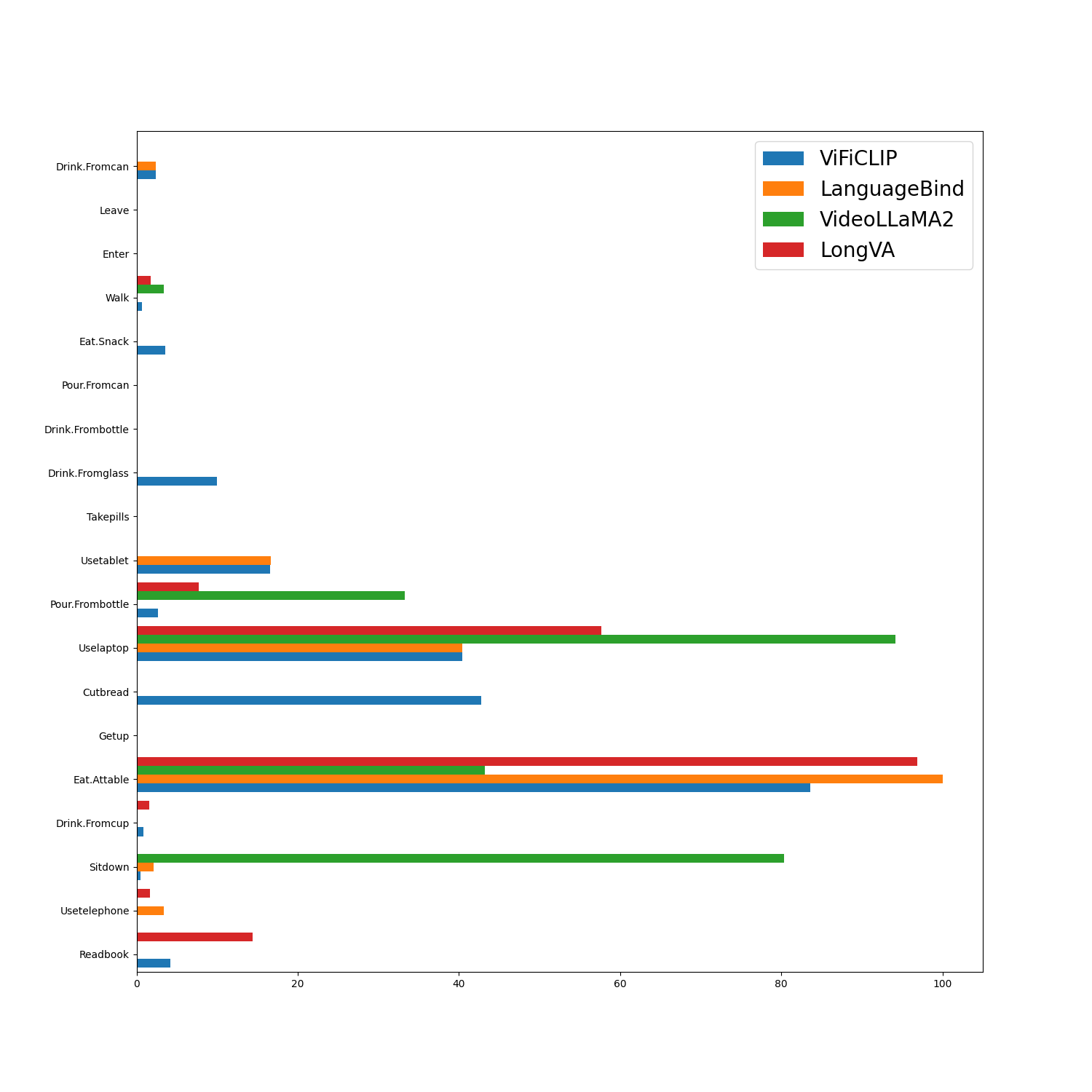

The paper 37 introduces the Multimodal for Video and Physio (MVP) architecture, designed to advance emotion recognition by fusing video and physiological signals. Unlike traditional methods that rely on classic machine learning, MVP leverages deep learning and transformer-based architectures to handle long input sequences (1-2 minutes). The model integrates video features (Action Units or deep learning-extracted representations) with physiological signals (e.g., ECG and EDA), employing cross-attention to learn dependencies across modalities effectively.