Section: Overall Objectives

Scientific Grounds

Public-key cryptography is our main application target. We are interested in the study of the cryptographic primitives that serve as a basis for the most widespread protocols.

Since the early days of public-key cryptography, and through the practices and international standards that have been established for several decades, the most widespread cryptographic primitives have been the RSA cryptosystem, as well as the Diffie–Hellman key exchange using multiplicative groups of finite fields. The level of security provided by these cryptographic primitives is related to the hardness of the underlying mathematical problems, which are integer factorization and the discrete logarithm problem. The complexity of attacking them is known to be subexponential in the public key size, and more precisely written as $L_N(1/3, c)$ for factoring an integer $N$, where the notation $L_N(\alpha, c)$ stands for

$$L_N(\alpha, c) = \exp\left(c\,(1+o(1))\,(\log N)^{\alpha}\,(\log\log N)^{1-\alpha}\right).$$

This complexity is achieved with the Number Field Sieve (NFS) algorithm and its many derivatives. This means that as the desired security level grows, the matching public key size grows roughly like the cube of the security level. As to how these complexity estimates translate into concrete assessments and recommendations, the hard facts are the computational records that are set periodically by academics, and used as key ingredients by governmental agencies issuing recommendations for industry [31], [18].
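The growth of the attack cost with the key size can be illustrated with a minimal sketch. It assumes the standard L-notation, $L_N(\alpha, c) = \exp(c\,(1+o(1))\,(\log N)^{\alpha}(\log\log N)^{1-\alpha})$, with $\alpha = 1/3$ and the NFS constant $c = (64/9)^{1/3}$, and it simply sets the $o(1)$ term to zero. The function names are hypothetical and chosen for illustration. Because the $o(1)$ term is dropped, the figures are not calibrated against computational records and should not be read as key-size recommendations; they only show the shape of the curve.

```python
import math

# Constant of the general number field sieve: c = (64/9)^(1/3), about 1.923.
NFS_CONSTANT = (64 / 9) ** (1 / 3)


def l_notation_log2(bits, alpha, c):
    """Return log2 of L_N(alpha, c) for an N of `bits` bits.

    Assumption: the o(1) term of the L-notation is set to 0, so this is
    an uncalibrated asymptotic estimate, not a concrete attack cost.
    """
    ln_n = bits * math.log(2)  # natural logarithm of N
    ln_cost = c * ln_n ** alpha * math.log(ln_n) ** (1 - alpha)
    return ln_cost / math.log(2)  # convert from nats to bits


def nfs_security_bits(modulus_bits):
    """Crude NFS cost estimate, in bits, for a modulus of the given size."""
    return l_notation_log2(modulus_bits, 1 / 3, NFS_CONSTANT)


if __name__ == "__main__":
    for bits in (1024, 2048, 3072, 4096, 8192):
        estimate = nfs_security_bits(bits)
        print(f"{bits:5d}-bit modulus: roughly 2^{estimate:.0f} operations")
```

Running the script shows that multiplying the modulus size by eight increases the estimated security level by far less than a factor of eight, which is the roughly cubic relation described above seen from the other direction.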

Software for NFS is obviously the entry point to computational records. Few complete NFS implementations exist, and their improvement is of crucial importance for better assessment of the hardness of the key cryptographic primitives considered. Here, “improvement” may be understood in many ways: better algorithms (outperforming the NFS algorithm as a whole is certainly a tremendous improvement, but replacing one of its numerous substeps is one, too), better implementations, better parallelization, or better adaptation to suitable hardware. The numerous sub-algorithms of NFS strongly depend on arithmetic efficiency. This concerns various mathematical objects, from integers and polynomials to ideals in number fields, lattices, or linear algebra.

Since the early 1990s, no new algorithm has improved on the complexity of NFS. As it is used in practice, the algorithm has complexity $L_N(1/3, (64/9)^{1/3})$ for factoring general integers or for computing discrete logarithms in prime fields of similar size (the so-called “multiple polynomial” variants have better complexity by a very thin margin, but this has not yet yielded a practical improvement). Given the wide use of the underlying hard problems, progress in this area is of utmost importance. In 2013, several new algorithms lowered the complexity of the discrete logarithm problem in small-characteristic fields, which is a closely related problem, culminating in a heuristic quasi-polynomial time algorithm [20], [27], [26], [24]. A stream of computational records has been obtained since 2013 with these algorithms, using in particular techniques from polynomial system solving, or from Galois theory. These new algorithms, together with these practical realizations, have had a very strong impact on the use of small-characteristic fields for cryptography (now clearly unsuitable), as well as on pairings on elliptic curves over small-characteristic finite fields (which are also no longer considered safe to use).

While it is relatively easy to set public key sizes for RSA or Diffie–Hellman that are “just above” the reach of academic computing power with NFS, the sensible cryptographic choice is to aim at security parameters that are well above this feasibility limit, in particular because assessing this limit precisely is in fact a very difficult problem. In line with the security levels offered by symmetric primitives such as AES-128, public key sizes should be chosen so that with current algorithmic knowledge, an attacker would need at least $2^{128}$ elementary operations to solve the underlying hard problem. Such security parameters would call for RSA key sizes above 3,000 bits, which is seldom seen, except in contexts where computing power is plentiful anyway.

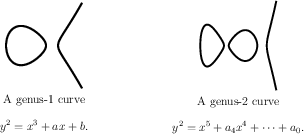

Since the mid-1980s, elliptic curves, and more generally Jacobians of algebraic curves, have been proposed as alternative mathematical settings for building cryptographic primitives.

The discrete logarithm problem in these groups is formidably hard, and in comparison to the situation with the traditional primitives mentioned above, the cryptanalysis algorithms are such that the appropriate public-key size grows only linearly with the desired security level: a 256-bit public key, using algebraic curves, is well suited to match the hardness of AES-128. This asset makes algebraic curves more attractive for the future of public-key cryptography.
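The linear relation between key size and security level can be made explicit with a short worked estimate. It relies on an assumption that is standard but not spelled out above: for a well-chosen curve, the best known attacks are generic square-root algorithms such as Pollard's rho, whose cost is on the order of the square root of the group order. The symbols $n$ and $S$ are introduced here for illustration only.

```latex
% n: order of the (prime-order) group of points used by the cryptosystem
% S: target security level in bits, i.e. an attack should cost about 2^S operations
\[
  \text{attack cost} \approx \sqrt{n} = 2^{S}
  \quad\Longrightarrow\quad
  \log_2 n \approx 2S ,
  \qquad\text{so } S = 128 \text{ gives } \log_2 n \approx 256 .
\]
```

Under this assumption, doubling the security level only doubles the key size, in contrast with the much faster growth imposed by NFS on RSA and finite-field Diffie–Hellman keys.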

Challenges related to algebraic curves in cryptology are rather various, and call for expertise in several areas. Suggesting curves to be used in the cryptographic context requires solving the point counting problem. This may be done by variants of the Schoof–Elkies–Atkin algorithm and its generalizations (which, in genus 2, require arithmetic modulo multivariate systems of equations), or alternatively the use of the complex multiplication method, a rich theory that opens the way to several problems in computational number theory.

The long-awaited transition from the legacy primitives to primitives based on curves is ready to happen, and is currently slowed down only by the need to agree on a new set of elliptic curves (not because of any attack, but because of skepticism over how the currently widespread ones have been generated). The Internet Research Task Force completed a standardization proposal in 2015 [30]. In this context, the recommended curves are not of the complex multiplication family; instead, they enjoy properties that allow fast implementation and avoid a few implementation difficulties. They are also naturally chosen to be immune to the few known attacks on the discrete logarithm problem for curves. No curve of genus 2 has made its way to the standardization process so far; however, one candidate exists for the 128-bit security level [23].

The discrete logarithm problem on curves is very hard. Some results have nevertheless been obtained for curves over extension fields, using techniques such as the Weil descent, or the point decomposition problem. In this context, the algorithmic setup connects to polynomial system solving, fast arithmetic, and linear algebra.

Another possible route for transitioning away from RSA and finite field-based cryptography is the switch to “post-quantum” cryptographic primitives. Public-key cryptographic primitives that rely on mathematical problems related to Euclidean lattices or coding theory have an advantage: they would resist the potential advent of a quantum computer. Research on these topics is quite active, and there is no doubt that when the efficiency challenges that are currently impeding their deployment are overcome, the standardization of some post-quantum cryptographic primitives will be a worthwhile addition to the general cryptographic portfolio. The NSA has recently devoted an intriguing position text to this topic [32] (for a glimpse of some of the reactions within the academic community, the reference [29] is useful). Post-quantum cryptography, as a research topic, is complementary to the topics we address most, which are NFS and algebraic curves. We are confident that, at the very least for the next decade, primitives based on integer factoring, finite fields, and algebraic curves will continue to hold the lion's share in the cryptographic landscape. We also expect that before the advent of standardized and widely developed post-quantum cryptographic primitives, the primitives based on algebraic curves will become dominant (despite the apparent restraint from the NSA on this move).

We acknowledge that the focus on cryptographic primitives is part of a larger picture. Cryptographic primitives are part of cryptographic protocols, which eventually become part of cryptographic software. All these steps constitute research topics in their own right, and need to be scrutinized (as part of independent research efforts) in order to be considered as dependable building blocks. This being said, the interplay of the different aspects, from primitives to protocols, sometimes spawns very interesting and fruitful collaborations. A very good example of this is the LogJam attack [17].