Keywords

Computer Science and Digital Science

- A5.2. Data visualization

- A5.5. Computer graphics

- A5.5.1. Geometrical modeling

- A5.5.2. Rendering

- A5.5.3. Computational photography

- A5.5.4. Animation

Other Research Topics and Application Domains

- B5.5. Materials

- B5.7. 3D printing

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.2.4. Theater

- B9.6.6. Archeology, History

1 Team members, visitors, external collaborators

Research Scientists

- Nicolas Holzschuch [Team leader, Inria, Senior Researcher, HDR]

- Fabrice Neyret [CNRS, Senior Researcher, HDR]

- Cyril Soler [Inria, Researcher, HDR]

Faculty Members

- Georges-Pierre Bonneau [Univ Grenoble Alpes, Professor, HDR]

- Joëlle Thollot [Institut polytechnique de Grenoble, Professor, HDR]

- Romain Vergne [Univ Grenoble Alpes, Associate Professor]

Post-Doctoral Fellow

- Maxime Garcia [Inria]

PhD Students

- Alban Fichet [Inria, until Jan 2020]

- Morgane Gerardin [Univ Grenoble Alpes]

- Nolan Mestres [Univ Grenoble Alpes]

- Ronak Molazem [Inria]

- Vincent Tavernier [Univ Grenoble Alpes]

- Sunrise Wang [Inria]

Interns and Apprentices

- Mohamed Amine Farhat [Inria, from Feb 2020 until Jul 2020]

- Marco Freire [Inria, from Feb 2020 until Jul 2020]

- Erwan Leria [Inria, from Apr 2020 until Sep 2020]

- Axel Ricard [Institut polytechnique de Grenoble, from Feb 2020 until May 2020]

- Bastien Zigmann [Inria, until Sep 2020]

Administrative Assistant

- Diane Courtiol [Inria]

External Collaborator

- Mohamed Amine Farhat [Univ Grenoble Alpes]

2 Overall objectives

Computer-generated pictures and videos are now ubiquitous, both for leisure activities, such as special effects in motion pictures, feature movies and video games, and for more serious activities, such as visualization and simulation.

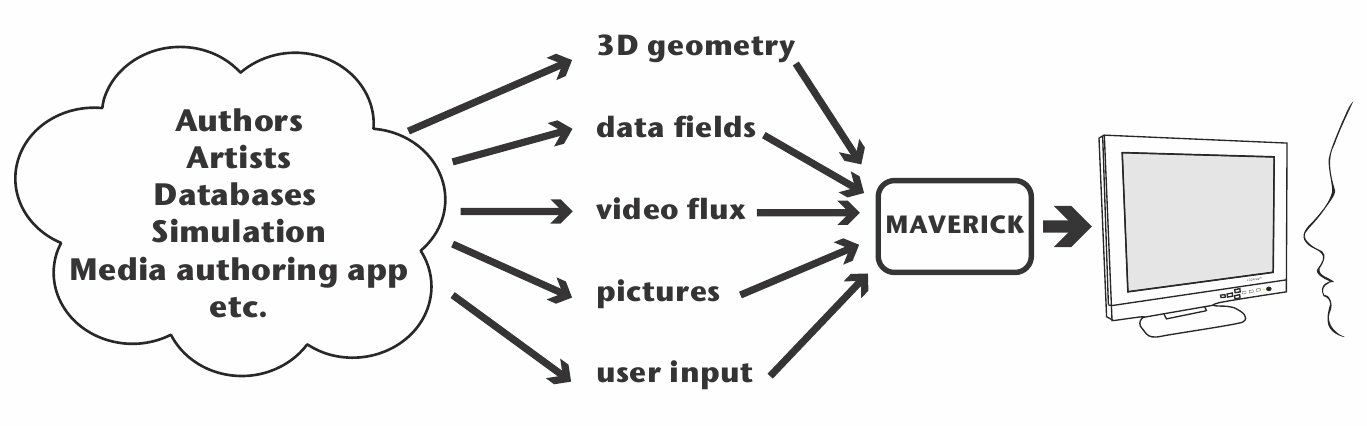

Maverick was created as a research team in January 2012 and upgraded as a research project in January 2014. We deal with image synthesis methods. We place ourselves at the end of the image production pipeline, when the pictures are generated and displayed (see figure 1). We take many possible inputs: datasets, video flows, pictures and photographs, (animated) geometry from a virtual world... We produce as output pictures and videos.

These pictures will be viewed by humans, and we consider this fact as an important point of our research strategy, as it provides the benchmarks for evaluating our results: the pictures and animations produced must be able to convey the message to the viewer. The actual message depends on the specific application: data visualization, exploring virtual worlds, designing paintings and drawings... Our vision is that all these applications share common research problems: ensuring that the important features are perceived, avoiding cluttering or aliasing, efficient internal data representation, etc.

Computer Graphics, and especially Maverick, is at the crossroads between fundamental research and industrial applications. We are both looking at the constraints and needs of application users and targeting long-term research issues such as sampling and filtering.

The Maverick project-team aims at producing representations and algorithms for efficient, high-quality computer generation of pictures and animations through the study of four research problems:

- Computer Visualization, where we take as input a large localized dataset and represent it in a way that will let an observer understand its key properties,

- Expressive Rendering, where we create an artistic representation of a virtual world,

- Illumination Simulation, where our focus is modelling the interaction of light with the objects in the scene,

- Complex Scenes, where our focus is rendering and modelling highly complex scenes.

The heart of Maverick is understanding what makes a picture useful, powerful and interesting for the user, and designing algorithms to create these pictures.

We will address these research problems through three interconnected approaches:

- working on the impact of pictures, by conducting perceptual studies, measuring and removing artefacts and discontinuities, evaluating the user response to pictures and algorithms,

- developing representations for data, through abstraction, stylization and simplification,

- developing new methods for predicting the properties of a picture (e.g. frequency content, variations) and adapting our image-generation algorithm to these properties.

A fundamental element of the Maverick project-team is that the research problems and the scientific approaches are all cross-connected. Research on the impact of pictures is of interest in three different research problems: Computer Visualization, Expressive rendering and Illumination Simulation. Similarly, our research on Illumination simulation will gather contributions from all three scientific approaches: impact, representations and prediction.

3 Research program

The Maverick project-team aims at producing representations and algorithms for efficient, high-quality computer generation of pictures and animations through the study of four research problems:

- Computer Visualization where we take as input a large localized dataset and represent it in a way that will let an observer understand its key properties. Visualization can be used for data analysis, for the results of a simulation, for medical imaging data...

- Expressive Rendering, where we create an artistic representation of a virtual world. Expressive rendering corresponds to the generation of drawings or paintings of a virtual scene, but also to some areas of computational photography, where the picture is simplified in specific areas to focus the attention.

- Illumination Simulation, where we model the interaction of light with the objects in the scene, resulting in a photorealistic picture of the scene. Research includes improving the quality and photorealism of pictures, including more complex effects such as depth-of-field or motion blur. We are also working on accelerating the computations, both for real-time photorealistic rendering and offline, high-quality rendering.

- Complex Scenes, where we generate, manage, animate and render highly complex scenes, such as natural scenes with forests, rivers and oceans, but also large datasets for visualization. We are especially interested in interactive visualization of complex scenes, with all the associated challenges in terms of processing and memory bandwidth.

The fundamental research interest of Maverick is, first, understanding what makes a picture useful, powerful and interesting for the user and, second, designing algorithms to create and improve these pictures.

3.1 Research approaches

We will address these research problems through three interconnected research approaches:

Picture Impact

Our first research axis deals with the impact pictures have on the viewer, and how we can improve this impact. Our research here will target:

- evaluating user response: we need to evaluate how viewers respond to the pictures and animations generated by our algorithms, through user studies, either asking viewers what they perceive in a picture or measuring how their bodies react (eye tracking, position tracking).

- removing artefacts and discontinuities: temporal and spatial discontinuities perturb viewer attention, distracting the viewer from the main message. These discontinuities occur during the picture creation process; finding and removing them is a difficult process.

Data Representation

The data we receive as input for picture generation is often unsuitable for interactive high-quality rendering: too many details, no spatial organisation... Similarly the pictures we produce or get as input for other algorithms can contain superfluous details.

One of our goals is to develop new data representations, adapted to our requirements for rendering. This includes fast access to the relevant information, but also access to the specific hierarchical level of information needed: we want to organize the data in hierarchical levels and pre-filter it, so that sampling at a given level also gives information about the underlying levels. Our research for this axis includes filtering, data abstraction, simplification and stylization.
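As an illustration of pre-filtered hierarchical levels, here is a minimal sketch on a 1D signal. It assumes a simple box filter (averaging adjacent pairs), which is only one possible choice of filter; the function name `build_pyramid` is hypothetical and not part of any Maverick software.

```python
def build_pyramid(signal):
    """Return a list of levels, finest first.

    Each coarser level is obtained by averaging adjacent pairs of the
    previous level (box filter), so a coarse sample summarizes the finer
    samples it covers.
    """
    if not signal:
        raise ValueError("signal must not be empty")
    levels = [[float(x) for x in signal]]
    while len(levels[-1]) > 1:
        fine = levels[-1]
        if len(fine) % 2:
            # Pad odd-length levels by repeating the last sample
            # (a simplification that slightly biases the boundary).
            fine = fine + [fine[-1]]
        coarse = [(fine[i] + fine[i + 1]) / 2.0 for i in range(0, len(fine), 2)]
        levels.append(coarse)
    return levels


if __name__ == "__main__":
    print(build_pyramid([1, 3, 5, 7]))
    # [[1.0, 3.0, 5.0, 7.0], [2.0, 6.0], [4.0]]
```

Reading one sample at a coarse level then returns the average of the fine samples below it, without visiting them.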

The input data can be of any kind: geometric data, such as the model of an object, scientific data before visualization, pictures and photographs. It can be time-dependent or not; time-dependent data adds a further challenge, since the algorithms must support fast updates.

Prediction and simulation

Our algorithms for generating pictures require computations: sampling, integration, simulation... These computations can be optimized if we already know the characteristics of the final picture. Our recent research has shown that it is possible to predict the local characteristics of a picture by studying the phenomena involved: the local complexity, the spatial variations, their direction...

Our goal is to develop new techniques for predicting the properties of a picture, and to adapt our image-generation algorithms to these properties, for example by sampling less in areas of low variation.
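The idea of sampling less in areas of low variation can be sketched as a budget allocation problem. The example below assumes that a per-pixel variation estimate is already available (how to predict it is the actual research question) and distributes a fixed sample budget proportionally; the function name `allocate_samples` and its parameters are hypothetical.

```python
def allocate_samples(variation, budget, min_per_pixel=1):
    """Distribute `budget` samples over pixels, proportionally to `variation`.

    Every pixel receives at least `min_per_pixel` samples; the remaining
    samples go preferentially to pixels with a high predicted variation.
    """
    n = len(variation)
    if budget < n * min_per_pixel:
        raise ValueError("budget too small for the requested minimum")
    spare = budget - n * min_per_pixel
    total = sum(variation)
    # Fall back to a uniform distribution if no variation is predicted.
    weights = variation if total > 0 else [1.0] * n
    total = total if total > 0 else float(n)
    counts = [min_per_pixel + int(spare * w / total) for w in weights]
    # Rounding down may leave a few samples unassigned: give them to the
    # pixels with the highest predicted variation.
    leftover = max(budget - sum(counts), 0)
    order = sorted(range(n), key=lambda i: weights[i], reverse=True)
    for i in order[:leftover]:
        counts[i] += 1
    return counts


if __name__ == "__main__":
    print(allocate_samples([0.0, 0.0, 1.0, 3.0], budget=12))
    # [1, 1, 3, 7]
```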

Our research problems and approaches are all cross-connected. Research on the impact of pictures is of interest in three different research problems: Computer Visualization, Expressive rendering and Illumination Simulation. Similarly, our research on Illumination simulation will use all three research approaches: impact, representations and prediction.

3.2 Cross-cutting research issues

Beyond the connections between our problems and research approaches, we are interested in several issues, which are present throughout all our research:

- Sampling: a ubiquitous process occurring in all our application domains, whether photorealistic rendering (e.g. photon mapping), expressive rendering (e.g. brush strokes), texturing, fluid simulation (Lagrangian methods), etc. When sampling and reconstructing a signal for picture generation, we have to ensure both coherence and homogeneity. By coherence, we mean not introducing spatial or temporal discontinuities in the reconstructed signal. By homogeneity, we mean that samples should be placed regularly in space and time. For a time-dependent signal, these requirements conflict with each other, opening new areas of research.

- Filtering: another ubiquitous process, occurring in all our application domains, whether in realistic rendering (e.g. for integrating height fields, normals, material properties), expressive rendering (e.g. for simplifying strokes) or textures (through non-linearity and discontinuities). It is especially relevant when we are replacing a signal or data with a lower-resolution version (for hierarchical representation); this involves filtering the data with a reconstruction kernel, representing the transition between levels.

- Performance and scalability: these are also common requirements for all our applications. We want our algorithms to be usable, which implies that they can be used on large and complex scenes, placing great importance on scalability. For some applications, we target interactive and real-time use, with an update frequency between 10 Hz and 120 Hz.

- Coherence and continuity: coherence and continuity in space and time are also a common requirement of realistic as well as expressive models, and must be ensured despite contradictory requirements. We want to avoid flickering and aliasing.

- Animation: our input data is likely to be time-varying (e.g. animated geometry, physical simulation, time-dependent dataset). A common requirement for all our algorithms and data representation is that they must be compatible with animated data (fast updates for data structures, low latency algorithms...).

3.3 Methodology

Our research is guided by several methodological principles:

- Experimentation: to find solutions and phenomenological models, we use experimentation, performing statistical measurements of how a system behaves. We then extract a model from the experimental data.

- Validation: for each algorithm we develop, we look for experimental validation: measuring the behavior of the algorithm, how it scales, how it improves over the state-of-the-art... We also compare our algorithms to the exact solution. Validation is harder for some of our research domains, but it remains a key principle for us.

- Reducing the complexity of the problem: the equations describing certain behaviors in image synthesis can have a large degree of complexity, precluding computations, especially in real time. This is true for physical simulation of fluids, tree growth, illumination simulation... We are looking for emerging phenomena and phenomenological models to describe them (see framed box “Emerging phenomena”). Using these, we simplify the theoretical models in a controlled way, to improve user interaction and accelerate the computations.

- Transferring ideas from other domains: Computer Graphics is, by nature, at the interface of many research domains: physics for the behavior of light, applied mathematics for numerical simulation, biology, algorithmics... We import tools from all these domains, and keep looking for new tools and ideas.

- Develop new fundamental tools: in situations where specific tools are required for a problem, we will proceed from a theoretical framework to develop them. These tools may in return have applications in other domains, and we are ready to disseminate them.

- Collaborate with industrial partners: we have long experience of collaboration with industrial partners. These collaborations bring us new problems to solve, with short-term or medium-term transfer opportunities. When we cooperate with these partners, we have to identify what they actually need, which can be very different from their expressed need.

4 Application domains

The natural application domain for our research is the production of digital images, for example for movies and special effects, virtual prototyping, video games... Our research has also been applied to tools for generating and editing images and textures, for example generating textures for maps. Our current application domains are:

- Offline and real-time rendering in movie special effects and video games;

- Virtual prototyping;

- Scientific visualization;

- Content modeling and generation (e.g. generating texture for video games, capturing reflectance properties, etc);

- Image creation and manipulation.

5 New software and platforms

5.1 New software

5.1.1 GRATIN

- Functional Description: Gratin is a node-based compositing software for creating, manipulating and animating 2D and 3D data. It uses an internal directed acyclic multi-graph and provides an intuitive user interface for quickly designing complex prototypes. Gratin has several properties that make it useful for researchers and students: (1) it works in real time: everything is executed on the GPU, using OpenGL, GLSL and/or CUDA; (2) it is easily programmable: users can directly write GLSL scripts inside the interface, or create new C++ plugins that will be loaded as new nodes in the software; (3) all the parameters can be animated using keyframe curves to generate videos and demos; (4) nodes, groups of nodes or full pipelines can easily be exchanged between people.

- URL: http://gratin.gforge.inria.fr/

- Authors: Romain Vergne, Pascal Barla

- Contact: Romain Vergne

- Participants: Pascal Barla, Romain Vergne

- Partner: UJF

5.1.2 HQR

- Name: High Quality Renderer

- Keywords: Lighting simulation, Materials, Plug-in

- Functional Description: HQR is a global lighting simulation platform. The HQR software is based on the photon mapping method, which is capable of solving the light balance equation and of giving a high-quality solution. Through a graphical user interface, it reads X3D scenes using the X3DToolKit package developed at Maverick, allows the user to tune several parameters, computes photon maps, and reconstructs information to obtain a high-quality solution. HQR also accepts plugins, which considerably eases the development of new algorithms for global illumination, since these benefit from the existing algorithms for handling materials, geometry and light sources.

- URL: http://artis.imag.fr/~Cyril.Soler/HQR

- Contact: Cyril Soler

- Participant: Cyril Soler

5.1.3 libylm

- Name: LibYLM

- Keyword: Spherical harmonics

- Functional Description: This library implements spherical and zonal harmonics. It provides the means to perform decompositions, manipulate spherical harmonic distributions and provides its own viewer to visualize spherical harmonic distributions.

- URL: https://launchpad.net/~csoler-users/+archive/ubuntu/ylm

- Author: Cyril Soler

- Contact: Cyril Soler

5.1.4 ShwarpIt

- Name: ShwarpIt

- Keyword: Warping

- Functional Description: ShwarpIt is a simple mobile app that allows you to manipulate the perception of shapes in images. Slide the ShwarpIt slider to the right to make shapes appear rounder. Slide it to the left to make shapes appear more flat. The Scale slider gives you control on the scale of the warping deformation.

- URL: http://bonneau.meylan.free.fr/ShwarpIt/ShwarpIt.html

- Contacts: Georges-Pierre Bonneau, Romain Vergne

5.1.5 iOS_system

- Keyword: IOS

- Functional Description: From a programmer's point of view, iOS behaves almost like a BSD Unix. Most existing open-source programs can be compiled and run on iPhones and iPads. One key exception is that there is no way to call another program (system() or fork()/exec()). This library fills the gap, providing an emulation of system() and exec() through dynamic libraries and an emulation of fork() using threads.

While threads cannot provide a perfect replacement for fork(), the result is good enough for most uses, and open-source programs can be ported to iOS with minimal effort. Examples of software ported using this library include TeX, Python, Lua and llvm/clang.

- Release Contributions: This version makes iOS_system available as Swift Packages, making the integration in other projects easier.

- URL: https://github.com/holzschu/ios_system

- Contact: Nicolas Holzschuch

5.1.6 Carnets for Jupyter

- Keywords: IOS, Python

- Functional Description: Jupyter notebooks are a very convenient tool for prototyping, teaching and research. Combining text, code snippets and the result of code execution, they allow users to write down ideas, test them, share them. Jupyter notebooks usually require connection to a distant server, and thus a stable network connection, which is not always possible (e.g. for field trips, or during transport). Carnets runs both the server and the client locally on the iPhone or iPad, allowing users to create, edit and run Jupyter notebooks locally.

- URL: https://holzschu.github.io/Carnets_Jupyter/

- Contact: Nicolas Holzschuch

5.1.7 a-Shell

- Keywords: IOS, Smartphone

- Functional Description: a-Shell is a terminal emulator for iOS. It behaves like a Unix terminal and lets the user run commands. All these commands are executed locally, on the iPhone or iPad. Commands available include standard terminal commands (ls, cp, rm, mkdir, tar, nslookup...) but also programming languages such as Python, Lua, C and C++. TeX is also available. Users familiar with Unix tools can run their favorite commands on their mobile device, on the go, without the need for a network connection.

- URL: https://holzschu.github.io/a-Shell_iOS/

- Contact: Nicolas Holzschuch

6 New results

6.1 Managing high complexity: procedural texture synthesis

6.1.1 Freely orientable microstructures for designing deformable 3D prints

Participants: Thibault Tricard, Vincent Tavernier, Cédric Zanni, Jonàs Martínez, Pierre-Alexandre Hugron, Fabrice Neyret, Sylvain Lefebvre.

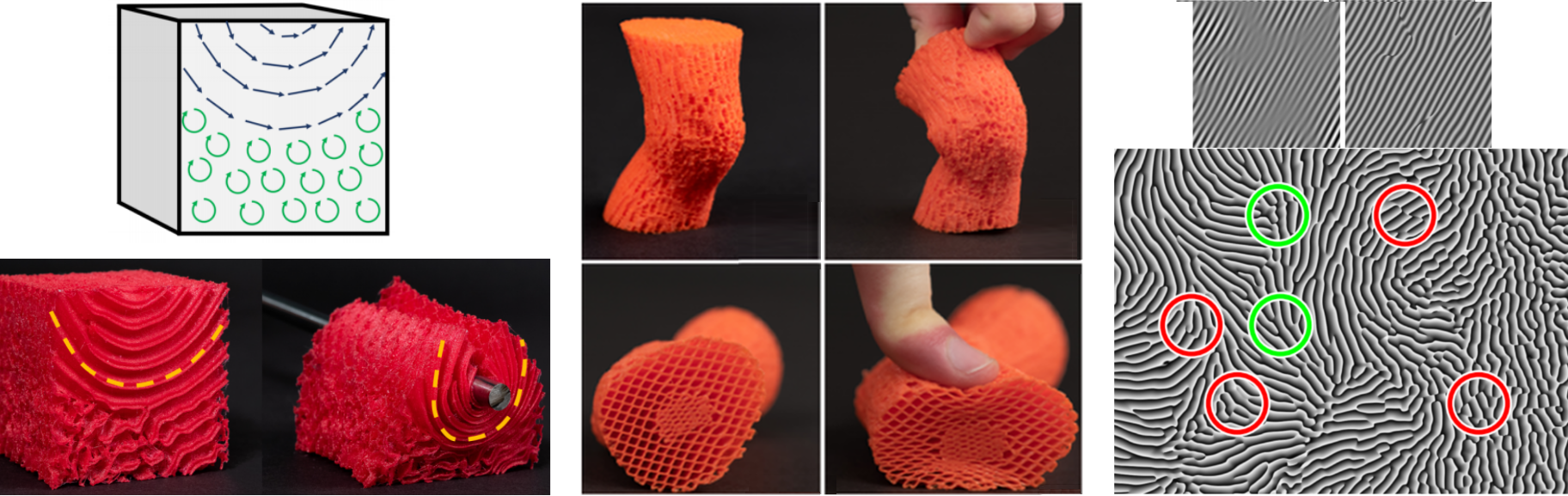

Nature offers a marvel of astonishing and rich deformation behaviors. Yet most of the objects we fabricate are comparatively inexpressive, either rigid or having simple homogeneous behaviors when interacted with. In this work, we focus on controlling how a 3D printed volume reacts under large deformations. We propose a novel microstructure that is extremely rigid along a transverse direction, while being comparatively very flexible in the orthogonal plane. By allowing free gradation of this local orientation within the object, the microstructure can be designed such that, under deformation, some distances in the volume are preserved while others freely change. This makes it possible to control the way the volume reshapes when deformed, and results in a wide range of design possibilities. Other gradations are possible, such as locally and progressively canceling the directional effect.

To synthesize the structures we propose an algorithm that builds upon the procedural texturing work we published last year, "Procedural Phasor Noise" 17, for synthesizing strip patterns. Our new algorithm produces a cellular geometry that can be fabricated reliably despite 3D printing walls at a minimal thickness, for maximal flexibility. The synthesis algorithm is efficient, and scales to large volumes. At Maverick we worked more specifically on revisiting "Procedural Phasor Noise" so as to cancel the quasi-discontinuity that could otherwise create mechanical defects at larger scales. See Figure 2.

This paper was published in ACM TOG 10 and presented at Siggraph ASIA 2020.

Figure 2: Left: Specification of the volumetric amount and direction of anisotropy, and result on a real 3D printed object. Middle: Example of a knee, which can only bend as expected, reproducing the rigid bones. Right: Two types of discontinuities appearing when turning Gabor noise into Phasor noise, which we needed to eliminate to produce a seamless microstructure.

6.1.2 Procedural modeling of 3D realistic galactic dust and nebulas

Participants: Erwan Leria, Fabrice Neyret.

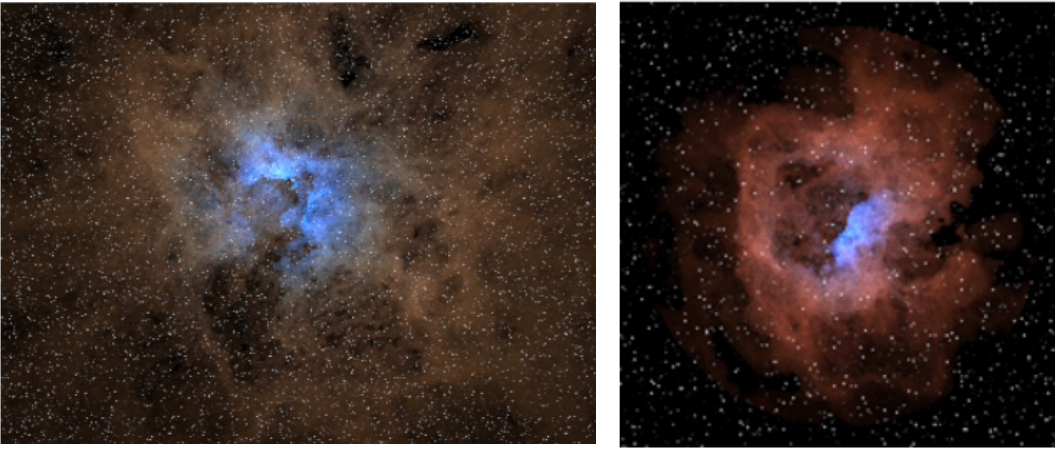

The real-time walkthrough of large high-resolution virtual galaxies requires the procedural production of a large amount of data that could not be stored and transferred to the GPU, that is not available from observation (objects hidden from Earth, 3D structure of objects that appear 2D), or that could not reasonably all be designed by artists. This was the very topic of our ANR project Galaxy / veRTIGE with RSA Cosmos and Observatoire de Paris-Meudon some years ago.

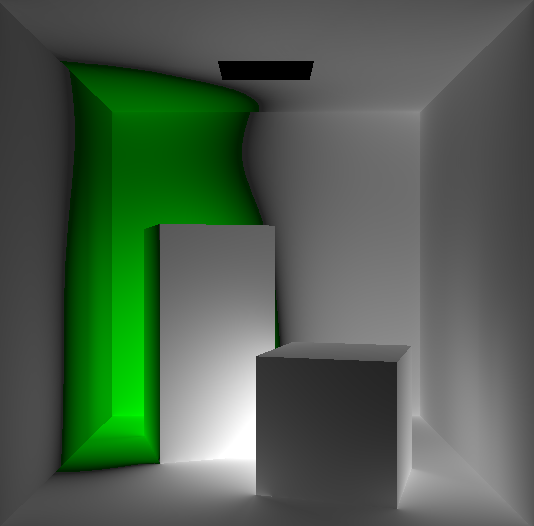

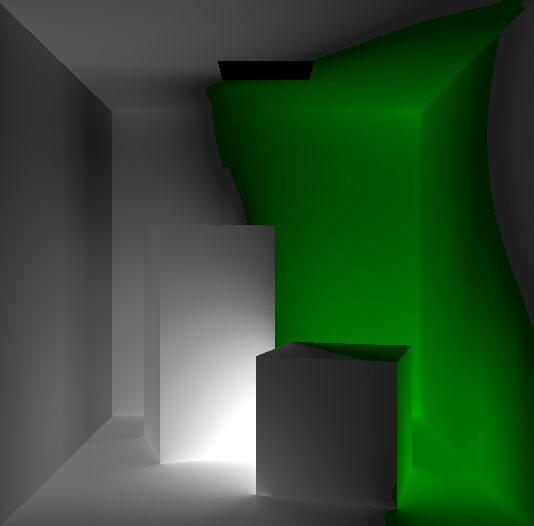

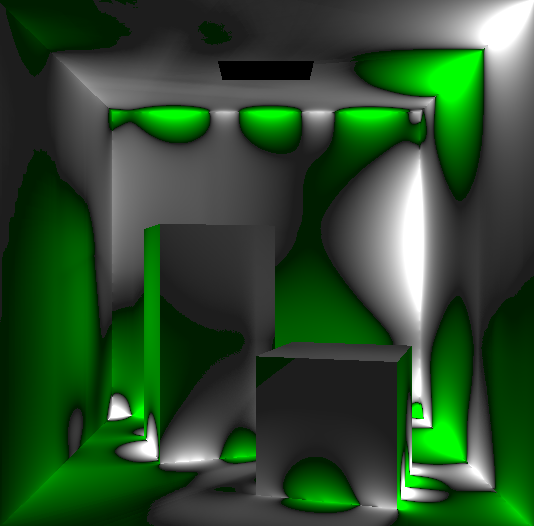

In particular, nebulae are spectacular objects. Hubble provides high-resolution 2D images of some of them, while many more are present in the galaxy. However, since the only point of view of all real astronomy images is Earth, 3D information is unavailable or poor, whereas a virtual exploration requires moving around or inside these objects. In this work we model nebulae as empty shockwave bubbles in a low-resolution dust cloud, lit by a young blue star at the center of the bubble, and enrich this model with stochastic variations and filament details. From the bubble model we can infer analytical approximations of illumination and shadowing within real-time volumetric ray tracing. For the details, we explored the use and extension of Perlin noise in order to obtain the filament aspect and to control the anisotropy field. See early results in Figure 3.

Figure 3: Two procedural nebulae rendered in real time with our system.

Online demo: https://

6.2 Light Transport Simulation

6.2.1 Spectral Analysis of the Light Transport Operator

Participants: Ronak Molazem, Cyril Soler.

In this work we study the eigenvalues and eigenfunctions of the light transport operator. While computing the spectrum of the light transport operator is a simple task in Lambertian scenes, by applying a traditional eigensolver to the linear system obtained from discretized geometry, it becomes a real challenge in general environments where discretizing the geometry is no longer possible. “Diagonalizing” light transport can however be a very effective way to perform re-lighting and rapidly compute light transport solutions.

In this work we study the properties of the spectrum of the light transport operator, which incidentally falls into the 'bad case' of non-Hermitian integral operators with discontinuous kernels. This explains the lack of practical theorems, which we have to make up for by falling back to low-level concepts such as trace-class operators.

During 2019-2020, we produced several methods to estimate the spectrum of the light transport operator, combining results from the fields of pure and abstract mathematics: resolvent theory, Monte Carlo factorisation of large matrices and Fredholm determinants. We proved in particular that it is possible to compute the eigenvalues of the light transport operator by integrating "circular" light paths of various lengths across the scene, and that eigenvalues are real in Lambertian scenes despite the operator not being normal.
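To give some intuition for the last statement, the following sketch shows the classical argument in the discretized (radiosity) setting. It is not the continuous proof referred to above; the notation ($\rho_i$ for patch reflectances, $A_i$ for patch areas, $F_{ij}$ for form factors) is introduced here for illustration, and it assumes $\rho_i > 0$ for every patch.

```latex
% Discretized Lambertian transport operator:
%   T = P F, with P = diag(rho_i) and F = (F_ij) the form-factor matrix.
% Form-factor reciprocity: A_i F_ij = A_j F_ji.
\begin{align*}
  S_{ij} &= A_i F_{ij} = A_j F_{ji} = S_{ji}
    && \text{($S$ is symmetric)} \\
  W &= \operatorname{diag}\!\left(\frac{A_i}{\rho_i}\right)
    && \text{(positive diagonal matrix)} \\
  W\,T &= \operatorname{diag}(A_i)\,F = S
    && \Longrightarrow\quad T = W^{-1} S \\
  W^{1/2}\, T\, W^{-1/2} &= W^{-1/2}\, S\, W^{-1/2}
    && \text{(symmetric, hence real eigenvalues)}
\end{align*}
% T is similar to a real symmetric matrix, so its eigenvalues are real,
% although T itself is in general neither symmetric nor normal.
```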

This work is part of the PhD of Ronak Molazem and is funded by the ANR project "CaLiTrOp". At the time of writing this (Feb. 2021), we're about to submit a paper to ACM Transactions on Graphics.


6.2.2 Capturing the indirect illumination manifold

Participants: Wilhem Barbier, Ronak Molazem, Cyril Soler.

This project aims at parameterizing the manifold of indirect illumination (with an arbitrary number of bounces) using a Gaussian Process latent variable model (a.k.a. GPLVM). This technique offers multiple advantages that suit the problem of interpolating indirect illumination when the sources of light vary (in intensity and position). We use n-dimensional measurements of direct illumination values as a latent space, to which we associate the actual indirect illumination due to the same light source. This allows us to produce indirect illumination by linearly interpolating smooth precomputed indirect illumination maps that can be stored either in image space (meaning the viewpoint is fixed) or in object space as textures.

6.2.3 Low Dimension Approximations of Light Scattering

Participants: Hugo Rens, Cyril Soler.

Light transport is known to be a low rank linear operator: the vector space formed by solutions of a light transport problem for different initial conditions is of low dimension. This is especially true when participating media are involved. Approximating this space using appropriate bases is therefore of paramount help to efficiently compute solutions to light transport problems in these environments.

We propose to compute a low-dimensional approximation of the light transport operator in participating media, taking inspiration from Monte Carlo approximation techniques for large matrices, and to use the result in a real-time rendering engine where, after some precomputation, a new image can be computed while changing the illumination and viewpoint.

This work is an ongoing collaboration with the IRIT laboratory (Toulouse, France), and is funded by the ANR project "CaLiTrOp".

6.2.4 Slope-space integrals for specular next event estimation

Participants: Guillaume Loubet, Wenzel Jakob, Nicolas Holzschuch.

Monte Carlo light transport simulations often lack robustness in scenes containing specular or near-specular materials. Widely used uni- and bidirectional sampling strategies tend to find light paths involving such materials with insufficient probability, producing unusable images that are contaminated by significant variance. In a recent paper 8, we address the problem of sampling a light path connecting two given scene points via a single specular reflection or refraction, extending the range of scenes that can be robustly handled by unbiased path sampling techniques. Our technique enables efficient rendering of challenging transport phenomena caused by such paths, such as underwater caustics or caustics involving glossy metallic objects. We derive analytic expressions that predict the total radiance due to a single reflective or refractive triangle with a microfacet BSDF and we show that this reduces to the well known Lambert boundary integral for irradiance. We subsequently show how this can be leveraged to efficiently sample connections on meshes comprised of vast numbers of triangles. Our derivation builds on the theory of off-center microfacets and involves integrals in the space of surface slopes. Our approach straightforwardly applies to the related problem of rendering glints with high-resolution normal maps describing specular microstructure. Our formulation alleviates problems raised by singularities in filtering integrals and enables a generalization of previous work to perfectly specular materials. We also extend previous work to the case of GGX distributions and introduce new techniques to improve accuracy and performance.
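For readers unfamiliar with the Lambert boundary integral mentioned above, here is a minimal sketch of the classical formula for a diffuse polygon. It is not the slope-space derivation of the paper. The receiving point is assumed to be at the origin, the polygon is assumed to lie entirely above the horizon of the receiver, and the function name `lambert_form_factor` is hypothetical.

```python
import math


def _cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def _dot(u, v):
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def _norm(u):
    return math.sqrt(_dot(u, u))


def lambert_form_factor(vertices, normal):
    """Form factor from a point at the origin to a polygon.

    Sums, over the polygon edges, the angle subtended by the edge times
    the cosine between the receiver normal and the normal of the plane
    through the origin and the edge. For a polygon of uniform radiance L,
    the irradiance at the origin is pi * L * form_factor.
    """
    total = 0.0
    n = len(vertices)
    for i in range(n):
        a, b = vertices[i], vertices[(i + 1) % n]
        c = _cross(a, b)
        c_len = _norm(c)
        if c_len == 0.0:
            continue  # edge seen head-on from the origin: no contribution
        cos_theta = max(-1.0, min(1.0, _dot(a, b) / (_norm(a) * _norm(b))))
        theta = math.acos(cos_theta)  # angle subtended by the edge
        total += theta * _dot(c, normal) / c_len
    # The sign depends on the vertex ordering; the form factor is positive.
    return abs(total) / (2.0 * math.pi)


if __name__ == "__main__":
    square = [(0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]
    print(lambert_form_factor(square, (0, 0, 1)))
```

The boundary formulation needs only the polygon edges, with no sampling of the polygon interior.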

6.2.5 Adaptive Matrix Completion for Fast Visibility Computations with Many Lights Rendering

Participants: Sunrise Wang, Nicolas Holzschuch.

Several fast global illumination algorithms rely on the Virtual Point Lights framework. This framework separates illumination into two steps: first, propagate radiance in the scene and store it in virtual lights, then gather illumination from these virtual lights. To accelerate the second step, virtual lights and receiving points are grouped hierarchically, for example using Multi-Dimensional Lightcuts. Computing visibility between clusters of virtual lights and receiving points is a bottleneck. Separately, matrix completion algorithms fully reconstruct a low-rank matrix from an incomplete set of sampled elements. In a recent paper 14, we use adaptive matrix completion to approximate visibility information after an initial clustering step. We reconstruct visibility information using as few as 10% to 20% of the samples for most scenes, and combine it with shading information computed separately, in parallel on the GPU. Overall, our method computes global illumination 3 or more times faster than previous state-of-the-art methods.

6.2.6 A Detail Preserving Neural Network Model for Monte Carlo Denoising

Participants: Beibei Wang, Nicolas Holzschuch.

Monte Carlo based methods such as path tracing are widely used in movie production. To achieve noise-free quality, they tend to require a large number of samples per pixel, resulting in longer rendering times. To reduce that cost, one solution is Monte Carlo denoising: render the image with fewer samples per pixel (as few as 128) and then denoise the resulting image. Many Monte Carlo denoising methods rely on deep learning: they use convolutional neural networks to learn the relationship between noisy images and reference images, using auxiliary features such as position and normal together with image color as inputs. The network predicts kernels which are then applied to the noisy input. These methods have shown powerful denoising ability. However, they tend to lose geometric or lighting details and to over-blur sharp features during denoising. In a recent paper 7, we solve this issue by proposing a novel network structure, a new input feature (light transport covariance from path space), and an improved loss function. In our network, we separate the feature buffers from the color buffer to enhance detail effects. Their features are extracted separately and then integrated into a shallow kernel predictor. Our loss function includes a perceptual loss, which also improves detail preservation. In addition, we present the light transport covariance feature in path space as one of the features, which is used to preserve illumination details. Our method denoises Monte Carlo path traced images while preserving details much better than previous work.
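The cost of noise-free Monte Carlo rendering mentioned above comes from the slow convergence of the estimator: the variance of a pixel estimate decreases as 1/N with the number of samples N, so halving the noise (standard deviation) requires four times as many samples. The sketch below illustrates this on a toy integrand (uniform random values standing in for radiance samples, an assumption made purely for illustration); the function names are hypothetical.

```python
import random
import statistics


def pixel_estimate(num_samples, rng):
    """Average of `num_samples` toy radiance samples, uniform in [0, 1)."""
    return sum(rng.random() for _ in range(num_samples)) / num_samples


def estimator_variance(num_samples, trials=2000, seed=0):
    """Empirical variance of the pixel estimate over many independent trials."""
    rng = random.Random(seed)
    estimates = [pixel_estimate(num_samples, rng) for _ in range(trials)]
    return statistics.pvariance(estimates)


if __name__ == "__main__":
    v16 = estimator_variance(16)
    v64 = estimator_variance(64)
    # The ratio is expected to be close to 4 (variance proportional to 1/N).
    print(v16, v64, v16 / v64)
```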

6.3 Modeling of material appearance

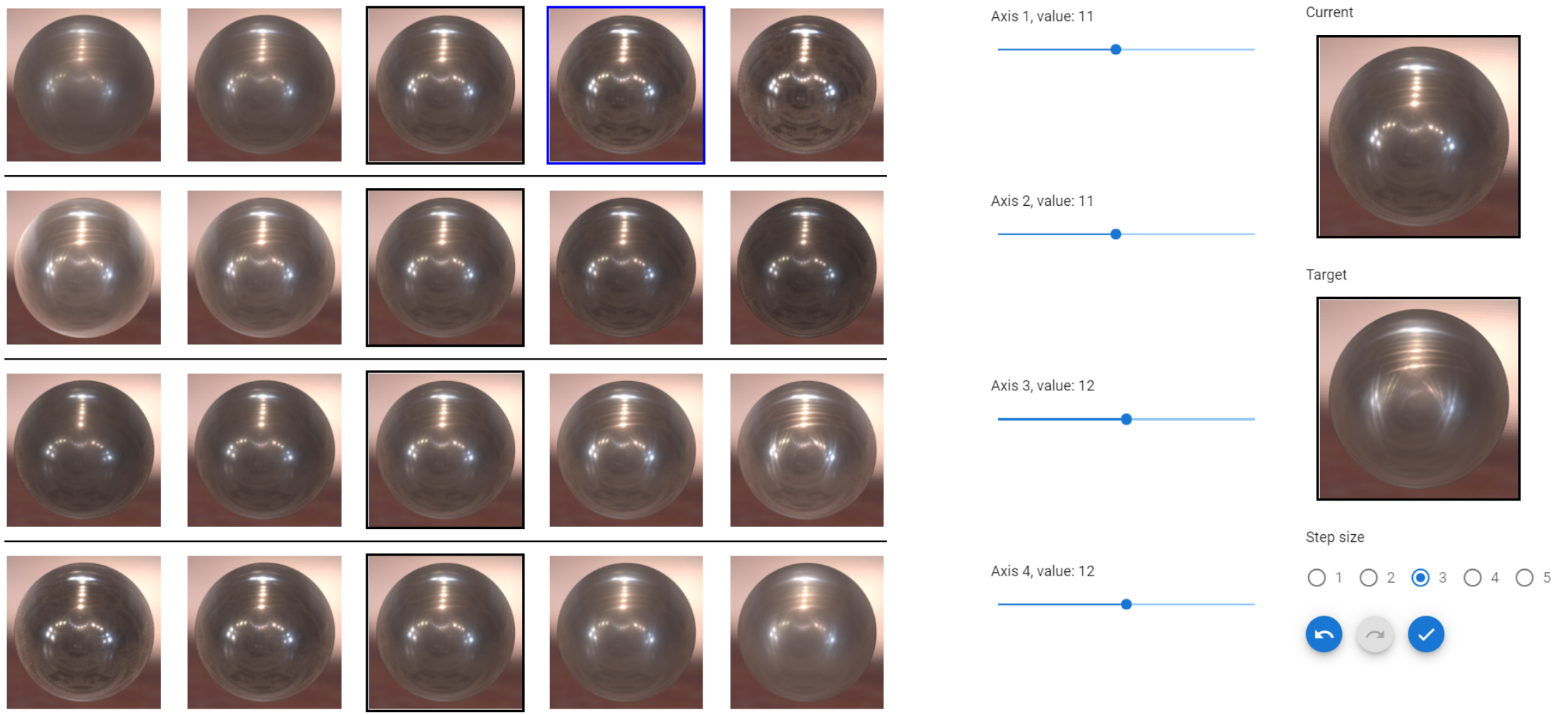

6.3.1 A Low-Dimension Perceptual Space for Intuitive Material Appearance Editing

Participants: Cyril Soler.

Understanding human visual perception to materials is challenging. Real-world material appearance functions are usually described by high-dimensional tabulated measurements, and their connections to the human visual system are not explicitly defined. In this work, we present a method to build an intuitive low-dimensional perceptual space for real-worldmeasured material data based on its underlying non-linear reflectance manifold. Unlike many previous works that address individual perceptual attributes (such as gloss), we focus on building a complete perceptual space with multiple attributes and understanding how they influence perception of materials.

We first use crowdsourced data on the perceived similarity of measured materials to build a multi-dimensional scaling model. Then we use the output as a low-dimensional manifold to construct a perceptual space that can be interpolated and extrapolated with Gaussian process regression. Given the perceptual space, we propose a material design interface using the manifold, allowing users to edit material appearance along its perceptual dimensions with visual variations and instant feedback. To evaluate the interface, we conduct a user study to compare the accuracy of editing and matching material appearances using perceptual and physical dimensions.
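The first step, turning pairwise dissimilarities into low-dimensional coordinates, can be sketched with classical (Torgerson) multi-dimensional scaling. This is an illustrative stand-in: the text does not say which MDS variant is used, and the function name and the `n_dims` parameter are assumptions.

```python
import numpy as np

def classical_mds(dissimilarity, n_dims=2):
    """Embed n items in n_dims dimensions from an (n, n) dissimilarity matrix."""
    d = np.asarray(dissimilarity, dtype=float)
    n = d.shape[0]
    # Double-center the squared dissimilarities to obtain a Gram matrix.
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (d ** 2) @ centering
    # Keep the eigenvectors associated with the largest eigenvalues.
    eigvals, eigvecs = np.linalg.eigh(gram)
    order = np.argsort(eigvals)[::-1][:n_dims]
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scale
```

When the dissimilarities are exact Euclidean distances between points in `n_dims` dimensions, the embedding reproduces those distances up to a rigid transformation; with noisy perceptual judgments it only approximates them.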

This work is an ongoing collaboration with Yale University (with Prof. Holly Rushmeier and PhD. Weiqi Shi). A paper will be submitted to the Eurographics Symposium on Rendering 2021.

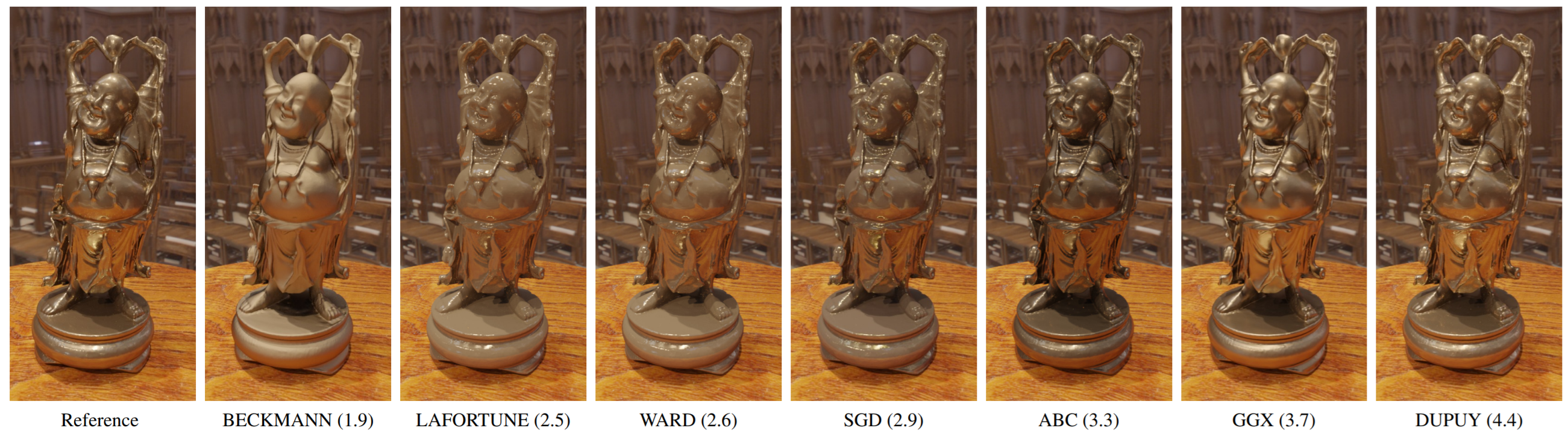

6.3.2 Perceptual quality of BRDF approximations: dataset and metrics

Participants: Cyril Soler.

Bidirectional Reflectance Distribution Functions (BRDFs) are pivotal to the perceived realism in image synthesis. While measured BRDF datasets are available, reflectance functions are most of the time approximated by analytical formulas for storage efficiency reasons. These approximations are often obtained by minimizing metrics such as $L_2$ (or weighted quadratic) distances, but these metrics do not usually correlate well with perceptual quality when the BRDF is used in a rendering context, which motivates a perceptual study.
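As an illustration of the kind of metric discussed above, a weighted quadratic distance between a measured BRDF $f$ and its analytical approximation $g$ can be written as follows. The weight $w$ and the integration domain $\Omega$ (the hemisphere of directions) are notational assumptions, not taken from the paper; choosing $w \equiv 1$ gives the plain quadratic distance.

$$
d_w(f, g) = \left( \int_{\Omega} \int_{\Omega} w(\omega_i, \omega_o) \, \bigl( f(\omega_i, \omega_o) - g(\omega_i, \omega_o) \bigr)^2 \, d\omega_i \, d\omega_o \right)^{1/2}
$$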

The contributions of this work are threefold. First, we perform a large-scale user study to assess the perceptual quality of 2026 BRDF approximations, resulting in 84138 judgments across 1005 unique participants. We explore this dataset and analyze perceptual scores based on material type and illumination.

Second, we assess nine analytical BRDF models in their ability to approximate tabulated BRDFs. Third, we assess several image-based and BRDF-based ($L_2$, optimal transport and kernel distance) metrics in their ability to approximate perceptual similarity judgments.

This work is a collaboration with the LIRIS laboratory (Lyon) that involves J.P. Farrugia, G. Lavoué and N. Bonneel. It has been accepted for publication in Eurographics 2021 5.

6.3.3 Correlation between micro-structural features and color of nanocrystallized powders of hematite

Participants: Morgane Gerardin, Pauline Martinetto, Nicolas Holzschuch.

Pigments are quite complex materials whose appearance involves many optical phenomena. In a recent paper 15, we establish a comparison between the size and shape of grains of powdered hematite and the pigment color. We focused on hematite as it is a traditional pigment whose origin of coloration has been well discussed in the literature. Pure nanocrystallized hematite powders have been synthesized using different synthesis routes. These powders have been characterized by X-ray powder diffraction and scanning electron microscopy. The color of the samples has been studied by visible-NIR spectrophotometry. We obtained hematite with various grain morphologies and noticeably different shades, going from orange-red to purple. Colorimetric parameters in the CIE L*a*b* color space and properties of the diffuse reflectance spectra were studied against the structural parameters. For small nanocrystals, hue increases with grain size up to a critical diameter of about 80 nm, where the trend is reversed. We believe a combination of multiple physical phenomena occurring at this scale may explain this trend.
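For readers less familiar with colorimetry, the hue discussed here is commonly expressed as the hue angle of the (a*, b*) coordinates in CIE L*a*b*. The sketch below shows that standard conversion; the function name is an assumption, and the paper may use a different hue descriptor.

```python
import math

def lab_hue_chroma(a_star, b_star):
    """Return (hue angle in degrees within [0, 360), chroma) from CIELAB a*, b*."""
    # Hue is the polar angle in the a*-b* plane, wrapped to [0, 360).
    hue = math.degrees(math.atan2(b_star, a_star)) % 360.0
    # Chroma is the distance from the neutral (gray) axis.
    chroma = math.hypot(a_star, b_star)
    return hue, chroma
```

With this convention, a hue angle near 0 degrees is reddish and an angle near 90 degrees is yellowish, so an orange-red powder sits between the two.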

This problem is particularly interesting because all these pigments have the same chemical composition; changes in color are only due to physical phenomena, which are not described by the models currently used in Computer Graphics.

6.3.4 Example-Based Microstructure Rendering with Constant Storage

Participants: Beibei Wang, Nicolas Holzschuch.

Rendering glinty details from specular microstructure enhances the level of realism, but previous methods require heavy storage for the high-resolution height field or normal map and associated acceleration structures. In a recent paper 13, we aim at dynamically generating theoretically infinite microstructure, preventing obvious tiling artifacts, while achieving constant storage cost. Unlike traditional texture synthesis, our method supports arbitrary point and range queries, and essentially generates the microstructure implicitly. Our method fits the widely used microfacet rendering framework with multiple importance sampling (MIS), replacing the commonly used microfacet normal distribution functions (NDFs) like GGX by a detailed local solution, at a small runtime overhead.
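For reference, the smooth NDF that such a detailed local solution replaces can be written in a few lines. The sketch below is the standard isotropic GGX (Trowbridge-Reitz) distribution, not the method of paper 13; the function and parameter names are assumptions.

```python
import math

def ggx_ndf(cos_theta_h, alpha):
    """Isotropic GGX normal distribution function.

    cos_theta_h: cosine of the angle between the surface normal and the
                 half vector.
    alpha:       roughness parameter (larger means rougher).
    """
    if cos_theta_h <= 0.0:
        # Half vectors below the surface carry no microfacets.
        return 0.0
    a2 = alpha * alpha
    denom = cos_theta_h * cos_theta_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)
```

This function is smooth in the half vector, which is why it cannot reproduce individual glints: every pixel sees the same statistical average of microfacet orientations.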

6.3.5 Real-Time Glints Rendering with Prefiltered Discrete Stochastic Microfacets

Participants: Beibei Wang, Nicolas Holzschuch.

Many real-life materials have a sparkling appearance. Examples include metallic paints, sparkling fabrics and snow. Simulating these sparkles is important for realistic rendering but expensive. As sparkles come from small shiny particles reflecting light into a specific direction, they are very challenging for illumination simulation. Existing approaches use a 4-dimensional hierarchy, searching for light-reflecting particles simultaneously in space and direction. The approach is accurate, but extremely expensive. A separable model is much faster, but still not suitable for real-time applications. The performance problem is even worse when illumination comes from environment maps, as they require either a large sample count per pixel or prefiltering. Prefiltering is incompatible with the existing sparkle models, due to the discrete multi-scale representation. In a recent paper 11, we present a GPU-friendly, prefiltered model for real-time simulation of sparkles and glints. Our method simulates glints under both environment maps and point light sources in real time, with an added cost of just 10 ms per frame at full high-definition resolution. Editing material properties requires extra computations but is still real-time, with an added cost of 10 ms per frame.

6.3.6 Joint SVBRDF Recovery and Synthesis From a Single Image using an Unsupervised Generative Adversarial Network

Participants: Beibei Wang, Nicolas Holzschuch.

We want to recreate spatially-varying bi-directional reflectance distribution functions (SVBRDFs) from a single image. Producing these SVBRDFs from single images will allow designers to incorporate many new materials in their virtual scenes, increasing their realism. A single image contains incomplete information about the SVBRDF, making reconstruction difficult. Existing algorithms can produce high-quality SVBRDFs with single or few input photographs using supervised deep learning. The learning step relies on a huge dataset with both input photographs and the ground truth SVBRDF maps. This is a weakness as ground truth maps are not easy to acquire. For practical use, it is also important to produce large SVBRDF maps. Existing algorithms rely on a separate texture synthesis step to generate these large maps, which leads to a loss of consistency between the generated SVBRDF maps. In a recent paper 16, we address both issues simultaneously. We present an unsupervised generative adversarial neural network that addresses both SVBRDF capture from a single image and synthesis at the same time. From a low-resolution input image, we generate a high-resolution SVBRDF, much larger than the input image. We train a generative adversarial network (GAN) to get SVBRDF maps which have both a large spatial extent and detailed texels. We employ a two-stream generator that divides the training of maps into two groups (normal and roughness as one, diffuse and specular as the other) to better optimize those four maps. In the end, our method is able to generate high-quality, large-scale SVBRDF maps from a single input photograph with repetitive structures, and provides higher-quality rendering results with more details than previous work. Each input for our method requires individual training, which takes about 3 hours.

6.3.7 Participating media

Participants: Beibei Wang, Nicolas Holzschuch.

Rendering participating media is important to the creation of photorealistic images. Participating media have a translucent aspect that comes from light being scattered inside the material. Several effects are at play, depending on the characteristics of the material: with a large albedo or a small mean-free-path, higher-order scattering effects dominate. With low-opacity materials, single scattering effects dominate. With a refractive boundary, we have concentration of the incoming light inside the medium, resulting in volume caustics.
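The quantities that decide which regime dominates follow from the absorption and scattering coefficients of a homogeneous medium. The sketch below uses the standard definitions (single-scattering albedo, mean free path, Beer-Lambert transmittance); the function name and the dictionary layout are assumptions made for illustration.

```python
import math

def medium_properties(sigma_a, sigma_s, distance):
    """Basic properties of a homogeneous participating medium.

    sigma_a, sigma_s: absorption and scattering coefficients (per unit length).
    distance:         path length travelled inside the medium.
    """
    sigma_t = sigma_a + sigma_s  # extinction coefficient
    return {
        # Fraction of interactions that scatter rather than absorb.
        "albedo": sigma_s / sigma_t,
        # Average distance between two interactions.
        "mean_free_path": 1.0 / sigma_t,
        # Fraction of light crossing `distance` without interacting.
        "transmittance": math.exp(-sigma_t * distance),
    }
```

A medium with an albedo close to 1 and a mean free path much smaller than the object size scatters light many times before it exits, which is the regime where higher-order scattering dominates.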

For higher-order scattering, we precompute multiple scattering effects. In a first paper 12, precomputed double- and multiple-scattering effects are stored in two compact tables, which can be integrated with any illumination simulation algorithm, such as virtual ray lights (VRL), photon mapping with beams and paths (UPBP) or Metropolis Light Transport with Manifold Exploration (MEMLT). In a second paper 4, we use a neural network to encode these tables, resulting in a significant gain in memory: double and multiple scattering effects for the entire participating media space are encoded using only 23.6 KB of memory. This algorithm is implemented on the GPU; it achieves 50 ms per frame in typical scenes and provides results almost identical to the reference.

For volume caustics, we used frequency analysis of light transport to allocate point samples efficiently inside the medium. Our method works in two steps: in the first step, we compute volume samples along with their covariance matrices, encoding the illumination frequency content in a compact way. In the rendering step, we use the covariance matrices to compute the kernel size for each volume sample: a small kernel for high-frequency single scattering, a large kernel for lower frequencies. Our algorithm 6 computes volume caustics with fewer volume samples, with no loss of quality. It is roughly twice as fast as the original method and uses one fifth of the memory. The extra cost of computing covariance matrices for frequency information is negligible.

Finally, we developed an extension to path guiding for translucent materials 2. Our method learns an adaptive approximate representation of the radiance distribution in the volume and uses this representation to sample the scattering direction, combining it with phase function sampling through resampled importance sampling. The proposed method significantly improves the performance of light transport simulation in participating media, especially for small lights and media with refractive boundaries. Our method can handle any homogeneous participating medium, from highly scattering to weakly scattering, from highly absorbing to weakly absorbing, and from isotropic to highly anisotropic.
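Resampled importance sampling itself is a generic technique, and a one-dimensional sketch may help clarify the idea. Candidates are drawn from an easy source distribution, and one of them is kept with probability proportional to how well it matches a target function. In the guiding method above, the source and target would be built from the phase function and the learned radiance representation; everything named below (`ris_sample`, its parameters, the callables) is a hypothetical illustration, not the paper's implementation.

```python
import random

def ris_sample(target, sample_source, source_pdf, n_candidates, rng):
    """Pick one sample approximately distributed like `target` (unnormalized).

    Returns (x, weight) with weight = mean(w_i) / target(x), so that
    f(x) * weight is an unbiased estimate of the integral of f, provided
    target is positive wherever f is non-zero.
    """
    candidates = [sample_source(rng) for _ in range(n_candidates)]
    # Resampling weights: target value over the source density.
    weights = [target(x) / source_pdf(x) for x in candidates]
    total = sum(weights)
    if total == 0.0:
        # No usable candidate: the estimate for this draw is zero.
        return None, 0.0
    x = rng.choices(candidates, weights=weights, k=1)[0]
    return x, (total / n_candidates) / target(x)
```

Increasing `n_candidates` makes the selected sample follow the target more closely, at the cost of more evaluations per sample.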

6.4 Expressive rendering

6.4.1 Local Light Alignment for Multi-Scale Shape Depiction

Participants: Nolan Mestres, Romain Vergne, Joëlle Thollot.

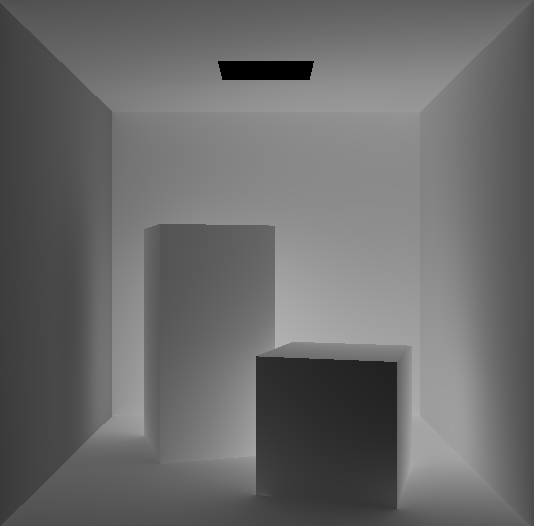

Motivated by recent findings in the field of visual perception, we present in 9 a novel approach for enhancing shape depiction and perception of surface details. We propose a shading-based technique that relies on locally adjusting the direction of light to account for the different components of materials. Our approach ensures congruence between shape and shading flows, leading to an effective enhancement of the perception of shape and details while impairing neither the lighting nor the appearance of materials. It is formulated in a general way, allowing its use for multi-scale enhancement in real time on the GPU, as well as in global illumination contexts. We also provide artists with fine control over the enhancement at each scale.

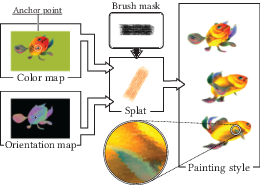

6.4.2 Coherent Mark-based Stylization of 3D Scenes at the Compositing Stage

Participants: Maxime Garcia, Mohamed Amine Farhat, Romain Vergne, Joëlle Thollot.

We present in 3 a novel temporally coherent stylized rendering technique working entirely at the compositing stage. We first generate a distribution of 3D anchor points using an implicit grid based on the local object positions stored in a G-buffer, hence following object motion. We then draw splats in screen space anchored to these points so as to be motion coherent. To increase the perceived flatness of the style, we adjust the anchor point density using a fractalization mechanism. Sudden changes are prevented by controlling the anchor point opacity and introducing a new order-independent blending function. We demonstrate the versatility of our method by showing a large variety of styles, thanks to the freedom offered by the splat content and attributes, which can be controlled by any G-buffer.

6.5 Synthesizing new materials

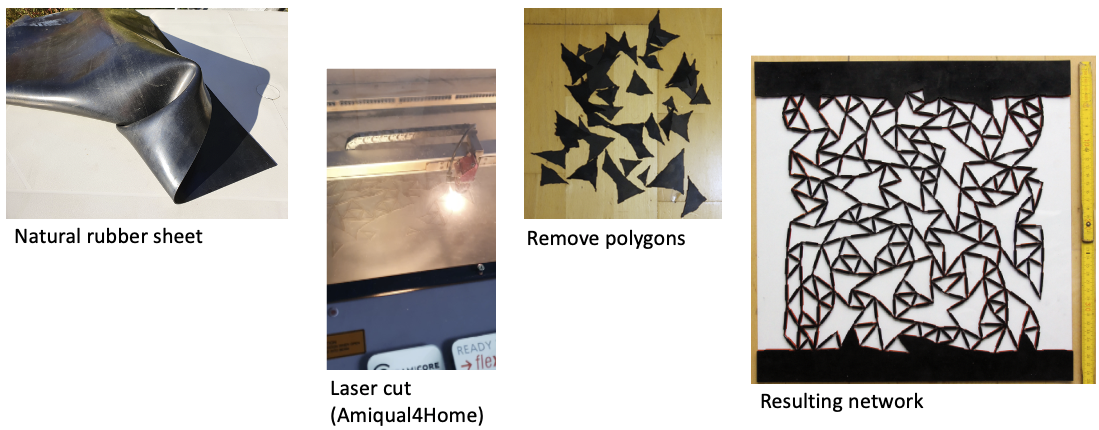

6.5.1 Geometric construction of auxetic metamaterials

Participants: Georges-Pierre Bonneau, Stefanie Hahmann, Johana Marku.

This work is devoted to a category of metamaterials called auxetics, identified by their negative Poisson's ratio. Our work consists in exploring geometrical strategies to generate irregular auxetic structures. More precisely we seek to reduce the Poisson's ratio, by pruning an irregular network based solely on geometric criteria. We introduce a strategy combining a pure geometric pruning algorithm followed by a physics-based testing phase to determine the resulting Poisson's ratio of our structures. We propose an algorithm that generates sets of irregular auxetic networks. Our contributions include geometrical characterization of auxetic networks, development of a pruning strategy, generation of auxetic networks with low Poisson's ratio, as well as validation of our approach. We provide statistical validation of our approach on large sets of irregular networks, and we additionally laser-cut auxetic networks in sheets of rubber. The findings reported here show that it is possible to reduce the Poisson's ratio by geometric pruning, and that we can generate irregular auxetic networks at lower processing times than a physics-based approach. This work has been submitted to the conference Eurographics'2021.
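As a reminder of the quantity being optimized, the Poisson's ratio of a sample can be estimated from its dimensions before and after stretching. The sketch below uses the standard engineering-strain definition; the function name and the measurement setup are assumptions, and the physics-based testing phase of the paper is of course more involved.

```python
def poisson_ratio(length0, length1, width0, width1):
    """Poisson's ratio from dimensions before (0) and after (1) axial loading."""
    axial_strain = (length1 - length0) / length0
    transverse_strain = (width1 - width0) / width0
    if axial_strain == 0.0:
        raise ValueError("axial strain must be non-zero")
    # Negative result: the sample widens when stretched, i.e. it is auxetic.
    return -transverse_strain / axial_strain
```

For example, a sheet stretched from 10 to 11 units that widens from 5 to 5.25 units has a Poisson's ratio of about -0.5.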

This work is part of the FET Open European Project ADAM2 [http://

7 Partnerships and cooperations

7.1 International initiatives

7.1.1 Inria international partners

Informal international partners

Maverick has informal relations with many companies related to special effects, gaming and image editing (Weta Digital, Allegorithmic, Unity, Ubisoft, nVidia, Adobe, ...), former contract partners, companies of ex-students (Artineering), and more. In particular, we have ongoing discussions with Ubisoft aimed at defining common interests to create new collaborations, and with RSA Cosmos to renew collaborations.

7.2 European initiatives

7.2.1 FP7 & H2020 Projects

Georges-Pierre Bonneau is part of the FET Open European Project "ANALYSIS, DESIGN And MANUFACTURING using MICROSTRUCTURES" (ADAM2, Grant agreement ID: 862025) [http://

7.3 National initiatives

7.3.1 ANR: Materials

Participants: Nicolas Holzschuch, Romain Vergne.

We are funded by the ANR for a joint research project on acquisition and restitution of micro-facet based materials. This project is in cooperation with Océ Print Logic technologies, the Museum of Ethnography at the University of Bordeaux and the Manao team at Inria Bordeaux. The grant started in October 2015, for 60 months.

7.3.2 CDP: Patrimalp 2.0

Participants: Nicolas Holzschuch, Romain Vergne.

The main objective and challenge of Patrimalp 2.0 is to develop a cross-disciplinary approach to gain better knowledge of material cultural heritage, in order to ensure its sustainability, valorization and diffusion in society. Carried out by members of UGA laboratories, combining skills in human sciences, geosciences, digital engineering and material sciences, in close connection with stakeholders of heritage and cultural life, curators and restorers, Patrimalp 2.0 intends to develop a new interdisciplinary science: Cultural Heritage Science. The grant started in January 2018, for a period of 48 months.

7.3.3 ANR: CaLiTrOp

Participants: Cyril Soler.

Computing photorealistic images relies on the simulation of light transfer in a 3D scene, typically modeled using geometric primitives and a collection of reflectance properties that represent the way objects interact with light. Estimating the color of a pixel traditionally consists in integrating contributions from light paths connecting the light sources to the camera sensor at that pixel. In this ANR project, we explore a transversal view, examining light transport operators from the point of view of infinite-dimensional function spaces of light fields (imagine, e.g., reflectance as an operator that transforms a distribution of incident light into a distribution of reflected light). Not only are these operators all linear in these spaces, but they are also very sparse. As a side effect, the sub-spaces of light distributions that are actually relevant during the computation of a solution always boil down to a low-dimensional manifold embedded in the full space of light distributions.

Studying the structure of high-dimensional objects from a low-dimensional set of observables is a problem that has become ubiquitous: compressive sensing, Gaussian processes, harmonic analysis and differential analysis are typical examples of mathematical tools that will be of great relevance to study light transport operators.

The expected results of the fundamental-research project CaLiTrOp are a theoretical understanding of the dimensionality and structure of light transport operators, bringing new efficient lighting simulation methods, and efficient approximations of light transport with applications to real-time global illumination for video games.

8 Dissemination

8.1 Promoting scientific activities

8.1.1 Scientific events: organisation

Member of the organizing committees

Nicolas Holzschuch is a member of the Eurographics Symposium on Rendering Steering Committee.

8.1.2 Scientific events: selection

Chair of conference program committees

Georges-Pierre Bonneau has been nominated co-chair of the conference Solid and Physical Modeling (SPM) 2021.

Member of the conference program committees

- Nicolas Holzschuch: Siggraph General Submission 2020 IPC member, Eurographics Symposium on Rendering 2020 IPC member, SIBGRAPI 2020 IPC member.

- Georges-Pierre Bonneau: Solid and Physical Modeling (SPM) 2020 IPC member, Shape Modeling International (SMI) 2020 IPC Member, EnvirVis 2020 IPC Member.

- Romain Vergne: SIBGRAPI 2020 IPC member, best paper committee member AFIG/EGFR 2020.

Reviewer

Maverick faculty members are regular reviewers for most of the major journals and conferences of the domain.

8.1.3 Journal

Reviewer - reviewing activities

Maverick faculty members are regular reviewers for most of the major journals and conferences of the domain.

8.1.4 Research administration

Nicolas Holzschuch is a member of the Conseil National de l'Enseignement Supérieur et de la Recherche (CNESER).

8.2 Teaching - Supervision - Juries

8.2.1 Teaching

Joëlle Thollot and Georges-Pierre Bonneau are both full Professors of Computer Science. Romain Vergne is an Associate Professor in Computer Science. They teach general computer science topics at basic and intermediate levels, and advanced courses in computer graphics and visualization at the master level. Nicolas Holzschuch teaches advanced courses in computer graphics at the master level.

Joëlle Thollot is in charge of the Erasmus+ program ASICIAO https://

- Licence: Joëlle Thollot, Théorie des langages, 45h, L3, ENSIMAG, France

- Licence: Joëlle Thollot, Module d'accompagnement professionnel, 10h, L3, ENSIMAG, France

- Master : Joëlle Thollot, English courses using theater, 18h, M1, ENSIMAG, France.

- Master : Joëlle Thollot, Analyse et conception objet de logiciels, 24h, M1, ENSIMAG, France.

- Licence : Romain Vergne, Introduction to algorithms, 64h, L1, UGA, France.

- Licence : Romain Vergne, WebGL, 29h, L3, IUT2 Grenoble, France.

- Licence : Romain Vergne, Programmation, 68h, L1, UGA, France.

- Master : Romain Vergne, Image synthesis, 27h, M1, UGA, France.

- Master : Romain Vergne, 3D graphics, 15h, M1, UGA, France.

- Master : Nicolas Holzschuch, Computer Graphics II, 18h, M2 MoSIG, France.

- Master : Nicolas Holzschuch, Synthèse d’Images et Animation, 32h, M2, ENSIMAG, France.

- Licence: Georges-Pierre Bonneau, Algorithmique et Programmation Impérative, 23h, L3, Polytech-Grenoble, France.

- Master: Georges-Pierre Bonneau, head of the 4th year of the INFO department, 32h, M1, Polytech-Grenoble, France

- Master: Georges-Pierre Bonneau, Image Synthesis, 23h, M1, Polytech-Grenoble, France

- Master: Georges-Pierre Bonneau, Data Visualization, 40h, M2, Polytech-Grenoble, France

- Master: Georges-Pierre Bonneau, Digital Geometry, 23h, M1, UGA

- Master: Georges-Pierre Bonneau, Information Visualization, 22h, Mastere, ENSIMAG, France.

- Master: Georges-Pierre Bonneau, Scientific Visualization, M2, ENSIMAG, France.

- Master: Georges-Pierre Bonneau, Computer Graphics II, 18h, M2 MoSiG, UGA, France.

8.2.2 Supervision

- PhD in progress: Vincent Tavernier, Procedural stochastic textures, 1/10/2017, Fabrice Neyret, Joëlle Thollot, Romain Vergne.

- PhD in progress: Sunrise Wang, Light transport operators simplification using neural networks, 1/9/2018, Nicolas Holzschuch

- PhD in progress: Morgane Gérardin, Connecting physical and chemical properties with material appearance, 1/10/2018, Nicolas Holzschuch

- PhD in progress: Ronak Molazem, Dimensional Analysis of Light Transport, 1/09/2018, Cyril Soler

- PhD in progress: Nolan Mestres, Rendering of panorama maps, 1/10/2019, Joëlle Thollot, Romain Vergne.

- Post-doc in progress: Maxime Garcia, Coherent procedural stylization of 3D scenes, 2020, Joëlle Thollot, Romain Vergne.

8.2.3 Juries

- Romain Vergne, member of the jury, PhD of Julien Fayer, University of Toulouse, 19/04/2019.

- Joëlle Thollot, president of the jury, PhD of Thibault Louis, Université Grenoble-Alpes, 22/10/2020

- Joëlle Thollot, reviewer, PhD of Pierre Ecormier-Nocca, Institut Polytechnique de Paris, 3/12/2020

- Georges-Pierre Bonneau, president of the jury, PhD of Patrick Perea, Université Grenoble Alpes, 27/02/2020

- Georges-Pierre Bonneau, reviewer, PhD of Corentin Mercier, Institut Polytechnique de Paris, 17/12/2020

8.3 Popularization

- Fabrice Neyret maintains the blog shadertoy-Unofficial https://shadertoyunofficial.wordpress.com/ and various shader examples on the Shadertoy site https://www.shadertoy.com/ to popularize GPU technologies and to disseminate academic models within the computer graphics, computer science, applied math and physics fields. About 26k pages viewed and 12k unique visitors (92% out of France) in 2020.

- Fabrice Neyret maintains the blog desmosGraph-Unofficial https://desmosgraphunofficial.wordpress.com/ to popularize the use of the interactive grapher DesmosGraph https://www.desmos.com/calculator for research, communication and pedagogy. About 8k pages viewed and 6k unique visitors (99% out of France) in 2020.

- In August 2020, Fabrice Neyret launched the blog chiffres-et-paradoxes https://chiffresetparadoxes.wordpress.com/ (in French) to popularize common errors, misunderstandings and paradoxes about statistics and numerical reasoning. About 8k pages viewed and 4k unique visitors in the second semester of 2020 (14% out of France, knowing that the blog is in French).

9 Scientific production

9.1 Publications of the year

International journals

International peer-reviewed conferences

9.2 Cited publications

- 17 Procedural Phasor Noise. ACM Transactions on Graphics 38(4), July 2019, Article No. 57:1-13.