Keywords

Computer Science and Digital Science

- A5.2. Data visualization

- A5.5. Computer graphics

- A5.5.1. Geometrical modeling

- A5.5.2. Rendering

- A5.5.3. Computational photography

- A5.5.4. Animation

Other Research Topics and Application Domains

- B5.5. Materials

- B5.7. 3D printing

- B9.2.2. Cinema, Television

- B9.2.3. Video games

- B9.2.4. Theater

- B9.6.6. Archeology, History

1 Team members, visitors, external collaborators

Research Scientists

- Nicolas Holzschuch [Team leader, INRIA, Senior Researcher, HDR]

- Fabrice Neyret [CNRS, Senior Researcher, HDR]

- Cyril Soler [INRIA, Researcher, HDR]

Faculty Members

- Georges-Pierre Bonneau [UGA, Professor, HDR]

- Joelle Thollot [GRENOBLE INP, Professor, HDR]

- Romain Vergne [UGA, Associate Professor]

PhD Students

- Mohamed Amine Farhat [UGA, from Mar 2022]

- Ana Maria Granizo Hidalgo [UGA]

- Nicolas Guichard [KDAB]

- Nolan Mestres [UGA]

- Ronak Molazem [UGA, from Mar 2022]

- Ronak Molazem [INRIA, until Feb 2022]

- Antoine Richermoz [UGA, from Sep 2022]

Interns and Apprentices

- Antoine Richermoz [INRIA, from Oct 2022]

- Antoine Richermoz [INRIA, from Feb 2022 until Jul 2022]

Administrative Assistant

- Diane Courtiol [INRIA]

2 Overall objectives

Computer-generated pictures and videos are now ubiquitous, both for leisure activities, such as special effects in motion pictures, feature movies and video games, and for more serious activities, such as visualization and simulation.

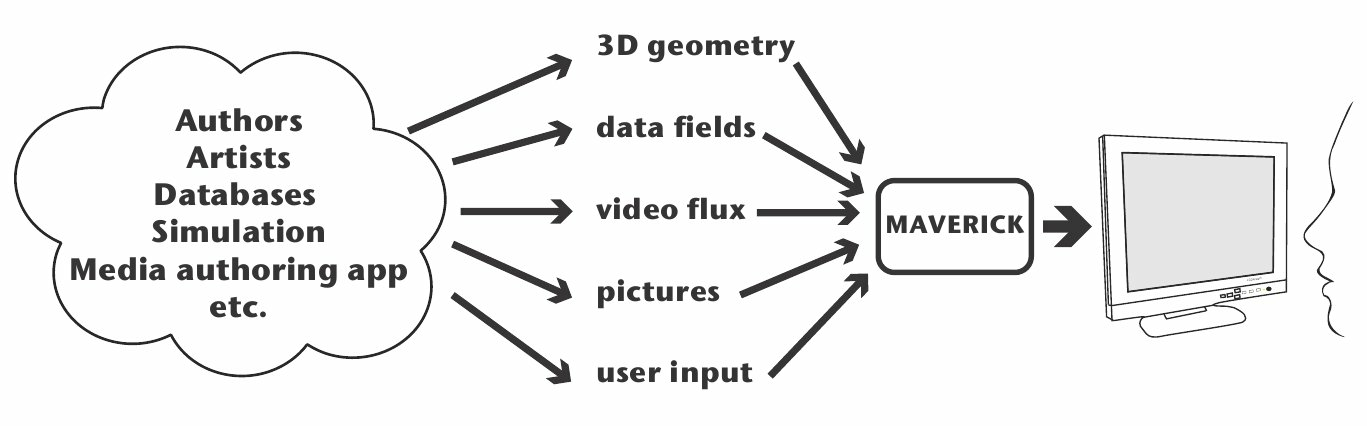

Maverick was created as a research team in January 2012 and became a project-team in January 2014. We deal with image synthesis methods. We place ourselves at the end of the image production pipeline, when the pictures are generated and displayed (see Figure 1). We take many possible inputs: datasets, video streams, pictures and photographs, (animated) geometry from a virtual world... Our output is pictures and videos.

These pictures will be viewed by humans, and we consider this fact an important part of our research strategy, as it provides the benchmark for evaluating our results: the pictures and animations produced must convey the message to the viewer. The actual message depends on the specific application: data visualization, exploring virtual worlds, designing paintings and drawings... Our vision is that all these applications share common research problems: ensuring that the important features are perceived, avoiding clutter and aliasing, efficient internal data representation, etc.

Computer Graphics, and Maverick in particular, is at the crossroads between fundamental research and industrial applications. We look both at the constraints and needs of application users and at long-term research issues such as sampling and filtering.

Position of the team in the graphics pipeline

The Maverick project-team aims at producing representations and algorithms for efficient, high-quality computer generation of pictures and animations through the study of four research problems:

- Computer Visualization, where we take as input a large localized dataset and represent it in a way that will let an observer understand its key properties,

- Expressive Rendering, where we create an artistic representation of a virtual world,

- Illumination Simulation, where our focus is modelling the interaction of light with the objects in the scene,

- Complex Scenes, where our focus is rendering and modelling highly complex scenes.

The heart of Maverick is understanding what makes a picture useful, powerful and interesting for the user, and designing algorithms to create these pictures.

We will address these research problems through three interconnected approaches:

- working on the impact of pictures, by conducting perceptual studies, measuring and removing artefacts and discontinuities, evaluating the user response to pictures and algorithms,

- developing representations for data, through abstraction, stylization and simplification,

- developing new methods for predicting the properties of a picture (e.g. frequency content, variations) and adapting our image-generation algorithms to these properties.

A fundamental element of the Maverick project-team is that the research problems and the scientific approaches are all cross-connected. Research on the impact of pictures is of interest in three different research problems: Computer Visualization, Expressive Rendering and Illumination Simulation. Similarly, our research on Illumination Simulation will gather contributions from all three scientific approaches: impact, representations and prediction.

3 Research program

The Maverick project-team aims at producing representations and algorithms for efficient, high-quality computer generation of pictures and animations through the study of four research problems:

- Computer Visualization, where we take as input a large localized dataset and represent it in a way that will let an observer understand its key properties. Visualization can be used for data analysis, for the results of a simulation, for medical imaging data...

- Expressive Rendering, where we create an artistic representation of a virtual world. Expressive rendering corresponds to the generation of drawings or paintings of a virtual scene, but also to some areas of computational photography, where the picture is simplified in specific areas to focus the attention.

- Illumination Simulation, where we model the interaction of light with the objects in the scene, resulting in a photorealistic picture of the scene. Our research includes improving the quality and photorealism of pictures, including more complex effects such as depth of field or motion blur. We are also working on accelerating the computations, both for real-time photorealistic rendering and for offline, high-quality rendering.

- Complex Scenes, where we generate, manage, animate and render highly complex scenes, such as natural scenes with forests, rivers and oceans, but also large datasets for visualization. We are especially interested in interactive visualization of complex scenes, with all the associated challenges in terms of processing and memory bandwidth.

The fundamental research interest of Maverick is, first, understanding what makes a picture useful, powerful and interesting for the user, and second, designing algorithms to create and improve these pictures.

3.1 Research approaches

We will address these research problems through three interconnected research approaches:

Picture Impact

Our first research axis deals with the impact pictures have on the viewer, and how we can improve this impact. Our research here will target:

- evaluating user response: we need to evaluate how viewers respond to the pictures and animations generated by our algorithms, through user studies, either asking viewers what they perceive in a picture or measuring how their bodies react (eye tracking, position tracking).

- removing artefacts and discontinuities: temporal and spatial discontinuities perturb viewer attention, distracting the viewer from the main message. These discontinuities occur during the picture creation process; finding and removing them is difficult.

Data Representation

The data we receive as input for picture generation is often unsuitable for interactive high-quality rendering: too many details, no spatial organisation... Similarly, the pictures we produce or get as input for other algorithms can contain superfluous details.

One of our goals is to develop new data representations, adapted to our requirements for rendering. This includes fast access to the relevant information, but also access to the specific hierarchical level of information needed: we want to organize the data in hierarchical levels and pre-filter it, so that sampling at a given level also gives information about the underlying levels. Our research for this axis includes filtering, data abstraction, simplification and stylization.
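As an illustration of hierarchical pre-filtering, the sketch below builds a MIP-style pyramid over a 2D grid with a 2x2 box filter, so that reading one texel at a coarse level returns the average of all the finer texels it covers. The box filter, the power-of-two square grid and the function names are simplifying assumptions for this example, not the team's actual representations, which use more elaborate filters.

```python
def build_pyramid(grid):
    """Return [level0, level1, ...], each level halving the resolution.

    Assumes a square grid whose side is a power of two. Each coarse texel
    is the mean of the 2x2 block of texels below it (box filter), so it
    summarizes every underlying finer level.
    """
    levels = [grid]
    while len(levels[-1]) > 1:
        fine = levels[-1]
        half = len(fine) // 2
        coarse = [
            [
                (fine[2 * i][2 * j] + fine[2 * i][2 * j + 1]
                 + fine[2 * i + 1][2 * j] + fine[2 * i + 1][2 * j + 1]) / 4.0
                for j in range(half)
            ]
            for i in range(half)
        ]
        levels.append(coarse)
    return levels


def sample(levels, level, i, j):
    """Read texel (i, j) at a given level; at level L it stands for a
    2**L x 2**L footprint of the original data."""
    return levels[level][i][j]
```

For a 4x4 grid holding the values 0 to 15, the pyramid has three levels, and the single texel of the coarsest level is 7.5, the mean of all sixteen values.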

The input data can be of any kind: geometric data, such as the model of an object, scientific data before visualization, pictures and photographs. It can be time-dependent or not; time-dependent data adds the challenge of updating the representation quickly.

Prediction and simulation

Our algorithms for generating pictures require computations: sampling, integration, simulation... These computations can be optimized if we already know the characteristics of the final picture. Our recent research has shown that it is possible to predict the local characteristics of a picture by studying the phenomena involved: the local complexity, the spatial variations, their direction...

Our goal is to develop new techniques for predicting the properties of a picture, and to adapt our image-generation algorithms to these properties, for example by sampling less in areas of low variation.
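A minimal sketch of "sampling less in areas of low variation": given a predicted local variation per image region (here a plain list of hypothetical values), a fixed sample budget is distributed proportionally, with a guaranteed minimum per region. The proportional rule, the minimum and the function name are illustrative assumptions, not the team's prediction method.

```python
def allocate_samples(variations, budget, min_per_region=1):
    """Split `budget` samples across regions in proportion to `variations`.

    Every region gets at least `min_per_region` samples; the rest is shared
    in proportion to the predicted local variation, and samples lost to
    integer truncation go to the regions with the largest fractional parts.
    """
    n = len(variations)
    base = min_per_region * n
    if budget < base:
        raise ValueError("budget too small for the minimum per region")
    total = sum(variations)
    remaining = budget - base
    if total == 0:
        shares = [remaining / n] * n
    else:
        shares = [remaining * v / total for v in variations]
    counts = [min_per_region + int(s) for s in shares]
    leftover = budget - sum(counts)
    order = sorted(range(n), key=lambda k: shares[k] - int(shares[k]), reverse=True)
    for k in order[:leftover]:
        counts[k] += 1
    return counts
```

With variations [0.0, 1.0, 3.0] and a budget of 12, the flat region keeps only its minimum of 1 sample while the most varying region receives 8.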

Our research problems and approaches are all cross-connected. Research on the impact of pictures is of interest in three different research problems: Computer Visualization, Expressive Rendering and Illumination Simulation. Similarly, our research on Illumination Simulation will use all three research approaches: impact, representations and prediction.

3.2 Cross-cutting research issues

Beyond the connections between our problems and research approaches, we are interested in several issues, which are present throughout all our research:

- Sampling is a ubiquitous process occurring in all our application domains, whether photorealistic rendering (e.g. photon mapping), expressive rendering (e.g. brush strokes), texturing, fluid simulation (Lagrangian methods), etc. When sampling and reconstructing a signal for picture generation, we have to ensure both coherence and homogeneity. By coherence, we mean not introducing spatial or temporal discontinuities in the reconstructed signal. By homogeneity, we mean that samples should be placed regularly in space and time. For a time-dependent signal, these requirements conflict with each other, opening new areas of research.

- Filtering is another ubiquitous process, occurring in all our application domains, whether in realistic rendering (e.g. for integrating height fields, normals, material properties), expressive rendering (e.g. for simplifying strokes) or textures (through non-linearity and discontinuities). It is especially relevant when we replace a signal or data with a lower-resolution version (for hierarchical representation); this involves filtering the data with a reconstruction kernel, representing the transition between levels.

- Performance and scalability are also a common requirement for all our applications. We want our algorithms to be usable on large and complex scenes, which places great importance on scalability. For some applications, we target interactive and real-time performance, with an update frequency between 10 Hz and 120 Hz.

- Coherence and continuity in space and time are also a common requirement of realistic as well as expressive models, and must be ensured despite contradictory requirements. We want to avoid flickering and aliasing.

- Animation: our input data is likely to be time-varying (e.g. animated geometry, physical simulation, time-dependent dataset). A common requirement for all our algorithms and data representations is that they must be compatible with animated data (fast updates for data structures, low-latency algorithms...).
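To make the "homogeneity" requirement for sampling concrete, the sketch below generates 2D samples by dart throwing: a uniformly random candidate is accepted only if it lies at least a distance r from every sample accepted so far, which yields an even, blue-noise-like (Poisson-disk) distribution. This is the classical brute-force algorithm, chosen here for clarity; it ignores temporal coherence, which is precisely the conflicting requirement mentioned for time-dependent signals.

```python
import math
import random


def dart_throwing(radius, max_attempts=10000, seed=0):
    """Poisson-disk samples in the unit square by naive dart throwing.

    Each candidate is uniform in [0, 1)^2 and is kept only if it is at
    least `radius` away from all previously accepted samples. The cost is
    O(attempts * samples); practical implementations use a background grid.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(max_attempts):
        p = (rng.random(), rng.random())
        if all(math.dist(p, q) >= radius for q in samples):
            samples.append(p)
    return samples


def min_distance(points):
    """Smallest pairwise distance, used to check the Poisson-disk property."""
    return min(
        math.dist(points[i], points[j])
        for i in range(len(points))
        for j in range(i + 1, len(points))
    )
```

By construction, `min_distance(dart_throwing(0.1))` is never below 0.1, whereas purely random points of the same count usually contain much closer pairs.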

3.3 Methodology

Our research is guided by several methodological principles:

- Experimentation: to find solutions and phenomenological models, we use experimentation, performing statistical measurements of how a system behaves. We then extract a model from the experimental data.

- Validation: for each algorithm we develop, we look for experimental validation: measuring the behavior of the algorithm, how it scales, how it improves over the state of the art... We also compare our algorithms to the exact solution. Validation is harder for some of our research domains, but it remains a key principle for us.

- Reducing the complexity of the problem: the equations describing certain behaviors in image synthesis can be highly complex, making computation impractical, especially in real time. This is true for physical simulation of fluids, tree growth, illumination simulation... We are looking for emerging phenomena and phenomenological models to describe them (see framed box “Emerging phenomena”). Using these, we simplify the theoretical models in a controlled way, to improve user interaction and accelerate the computations.

- Transferring ideas from other domains: Computer Graphics is, by nature, at the interface of many research domains: physics for the behavior of light, applied mathematics for numerical simulation, biology, algorithmics... We import tools from all these domains, and keep looking for new tools and ideas.

- Developing new fundamental tools: in situations where a problem requires specific tools, we develop them from a theoretical framework. These tools may in turn have applications in other domains, and we are ready to disseminate them.

- Collaborating with industrial partners: we have long experience of collaborating with industrial partners. These collaborations bring us new problems to solve, with short-term or medium-term transfer opportunities. When we cooperate with these partners, we have to find what they actually need, which can be very different from what they want (their expressed need).

4 Application domains

The natural application domain for our research is the production of digital images, for example for movies and special effects, virtual prototyping, video games... Our research has also been applied to tools for generating and editing images and textures, for example generating textures for maps. Our current application domains are:

- Offline and real-time rendering in movie special effects and video games;

- Virtual prototyping;

- Scientific visualization;

- Content modeling and generation (e.g. generating textures for video games, capturing reflectance properties, etc);

- Image creation and manipulation.

5 Social and environmental responsibility

While research in the Maverick team generally involves power-hungry computer hardware (e.g. 1-teraflop graphics cards) and heavy computations, the objective of most of the team's work is to improve the performance of algorithms or to create new methods that obtain results at a lower computational cost. In the long term, the team can therefore be seen as having a favorable impact on the overall energy consumption of computer graphics activities such as movie production, video games, etc.

6 Highlights of the year

6.1 Awards

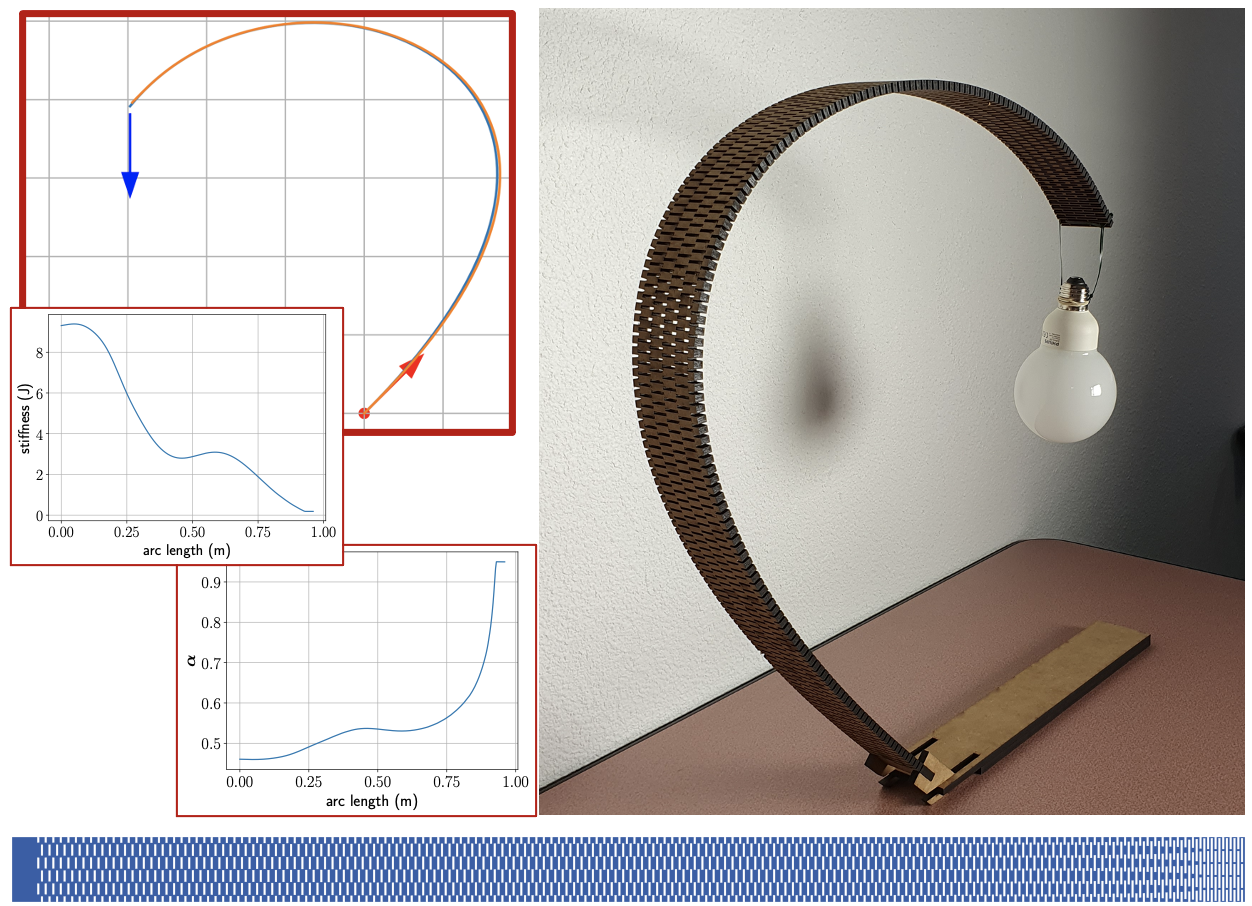

Emmanuel Rodriguez, Georges-Pierre Bonneau, Stefanie Hahmann and Melina Skouras received the Best Paper Award at the conference Solid and Physical Modeling 2022 (SPM 2022) for their paper on Computational Design of Laser-Cut Bending-Active Structures 4 (see Figure 2). This work is co-funded by the FET Open EU project ADAM2.

Best paper award, SPM 2022: teaser.

6.2 Micmap startup project: maturation phase

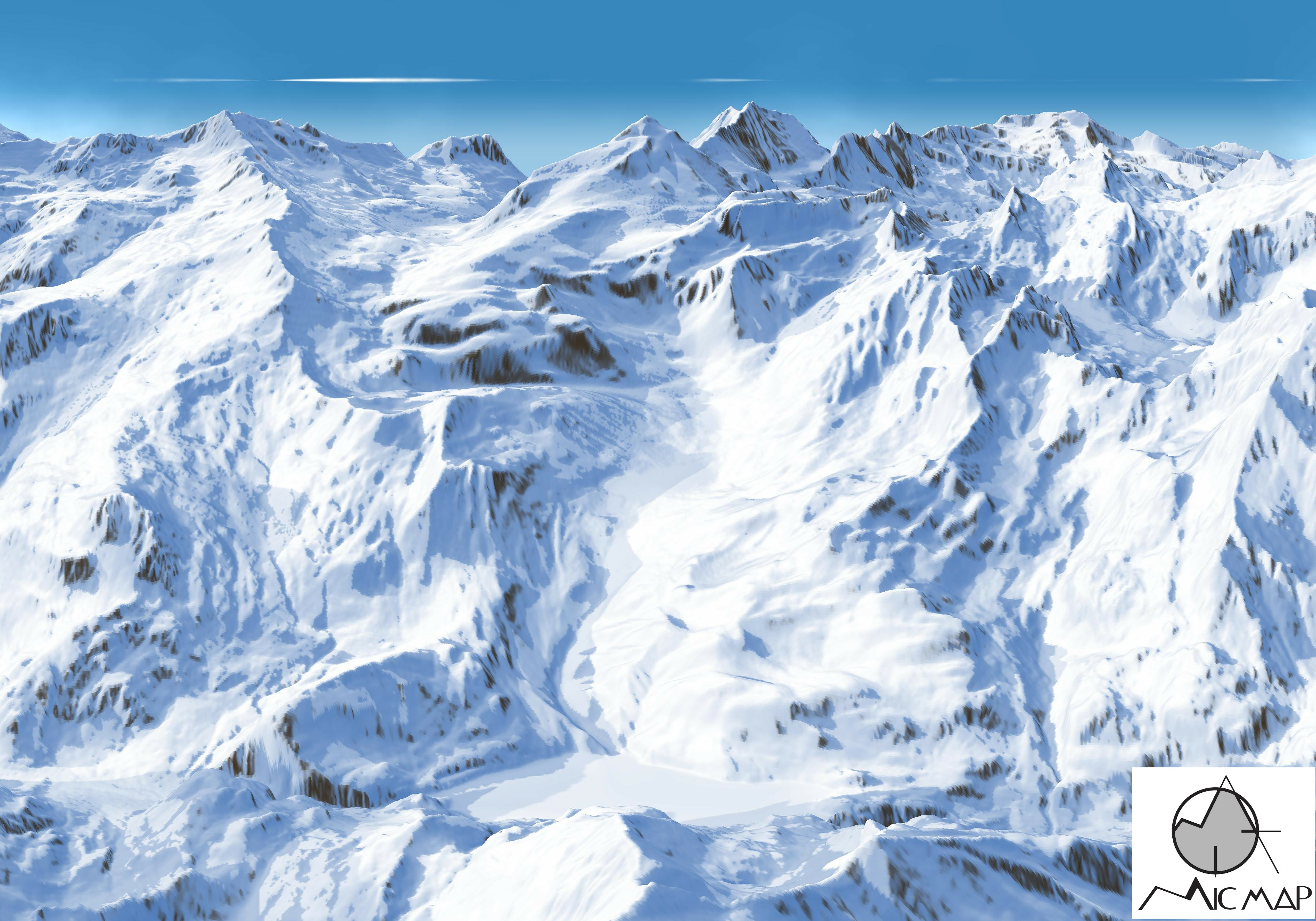

Joëlle Thollot and Romain Vergne are in the process of creating a deeptech startup, Micmap, based on their research on rendering stylized terrain panorama maps (see Figure 3). They have obtained 190 k€ in funding from the Linksium SATT for the maturation phase.

7 New software and platforms

7.1 New software

7.1.1 GRATIN

- Keywords: GLSL Shaders, Vector graphics, Texture Synthesis

- Functional Description: Gratin is a node-based compositing software for creating, manipulating and animating 2D and 3D data. It uses an internal directed acyclic multigraph and provides an intuitive user interface that lets users quickly design complex prototypes. Gratin has several properties that make it useful for researchers and students: (1) it works in real time, since everything is executed on the GPU, using OpenGL, GLSL and/or CUDA; (2) it is easily programmable: users can directly write GLSL scripts inside the interface, or create new C++ plugins that are loaded as new nodes in the software; (3) all parameters can be animated using keyframe curves to generate videos and demos; (4) nodes, groups of nodes or full pipelines can easily be exchanged between people.

- URL:

- Contact: Romain Vergne

- Participants: Pascal Barla, Romain Vergne

- Partner: UJF
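Gratin executes its graph on the GPU; the CPU-side sketch below only illustrates the general principle of a node-based pipeline over a directed acyclic graph: each node lists its inputs, and nodes are evaluated in topological order so that every input is ready when it is needed. The same upstream node may feed several inputs, as in a multigraph. The node names and operations are hypothetical, and Python's standard `graphlib` module (Python 3.9+) stands in for Gratin's own scheduling.

```python
from graphlib import TopologicalSorter


def evaluate(nodes):
    """Evaluate a node graph.

    `nodes` maps a node name to (function, [input node names]). Nodes are
    processed in topological order; a cycle raises graphlib.CycleError.
    """
    graph = {name: set(inputs) for name, (_, inputs) in nodes.items()}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        func, inputs = nodes[name]
        results[name] = func(*(results[i] for i in inputs))
    return results


# Hypothetical pipeline on tiny 1D "images": two sources, a blend, then a gain.
pipeline = {
    "gain": (lambda img: [2 * x for x in img], ["blend"]),
    "blend": (lambda a, b: [(x + y) / 2 for x, y in zip(a, b)], ["noise", "ramp"]),
    "noise": (lambda: [0.0, 1.0, 0.0, 1.0], []),
    "ramp": (lambda: [0.0, 0.25, 0.5, 0.75], []),
}
```

The dictionary order does not matter: `evaluate(pipeline)["gain"]` still computes the sources first, then the blend, then the gain.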

7.1.2 HQR

- Name: High Quality Renderer

- Keywords: Lighting simulation, Materials, Plug-in

- Functional Description: HQR is a global lighting simulation platform. It is based on photon mapping, a method capable of solving the light balance equation and of producing a high-quality solution. Through a graphical user interface, it reads X3D scenes using the X3DToolKit package developed at Maverick, lets the user tune several parameters, computes photon maps, and reconstructs information to obtain a high-quality solution. HQR also accepts plugins, which considerably eases the development of new global illumination algorithms, as they benefit from the existing code for handling materials, geometry and light sources.

- URL:

- Contact: Cyril Soler

- Participant: Cyril Soler
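Photon mapping reconstructs illumination with a density estimate: the irradiance at a point is approximated by the total power of its k nearest photons divided by the area π r² of the disc that contains them. The brute-force sketch below (flat surface, no BRDF, sorting instead of a kd-tree) illustrates that estimate only; it is not HQR's code, and the function names are hypothetical.

```python
import math


def irradiance_estimate(photons, x, k):
    """k-nearest-neighbour irradiance estimate at the 2D point `x`.

    `photons` is a list of ((px, py), power). The k closest photons are
    found by sorting (a kd-tree is used in practice), r is the distance to
    the k-th one, and E(x) ~= sum(power) / (pi * r^2).
    """
    nearest = sorted(photons, key=lambda ph: math.dist(ph[0], x))[:k]
    r = math.dist(nearest[-1][0], x)
    return sum(power for _, power in nearest) / (math.pi * r * r)


def uniform_grid_photons(n, total_power=1.0):
    """n x n photons on the unit square sharing `total_power` equally, so
    the exact irradiance is total_power / area = total_power."""
    h = 1.0 / n
    power = total_power / (n * n)
    return [(((i + 0.5) * h, (j + 0.5) * h), power)
            for i in range(n) for j in range(n)]
```

On `uniform_grid_photons(100)`, the estimate at (0.5, 0.5) with k = 50 lands close to the exact value of 1; the residual error comes from the discrete photon positions, and the usual bias/variance trade-off is governed by k.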

7.1.3 libylm

- Name: LibYLM

- Keyword: Spherical harmonics

- Functional Description: This library implements spherical and zonal harmonics. It provides the means to perform decompositions and manipulate spherical harmonic distributions, and includes its own viewer to visualize these distributions.

- URL:

- Author: Cyril Soler

- Contact: Cyril Soler
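To illustrate what a spherical harmonic decomposition computes, the sketch below projects a function on the sphere onto the first two real SH bands (l = 0 and l = 1) by numerical quadrature over a Fibonacci point set, then reconstructs it. It uses one common real-SH convention without the Condon-Shortley phase; the function names are hypothetical and do not reflect LibYLM's API.

```python
import math

C0 = 0.5 * math.sqrt(1.0 / math.pi)       # Y_0^0
C1 = math.sqrt(3.0 / (4.0 * math.pi))     # normalisation of band l = 1


def sh_basis(d):
    """Real SH basis up to l = 1 for a unit vector d = (x, y, z),
    ordered as (Y00, Y1-1, Y10, Y11)."""
    x, y, z = d
    return (C0, C1 * y, C1 * z, C1 * x)


def fibonacci_sphere(n):
    """n quasi-uniform unit vectors; each represents a solid angle 4*pi/n."""
    golden = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(n):
        z = 1.0 - (2.0 * i + 1.0) / n
        r = math.sqrt(1.0 - z * z)
        phi = i * golden
        points.append((r * math.cos(phi), r * math.sin(phi), z))
    return points


def project(f, n=20000):
    """Coefficients c_lm = integral of f(d) * Y_lm(d) over the sphere."""
    weight = 4.0 * math.pi / n
    coeffs = [0.0] * 4
    for d in fibonacci_sphere(n):
        fd = f(d)
        for k, y in enumerate(sh_basis(d)):
            coeffs[k] += weight * fd * y
    return coeffs


def reconstruct(coeffs, d):
    """Evaluate the truncated SH expansion in direction d."""
    return sum(c * y for c, y in zip(coeffs, sh_basis(d)))
```

The function f(d) = 1 + z lies entirely in bands 0 and 1, so `reconstruct(project(f), (0, 0, 1))` recovers its value 2 up to quadrature error; higher-frequency functions would need more bands.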

7.1.4 ShwarpIt

- Name: ShwarpIt

- Keyword: Warping

- Functional Description: ShwarpIt is a simple mobile app that lets you manipulate the perception of shapes in images. Slide the ShwarpIt slider to the right to make shapes appear rounder, or to the left to make them appear flatter. The Scale slider gives you control over the scale of the warping deformation.

- URL:

- Contact: Georges-Pierre Bonneau

7.1.5 iOS_system

- Keyword: IOS

- Functional Description: From a programmer's point of view, iOS behaves almost like BSD Unix. Most existing open-source programs can be compiled and run on iPhones and iPads. One key exception is that there is no way to call another program (system() or fork()/exec()). This library fills the gap, providing an emulation of system() and exec() through dynamic libraries and an emulation of fork() using threads.

While threads cannot provide a perfect replacement for fork(), the result is good enough for most uses, and open-source programs can easily be ported to iOS with minimal effort. Examples of software ported using this library include TeX, Python, Lua and llvm/clang.

- Release Contributions: This version makes iOS_system available as Swift Packages, making integration into other projects easier.

- URL:

- Contact: Nicolas Holzschuch
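The core idea of emulating system() without fork() can be sketched as follows: instead of creating a new process, the command's entry point is looked up and run in a new thread of the same process, and the caller waits for its exit status. iOS_system finds entry points in dynamic libraries; here a plain dictionary of Python functions stands in for them, a hypothetical simplification. Because the "child" shares the process's memory and global state, this is not a perfect replacement for fork(), as noted above.

```python
import shlex
import threading

# Hypothetical command table: name -> entry point taking argv, returning a status.
COMMANDS = {}


def command(name):
    """Register a function as the entry point of command `name`."""
    def register(func):
        COMMANDS[name] = func
        return func
    return register


@command("echo")
def echo_main(argv):
    print(" ".join(argv[1:]))
    return 0


@command("false")
def false_main(argv):
    return 1


def emulated_system(cmdline):
    """Run `cmdline` like system(): split it into argv, find the entry point,
    run it in a thread (no fork), wait, and return its exit status."""
    argv = shlex.split(cmdline)
    entry = COMMANDS.get(argv[0]) if argv else None
    if entry is None:
        return 127  # conventional shell status for "command not found"
    status = {}

    def run():
        status["code"] = entry(argv)

    worker = threading.Thread(target=run)
    worker.start()
    worker.join()
    # If the entry point raised, no status was recorded: report failure.
    return status.get("code", 1)
```

A call such as `emulated_system("echo hello")` prints "hello" and returns 0, all within the calling process.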

7.1.6 Carnets for Jupyter

- Keywords: IOS, Python

- Functional Description: Jupyter notebooks are a very convenient tool for prototyping, teaching and research. Combining text, code snippets and the results of code execution, they allow users to write down ideas, test them and share them. Jupyter notebooks usually require a connection to a remote server, and thus a stable network connection, which is not always available (e.g. during field trips or while travelling). Carnets runs both the server and the client locally on the iPhone or iPad, allowing users to create, edit and run Jupyter notebooks locally.

- URL:

- Contact: Nicolas Holzschuch

7.1.7 a-Shell

- Keywords: IOS, Smartphone

- Functional Description: a-Shell is a terminal emulator for iOS. It behaves like a Unix terminal and lets the user run commands. All these commands are executed locally, on the iPhone or iPad. Commands available include standard terminal commands (ls, cp, rm, mkdir, tar, nslookup...) but also programming languages such as Python, Lua, C and C++. TeX is also available. Users familiar with Unix tools can run their favorite commands on their mobile device, on the go, without the need for a network connection.

- URL:

- Contact: Nicolas Holzschuch

7.1.8 X3D TOOLKIT

- Name: X3D Development platform

- Keywords: X3D, Geometric modeling

- Functional Description: X3DToolkit is a library to parse and write X3D files that supports plugins and extensions.

- URL:

- Contact: Cyril Soler

- Participants: Gilles Debunne, Yannick Le Goc

7.1.9 GigaVoxels

- Functional Description: GigaVoxels is a software platform whose goal is the real-time, high-quality rendering of very large and very detailed scenes that cannot fit in memory. Its performance allows showing details over deep zooms and walking through very crowded scenes (currently limited to rigid ones). The principle is to represent data on the GPU as a Sparse Voxel Octree whose multiscale voxel bricks are produced on demand, only when necessary and only at the required resolution, and kept in an LRU cache. A user-defined producer, spanning CPU and GPU, can load, transform or procedurally create the data. Another user-defined function is called to shade each voxel according to the user-defined voxel content, so that the user can choose to compute appearance at creation time (for faster rendering) or on the fly (for storage-free, thin procedural details). Rendering is done efficiently with GPU differential cone tracing, using the scale corresponding to the 3D-MIPmapping level of detail, which allows high-quality rendering with one single ray per pixel. Data is produced in case of a cache miss, and thus only when visible (accounting for view frustum and occlusion). Soft shadows and depth of field are easily obtained using larger cones, and are indeed cheaper than unblurred rendering. Besides the representation, data management and base rendering algorithm themselves, we also worked on real-time light transport and on high-quality prefiltering of complex data. Ongoing research addresses animation. GigaVoxels is currently used for the high-quality real-time exploration of the detailed galaxy in ANR RTIGE. Most of the work published by Cyril Crassin et al. during his PhD (see http://maverick.inria.fr/Members/Cyril.Crassin/ ) is related to GigaVoxels. GigaVoxels is available for Windows and Linux under the BSD-3 licence.

- URL:

- Contact: Fabrice Neyret

- Participants: Cyril Crassin, Eric Heitz, Fabrice Neyret, Jérémy Sinoir, Pascal Guehl, Prashant Goswami
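Two ingredients of this description can be sketched compactly: bricks are produced on demand on a cache miss and kept in an LRU cache, and the cone footprint selects the MIP level. In the sketch below the producer is a hypothetical callback, the cache is a CPU-side `OrderedDict` rather than GigaVoxels' GPU cache, and the level-of-detail rule (log2 of the cone diameter over the finest voxel size) is a common choice for cone tracing, assumed here rather than taken from the GigaVoxels code.

```python
import math
from collections import OrderedDict


class BrickCache:
    """Fixed-capacity LRU cache of voxel bricks with on-demand production."""

    def __init__(self, capacity, producer):
        self.capacity = capacity
        self.producer = producer      # hypothetical callback: key -> brick data
        self.bricks = OrderedDict()
        self.misses = 0

    def get(self, key):
        if key in self.bricks:
            self.bricks.move_to_end(key)      # mark as most recently used
            return self.bricks[key]
        self.misses += 1                      # cache miss: produce the brick now
        brick = self.producer(key)
        self.bricks[key] = brick
        if len(self.bricks) > self.capacity:
            self.bricks.popitem(last=False)   # evict the least recently used brick
        return brick


def cone_lod(distance, aperture, finest_voxel, max_level):
    """MIP level whose voxel size matches the cone diameter at `distance`.

    `aperture` is the full cone angle in radians; level 0 is the finest.
    """
    diameter = 2.0 * distance * math.tan(aperture / 2.0)
    if diameter <= finest_voxel:
        return 0.0
    return min(math.log2(diameter / finest_voxel), float(max_level))
```

Wider cones (soft shadows, depth of field) map to coarser levels, which is consistent with blurred effects being cheaper than sharp ones.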

7.1.10 MobiNet

- Keywords: Co-simulation, Education, Programming

- Functional Description: The MobiNet software allows for the creation of simple applications such as video games, virtual physics experiments or pedagogical math illustrations. It relies on an intuitive graphical interface and language which allows the user to program a set of mobile objects (possibly through a network). It is available in the public domain for Linux, Windows and MacOS.

- URL:

- Contact: Fabrice Neyret

- Participants: Fabrice Neyret, Franck Hétroy, Joelle Thollot, Samuel Hornus, Sylvain Lefebvre

- Partners: CNRS, LJK, INP Grenoble, Inria, IREM, Cies, GRAVIR

7.1.11 PROLAND

- Name: PROcedural LANDscape

- Keywords: Atmosphere, Masses of data, Realistic rendering, 3D, Real time, Ocean

- Functional Description: The goal of this platform is the real-time, high-quality rendering and editing of large landscapes. All features can work with planet-sized terrains, for all viewpoints from ground to space. Most of the work published by Eric Bruneton and Fabrice Neyret (see http://evasion.inrialpes.fr/Membres/Eric.Bruneton/ ) has been done within Proland and integrated into the main branch. Proland is available under the BSD-3 licence.

- URL:

- Contact: Fabrice Neyret

- Participants: Antoine Begault, Eric Bruneton, Fabrice Neyret, Guillaume Piolet

8 New results

8.1 Hierarchical Animation in Complex Scenes

8.1.1 Interactive simulation of plume and pyroclastic volcanic ejections

Participants: Maud Lastic, Damien Rohmer, Guillaume Cordonnier, Claude Jaupart, Fabrice Neyret, Marie-Paule Cani.

We propose an interactive animation method for the ejection of gas and ash mixtures in volcanic eruptions. Our novel, layered solution combines a coarse-grain, physically-based simulation of the ejection dynamics with a consistent, procedural animation of multi-resolution details. We show that this layered model can be used to capture the two main types of ejection, namely ascending plume columns composed of rapidly rising gas carrying ash, which progressively entrains more air, and pyroclastic flows, which descend the slopes of the volcano depositing ash and give rise to smaller plumes along the way. We validate the large-scale consistency of our model through comparison with geoscience data, and discuss both real-time visualization and off-line, realistic rendering (see Figure 4). This work was published in the journal Proceedings of the ACM on Computer Graphics and Interactive Techniques 2.

Interactive simulation (left) and off-line rendering of our work on ejection phenomena during volcanic eruption: Rising column under the wind (middle); Pyroclastic flow (right), where secondary columns are generated along the way.

8.2 Light Transport Simulation

8.2.1 Spectral Analysis of the Light Transport Operator

Participants: Ronak Molazem, Cyril Soler.

In this work we study the spectral properties of the light transport operator. We proved in particular that this operator is not compact, and therefore the light transport equation does not benefit from the guarantees that normally come with Fredholm equations. This contradicts several previous works that took the compactness of the light transport operator for granted, and it explains why methods struggle to bound errors near scene edges, where the integral kernel is unbounded.

This study also led us to devise new methods to compute eigenvalues of the light transport operator in Lambertian scenes (where, in some situations, it locally behaves like a compact operator despite not being compact). These methods combine results from pure and abstract mathematics: resolvent theory, Monte Carlo factorisation of large matrices and Fredholm determinants. We proved in particular that it is possible to compute the eigenvalues of the light transport operator by integrating "circular" light paths of various lengths across the scene, and that eigenvalues are real in Lambertian scenes despite the operator not being normal.
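The link between circular light paths and eigenvalues can be illustrated in finite dimension. For a matrix K, the trace of K^n sums the products K[i1,i2] K[i2,i3] ... K[in,i1] over all closed index sequences (a discrete counterpart of circular light paths with n bounces), and it equals the sum of the n-th powers of the eigenvalues. The sketch below assumes a small radiosity-style matrix K = diag(ρ) F for Lambertian patches with reciprocal form factors, recovers the characteristic polynomial from these traces with Newton's identities, and compares its roots with a direct eigensolver. It is only a discrete analogue: the continuous operator is infinite-dimensional and not compact, which is why resolvent theory and Fredholm determinants are needed there.

```python
import numpy as np


def radiosity_matrix(n, seed=0):
    """Random Lambertian 'scene': K = diag(rho) @ F, with reciprocal form
    factors (A_i F_ij = A_j F_ji) and row sums of F below 1."""
    rng = np.random.default_rng(seed)
    s = rng.uniform(0.0, 1.0, (n, n))
    s = (s + s.T) / 2.0                      # symmetric matrix S_ij = A_i F_ij
    np.fill_diagonal(s, 0.0)                 # flat patches do not see themselves
    areas = rng.uniform(0.5, 2.0, n)
    form = s / areas[:, None]                # F_ij = S_ij / A_i
    form /= 1.1 * form.sum(axis=1).max()     # uniform scaling keeps reciprocity
    rho = rng.uniform(0.2, 0.8, n)
    return rho[:, None] * form


def eigenvalues_from_traces(k):
    """Eigenvalues of k obtained only from traces of its powers."""
    n = k.shape[0]
    traces = [np.trace(np.linalg.matrix_power(k, m)) for m in range(1, n + 1)]
    # Newton's identities: m * e_m = sum_{i=1..m} (-1)^(i-1) * e_{m-i} * p_i
    e = [1.0]
    for m in range(1, n + 1):
        e.append(sum((-1) ** (i - 1) * e[m - i] * traces[i - 1]
                     for i in range(1, m + 1)) / m)
    # Characteristic polynomial: x^n - e1 x^(n-1) + e2 x^(n-2) - ...
    coeffs = [(-1) ** m * e[m] for m in range(n + 1)]
    return np.roots(coeffs)
```

In this discrete Lambertian setting, reciprocity makes K similar to the symmetric matrix D^(1/2) S D^(1/2) with D = diag(ρ/A), so its eigenvalues are real even though K itself is not symmetric, mirroring the property stated above for the continuous operator.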

This work is part of the PhD of Ronak Molazem and is funded by the ANR project "CaLiTrOp". One part of this work was published at Siggraph 2022 9. A short video presenting the problem and how we solved it is available online. The other part (eigenvalue calculation methods) will be submitted later.

Ronak Molazem defended her PhD on September 30, 2022 12.

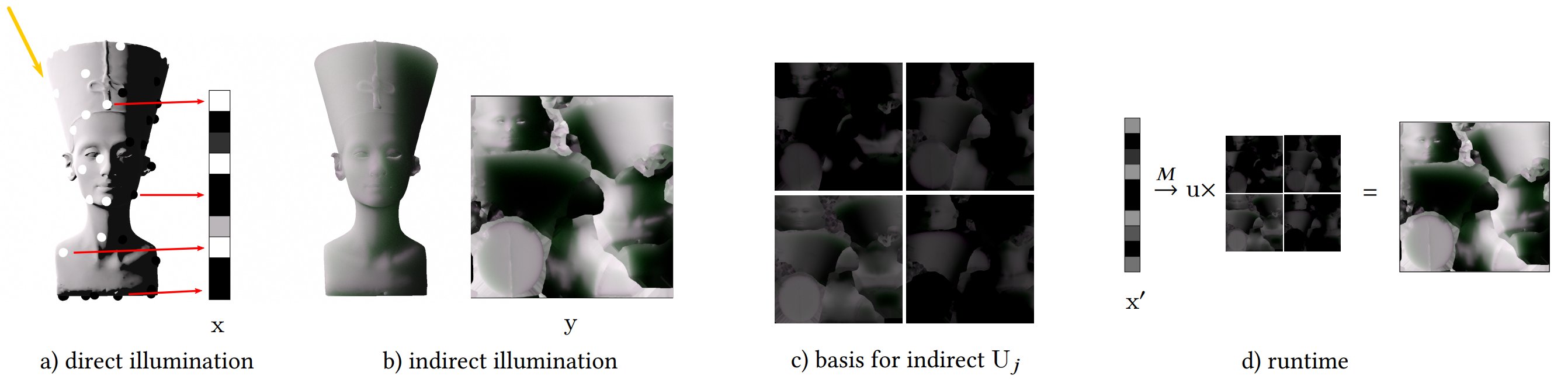

8.2.2 Data-Driven Precomputed Radiance Transfer

Participants: Laurent Belcour, Thomas Deliot, Wilhem Barbier, Cyril Soler.

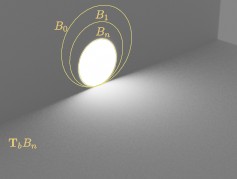

In this work, we explore a change of paradigm: building Precomputed Radiance Transfer (PRT) methods in a data-driven way. This paradigm shift allows us to alleviate the difficulties of building traditional PRT methods, such as defining a reconstruction basis or coding a dedicated path tracer to compute a transfer function. Our objective is to pave the way for machine-learning methods by providing a simple baseline algorithm. More specifically, we demonstrate real-time rendering of indirect illumination in hair and surfaces from a few measurements of direct lighting. We build our baseline from pairs of direct and indirect illumination renderings, using only standard tools such as Singular Value Decomposition (SVD) to extract both the reconstruction basis and the transfer function from the observed data itself.
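A minimal, linear reading of such a baseline can be sketched with NumPy: stack training pairs of direct and indirect renderings as columns, take a truncated SVD of the direct renderings to obtain a reconstruction basis, and fit, by least squares, a transfer matrix mapping basis coefficients to indirect illumination. The synthetic data, the chosen rank and the function names are assumptions made for illustration; the published method may differ in its details.

```python
import numpy as np


def fit_transfer(direct, indirect, rank):
    """Learn a low-rank linear map from direct to indirect illumination.

    direct, indirect: (pixels, samples) arrays whose columns are paired
    renderings. Returns (basis, transfer): basis is (pixels, rank), the
    leading left singular vectors of `direct`; transfer is (pixels, rank).
    """
    u, s, vt = np.linalg.svd(direct, full_matrices=False)
    basis = u[:, :rank]
    # Least-squares fit of indirect ~= transfer @ (basis.T @ direct), where
    # basis.T @ direct = diag(s) @ vt for the kept components.
    transfer = indirect @ vt[:rank].T / s[:rank]
    return basis, transfer


def predict_indirect(basis, transfer, direct_image):
    """Project direct lighting on the basis, then apply the transfer."""
    coeffs = basis.T @ direct_image
    return transfer @ coeffs
```

At run time, only `rank` coefficients of the direct lighting are needed per frame, which is what makes this kind of reconstruction cheap enough for real-time use.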

This work was a collaboration with Unity Research Grenoble (Laurent Belcour, Thomas Deliot). It was published at Eurographics 2022 7.

8.2.3 Unbiased Caustics Rendering guided by representative specular paths

Participants: Nicolas Holzschuch, Beibei Wang.

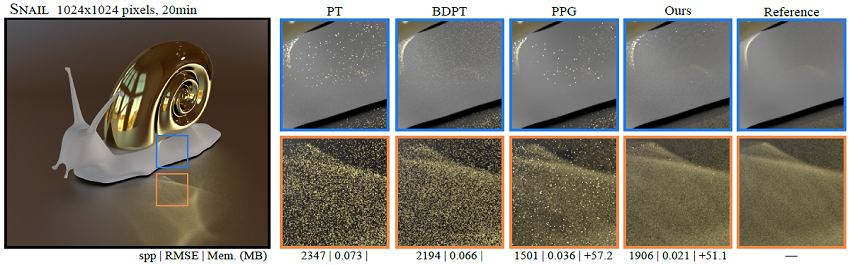

Equal-time comparison between our new method for computing caustics and the state-of-the-art.

Caustics are interesting patterns caused by light being focused when it reflects off glossy materials. Rendering them in computer graphics is still challenging: they correspond to high luminous intensity focused over a small area. Finding the paths that contribute to this small area is difficult, and even more so when using camera-based path tracing instead of bidirectional approaches. Recent improvements in path guiding are still unable to efficiently find the light paths that contribute to a caustic. We have developed a novel path guiding approach to enable reliable rendering of caustics. Our approach relies on computing representative specular paths, then extending them using a chain of spherical Gaussians. We use these extended paths to estimate the incident radiance distribution and guide path tracing. We combine this approach with several practical strategies, such as spatial reuse and a parallax-aware representation for arbitrarily curved reflectors. Our path-guided algorithm using extended specular paths outperforms current state-of-the-art methods and handles multiple bounces of light and a variety of scenes (see Figure 7). This work was published as a conference paper at Siggraph Asia 2022.
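Spherical Gaussians (SGs) are convenient for this kind of guiding because the product of two SGs is again an SG and a normalized lobe can be sampled exactly. The helpers below use the common form G(v) = a * exp(lam * (dot(mu, v) - 1)); how the method chains and fits these lobes along specular paths is not reproduced here, so this sketch only shows the building blocks, and its function names are hypothetical.

```python
import math
import random


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def sg_product(mu1, lam1, a1, mu2, lam2, a2):
    """The product of two SGs a*exp(lam*(dot(mu, v) - 1)) is an SG.
    (Undefined when the lobes cancel exactly, i.e. lam1*mu1 = -lam2*mu2.)"""
    m = tuple(lam1 * x + lam2 * y for x, y in zip(mu1, mu2))
    lam = math.sqrt(sum(c * c for c in m))
    mu = tuple(c / lam for c in m)
    amp = a1 * a2 * math.exp(lam - lam1 - lam2)
    return mu, lam, amp


def sg_pdf(mu, lam, v):
    """Density of the normalized SG (von Mises-Fisher) lobe at direction v."""
    cos_t = sum(x * y for x, y in zip(mu, v))
    norm = lam / (2.0 * math.pi * (1.0 - math.exp(-2.0 * lam)))
    return norm * math.exp(lam * (cos_t - 1.0))


def sample_sg(mu, lam, rng):
    """Draw a unit direction distributed according to sg_pdf(mu, lam, .)."""
    xi1, xi2 = rng.random(), rng.random()
    # Inverse CDF of cos(theta) for a density proportional to exp(lam*cos).
    cos_t = 1.0 + math.log(xi1 + (1.0 - xi1) * math.exp(-2.0 * lam)) / lam
    sin_t = math.sqrt(max(0.0, 1.0 - cos_t * cos_t))
    phi = 2.0 * math.pi * xi2
    # Orthonormal frame (t, b, mu) around the lobe axis.
    helper = (1.0, 0.0, 0.0) if abs(mu[0]) < 0.9 else (0.0, 1.0, 0.0)
    t = normalize((helper[1] * mu[2] - helper[2] * mu[1],
                   helper[2] * mu[0] - helper[0] * mu[2],
                   helper[0] * mu[1] - helper[1] * mu[0]))
    b = (mu[1] * t[2] - mu[2] * t[1],
         mu[2] * t[0] - mu[0] * t[2],
         mu[0] * t[1] - mu[1] * t[0])
    return tuple(sin_t * math.cos(phi) * t[i] + sin_t * math.sin(phi) * b[i]
                 + cos_t * mu[i] for i in range(3))
```

Multiplying two aligned lobes adds their sharpness (lam1 + lam2), which is how repeated products along a path concentrate the guiding distribution.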

8.3 Modelisation of material appearance

8.3.1 Constant-Cost Spatio-Angular Prefiltering of Glinty Appearance Using Tensor Decomposition

Participants: Nicolas Holzschuch, Beibei Wang.

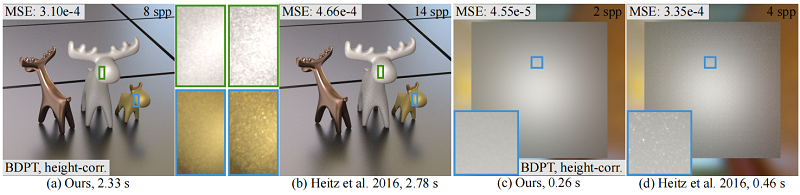

Comparison between our constant-cost method for computing glints and the state of the art by Yan et al. [2016]

The detailed glinty appearance from complex surface microstructures enhances the level of realism, but is both storage- and time-consuming to render, especially when viewed from far away (large spatial coverage) and/or illuminated by area lights (large angular coverage). We have developed a way to formulate the glinty appearance rendering process as a spatio-angular range query problem on the Normal Distribution Functions (NDFs), and introduced an efficient spatio-angular prefiltering solution to it. We start by exhaustively precomputing all possible NDFs with differently sized positional coverages. Then we compress the precomputed data using tensor rank decomposition, which enables accurate and fast angular range queries. With our spatio-angular prefiltering scheme, we solve both the storage and performance issues at the same time, leading to efficient rendering of glinty appearance with both constant storage and constant performance, regardless of the range of spatio-angular queries. Finally, we demonstrate that our method easily applies to practical rendering applications that were traditionally considered difficult, for example efficient bidirectional reflectance distribution function (BRDF) evaluation, accurate NDF importance sampling, fast global illumination between glinty objects, high-frequency preserving rendering with environment lighting, and tile-based synthesis of glinty appearance (see Figure 8). This work was published in the journal ACM Transactions on Graphics 1.
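Why a rank decomposition helps with range queries can be shown on a 2D table, where the rank decomposition reduces to an SVD: once the table T(u, v) is written as a sum of R separable terms a_r(u) * b_r(v), the sum over any rectangle [u0, u1) x [v0, v1) factorizes into R products of 1D sums, each read from a prefix-sum array in constant time. The table here stands in for a tabulated NDF; the method described above works with higher-order tensors and its own decomposition, which this sketch does not reproduce, and the class name is hypothetical.

```python
import numpy as np


class SeparableRangeQuery:
    """Rectangle sums in O(R) over a table stored as R separable terms."""

    def __init__(self, table, rank):
        u, s, vt = np.linalg.svd(table, full_matrices=False)
        a = u[:, :rank] * s[:rank]            # (rows, R): scaled row factors a_r(u)
        b = vt[:rank].T                       # (cols, R): column factors b_r(v)
        # Prefix sums with a leading zero row: pa[i] = sum of a[:i].
        self.pa = np.vstack([np.zeros(rank), np.cumsum(a, axis=0)])
        self.pb = np.vstack([np.zeros(rank), np.cumsum(b, axis=0)])

    def query(self, u0, u1, v0, v1):
        """Sum of the (approximated) table over rows [u0, u1) and columns [v0, v1)."""
        sum_a = self.pa[u1] - self.pa[u0]     # one 1D range sum per term
        sum_b = self.pb[v1] - self.pb[v0]
        return float(np.dot(sum_a, sum_b))    # cost independent of the range size
```

With full rank the query is exact; truncating R trades accuracy for storage, while the query cost stays constant whatever the size of the rectangle.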

8.3.2 Position-Free Multiple-Bounce Computations for Smith Microfacet BSDFs

Participants: Nicolas Holzschuch, Beibei Wang.

Comparison between our method for computing multiple bounces in microfacet BSDFs and previous work by Heitz et al. [2016]. Our method is less noisy for the same computation time.

Bidirectional Scattering Distribution Functions (BSDFs) encode how a material reflects or transmits the incoming light. The most commonly used model is the microfacet BSDF. It computes the material response from the microgeometry of the surface, assuming a single bounce on specular microfacets. The original model ignores multiple bounces on the microgeometry, resulting in an energy loss, especially for rough materials. We have developed a new method to compute the multiple bounces inside the microgeometry, eliminating this energy loss. Our method relies on a position-free formulation of multiple bounces inside the microgeometry. We use an explicit mathematical definition of the path space that describes single and multiple bounces in a uniform way. We then study the behavior of light on the different vertices and segments in the path space, leading to a reciprocal multiple-bounce description of BSDFs. Furthermore, we present practical, unbiased Monte Carlo estimators to compute multiple scattering. Our method is less noisy than existing algorithms for computing multiple scattering. It is almost noise-free at very low sampling rates, from 2 to 4 samples per pixel (spp) (see Figure 9). This work was accepted at Siggraph 2022 and published in the journal ACM Transactions on Graphics 5.
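The energy loss that motivates this work is easy to observe numerically. The sketch below estimates the directional albedo of a single-scattering GGX microfacet BRDF with a perfectly reflective Fresnel term (F = 1) and the separable Smith masking-shadowing term; a lossless model would return 1. The choice of GGX, of the separable Smith term and the function names are assumptions for this illustration; it shows the problem only, not the position-free multiple-bounce estimator.

```python
import math
import random


def smith_g1_ggx(cos_theta, alpha):
    """Smith masking for GGX: G1 = 2 / (1 + sqrt(1 + alpha^2 tan^2(theta)))."""
    tan2 = (1.0 - cos_theta * cos_theta) / (cos_theta * cos_theta)
    return 2.0 / (1.0 + math.sqrt(1.0 + alpha * alpha * tan2))


def single_scatter_albedo(alpha, cos_theta_v, n_samples=100000, seed=0):
    """Monte Carlo albedo of a GGX BRDF with F = 1 and separable Smith G.

    Microfacet normals h are sampled with density D(h) (n.h); the weight
    f * cos(theta_l) / pdf(l) then simplifies to G (v.h) / ((n.v) (n.h)).
    Paths reflected below the surface contribute nothing (energy lost).
    """
    rng = random.Random(seed)
    sin_v = math.sqrt(1.0 - cos_theta_v * cos_theta_v)
    v = (sin_v, 0.0, cos_theta_v)
    g1_v = smith_g1_ggx(cos_theta_v, alpha)
    total = 0.0
    for _ in range(n_samples):
        xi1, xi2 = rng.random(), rng.random()
        theta_h = math.atan(alpha * math.sqrt(xi1 / (1.0 - xi1)))
        phi = 2.0 * math.pi * xi2
        h = (math.sin(theta_h) * math.cos(phi),
             math.sin(theta_h) * math.sin(phi),
             math.cos(theta_h))
        v_dot_h = sum(a * b for a, b in zip(v, h))
        if v_dot_h <= 0.0:
            continue
        l_z = 2.0 * v_dot_h * h[2] - v[2]     # z component of the mirrored direction
        if l_z <= 0.0:
            continue                          # reflected below the surface
        g = g1_v * smith_g1_ggx(l_z, alpha)
        total += g * v_dot_h / (cos_theta_v * h[2])
    return total / n_samples
```

At normal incidence, a nearly smooth surface (alpha = 0.05) returns almost all the energy, while a very rough one (alpha = 1) loses a large fraction of it; multiple-bounce models are designed to recover that missing energy.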

8.3.3 SVBRDF Recovery from a Single Image with Highlights Using a Pre-Trained Generative Adversarial Network

Participants: Nicolas Holzschuch, Beibei Wang.

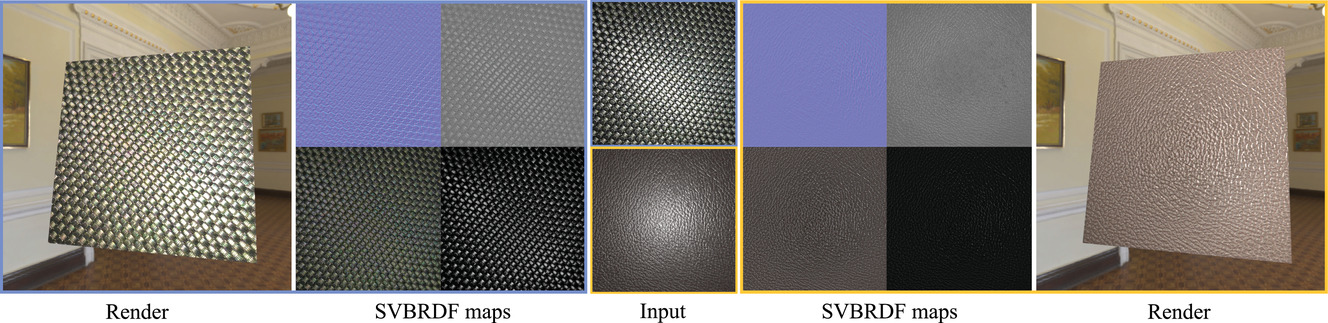

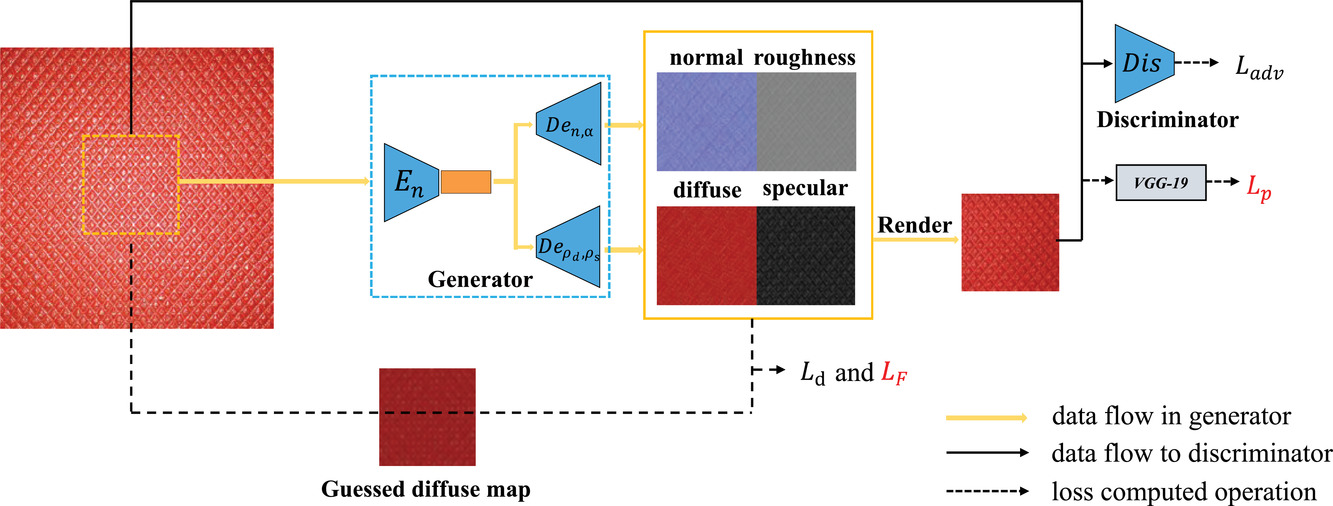

Our method generates high-quality and natural SVBRDF maps from a single input photograph.

Spatially varying bidirectional reflectance distribution functions (SVBRDFs) are crucial for designers to incorporate new materials in virtual scenes and make them look more realistic. Reconstruction of SVBRDFs is a long-standing problem. Existing methods either rely on an extensive acquisition system or require huge datasets, which are non-trivial to acquire. We aim to recover SVBRDFs from a single image, without any dataset. A single image contains incomplete information about the SVBRDF, making the reconstruction task highly ill-posed. Without prior knowledge learned from a dataset, it is also difficult to separate the changes in colour caused by the material from those caused by the illumination. We use an unsupervised generative adversarial network (GAN) to recover SVBRDF maps from a single input image (see Figure 11). To better separate the effects of illumination from those of the material, we add the hypothesis that the material is stationary and introduce a new loss function based on Fourier coefficients to enforce this stationarity. For efficiency, we train the network in two stages: we reuse a trained model to initialize the SVBRDFs, then fine-tune it on the input image. Our method generates high-quality SVBRDF maps from a single input photograph, and provides more vivid rendering results than previous work (see Figure 10). The two-stage training boosts runtime performance, making our method eight times faster than previous work. This work was published in the journal Computer Graphics Forum 6.
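One plausible form of a Fourier-based stationarity term is sketched below under our own assumptions; the exact loss used in the paper may differ. The generated map is split into tiles, and the term penalizes how much each tile's Fourier amplitude spectrum deviates from the mean spectrum. Amplitudes discard phase, so they are insensitive to circular shifts of a tile's content, whereas a low-frequency shading gradient baked into the map changes the spectra from tile to tile. The name `fourier_stationarity_loss` is hypothetical, and NumPy stands in for the differentiable framework a real training loop would use.

```python
import numpy as np


def fourier_stationarity_loss(svbrdf_map, tile):
    """Hypothetical stationarity penalty for an (H, W) or (H, W, C) map.

    Splits the map into non-overlapping tile x tile blocks, takes the
    magnitude of each block's 2D DFT, and returns the mean squared
    deviation of these amplitude spectra from their average.
    """
    h, w = svbrdf_map.shape[:2]
    blocks = [
        svbrdf_map[y:y + tile, x:x + tile]
        for y in range(0, h - tile + 1, tile)
        for x in range(0, w - tile + 1, tile)
    ]
    # DFT over the two spatial axes only; channels (if any) stay separate.
    spectra = np.stack([np.abs(np.fft.fft2(b, axes=(0, 1))) for b in blocks])
    mean_spectrum = spectra.mean(axis=0)
    return float(np.mean((spectra - mean_spectrum) ** 2))


rng = np.random.default_rng(0)
pattern = np.tile(rng.random((8, 8)), (4, 4))  # perfectly stationary 32x32 map
ramp = np.linspace(0.0, 1.0, 32)[None, :]      # left-to-right lighting gradient
print(fourier_stationarity_loss(pattern, 8))         # close to 0
print(fourier_stationarity_loss(pattern + ramp, 8))  # clearly larger
```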

8.4 Expressive rendering

8.4.1 Light Manipulation for an Expressive Depiction of Shape and Depth

Participants: Nolan Mestres, Romain Vergne, Joëlle Thollot.

Nolan Mestres defended his PhD thesis 11, in which he investigated how visual artists control lighting to influence our perception of the physical properties of a scene. He found a particular case study in the hand-painted panorama maps of Pierre Novat (1928-2007), who excelled at depicting complex mountainous landscapes. He studied Novat’s pictorial style and how Novat freed himself from depicting mountains realistically, in favour of effectively conveying the information needed to understand the terrain. This work has been published in Cartographic Perspectives 3.

In his PhD thesis, Nolan Mestres presents two technical contributions. The first, Local Light Alignment, enhances shape depiction at multiple scales by controlling the shading intensity locally at the surface. The second focuses on cast shadows: it computes geometry-dependent light directions that ensure cast shadows are placed correctly. He also proposes multi-scale cast shadows to reintroduce depth and shape cues lost in already-shadowed areas.

Based on a careful study of Novat’s panoramas, we propose a shading method and controlled cast shadows to produce legible images.

8.4.2 Micmap transfer project

Participants: Nolan Mestres, Arthur Novat, Romain Vergne, Joëlle Thollot.

Following the work done during Nolan Mestres's PhD, we have decided to start an industrial transfer project, with the goal of creating a small company specialized in the creation of mountain panorama maps. We are currently funded by the Linksium SATT and Grenoble INP for the maturation step.

In this context, Nolan Mestres will start a 15-month postdoc in January 2023.

8.5 Synthesizing new materials

8.5.1 Computational Design of Laser-Cut Bending-Active Structures

Participants: Emmanuel Rodriguez, Georges-Pierre Bonneau, Stefanie Hahmann, Mélina Skouras.

We propose a method to automatically design bending-active structures, made of wood, whose silhouettes at equilibrium match desired target curves. Our approach is based on a parametric pattern that is regularly laser-cut on the structure and that allows us to locally modulate the bending stiffness of the material. To make the problem tractable, we rely on a two-scale approach. We first compute the mapping between the average mechanical properties of periodically laser-cut samples of MDF wood, treated here as metamaterials, and the stiffness parameters of a reduced 2D model; then, given an input target shape, we automatically select the parameters of this reduced model that give us the desired silhouette profile. We validate our method both numerically and experimentally by fabricating a number of full-scale structures with varied target shapes. This paper has been published in the Elsevier journal CAD 4 and presented at the conference SPM'2022, where it received the Best Paper Award.
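The parameter-selection step of the two-scale approach can be illustrated, under strong simplifying assumptions, with an Euler-Bernoulli beam, where curvature equals bending moment over bending stiffness, κ = M / B. If the bending moments along the target shape are assumed known (in the real structure they depend on the equilibrium shape itself, so the actual method must account for this coupling), each segment needs a stiffness B = M / κ. A tabulated homogenization map from the laser-cut pattern parameter to effective stiffness can then be inverted by interpolation. The table values and function names below are hypothetical.

```python
from bisect import bisect_left


def required_stiffness(moments, target_curvatures):
    """Per-segment bending stiffness B = M / kappa (Euler-Bernoulli beam)."""
    return [m / k for m, k in zip(moments, target_curvatures)]


def pattern_parameter_for(stiffness_table, target_b):
    """Invert a tabulated map pattern_param -> effective bending stiffness.

    stiffness_table: list of (param, stiffness) sorted by param, with the
    stiffness strictly decreasing (denser cuts make the material softer).
    Returns the param that yields target_b, by piecewise-linear interpolation.
    """
    params = [p for p, _ in stiffness_table]
    stiffs = [s for _, s in stiffness_table]
    if not stiffs[-1] <= target_b <= stiffs[0]:
        raise ValueError("target stiffness outside the achievable range")
    # bisect needs increasing keys, so search on the negated stiffness values.
    k = bisect_left([-s for s in stiffs], -target_b)
    if k == 0:
        return params[0]
    s0, s1 = stiffs[k - 1], stiffs[k]
    p0, p1 = params[k - 1], params[k]
    t = (s0 - target_b) / (s0 - s1)
    return p0 + t * (p1 - p0)


# Hypothetical homogenized samples: (cut density, stiffness in N*m^2).
table = [(0.0, 10.0), (0.5, 6.0), (1.0, 2.0)]
needed = required_stiffness([4.0, 6.0], [0.5, 1.0])          # [8.0, 6.0]
params = [pattern_parameter_for(table, b) for b in needed]   # [0.25, 0.5]
print(needed, params)
```

Segments that must bend more (higher target curvature for a given moment) receive a lower stiffness and therefore a denser cut pattern; stiffness values outside the tabulated range are rejected rather than extrapolated.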

This work is part of the FET Open European Project ADAM2.

Design of bending-active structures.

9 Bilateral contracts and grants with industry

9.1 Bilateral contracts with industry

Participants: Nicolas Holzschuch, Nicolas Guichard, Joëlle Thollot, Romain Vergne, Amine Farhat.

- We have a contract with KDAB France, connected with the PhD thesis of Nicolas Guichard (CIFRE).

- We have a contract with LeftAngle, connected with the PhD thesis of Amine Farhat (CIFRE).

10 Partnerships and cooperations

10.1 International research visitors

10.1.1 Visits to international teams

Research stays abroad

Georges-Pierre Bonneau

- Visited institution: Universität Leipzig (Germany). Dates: 01/09/2022-31/01/2023. Mobility program/type of mobility: sabbatical, funded by Délégation INRIA.

- Visited institution: Freie Universität Berlin (Germany). Dates: 01/02/2023-30/06/2023. Mobility program/type of mobility: sabbatical, funded by Délégation INRIA.

10.2 European initiatives

10.2.1 H2020 projects

Georges-Pierre Bonneau is part of the FET Open European Project "ANALYSIS, DESIGN And MANUFACTURING using MICROSTRUCTURES" (ADAM2, Grant agreement ID: 862025), together with Stefanie Hahmann and Mélina Skouras from the INRIA team ANIMA. This project is devoted to the geometric design, numerical analysis and fabrication of objects made of microstructures. The INRIA team participates in the research workpackage entitled "Modeling of curved geometries using microstructures and auxetic metamaterials", and leads the workpackage "Dissemination". We published one paper within the ADAM2 project in 2022 4.

10.3 National initiatives

10.3.1 CDP: Patrimalp 2.0

Participants: Nicolas Holzschuch [contact], Romain Vergne.

The main objective and challenge of Patrimalp 2.0 is to develop a cross-disciplinary approach to gain a better knowledge of material cultural heritage, in order to ensure its sustainability, valorization and diffusion in society. Carried out by members of UGA laboratories, combining skills in human sciences, geosciences, digital engineering and material sciences, in close connection with stakeholders of heritage and cultural life, curators and restorers, Patrimalp 2.0 intends to develop a new interdisciplinary science: Cultural Heritage Science. Patrimalp is funded by the Idex Univ. Grenoble-Alpes under its “Cross-Disciplinary Program” funding; it started in January 2018, for a period of 48 months.

10.3.2 CDTools: Patrimalp

Participants: Nicolas Holzschuch [contact], Romain Vergne.

Patrimalp was extended by Univ. Grenoble-Alpes under the new funding “Cross-Disciplinary Tools”, for a period of 36 months.

The main objective and challenge of the CDTools Patrimalp is to develop a cross-disciplinary approach to gain a better knowledge of material cultural heritage, in order to ensure its sustainability, valorization and diffusion in society. Carried out by members of UGA laboratories, combining skills in human sciences, geosciences, digital engineering and material sciences, in close connection with stakeholders of heritage and cultural life, curators and restorers, the CDTools Patrimalp intends to develop a new interdisciplinary science: Cultural Heritage Science.

10.3.3 ANR: CaLiTrOp

Participants: Cyril Soler [contact].

Computing photorealistic images relies on the simulation of light transfer in a 3D scene, typically modeled using geometric primitives and a collection of reflectance properties that represent the way objects interact with light. Estimating the color of a pixel traditionally consists in integrating contributions from light paths connecting the light sources to the camera sensor at that pixel. In this ANR project, we take a transversal view, examining light transport operators from the point of view of infinite-dimensional function spaces of light fields (imagine, e.g., reflectance as an operator that transforms a distribution of incident light into a distribution of reflected light). Not only are these operators all linear in these spaces, but they are also very sparse. As a consequence, the sub-spaces of light distributions that are actually relevant during the computation of a solution always boil down to a low-dimensional manifold embedded in the full space of light distributions.
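Linearity is what makes the operator view useful. As a minimal sketch, we assume a scene discretized into a few patches so that the transport operator T becomes a small matrix, a drastic simplification of the infinite-dimensional setting studied in the project. The rendering equation then reads L = E + T L, and its solution expands into the Neumann series L = E + T E + T² E + ..., with one term per light bounce. The function names are hypothetical.

```python
def apply_operator(transport, radiance):
    """One application of the discretized transport operator (matrix-vector)."""
    return [sum(t * x for t, x in zip(row, radiance)) for row in transport]


def neumann_solution(transport, emission, n_bounces=60):
    """Truncated Neumann series for L = E + T L: sum of T^k E for k <= n_bounces.

    Converges when the spectral radius of T is below 1, as for scenes
    whose surfaces absorb part of the incoming light.
    """
    total = list(emission)
    bounce = list(emission)
    for _ in range(n_bounces):
        bounce = apply_operator(transport, bounce)  # light after one more bounce
        total = [l + b for l, b in zip(total, bounce)]
    return total


# Two facing patches, each reflecting half of what the other sends;
# only the first one emits. The exact solution of (I - T) L = E is (4/3, 2/3).
print(neumann_solution([[0.0, 0.5], [0.5, 0.0]], [1.0, 0.0]))
```

Each iteration only needs applications of T, never T itself as an explicit inverse, which is why sparse or low-dimensional representations of the operator translate directly into cheaper lighting simulation.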

Studying the structure of high-dimensional objects from a low-dimensional set of observables is a problem that is becoming ubiquitous: compressive sensing, Gaussian processes, harmonic analysis and differential analysis are typical examples of mathematical tools that will be highly relevant to the study of light transport operators.

The expected results of the fundamental-research project CaLiTrOp are a theoretical understanding of the dimensionality and structure of light transport operators, leading to new efficient lighting simulation methods and to efficient approximations of light transport, with applications to real-time global illumination for video games.

11 Dissemination

Participants: Nicolas Holzschuch, Cyril Soler, Georges-Pierre Bonneau, Romain Vergne, Fabrice Neyret, Joëlle Thollot.

11.1 Promoting scientific activities

11.1.1 Scientific events: organisation

Member of the organizing committees

- Nicolas Holzschuch is a member of the Eurographics Symposium on Rendering Steering Committee.

11.1.2 Scientific events: selection

Chair of conference program committees

- Georges-Pierre Bonneau was Paper co-Chair of SPM'2022

- Georges-Pierre Bonneau has been nominated Paper co-Chair of SMI'2023

Member of the conference program committees

- Nicolas Holzschuch was a member of the program committee for: Siggraph 2022 Technical Papers, Siggraph 2022 general program.

Reviewer

- Nicolas Holzschuch was a reviewer for Siggraph Asia 2022 and for the Eurographics 2022 State-of-the-art program.

- Cyril Soler was a reviewer for Eurographics 2022, Siggraph 2022, and Siggraph Asia 2022.

- Joëlle Thollot was a reviewer for Eurographics 2022.

11.1.3 Journal

Member of the editorial boards

- Georges-Pierre Bonneau is an Editorial Board member of the Elsevier journal Computer-Aided Design.

Reviewer - reviewing activities

- Cyril Soler was a reviewer for Transactions on Visualization and Computer Graphics (TVCG), and ACM TOG.

11.1.4 Research administration

- Nicolas Holzschuch is an elected member of the Conseil National de l'Enseignement Supérieur et de la Recherche (CNESER).

- Nicolas Holzschuch is co-responsible (with Anne-Marie Kermarrec of EPFL) for the Inria International Lab (IIL) “Inria-EPFL”.

- Nicolas Holzschuch and Georges-Pierre Bonneau are members of the Habilitation committee of the École Doctorale MSTII of Univ. Grenoble Alpes.

- Cyril Soler acts as a scientific expert for the funding of scientific events at INRIA Grenoble.

11.2 Teaching - Supervision - Juries

11.2.1 Teaching

Joëlle Thollot and Georges-Pierre Bonneau are both full Professors of Computer Science. Romain Vergne is an Associate Professor of Computer Science. They teach general computer science topics at basic and intermediate levels, and advanced courses in computer graphics and visualization at the master level. Nicolas Holzschuch teaches advanced courses in computer graphics at the master level.

Joëlle Thollot is in charge of the Erasmus+ program ASICIAO, an EU program that supports six schools from Senegal and Togo in their pursuit of autonomy by helping them develop their own quality-improvement methods in order to obtain CTI accreditation.

- Licence: Joëlle Thollot, Théorie des langages (Language theory), 45h, L3, ENSIMAG, France.

- Licence: Joëlle Thollot, Module d'accompagnement professionnel (Professional guidance module), 10h, L3, ENSIMAG, France.

- Licence: Joëlle Thollot, Innovation, 10h, L3, ENSIMAG, France.

- Master: Joëlle Thollot, English courses using theater, 18h, M1, ENSIMAG, France.

- Master: Joëlle Thollot, Analyse et conception objet de logiciels (Object-oriented software analysis and design), 24h, M1, ENSIMAG, France.

- Licence: Romain Vergne, Introduction to algorithms, 64h, L1, UGA, France.

- Licence: Romain Vergne, WebGL, 29h, L3, IUT2 Grenoble, France.

- Licence: Romain Vergne, Programmation, 68h, L1, UGA, France.

- Master: Romain Vergne, Image synthesis, 27h, M1, UGA, France.

- Master: Romain Vergne, 3D graphics, 15h, M1, UGA, France.

- Master: Nicolas Holzschuch, Computer Graphics II, 18h, M2 MoSIG, France.

- Master: Nicolas Holzschuch, Synthèse d’Images et Animation (Image Synthesis and Animation), 32h, M2, ENSIMAG, France.

- Master: Georges-Pierre Bonneau, Image Synthesis, 23h, M1, Polytech-Grenoble, France.

- Master: Georges-Pierre Bonneau, Data Visualization, 40h, M2, Polytech-Grenoble, France.

- Master: Georges-Pierre Bonneau, Digital Geometry, 23h, M1, UGA, France.

- Master: Georges-Pierre Bonneau, Information Visualization, 22h, Mastere, ENSIMAG, France.

- Master: Georges-Pierre Bonneau, Scientific Visualization, M2, ENSIMAG, France.

- Master: Georges-Pierre Bonneau, Computer Graphics II, 18h, M2 MoSIG, UGA, France.

11.2.2 Supervision

- Nicolas Holzschuch supervised the PhD of Morgane Gérardin, Sunrise Wang, Nicolas Guichard and Anita Granizo-Hidalgo.

- Fabrice Neyret supervises the PhD of Antoine Richermoz.

- Cyril Soler supervises the PhD of Ronak Molazem.

- Romain Vergne and Joëlle Thollot co-supervise the PhD of Nolan Mestres and Amine Farhat.

- Georges-Pierre Bonneau co-supervises the PhD of Emmanuel Rodriguez (project-team ANIMA).

11.2.3 Juries

- Georges-Pierre Bonneau was a reviewer for the PhD defense of Jeremie Schertzer (Institut Polytechnique de Paris).

- Nicolas Holzschuch was reviewer and president of the jury for the PhD defense of Siddhant Prakash (Université de Nice).

- Cyril Soler was a reviewer in the jury of Pierre Mezières's PhD defense (Université Paul Sabatier, Toulouse).

- Joëlle Thollot was a reviewer in the jury of Pauline Olivier's PhD defense (École polytechnique, Saclay).

11.3 Popularization

11.3.1 Articles and contents

- Fabrice Neyret maintains the blog shadertoy-Unofficial and various shader examples on the Shadertoy site, to popularize GPU technologies and to disseminate academic models within the computer graphics, computer science, applied math and physics fields. About 24k pages viewed and 11k unique visitors (93% from outside France) in 2021.

- Fabrice Neyret maintains the blog desmosGraph-Unofficial to popularize the use of the interactive grapher DesmosGraph for research, communication and pedagogy. About 10k pages viewed and 6k unique visitors (99% from outside France) in 2021.

- Fabrice Neyret maintains the blog chiffres-et-paradoxes (in French) to popularize common errors, misunderstandings and paradoxes about statistics and numerical reasoning. About 9k pages viewed and 4.5k unique visitors since then on the blog (15% from outside France, although the blog is in French), plus viewers via the Facebook and Twitter pages.

11.3.2 Interventions

- Fabrice Neyret gave two talks at UIAD (inter-age Open University of the Grenoble Area): "Les sciences dans les effets spéciaux et les jeux vidéo" (Science in special effects and video games) and "Manipulation par l'image: doit-on encore croire ce que l'on voit ?" (Manipulation through images: should we still believe what we see?).

12 Scientific production

12.2 Publications of the year

International journals

International peer-reviewed conferences

Doctoral dissertations and habilitation theses

Other scientific publications