2024 Activity report Project-Team RAINBOW

RNSR: 201822637G

- Research center: Inria Centre at Rennes University

- In partnership with: CNRS, Institut national des sciences appliquées de Rennes, Université de Rennes

- Team name: Sensor-based Robotics and Human Interaction

- In collaboration with: Institut de recherche en informatique et systèmes aléatoires (IRISA)

- Domain: Perception, Cognition and Interaction

- Theme: Robotics and Smart environments

Keywords

Computer Science and Digital Science

- A5.1.2. Evaluation of interactive systems

- A5.1.3. Haptic interfaces

- A5.1.7. Multimodal interfaces

- A5.1.9. User and perceptual studies

- A5.4.4. 3D and spatio-temporal reconstruction

- A5.4.6. Object localization

- A5.4.7. Visual servoing

- A5.6. Virtual reality, augmented reality

- A5.6.1. Virtual reality

- A5.6.2. Augmented reality

- A5.6.3. Avatar simulation and embodiment

- A5.6.4. Multisensory feedback and interfaces

- A5.9.2. Estimation, modeling

- A5.10.1. Design

- A5.10.2. Perception

- A5.10.3. Planning

- A5.10.4. Robot control

- A5.10.5. Robot interaction (with the environment, humans, other robots)

- A5.10.6. Swarm robotics

- A5.10.7. Learning

- A6.4.1. Deterministic control

- A6.4.3. Observability and Controllability

- A6.4.4. Stability and Stabilization

- A6.4.5. Control of distributed parameter systems

- A6.4.6. Optimal control

- A8.2.3. Calculus of variations

- A9.2. Machine learning

- A9.5. Robotics

- A9.7. AI algorithmics

- A9.9. Distributed AI, Multi-agent

Other Research Topics and Application Domains

- B2.5. Handicap and personal assistances

- B2.5.1. Sensorimotor disabilities

- B2.5.2. Cognitive disabilities

- B2.5.3. Assistance for elderly

- B5.1. Factory of the future

- B5.6. Robotic systems

- B8.1.2. Sensor networks for smart buildings

- B8.4. Security and personal assistance

1 Team members, visitors, external collaborators

Research Scientists

- Paolo Robuffo Giordano [Team leader, CNRS, Senior Researcher]

- François Chaumette [INRIA, Senior Researcher, HDR]

- Alexandre Krupa [INRIA, Senior Researcher, HDR]

- Claudio Pacchierotti [CNRS, Researcher, HDR]

- Esteban Restrepo [CNRS, Researcher, from Nov 2024]

- Marco Tognon [INRIA, ISFP]

Faculty Members

- Marie Babel [INSA RENNES, Professor]

- Vincent Drevelle [Univ. Rennes, Associate Professor]

- Maud Marchal [INSA RENNES, Professor]

- Éric Marchand [Univ. Rennes, Professor]

Post-Doctoral Fellow

- Tommaso Belvedere [CNRS, Post-Doctoral Fellow, from Jun 2024]

PhD Students

- Jose Eduardo Aguilar Segovia [INRIA]

- Lorenzo Balandi [INRIA]

- Maxime Bernard [CNRS]

- Szymon Bielenin [INRIA, from Oct 2024]

- Antoine Bout [INSA RENNES]

- Pierre-Antoine Cabaret [INRIA]

- Nicola De Carli [CNRS, until Apr 2024]

- Jessé De Oliveira Santana Alves [Univ. Rennes, from Nov 2024]

- Mael Gallois [INRIA, from Sep 2024]

- Glenn Kerbiriou [INTERDIGITAL, until Apr 2024]

- Ines Lacote [INRIA, until May 2024]

- Theo Le Terrier [INSA RENNES]

- Emilie Leblong [POLE ST HELIER]

- Maxime Manzano [INSA RENNES]

- Antonio Marino [Univ. Rennes]

- Paul Mefflet [Haption, CIFRE, from Feb 2024]

- Phillip Maximilian Mehl [INRIA, from Feb 2024]

- Lendy Mulot [INSA RENNES]

- Thibault Noel [INRIA, from Oct 2024]

- Thibault Noel [CREATIVE, until Sep 2024]

- Erwan Normand [Univ. Rennes]

- Mandela Ouafo Fonkoua [INRIA]

- Jim Pavan [INSA RENNES, from Oct 2024]

- Mattia Piras [INRIA]

- Lluis Prior Sancho [INRIA, from Nov 2024]

- Leon Raphalen [CNRS]

- Sara Rossi [INSA RENNES, from Feb 2024]

- Lev Smolentsev [INRIA, until Mar 2024]

- Ali Srour [CNRS, until Sep 2024]

- John Thomas [INRIA, until Apr 2024]

Technical Staff

- Riccardo Belletti [INRIA, from Apr 2024 until Jul 2024]

- Tommaso Belvedere [CNRS, from Mar 2024 until May 2024]

- Alessandro Colotti [INRIA, Engineer]

- Gianluca Corsini [CNRS, Engineer]

- Nicola De Carli [CNRS, Engineer, from May 2024]

- Louise Devigne [INSA RENNES, Engineer, from Sep 2024]

- Louise Devigne [INRIA, Engineer, until Aug 2024]

- Samuel Felton [INRIA, Engineer]

- Marco Ferro [CNRS, Engineer]

- Guillaume Gicquel [INSA RENNES, Engineer]

- Fabien Grzeskowiak [INSA RENNES, Engineer]

- Glenn Kerbiriou [INSA RENNES, Engineer, from Apr 2024]

- Romain Lagneau [INRIA, Engineer]

- Paul Mefflet [CNRS, Engineer, until Feb 2024]

- François Pasteau [INSA RENNES]

- Esteban Restrepo [CNRS, Engineer, until Oct 2024]

- Olivier Roussel [INRIA, Engineer]

- Fabien Spindler [INRIA, Engineer]

- Sebastien Thomas [INRIA, Engineer]

- Thomas Voisin [INRIA]

Interns and Apprentices

- Riccardo Belletti [INRIA, Intern, until Feb 2024]

- Martin Bichon Reynaud [ENS RENNES, Intern, until Jan 2024]

- Valeria Braglia [INRIA, Intern, from Feb 2024 until Aug 2024]

- Emanuele Buzzurro [INRIA, Intern, from Feb 2024 until Jul 2024]

- Giulio Franchi [INRIA, Intern, from Nov 2024]

- Tom Goalard [ENS Rennes, from Oct 2024]

- Nicolas Martinet [CNRS, Intern, until May 2024]

- Ilaria Pasini [INRIA, Intern, from Dec 2024]

- Francesca Porro [INRIA, Intern, from Sep 2024]

Administrative Assistant

- Hélène de La Ruée [Univ. Rennes]

Visiting Scientists

- Massimiliano Bertoni [UNIV PADOUE, until Mar 2024]

- Marco Cognetti [UNIV TOULOUSE III, from Jun 2024 until Jul 2024]

- Alessia Ivani [UNIV PISE, from Jun 2024 until Sep 2024]

- Matteo Lanzarini [UNIV BOLOGNE, from Feb 2024 until Jul 2024]

- Julien Mellet [UNIV NAPLES, from Apr 2024 until May 2024]

- Hiroki Ota [NAIST, from Oct 2024]

- Francesca Pagano [UNIV NAPLES, from Jun 2024 until Jun 2024]

- Francesca Pagano [UNIV NAPLES, until Feb 2024]

- Andrea Pupa [Université de Modène, from Jun 2024 until Jun 2024]

- Danilo Troisi [UNIV PISE, until Apr 2024]

2 Overall objectives

The long-term vision of the Rainbow team is to develop the next generation of sensor-based robots able to navigate and/or interact in complex unstructured environments together with human users. Clearly, the word “together” can have very different meanings depending on the particular context: for example, it can refer to mere co-existence (robots and humans share some space while performing independent tasks), human-awareness (the robots need to be aware of the human state and intentions for properly adjusting their actions), or actual cooperation (robots and humans perform some shared task and need to coordinate their actions).

One could perhaps argue that the two goals of high robot autonomy and human involvement are somehow in conflict, since higher robot autonomy should imply less (or no) human intervention. However, we believe that our general research direction is well motivated for the following reasons:

- despite the many advancements in robot autonomy, complex and high-level cognitive-based decisions are still out of reach. In most applications involving tasks in unstructured environments, uncertainty, and interaction with the physical world, human assistance is still necessary, and will most probably remain so for the next decades. On the other hand, robots are extremely capable of autonomously executing specific and repetitive tasks, with great speed and precision, and of operating in dangerous/remote environments, while humans possess unmatched cognitive capabilities and world awareness which allow them to make complex and quick decisions;

- the cooperation between humans and robots is often an implicit constraint of the robotic task itself. Consider for instance the case of assistive robots supporting injured patients during their physical recovery, or human augmentation devices. It is then important to study proper ways of implementing this cooperation;

- finally, safety regulations can require the presence at all times of a person in charge of supervising and, if necessary, taking direct control of the robotic workers. For example, this is a common requirement in all applications involving tasks in public spaces, like autonomous vehicles in crowded spaces, or even UAVs when flying in civil airspace such as over urban or populated areas.

Within this general picture, the Rainbow activities will be particularly focused on the case of (shared) cooperation between robots and humans by pursuing the following vision: on the one hand, empower robots with a large degree of autonomy for allowing them to effectively operate in non-trivial environments (e.g., outside completely defined factory settings); on the other hand, include human users in the loop for having them in (partial and bilateral) control of some aspects of the overall robot behavior. We plan to address these challenges from the methodological, algorithmic and application-oriented perspectives. The Rainbow activities will be articulated along three supporting axes (Optimal and Uncertainty-Aware Sensing; Advanced Sensor-based Control; Haptics for Robotics Applications), which are meant to develop methods, algorithms and technologies for realizing the central theme of Shared Control of Complex Robotic Systems.

3 Research program

3.1 Main Vision

The vision of Rainbow (and its foreseen applications) raises several general scientific challenges:

- a high level of autonomy for complex robots in complex (unstructured) environments;

- forward interfaces for letting an operator give high-level commands to the robot;

- backward interfaces for informing the operator about the robot “status”;

- user studies for assessing the best interfacing, which will clearly depend on the particular task/situation.

Within Rainbow we plan to tackle these challenges at different levels of depth:

- the methodological and algorithmic side of the sought human-robot interaction will be the main focus of Rainbow. Here, we will be interested in advancing the state of the art in sensor-based online planning, control and manipulation for mobile/fixed robots. For instance, while most classical control approaches (especially sensor-based ones) have been essentially reactive, we believe that less myopic strategies based on online/reactive trajectory optimization will be needed for the future Rainbow activities. The core ideas of Model-Predictive Control approaches (also known as Receding Horizon) or, in general, numerical optimal control methods will play a role in the Rainbow activities, for allowing the robots to reason/plan over some future time window and better cope with constraints. We will also consider extending classical sensor-based motion control/manipulation techniques to more realistic scenarios, such as deformable/flexible objects (“Advanced Sensor-based Control” axis). Finally, it will also be important to devote research efforts to the field of Optimal Sensing, in the sense of generating (again) trajectories that can optimize the state estimation problem in the presence of scarce sensory inputs and/or non-negligible measurement and process noises, which is especially relevant for mobile robots (“Optimal and Uncertainty-Aware Sensing” axis). We also aim at addressing the case of coordination between a single human user and multiple robots where, clearly, autonomy plays an even more crucial role (no human can control multiple robots at once, thus a high degree of autonomy will be required by the robot group for executing the human commands);

- the interfacing side will also be a focus of the Rainbow activities. As explained above, we will be interested in both the forward (human → robot) and backward (robot → human) interfaces. The forward interface will be mainly addressed from the algorithmic point of view, i.e., how to map the few degrees of freedom available to a human operator (usually in the order of 3–4) into complex commands for the controlled robot(s). This mapping will typically be mediated by an “AutoPilot” onboard the robot(s) for autonomously assessing if the commands are feasible and, if not, how to least modify them (“Advanced Sensor-based Control” axis).

  The backward interface will, instead, mainly consist of visual/haptic feedback for the operator. Here, we aim at exploiting our expertise in using force cues for informing an operator about the status of the remote robot(s). However, the sole use of classical grounded force feedback devices (e.g., the typical force-feedback joysticks) will not be enough due to the different kinds of information that will have to be provided to the operator. In this context, wearable haptic interfaces, which have recently attracted considerable interest, are a promising option and will be investigated in depth (these include, e.g., devices able to provide vibro-tactile information to the fingertips, wrist, or other parts of the body). The main challenges in these activities will be the mechanical design (and construction) of suitable wearable interfaces for the tasks at hand, and the generation of force cues for the operator: the force cues will be a (complex) function of the robot state, therefore motivating research in algorithms for mapping the robot state into a few variables (the force cues) (“Haptics for Robotics Applications” axis);

- the evaluation side will assess the proposed interfaces through user studies or acceptability studies with human subjects. Although this activity will not be a main focus of Rainbow (complex user studies are beyond the scope of our core expertise), we will nevertheless devote some effort to reaching a reasonable level of user evaluation by applying standard statistical analysis based on psychophysical procedures (e.g., randomized tests and ANOVA statistical analysis). This will be particularly true for the activities involving the use of smart wheelchairs, which are intended to be used by human users and operate inside human crowds. Therefore, we will be interested in gaining some level of understanding of how semi-autonomous robots (a wheelchair in this example) can predict the human intention, and how humans can react to a semi-autonomous mobile robot.

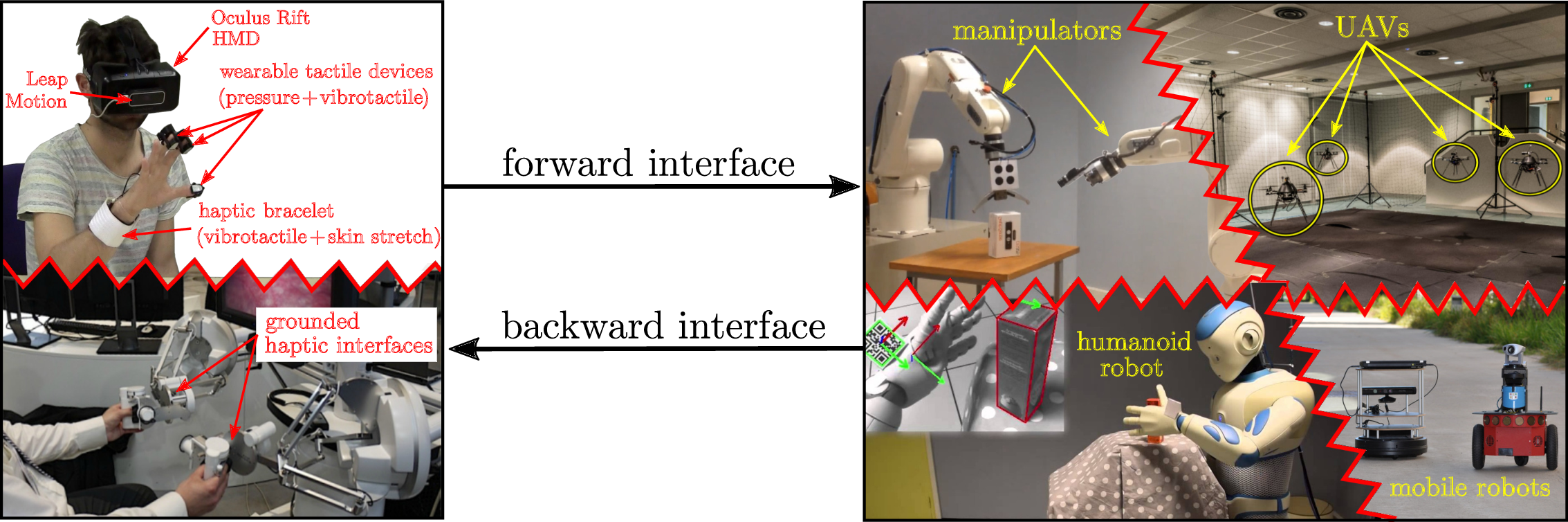

Figure 1: An illustration of the prototypical activities foreseen in Rainbow in which a human operator is in partial (and high-level) control of single/multiple complex robots performing semi-autonomous tasks.

Figure 1 illustrates the prototypical activities foreseen in Rainbow. On the right-hand side, complex robots (dual manipulators, humanoid, single/multiple mobile robots) need to perform some task with a high degree of autonomy. On the left-hand side, a human operator gives some high-level commands and receives visual/haptic feedback aimed at informing her/him as well as possible of the robot status. Again, the main challenges that Rainbow will tackle to address these issues are (in order of relevance): methods and algorithms, mostly based on first-principle modeling and, when possible, on numerical methods for online/reactive trajectory generation, for endowing the robots with high autonomy; the design and implementation of visual/haptic cues for interfacing the human operator with the robots, with special attention to novel combinations of grounded/ungrounded (wearable) haptic devices; user and acceptability studies.

3.2 Main Components

We summarize hereafter the four research axes of Rainbow.

3.2.1 Optimal and Uncertainty-Aware Sensing

Future robots will need to have a large degree of autonomy for, e.g., interpreting the sensory data for accurate estimation of the robot and world state (which can possibly include the human users), and for devising motion plans able to take into account many constraints (actuation, sensor limitations, environment), including also the state estimation accuracy (i.e., how well the robot/environment state can be reconstructed from the sensed data). In this context, we will be particularly interested in:

- devising trajectory optimization strategies able to maximize some norm of the information gain gathered along the trajectory (and with the available sensors). This can be seen as an instance of Active Sensing, with the main focus on online/reactive trajectory optimization strategies able to take into account several requirements/constraints (sensing/actuation limitations, noise characteristics). We will also be interested in the coupling between optimal sensing and concurrent execution of additional tasks (e.g., navigation, manipulation);

- formal methods for guaranteeing the accuracy of localization/state estimation in mobile robotics, mainly exploiting tools from interval analysis. The interest of these methods is their ability to provide possibly conservative but guaranteed bounds on the best accuracy one can obtain with a given robot/sensor pair; they can thus be used for planning purposes or for system design (choice of the best sensors for a given robot/task);

- localization/tracking of objects with poor/unknown or deformable shape, which will be of paramount importance for allowing robots to estimate the state of “complex objects” (e.g., human tissues in medical robotics, elastic materials in manipulation) for controlling the robot pose/interaction with the objects of interest.
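The idea of choosing motions that maximize "some norm of the information gain" can be illustrated with a deliberately small sketch. Everything below is an assumption made for illustration and is not taken from the Rainbow work: a 2-D point target observed through range-only measurements with Gaussian noise, a greedy one-step choice among hypothetical candidate waypoints, and the smallest eigenvalue of the Fisher information matrix (E-optimality) as the information norm.

```python
import math


def fisher_information(sensor_positions, target, sigma=1.0):
    """Return (ixx, ixy, iyy), the 2x2 Fisher information of the target position.

    Illustrative model: each range measurement taken from position s adds
    (1 / sigma^2) * u u^T, where u is the unit vector from s to the target.
    """
    ixx = ixy = iyy = 0.0
    for sx, sy in sensor_positions:
        dx, dy = target[0] - sx, target[1] - sy
        dist = math.hypot(dx, dy)
        if dist == 0.0:
            continue  # direction is undefined when the sensor sits on the target
        ux, uy = dx / dist, dy / dist
        ixx += ux * ux / sigma ** 2
        ixy += ux * uy / sigma ** 2
        iyy += uy * uy / sigma ** 2
    return ixx, ixy, iyy


def smallest_eigenvalue(info):
    """Smallest eigenvalue of a symmetric 2x2 matrix given as (ixx, ixy, iyy)."""
    ixx, ixy, iyy = info
    trace = ixx + iyy
    det = ixx * iyy - ixy * ixy
    disc = max(trace * trace - 4.0 * det, 0.0)  # guard against round-off
    return 0.5 * (trace - math.sqrt(disc))


def best_next_position(visited, candidates, target):
    """Greedy step: pick the candidate that maximizes the smallest eigenvalue."""
    return max(
        candidates,
        key=lambda c: smallest_eigenvalue(fisher_information(visited + [c], target)),
    )
```

With a first measurement taken at `(0, 0)`, a target estimate at `(5, 0)` and candidates `(1, 0)`, `(0, 2)` and `(2, 2)`, this greedy rule selects `(2, 2)`: moving straight towards the target adds no information in the unobserved direction, whereas a lateral displacement does. An actual active sensing scheme would optimize over whole trajectories and account for the sensing/actuation constraints mentioned above.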

3.2.2 Advanced Sensor-based Control

One of the main competences of the previous Lagadic team has been, generally speaking, the topic of sensor-based control, i.e., how to exploit (typically onboard) sensors for controlling the motion of fixed/ground robots. The main emphasis has been on devising ways to directly couple the robot motion with the sensor outputs in order to invert this mapping for driving the robots towards a configuration specified as a desired sensor reading (thus, directly in sensor space). This general idea has been applied to very different contexts: mainly standard vision (hence the term Visual Servoing), but also audio, ultrasound imaging, and RGB-D.
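As a reminder of what "inverting the mapping" between robot motion and sensor outputs means, the classical formulation found in the visual servoing literature can be written as follows. The symbols are introduced here for illustration and do not appear in this report: $\mathbf{s}$ is the vector of current sensor features, $\mathbf{s}^*$ its (constant) desired value, $\mathbf{v}$ the sensor velocity, $\mathbf{L_e}$ the interaction matrix, $\widehat{\mathbf{L_e}}^{+}$ the pseudo-inverse of an estimate of it, and $\lambda > 0$ a gain.

```latex
\begin{aligned}
\mathbf{e} &= \mathbf{s} - \mathbf{s}^*, \\
\dot{\mathbf{e}} &= \mathbf{L_e}\,\mathbf{v}, \\
\mathbf{v} &= -\lambda\,\widehat{\mathbf{L_e}}^{+}\,\mathbf{e}.
\end{aligned}
```

When the estimate is accurate enough that $\mathbf{L_e}\,\widehat{\mathbf{L_e}}^{+}$ is close to the identity, the closed loop is approximately $\dot{\mathbf{e}} = -\lambda\,\mathbf{e}$, i.e., the sensor-space error decreases exponentially.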

The use of sensors for controlling the robot motion will clearly remain a central topic of the Rainbow team, since (especially onboard) sensing is a main characteristic of any future robotics application (which should typically operate in unstructured environments, and thus mainly rely on its own ability to sense the world). We then naturally aim at making the best of the previous Lagadic experience in sensor-based control to propose new advanced ways of exploiting sensed data for, roughly speaking, controlling the motion of a robot. In this respect, we plan to work on the following topics:

- “direct/dense methods”, which try to directly exploit the raw sensory data in computing the control law for positioning/navigation tasks. The advantage of these methods is the little need for data pre-processing, which can minimize feature extraction errors and, in general, improve the overall robustness/accuracy (since all the available data is used by the motion controller);

- sensor-based interaction with objects of unknown/deformable shapes, for gaining the ability to manipulate, e.g., flexible objects from the acquired sensed data (e.g., controlling online a needle being inserted in a flexible tissue);

- sensor-based model predictive control, by developing online/reactive trajectory optimization methods able to plan feasible trajectories for robots subject to sensing/actuation constraints, with the possibility of using (onboard) sensing for continuously replanning (over some future time horizon) the optimal trajectory. These methods will play an important role when dealing with complex robots affected by complex sensing/actuation constraints, for which purely reactive strategies (as in most of the previous Lagadic works) are not effective. Furthermore, the coupling with the aforementioned optimal sensing will also be considered;

- multi-robot decentralized estimation and control, with the aim of devising, again, sensor-based strategies for groups of multiple robots needing to maintain a formation or perform navigation/manipulation tasks. Here, the challenges come from the need to devise “simple” decentralized and scalable control strategies in the presence of complex sensing constraints (e.g., when using onboard cameras, limited field of view, occlusions). The need to locally estimate global quantities (e.g., common frame of reference, global property of the formation such as connectivity or rigidity) will also be a line of active research.
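The receding-horizon principle behind the model predictive control topic above (plan over a future time window, apply only the first input, then replan from the new state) can be sketched on a toy problem. The model, cost weights and exhaustive search below are illustrative assumptions and are not the methods developed by the team: a 1-D single integrator with a bounded input, and a brute-force search over a few discretized input levels standing in for a numerical optimal control solver.

```python
from itertools import product


def first_input_of_best_plan(x, target, horizon=3, dt=0.5, u_max=1.0):
    """Search all input sequences over the horizon and return the first input
    of the cheapest one.

    Toy model (assumption): x_next = x + dt * u, with |u| <= u_max enforced by
    only considering admissible input levels.
    """
    levels = [-u_max, -0.5 * u_max, 0.0, 0.5 * u_max, u_max]
    best_cost, best_first = float("inf"), 0.0
    for plan in product(levels, repeat=horizon):
        x_pred, cost = x, 0.0
        for u in plan:
            x_pred += dt * u  # predict the next state with the model
            cost += (x_pred - target) ** 2 + 0.01 * u ** 2  # error + effort
        if cost < best_cost:
            best_cost, best_first = cost, plan[0]
    return best_first


def run_receding_horizon(x0=0.0, target=2.0, steps=12, dt=0.5):
    """Closed loop: replan at every step and apply only the first input."""
    x, states, inputs = x0, [x0], []
    for _ in range(steps):
        u = first_input_of_best_plan(x, target, dt=dt)
        x += dt * u
        states.append(x)
        inputs.append(u)
    return states, inputs
```

Calling `run_receding_horizon()` drives the state from 0 to the target 2 while never exceeding the input bound. In a sensor-based setting, the state used for replanning at each step would come from (onboard) measurements rather than from the model itself.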

3.2.3 Haptics for Robotics Applications

In the envisaged shared cooperation between human users and robots, the typical sensory channel (besides vision) exploited to inform the human users is most often the force/kinesthetic one (in general, the sense of touch and of applied forces to the human hand or limbs). Therefore, a part of our activities will be devoted to studying and advancing the use of haptic cueing algorithms and interfaces for providing feedback to the users during the execution of some shared task. We will consider:

- multi-modal haptic cueing for general teleoperation applications, by studying how to convey information through the kinesthetic and cutaneous channels. Indeed, most haptic-enabled applications typically only involve kinesthetic cues, e.g., the forces/torques that can be felt by grasping a force-feedback joystick/device. These cues are very informative about, e.g., preferred/forbidden motion directions, but are also inherently limited in their resolution since the kinesthetic channel can easily become overloaded (when too much information is compressed in a single cue). In recent years, the rise of novel cutaneous devices able to, e.g., provide vibro-tactile feedback on the fingertips or skin, has proven to be a viable solution to complement the classical kinesthetic channel. We will then study how to combine these two sensory modalities for different prototypical application scenarios, e.g., 6-dof teleoperation of manipulator arms, virtual fixtures approaches, and remote manipulation of (possibly deformable) objects;

- in the particular context of medical robotics, we plan to address the problem of providing haptic cues for typical medical robotics tasks, such as semi-autonomous needle insertion and robot surgery, by exploring the use of kinesthetic feedback for rendering the mechanical properties of the tissues, and vibrotactile feedback for providing guiding information about pre-planned paths (with the aim of increasing the usability/acceptability of this technology in the medical domain);

- finally, in the context of multi-robot control we would like to explore how to use the haptic channel for providing information about the status of multiple robots executing a navigation or manipulation task. In this case, the problem is (even more) how to map (or compress) information about many robots into a few haptic cues. We plan to use specialized devices, such as actuated exoskeleton gloves able to provide cues to each fingertip of a human hand, or to resort to “compression” methods inspired by the hand postural synergies for providing coordinated cues representative of a few (but complex) motions of the multi-robot group, e.g., coordinated motions (translations/expansions/rotations) or collective grasping/transporting.

3.2.4 Shared Control of Complex Robotics Systems

This final and main research axis will exploit the methods, algorithms and technologies developed in the previous axes for realizing applications involving complex semi-autonomous robots operating in complex environments together with human users. The leitmotiv is to realize advanced shared control paradigms, which essentially aim at blending robot autonomy and user's intervention in an optimal way for exploiting the best of both worlds (robot accuracy/sensing/mobility/strength and human's cognitive capabilities). A common theme will be the issue of where to “draw the line” between robot autonomy and human intervention: obviously, there is no general answer, and any design choice will depend on the particular task at hand and/or on the technological/algorithmic possibilities of the robotic system under consideration.

A prototypical envisaged application, exploiting and combining the previous three research axes, is as follows: a complex robot (e.g., a two-arm system, a humanoid robot, a multi-UAV group) needs to operate in an environment exploiting its onboard sensors (in general, vision as the main exteroceptive one) and deal with many constraints (limited actuation, limited sensing, complex kinematics/dynamics, obstacle avoidance, interaction with difficult-to-model entities such as surrounding people, and so on). The robot must then possess a fairly high degree of autonomy for interpreting and exploiting the sensed data in order to estimate its own state and that of the environment (“Optimal and Uncertainty-Aware Sensing” axis), and for planning its motion in order to fulfil the task (e.g., navigation, manipulation) by coping with all the robot/environment constraints. Therefore, advanced control methods able to exploit the sensory data to the fullest, and able to cope online with constraints in an optimal way (by, e.g., continuously replanning and predicting over a future time horizon) will be needed (“Advanced Sensor-based Control” axis), with a possible (and interesting) coupling with the sensing part for optimizing, at the same time, the state estimation process. Finally, a human operator will typically be in charge of providing high-level commands (e.g., where to go, what to look at, what to grasp and where) that will then be autonomously executed by the robot, with possible local modifications because of the various (local) constraints. At the same time, the operator will also receive online visual-force cues informative of, in general, how well her/his commands are executed and if the robot would prefer or suggest other plans (because of the local constraints that are not of the operator's concern). This information will have to be visually and haptically rendered with an optimal combination of cues that will depend on the particular application (“Haptics for Robotics Applications” axis).
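The notion of executing an operator command "with possible local modifications" can be made concrete with a minimal sketch. It is only one possible reading, under assumptions that are not from this report: the local constraint is a single linear inequality `a . u <= b` on the commanded velocity `u`, "least modification" is measured in the Euclidean norm, and the function name is hypothetical.

```python
def least_modified_command(u_human, a, b):
    """Return the command closest to u_human (Euclidean norm) with a . u <= b.

    This is the orthogonal projection of the operator command onto a
    half-space; the single linear constraint is an illustrative assumption.
    """
    dot = sum(ai * ui for ai, ui in zip(a, u_human))
    if dot <= b:
        return list(u_human)  # command already feasible: leave it untouched
    norm_sq = sum(ai * ai for ai in a)
    if norm_sq == 0.0:
        raise ValueError("constraint 0 . u <= b with b < 0 cannot be satisfied")
    scale = (dot - b) / norm_sq
    # Remove just enough of the component along a to reach the boundary.
    return [ui - scale * ai for ai, ui in zip(a, u_human)]
```

For example, with an operator command `(1.0, 0.5)` and the constraint that the first velocity component must not exceed `0.2` (`a = (1, 0)`, `b = 0.2`), the executed command is approximately `(0.2, 0.5)`. The difference between the commanded and executed velocities is one natural candidate for the cue fed back to the operator, although how to render it is precisely the design question raised above.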

4 Application domains

The activities of Rainbow clearly fall within the scope of Robotics. Broadly speaking, our main interest is in devising novel/efficient algorithms (for estimation, planning, control, haptic cueing, human interfacing, etc.) that can be general and applicable to many different robotic systems of interest, depending on the particular application/case study. For instance, we plan to consider:

- applications involving remote telemanipulation with one or two robot arms, where the arm(s) will need to coordinate their motion for approaching/grasping objects of interest under the guidance of a human operator;

- applications involving single and multiple mobile robots for spatial navigation tasks (e.g., exploration, surveillance, mapping). In the multi-robot case, the high redundancy of the multi-robot group will motivate research in autonomously exploiting this redundancy for facilitating the task (e.g., optimizing the self-localization or the environment mapping) while following the human commands, and vice-versa for informing the operator about the status of a multi-robot group. In the single robot case, the possible combination with some manipulation devices (e.g., arms on a wheeled robot) will motivate research into remote tele-navigation and tele-manipulation;

- applications involving medical robotics, in which the “manipulators” are replaced by the typical tools used in medical applications (ultrasound probes, needles, cutting scalpels, and so on) for semi-autonomous probing and intervention;

- applications involving a direct physical “coupling” between human users and robots (rather than a “remote” interfacing), such as the case of assistive devices used for easing the life of people with disabilities. Here, we will be primarily interested in, e.g., safety and usability issues, and also touch some aspects of user acceptability.

These directions are, in our opinion, very promising since current and future robotics applications are expected to address more and more complex tasks: for instance, it is becoming mandatory to empower robots with the ability to predict the future (to some extent) by also explicitly dealing with uncertainties from sensing or actuation; to safely and effectively interact with human supervisors (or collaborators) for accomplishing shared tasks; to learn or adapt to dynamic environments from little prior knowledge; to exploit the environment (e.g., obstacles) rather than avoiding it (a typical example is a humanoid robot in a multi-contact scenario for facilitating walking on rough terrains); to optimize the onboard resources for large-scale monitoring tasks; to cooperate with other robots either by direct sensing/communication, or via some shared database (the “cloud”).

While no single lab can reasonably address all these theoretical/algorithmic/technological challenges, we believe that our research agenda can give some concrete contributions to the next generation of robotics applications.

5 Highlights of the year

- C. Pacchierotti nominated IEEE RAS Distinguished Lecturer for the field of haptics

- P. Robuffo Giordano's term as IEEE RAS Distinguished Lecturer for Multi-Robot Systems has been renewed for 2025-2027

- C. Pacchierotti invited to give a keynote at ICRA 2024 in Yokohama, Japan.

- Project ANR PRC MATES, led by the team, has been accepted.

- M. Babel carried the Paralympic flame in the relay of innovations for people with disabilities, as part of her academic chair.

- M. Marchal was a keynote speaker at ISMAR 2024 in Seattle, USA; VRST 2024 in Trier, Germany; and SCA 2024 in Montreal, Canada.

- M. Tognon received the IROS 2024 Toshio Fukuda Young Professional Award for his contributions to aerial robotics.

5.1 Awards

6 New software, platforms, open data

6.1 New software

6.1.1 HandiViz

- Name: Driving assistance of a wheelchair

- Keywords: Health, Persons attendant, Handicap

- Functional Description: The HandiViz software provides a semi-autonomous navigation framework for a wheelchair relying on visual servoing. It has been registered with the APP (“Agence de Protection des Programmes”) as an INSA software (IDDN.FR.001.440021.000.S.P.2013.000.10000) and is distributed under the GPL license.

- Contact: Marie Babel

- Participants: François Pasteau, Marie Babel

- Partner: INSA Rennes

6.1.2 ViSP

- Name: Visual servoing platform

- Keywords: Computer vision, Robotics, Visual servoing (VS), Visual tracking

- Scientific Description:

Since 2005, we have been developing and releasing ViSP [1], an open source library available from https://visp.inria.fr. ViSP, which stands for Visual Servoing Platform, allows prototyping and developing applications using the visual tracking and visual servoing techniques at the heart of the Rainbow research. ViSP was designed to be independent from the hardware, simple to use, expandable and cross-platform. ViSP allows designing vision-based tasks for eye-in-hand and eye-to-hand systems from the most classical visual features that are used in practice. It involves a large set of elementary positioning tasks with respect to various visual features (points, segments, straight lines, circles, spheres, cylinders, image moments, pose...) that can be combined together, and image processing algorithms that allow tracking of visual cues (dots, segments, ellipses...), 3D model-based tracking of known objects, or template tracking. Simulation capabilities are also available.

ViSP also provides an open-source dynamic simulator called FrankaSim, based on CoppeliaSim and ROS, for the Panda robot from Franka Robotics [2]. The simulator, fully integrated in the ViSP ecosystem, features a dynamic model that has been accurately identified from a real robot, leading to more realistic simulations. Conceived as a multipurpose research simulation platform, it is well suited for visual servoing applications as well as, in general, for any pedagogical purpose in robotics. All the software, models and CoppeliaSim scenes presented in this work are publicly available under the free GPL-2.0 license.

A module dedicated to deep neural networks (DNN) is also available to facilitate image classification and object detection. This module can infer the convolutional networks Faster-RCNN, SSD-MobileNet, ResNet 10, Yolo v3, Yolo v4, Yolo v5, Yolo v7, Yolo v8 and Yolo v11, which simultaneously predict object boundaries and prediction scores at each position.

A new module dedicated to the visual tracking of an object using its model has just been introduced. Called RBT for Render-Based-Tracker, it enables complex objects to be localized in real time by robustly combining geometric features, colour-based features and depth map features in the minimisation process.

[1] E. Marchand, F. Spindler, F. Chaumette. ViSP for visual servoing: a generic software platform with a wide class of robot control skills. IEEE Robotics and Automation Magazine, Special Issue on "Software Packages for Vision-Based Control of Motion", P. Oh, D. Burschka (Eds.), 12(4):40-52, December 2005. URL: https://hal.inria.fr/inria-00351899v1

[2] A. A. Oliva, F. Spindler, P. Robuffo Giordano and F. Chaumette. ‘FrankaSim: A Dynamic Simulator for the Franka Emika Robot with Visual-Servoing Enabled Capabilities’. In: ICARCV 2022 - 17th International Conference on Control, Automation, Robotics and Vision. Singapore, Singapore, 11th Dec. 2022, pp. 1–7. URL: https://hal.inria.fr/hal-03794415.

-

Functional Description:

ViSP provides simple ways to integrate and validate new algorithms with already existing tools. It follows a module-based software engineering design where data types, algorithms, sensors, viewers and user interaction are made available. Written in C++, ViSP is based on open-source cross-platform libraries (such as OpenCV) and builds with CMake. Several platforms are supported, including OSX, iOS, Windows and Linux. The ViSP online documentation eases learning: more than 307 fully documented classes organized in 18 different modules, with more than 475 examples and 114 tutorials, are offered to the user. ViSP is released under a dual licensing model. It is open-source with a GNU GPLv2 or GPLv3 license. A professional edition license that replaces GNU GPL is also available.

- URL:

-

Contact:

Fabien Spindler

-

Participants:

Romain Lagneau, Éric Marchand, Fabien Spindler, François Chaumette, Olivier Roussel

6.1.3 DIARBENN

-

Name:

Obstacle avoidance through sensor-based servoing

-

Keywords:

Servoing, Shared control, Navigation

-

Functional Description:

DIARBENN's objective is to define an obstacle avoidance solution adapted to a mobile robot such as a powered wheelchair. Through shared control, the system progressively corrects the trajectory, if necessary, when approaching an obstacle while respecting the user's intention.
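The idea of a progressive correction can be pictured with the following minimal sketch. It is not the DIARBENN algorithm: it is a deliberately simple, hypothetical one-dimensional rule in which the user's forward speed is left untouched far from an obstacle and scaled down linearly as the measured distance shrinks. The function name and the thresholds `d_stop` and `d_safe` are assumptions made for illustration.

```python
def corrected_speed(user_speed, distance, d_stop=0.3, d_safe=1.5):
    """Progressively reduce the forward speed requested by the user near an obstacle.

    Hypothetical rule: no correction beyond d_safe (metres), full stop at or
    below d_stop, linear scaling in between. Backward commands (moving away
    from the obstacle) are never modified, so the user's intention is respected.
    """
    if user_speed <= 0.0 or distance >= d_safe:
        return user_speed
    if distance <= d_stop:
        return 0.0
    gain = (distance - d_stop) / (d_safe - d_stop)
    return gain * user_speed


far = corrected_speed(1.0, 2.0)       # obstacle far away: command unchanged
near = corrected_speed(1.0, 0.9)      # halfway between the thresholds: half speed
blocked = corrected_speed(1.0, 0.2)   # too close: forward motion stopped
reverse = corrected_speed(-0.5, 0.2)  # reversing away is left to the user
```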

-

Contact:

Marie Babel

-

Participants:

Marie Babel, François Pasteau, Sylvain Guegan

-

Partner:

INSA Rennes

6.2 New platforms

The platforms described in the next sections are labeled by the University of Rennes and are part of the French Research Infrastructure ROBOTEX 2.0 labeled by the French Ministry of Research.

6.2.1 Robot Vision Platform

Participants: François Chaumette, Eric Marchand, Fabien Spindler [contact].

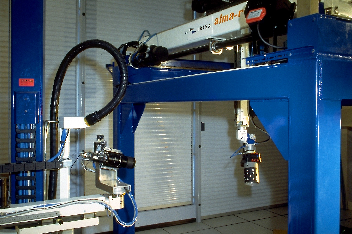

We are using an industrial robot built by Afma Robots in the nineties to validate our research in visual servoing and active vision. This robot is a 6 DoF gantry whose end effector can carry a gripper and an RGB-D camera (see Fig. 2). This equipment is mainly used to validate visual servoing and real-time tracking algorithms.

In 2024, this platform was used to validate experimental results in 1 accepted publication 32.

In this image we can see our Gantry robot.

6.2.2 Mobile Robots

Participants: Marie Babel, François Pasteau, Fabien Spindler [contact].

To validate our research on personally assisted living (see Sect. 7.3.2), we have three electric wheelchairs, one from Permobil, one from Sunrise and one from YouQ (see Fig. 3.a). The wheelchair is controlled through a plug-and-play system inserted between the joystick and the low-level control of the wheelchair. Such a system lets us acquire the user intention through the joystick position and control the wheelchair by applying corrections to its motion. The wheelchairs have been fitted with cameras, ultrasound and time-of-flight sensors to perform the servoing required for assisting people with disabilities. A wheelchair haptic simulator completes this platform to develop new human interaction strategies in a virtual reality environment (see Fig. 3.b).

Moreover, for fast prototyping of algorithms in perception, control and autonomous navigation, the team uses a Pioneer 3DX from Adept (see Fig. 3.c). This platform is equipped with various sensors needed for autonomous navigation and sensor-based control.

In 2024, these robots were used to obtain experimental results presented in 4 papers 26 19 50 51.

(a) Our wheelchairs from Permobil, Sunrise and YouQ. (b) Our wheelchair simulator. (c) Our Pioneer P3DX mobile robot equipped with a camera mounted on a pan-tilt head.

6.2.3 Advanced Manipulation Platform

Participants: Alexandre Krupa, Claudio Pacchierotti, Paolo Robuffo Giordano, François Chaumette, Fabien Spindler [contact].

This platform consists of 2 Panda lightweight 7 DoF arms from Franka Emika equipped with torque sensors in all seven axes. An electric gripper, a camera, a soft hand from qbrobotics or a Reflex TakkTile 2 gripper from RightHand Labs (see Fig. 4.b) can be mounted on the robot end-effector (see Fig. 4.a). A force/torque sensor from Alberobotics is also attached to the end-effector of one of the robots to provide greater accuracy in torque control.

Two Adept 6 DoF arms (one Viper 650 robot and one Viper 850 robot) and a 6 DoF Universal Robots UR5, which can also be fitted with a force sensor and a camera, complete the platform.

This setup is mainly used to manipulate deformable objects and to validate our activities in coupling force and vision for controlling robot manipulators (see Section 7.3.1) and in controlling the deformation of soft objects (Sect. 7.1.7). Other haptic devices (see Section 7.2) can also be coupled to this platform.

In 2024, 3 papers 18, 34, 35 and 2 PhD theses 68, 70 were published including experimental results obtained with this platform.

(a) Our Franka robot equipped with the Pisa SoftHand grasping a box. (b) The Reflex TakkTile 2 gripper grasping a yellow ball. (c) The 5 arms composing the platform: a Franka in the foreground, our two Viper robots in the middle ground, and a UR5 arm and our second Franka robot in the background.

6.2.4 Unmanned Aerial Vehicles (UAVs)

Participants: Gianluca Corsini [contact], Paolo Robuffo Giordano, Marco Tognon, Claudio Pacchierotti, Pierre Perraud, Fabien Spindler.

Rainbow is involved in several activities concerning the design, modelling, control and perception for single and multiple aerial robots (ARs). Two indoor flying arenas are used to carry out the related experimental activities. The first arena is relatively small (3m x 5m x H1.8m) and is equipped with 11 Vicon cameras for motion capture. The second one, spanning a larger volume (about 9m x 9m x H2.5m), is equipped with 14 Qualisys cameras. However, the latter room is only available from January to August. Compared to the former, the larger arena gives us the possibility to fly multiple drones at the same time, thanks to its larger volume and the better coverage offered by the larger number of cameras.

In these flying arenas, we operate several ARs which have been heavily customized by: reprogramming from scratch the low-level firmware running on the onboard electronics (comprising flight and motor controllers), equipping each robot with an onboard computer (for instance a Jetson or a NUC board) running Linux Ubuntu and the TeleKyb3 software framework, and adding Realsense RGB-D cameras for onboard visual odometry and visual servoing.

Telekyb3 is an open-source framework based on the Genom3 software tool which has been developed at LAAS in Toulouse. It features a modular and formal structure tailored to code reusability, high performance and middleware abstraction. Telekyb3 comprises a set of algorithms dedicated to localization, navigation and low-level control of aerial robots in maneuvering and physical-interaction-based tasks.

The aerial robotic platform of the team includes quadrotors and hexarotors (see Fig. 5.a and 5.b, respectively) which have been internally designed both at the mechanical and the electronic level. While the quadrotors have a standard (in jargon, collinear) propeller orientation, the hexarotors have the motors tilted w.r.t. the main body. This property gives them maneuvering capabilities that cannot be replicated by conventional and commercial drones with collinear rotors.

For most of the mechanical components, we rely on custom parts which are realized using 3D printing as well as water-jet cutting and milling of carbon-fiber material. From the electronic standpoint, our robots feature a Mikrokopter-based flight controller running custom firmware. However, due to the unavailability and aging of the latter board, a newer flight controller named Paparazzi has been adopted, and the firmware has been adapted to the new board accordingly. The Paparazzi flight controller is part of an open-source and open-hardware project started at ENAC in Toulouse. This board has been chosen as it fits our needs well: it comprises more precise onboard sensors and sufficient programmable peripherals to communicate with the other electronic modules and sensors.

Smaller commercial drones, namely the BitCraze Crazyflie, have been added to this robotic platform. Given the tiny dimensions of these drones and the limited space available for experiments, these robots are perfect candidates to carry out research related to the control and perception of a team of multiple robots.

Among the different successful experiments, we can mention visual servoing using ViSP to position the drone w.r.t. a target and to manipulate deformable objects (e.g. a cable), accurate positioning of a swarm of (more than 5) robots, and contact-based interaction with flat surfaces (for instance, drawing on a whiteboard) by means of a hexarotor equipped with a rigid end-effector.

In 2024, 2 papers 33, 60 and 1 PhD thesis 68 contained experimental results obtained with this platform.

(a) One of our quadrotors. (b) Our hexarotor with tilted propellers.

6.2.5 Interactive interfaces and systems

Participants: Claudio Pacchierotti, Paolo Robuffo Giordano, Maud Marchal, Marie Babel, Fabien Spindler [contact].

Interactive technologies enable communication between artificial systems and human users. Examples of such technologies are haptic interfaces and virtual reality headsets.

Various haptic devices are used to validate our research in, e.g., shared control and extended reality. We design wearable haptic devices to give user feedback and also use some off-the-shelf devices. We have a Virtuose 6D device from Haption (see Fig. 6.a). This device is used as the master device in many of our shared control activities. An Omega 6 (see Fig. 6.b) from Force Dimension and devices from Ultrahaptics (see Fig. 6.c) complete this platform, which can be coupled to the other robotic platforms.

Similarly, in order to augment the immersiveness of virtual scenarios, we make use of virtual and augmented reality headsets. We have HTC Vive headsets for VR and Microsoft Hololens for AR interactions (see Fig. 6.d).

In 2024, this platform was used to obtain experimental results presented in 1 paper 17.

(a) Our Virtuose 6D haptic device. (b) Our Omega6 haptic device. (c) The Ultraleap STRATOS device. (d) Our Microsoft Hololens 2 AR headset.

6.2.6 Portable immersive room

Participants: François Pasteau, Fabien Grzeskowiak, Marie Babel [contact].

To validate our research on assistive robotics and its applications in virtual conditions, we very recently acquired a portable immersive room designed to be easily deployed in different rehabilitation structures in order to conduct clinical trials. The system has been designed by the Trinoma company and has been funded by the Interreg ADAPT project.

In 2024, this platform was used to prepare the next clinical trials, which will be conducted during 2025.

A person sitting on the wheelchair simulator placed in the portable immersive room.

7 New results

7.1 Advanced Sensor-Based Control

7.1.1 Integrated Robust Planning and Control for Uncertain Robots

Participants: Tommaso Belvedere, Ali Srour, Paolo Robuffo Giordano.

The goal of this research activity is to propose an integrated approach for robust planning and control of robots whose models are affected by uncertainty in some of their parameters. The results are based on the notion of closed-loop state sensitivity developed in our group over several years, and are also related to the ANR project CAMP (Sect. 9.4.9).

Over the past years, we have developed several trajectory optimization algorithms leveraging the notion of closed-loop “state sensitivity”, “input sensitivity” and derived quantities. In particular, by exploiting these metrics we were able to construct tubes enveloping the bundle of perturbed trajectories given an uncertainty model (a range of variation for the parameters around a nominal value). During this year we have continued working on this subject with the following contributions:

- in 60 we have performed an extensive experimental validation of sensitivity-aware trajectory planning for a quadrotor UAV with uncertain aerodynamic coefficients, mass and location of the center of mass. A benefit of the proposed approach is that it can be applied to any controller for the robot, even in case the controller is given and cannot be changed. To show this point, we made use of the popular PixHawk controller onboard the quadrotor, as it is a very common control strategy used by many groups. The results clearly showed the benefits of the proposed robust planning. This paper received the Best Paper Award at the ICUAS 2024 conference

- in 34, the approach proposed in 60 has been extended to the case of torque-controlled manipulator arms. Compared to the quadrotor case, a 7-dof torque-controlled manipulator has a much more complex dynamical model (with many more parameters), and the same goes for the employed control strategy (computed torque with integral term in our case). Nevertheless, we were able to show that our sensitivity-aware trajectory planning can also be used in this case for manipulation tasks. An experimental campaign has been performed with the manipulator handling payloads with different (and unknown) inertial parameters, showing the effectiveness of the proposed approach in reducing the effects of model uncertainties during motion

- in 10, the approach has been applied to a fully-actuated hexarotor by also introducing the new concept of sensitivity w.r.t. the initial conditions (besides the parameters), which may also be uncertain because of, e.g., imperfect state estimation. Furthermore, a better formalization and algorithm for computing the tubes of perturbed trajectories has been proposed, improving over the previous method (in the sense of more accurately enveloping the bundle of perturbed state/input trajectories). Finally, we also considered the effects of any additional unmodeled dynamics treated as an uncertain parameter with a given range of variation. The experiments on the hexarotor clearly showed the ability of the proposed framework to produce intrinsically robust motion plans by minimizing the effects of uncertainties in the parameters and in the initial conditions

- together with Simon Wasiela and other colleagues at LAAS-CNRS, we have proposed in 36 an extension to the SAMP motion planner previously developed in 73 and meant to produce robust global plans, emphasizing the generation of trajectories with low sensitivity to model uncertainty. However, the high computational cost of the uncertainty tubes was a bottleneck in 73. In our work 36 we addressed this problem by proposing a novel framework that first incorporates a Gated Recurrent Unit (GRU) neural network to provide fast and accurate estimation of uncertainty tubes and then minimizes these tubes at given points along the trajectory. The approach was experimentally validated on a 3D quadrotor in two challenging scenarios: navigation through a narrow window, and an in-flight “ring catching” task with a perch. The experimental results demonstrated the robustness of the approach also in dealing with such a complex perching task for a quadrotor.
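The notion of closed-loop state sensitivity and of the resulting tube can be illustrated on a toy problem. The sketch below is not the implementation used in the cited works: it assumes a hypothetical scalar system x' = -p x + u with an uncertain parameter p, a proportional controller u = k (x_d - x), and explicit Euler integration. The sensitivity Pi = dx/dp is propagated alongside the state, and a first-order tube radius is obtained as |Pi| times the half-width delta_p of the parameter range.

```python
def simulate(p, k=2.0, x_d=1.0, x0=0.0, dt=1e-3, steps=2000):
    """Integrate the closed-loop state x and its sensitivity Pi = dx/dp (Euler)."""
    x, pi = x0, 0.0
    for _ in range(steps):
        u = k * (x_d - x)              # given controller, part of the closed loop
        x_dot = -p * x + u             # toy dynamics with uncertain parameter p
        # Sensitivity dynamics: differentiate x_dot w.r.t. p along the closed loop,
        # d(x_dot)/dp = (df/dx + df/du * du/dx) * Pi + df/dp = -(p + k) * Pi - x
        pi_dot = -(p + k) * pi - x
        x, pi = x + dt * x_dot, pi + dt * pi_dot
    return x, pi


p_nom, delta_p = 1.0, 0.2              # nominal value and assumed range half-width
x_final, pi_final = simulate(p_nom)

# First-order bound on the final-state deviation for p in [p_nom - delta_p, p_nom + delta_p].
tube_radius = abs(pi_final) * delta_p
```

A sensitivity-aware planner would then shape the reference (here the constant `x_d`) so that a norm of the sensitivity, and hence the tube, is as small as possible where it matters.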

7.1.2 UWB beacon navigation of assisted power wheelchair

Participants: Vincent Drevelle, Marie Babel, François Pasteau, Theo Le Terrier.

Ultra-wideband (UWB) radio is an emerging technology for indoor localization and object tracking applications. Contrary to vision, these sensors are low-cost, non-intrusive and easy to install on the wheelchair. They provide time-of-flight ranging between fixed beacons and mobile sensors. However, multipath or non-line-of-sight (NLOS) propagation can perturb range measurements in a cluttered indoor environment.

We designed a robust wheelchair positioning method, based on an extended Kalman filter with outlier identification and rejection. The method fuses UWB ranges with low-cost gyro and wheelchair joystick commands to estimate the orientation and position of the wheelchair. A demonstration of autonomous navigation in an apartment of the Pôle Saint-Hélier rehabilitation center was shown to practitioners and power wheelchair users during the Ambrougerien project demo day.
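A common way to reject outliers in an extended Kalman filter is to gate each measurement on its normalized innovation. The sketch below shows this for a single UWB range, under simplifying assumptions that are not taken from the method above: the state is reduced to a 2D position (no orientation, gyro or joystick input), the covariance is symmetric, the estimate does not coincide with the beacon, and the gate value 3.84 (95% chi-square threshold with one degree of freedom) and noise variance are illustrative choices.

```python
import math


def range_update(pos, P, beacon, z, r_var=0.01, gate=3.84):
    """EKF update of a 2D position with one range measurement and innovation gating.

    Returns (position, covariance, accepted). P is a symmetric 2x2 covariance.
    """
    dx, dy = pos[0] - beacon[0], pos[1] - beacon[1]
    predicted = math.hypot(dx, dy)               # assumed non-zero
    H = (dx / predicted, dy / predicted)         # Jacobian of the range w.r.t. position
    PHt = (P[0][0] * H[0] + P[0][1] * H[1],
           P[1][0] * H[0] + P[1][1] * H[1])
    S = H[0] * PHt[0] + H[1] * PHt[1] + r_var    # innovation variance
    nu = z - predicted                           # innovation
    if nu * nu / S > gate:
        return pos, P, False                     # outlier (e.g. NLOS): skip the update
    K = (PHt[0] / S, PHt[1] / S)                 # Kalman gain
    new_pos = (pos[0] + K[0] * nu, pos[1] + K[1] * nu)
    new_P = [[P[i][j] - K[i] * PHt[j] for j in range(2)] for i in range(2)]
    return new_pos, new_P, True


prior_pos = (1.0, 0.0)
prior_P = [[0.04, 0.0], [0.0, 0.04]]
good_pos, good_P, good_ok = range_update(prior_pos, prior_P, (0.0, 0.0), 1.1)
bad_pos, bad_P, bad_ok = range_update(prior_pos, prior_P, (0.0, 0.0), 3.0)
```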

Then, a robust set-membership positioning approach was developed, based on interval constraint propagation. It explicitly accounts for the fact that multipath and NLOS propagation result in range measurements that exceed the actual distance. A reliable pose domain is then computed for the wheelchair at each measurement epoch.
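The one-sided nature of NLOS errors lends itself to a set-membership treatment: a measured range is an upper bound on the true distance, so the position must lie in a disk around the beacon. The following sketch is a much cruder contractor than full interval constraint propagation: it only intersects an axis-aligned box with the bounding box of each disk. The function name `contract_box`, the noise bound and the beacon layout are hypothetical.

```python
def contract_box(box, beacon, measured_range, noise_bound=0.1):
    """Shrink an axis-aligned position box using one upper-bounded range.

    box is ((x_lo, x_hi), (y_lo, y_hi)). Since multipath/NLOS can only lengthen
    the measured range, the true distance is at most measured_range + noise_bound.
    """
    (x_lo, x_hi), (y_lo, y_hi) = box
    r = measured_range + noise_bound
    x_lo, x_hi = max(x_lo, beacon[0] - r), min(x_hi, beacon[0] + r)
    y_lo, y_hi = max(y_lo, beacon[1] - r), min(y_hi, beacon[1] + r)
    if x_lo > x_hi or y_lo > y_hi:
        raise ValueError("empty box: measurements inconsistent with the assumed bounds")
    return (x_lo, x_hi), (y_lo, y_hi)


# Start from the whole room and contract with two beacons in opposite corners.
box = ((0.0, 10.0), (0.0, 8.0))
for beacon, measured in [((0.0, 0.0), 4.9), ((10.0, 0.0), 7.4)]:
    box = contract_box(box, beacon, measured)
```

The resulting box is guaranteed to contain the true position as long as the assumed bounds hold, which is the property a reliable pose domain relies on.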

7.1.3 Visual servo of the orientation of an Earth observation satellite

Participants: Alessandro Colotti, François Chaumette.

This study was done in the scope of the ANR Sesame project (see 9.4.6). We developed a method able to determine the complete set of equilibria (i.e., the global minimum, local minima, and saddle points) of image-based visual servoing when Cartesian coordinates of image points are used as inputs of the control scheme 16.
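For context, the equilibria in question can be stated with the classical image-based control law. The notation below (feature vector s, desired value s*, gain λ, estimated interaction matrix and its pseudo-inverse) follows the usual textbook formulation and is an assumption, not necessarily the notation of the cited paper.

```latex
% Classical IBVS control law: camera velocity from the feature error
\mathbf{v}_c = -\lambda \, \widehat{\mathbf{L}}_{\mathbf{s}}^{+} \, (\mathbf{s} - \mathbf{s}^{*})

% Equilibria: configurations where the commanded velocity vanishes
\widehat{\mathbf{L}}_{\mathbf{s}}^{+} \, (\mathbf{s} - \mathbf{s}^{*}) = \mathbf{0}
\quad\Longleftrightarrow\quad
\mathbf{s} - \mathbf{s}^{*} \in \operatorname{Ker} \widehat{\mathbf{L}}_{\mathbf{s}}^{+}
```

The global minimum corresponds to s = s*, while the other equilibria have a non-zero error lying in the kernel of the pseudo-inverse, which can only happen when there are more features than controlled degrees of freedom.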

7.1.4 Visual servo of the orientation of an Earth observation satellite

Participants: Maxime Robic, Eric Marchand, François Chaumette.

This study was done in the scope of the BPI Lichie project (see 9.4.8). Its goal was to control the orientation of a satellite to track particular objects on the Earth. This year, we considered how to avoid motion-blur effects in the images acquired by the camera while it is gazing at a potentially moving object 32.

7.1.5 Multi-sensor-based control for accurate and safe assembly

Participants: John Thomas, François Pasteau, François Chaumette.

This study was also done in the scope of the BPI Lichie project (see 9.4.8). Its goal was to design sensor-based control strategies coupling vision and proximity-sensor data for ensuring precise positioning while avoiding obstacles in dense environments 35, 70.

7.1.6 Visual Exploration of an Indoor Environment

Participants: Thibault Noël, Eric Marchand, François Chaumette.

This study is done in collaboration with the Creative company in Rennes (see Section 7.1.6). It is devoted to the exploration of indoor environments by a mobile robot for a complete and accurate reconstruction of the environment 26.

7.1.7 Shape servoing of soft objects using Finite Element Model

Participants: Mandela Ouafo Fonkoua, Alexandre Krupa, François Chaumette.

This study takes place in the context of the BIFROST project (see Section 9.1.1). In 18, we proposed a visual control framework for accurately positioning feature points belonging to the surface of a 3D deformable object to desired 3D positions, by acting on a set of manipulated points using a robotic manipulator. This framework considers the dynamic behavior of the object deformation, that is, we do not assume that the object is in its static equilibrium during the manipulation. By relying on a coarse dynamic Finite Element Model (FEM), we have successfully formulated the analytical relationship relating the motion of the feature points to the 6 degrees of freedom motion of a robot gripper. From this modeling step, a novel closed-loop deformation controller was designed. To be robust against model approximations, the whole shape of the object is tracked in real time using an RGB-D camera, which allows any drift between the object and its model to be corrected on the fly. Experimental results have demonstrated that our approach can drive feature points of a deformable object to desired positions very rapidly (in less than 5 seconds), thus being very far from a (simplified) quasi-static regime. Our methodology thus makes it possible to take into account the inertial properties of soft materials during rapid motions.

7.1.8 Multi-Robot Control Localization and Estimation

Participants: Paolo Robuffo Giordano, Claudio Pacchierotti, Esteban Restrepo, Nicola De Carli, Antonio Marino.

Systems composed of multiple robots are useful in several applications where complex tasks need to be performed. Examples range from target tracking to search and rescue operations and load transportation. We have been very active over the last years on the topics of coordination, estimation, localization and control of multiple robots under the possible guidance of a human operator (see, e.g., Sect. 9.4.10), and we recently started to explore the use of machine learning for replicating, or replacing, more analytical control/estimation strategies (with benefits in terms of reduced computational power and communication load).

During this year we have produced the following contributions:

- in 15 we have proposed an observer scheme to estimate in a common frame the position and yaw orientation of a group of quadrotors from body-frame relative position measurements. The state of the robots is represented by their position and yaw orientation. The graph representing the sensing interaction among the robots is directed and is only required to be weakly connected, in addition to satisfying certain persistency of excitation conditions. The proposed scheme consists of three distinct estimation strategies coupled together, for which we were able to draw strong conclusions on the stability of the whole system (cascade of three estimations) and validate the method via numerical simulations. This work is significant since it solves in a principled and rigorous way a longstanding problem: how to build in a decentralized way a coherent localization in a common frame from partial body-frame measurements. For instance, in the previous work 72 an analogous problem was solved in a more heuristic way without any formal guarantee of convergence for the whole estimation pipeline, whereas in 15 we were finally able to provide a full formal characterization of the filter convergence

- in 41 we have revisited the topic of connectivity maintenance in the light of modern distributed QP-based control. The proposed framework is primarily motivated by the distributed implementation of Control Barrier Functions (CBFs), whose primary objective is to make minimal adjustments to a nominal controller while ensuring constraint satisfaction. By improving over some limitations in the current state-of-the-art, we were able to apply distributed CBFs to the problem of global connectivity maintenance in presence of communication and sensing constraints. This improves on the typical connectivity maintenance algorithms that are based on distributed gradient descents of potential functions that can be hard to tune in practice (in particular w.r.t. the number of robots in the group). The proposed CBF formulation is instead much cleaner and easier to tune, and has better numerical properties for actual implementations.

- in 58 we proposed a distributed strategy to achieve biconnectivity, instead of simple connectivity, for a group of robots that allows establishment/deletion of interaction links as well as addition/removal of agents at any time while guaranteeing that the connectivity, and thus functionality, of the team is always preserved. Indeed, in the context of open multi-robot systems, that is, when the number of robots in the team is not fixed, merely preserving connectivity of the current graph does not prevent the loss of connectivity after a robot joins/leaves the group. The proposed approach is completely distributed and embeds into a unique gradient-based control multiple constraints and requirements: (i) limited inter-robot communication ranges, (ii) limited field of view, (iii) desired inter-agent distances, and (iv) collision avoidance. Numerical simulations illustrate the effectiveness of our approach.

- in 23 we studied the conditions for input-to-state stability (ISS) and incremental input-to-state stability (δISS) of Gated Graph Neural Networks (GGNNs). Indeed, GNNs excel in predicting and analyzing graphs. Recurrent models of GNNs can solve time-dependent problems and have been shown to provide a useful tool for analyzing and designing multi-agent algorithms. In 23 we showed that this recurrent version of Graph Neural Networks (GNNs) can be expressed as a dynamical distributed system and, as a consequence, can be analysed using model-based techniques to assess its stability and robustness properties. Then, the stability criteria found can be exploited as constraints during the training process to enforce the internal stability of the neural network. These findings are demonstrated in two distributed control examples, flocking and multi-robot motion control, showing that using these conditions increases the performance and robustness of the gated GNNs.

- in 24 we considered an end-to-end trajectory planning algorithm tailored for multi-UAV systems for generating collision-free trajectories in environments populated with both static and dynamic obstacles, leveraging point cloud data. Our approach consists of a 2-branch neural network fed with sensing and localization data, able to communicate intermediate learned features among the agents. One network branch crafts an initial collision-free trajectory estimate, while the other devises a neural collision constraint for subsequent optimization, ensuring trajectory continuity and adherence to physical actuation limits. Extensive simulations in challenging cluttered environments, involving up to 25 robots and 25% obstacle density, show a collision avoidance success rate between 85% and 100%. We also introduced a saliency map computation method acting on the point cloud data, which offers qualitative insights into the proposed methodology.

- in 52 we have instead proposed the Liquid-Graph Time-constant (LGTC) network, a continuous graph neural network (GNN) model for control of multi-agent systems based on the recent Liquid Time Constant (LTC) network. We analyzed its stability leveraging contraction analysis and proposed a closed-form model that preserves the model contraction rate and does not require solving an ODE at each iteration. Compared to discrete models like the previous Gated Graph Neural Networks (GGNNs), the higher expressivity of the proposed model guarantees remarkable performance while reducing the large amount of communicated variables normally required by GNNs, thus mitigating one drawback of GNNs. We evaluated our model on a distributed multi-agent control case study (flocking) taking into account variable communication range and scalability under non-instantaneous communication.

- in 54 we revisited the classical problem of connectivity maintenance of a UAV group. Differently from typical model-based connectivity-maintenance approaches, the proposed technique uses machine learning to attain significantly more scalability in terms of number of UAVs that can be part of the robotic team. It uses Supervised Deep Learning (SDL) with Artificial Neural Networks (ANN), so that each robot can extrapolate the necessary actions for keeping the team connected in one computation step, regardless of the size of the team. We compared the performance of our proposed approach vs. a state-of-the-art model-based connectivity-maintenance algorithm when managing a team composed of two, four, six, and ten aerial mobile robots. The results showed that our approach can keep the computational cost almost constant as the number of drones increases, reducing it significantly with respect to model-based techniques. For example, our SDL approach needs 83% less time than a state-of-the-art model-based connectivity maintenance algorithm when managing a team of ten drones.

- in 45 we used machine learning techniques for addressing a very different problem in the context of multi-robots: accurate tracking of micro-scale robots for minimally invasive surgery applications (this work is in the context of the H2020 Rego project coordinated by our team). Indeed, accurately tracking the position of the moving agents at the micro-scale remains a significant challenge, particularly for multi-agent systems operating in cluttered and unknown environments. In order to address this issue, we introduced a graph-based multi-agent 3D tracking algorithm for a micro-agent control system. This algorithm integrates image information with the control inputs used to navigate the micro agents. We combined Convolutional Neural Networks and Graph Neural Networks to effectively extract features from image sources, and combine them with historical data and control inputs. The primary novelty of this algorithm is its ability to make predictions when the target is occluded in the 2D detection results. The proposed system achieved a tracking error of 0.15 mm, outperforming standard model-based tracking techniques.

- in 31 we solve the tracking-in-formation problem for a group of underactuated autonomous marine vehicles interconnected over a directed topology. The agents are subject to hard inter-agent constraints, i.e. connectivity maintenance and collision avoidance, and soft constraints, specifically on the non-negativity of the surge velocity, as well as to constant disturbances in the form of unknown ocean currents. The control approach is based on an input-output feedback linearization for marine vehicles and on the edge-based framework for multi-agent consensus under constraints. High-fidelity simulations are provided to illustrate our results.

- in 63 we propose an adaptive control strategy for the simultaneous estimation of topology and synchronization in complex dynamical networks with unknown, time-varying topology. We introduce two auxiliary networks: one that satisfies the persistent excitation condition to facilitate topology estimation, while the other, a uniform delta persistently exciting network, ensures the boundedness of both weight estimation and synchronization errors, assuming bounded time-varying weights and their derivatives. A relevant numerical example shows the efficiency of our methods.
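The difference between connectivity and biconnectivity discussed in the list above can be made concrete with a small graph check. The sketch below is only a centralized test of the property, not the distributed gradient-based controller of the cited work: a graph is taken to be biconnected if it has at least three nodes, is connected, and stays connected after the removal of any single node. The adjacency-list format and function names are assumptions for this example.

```python
def is_connected(adj, removed=None):
    """Depth-first connectivity test on an undirected graph, optionally ignoring one node."""
    nodes = [n for n in adj if n != removed]
    if not nodes:
        return True
    seen, stack = {nodes[0]}, [nodes[0]]
    while stack:
        for nb in adj[stack.pop()]:
            if nb != removed and nb not in seen:
                seen.add(nb)
                stack.append(nb)
    return len(seen) == len(nodes)


def is_biconnected(adj):
    """True if the graph survives the loss of any single robot (no articulation point)."""
    return (len(adj) >= 3
            and is_connected(adj)
            and all(is_connected(adj, removed=n) for n in adj))


# Four robots in a ring: any one of them can leave without splitting the team.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
# Three robots in a line: connected, but the middle robot is a single point of failure.
line = {0: [1], 1: [0, 2], 2: [1]}

ring_ok = is_biconnected(ring)
line_ok = is_biconnected(line)
```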

7.1.9 Safe Control of Mobile Manipulators

Participant: Tommaso Belvedere.

Mobile robots working among humans must be controlled to ensure the safety of both humans and the robot and it is essential to avoid collisions. This requires a combination of strategies to safely control the robot despite the inherent unpredictable nature of humans, and to reliably estimate the human motion based on sensor measurements. Orthogonally to this, the safety of the robot is also dependent on its ability to maintain balance. In fact, wheeled mobile robots (i.e., without a fixed base) need to adapt their motion to ensure that wheels always remain in contact with the ground to avoid a potentially catastrophic tip-over. This is particularly important when the robot is navigating over non-flat ground or when dynamically manipulating the environment. Two papers on these topics have been produced this year in collaboration with Sapienza University of Rome, Italy.

- In 40, a vision-based control scheme is developed to allow safe navigation among a human crowd. The method leverages Control Barrier Functions (CBFs) to generate robot movements that safely avoid humans. Its main contribution is in the human detection and crowd prediction pipeline, which uses the YOLO-v8 model to detect humans and subsequently estimates their motion through Kalman Filters. Moreover, a strategy to exploit the pan-tilt action of the camera is devised to maximize the human detection reliability, significantly improving the success rate when navigating in a tight crowded environment. This paper received the Best Paper Award at the HFR 2024 conference.

- In 64, we proposed a real-time optimization-based controller which ensures that the robot is able to maintain balance when picking up and carrying heavy objects. It leverages the concept of Zero Moment Point to describe the conditions of dynamic balance, which are essential when fast movements are required, and CBFs to minimally deviate from the desired motion. It also proposes an extension of CBFs to allow for input-level constraints in discrete time, while maintaining the useful properties of CBFs.
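
As a minimal illustration of how a CBF can "minimally deviate from the desired motion", the sketch below filters a desired velocity for a point robot so that it stays outside a circular obstacle. It is not the controller of 40 or 64: the single-integrator model, the barrier function h(x) = ||x - p_obs||^2 - r^2, the gain `alpha`, and the function names `cbf_safety_filter` and `simulate` are all assumptions made for this example. With a single affine constraint, the underlying quadratic program has a closed-form solution (a projection), so no solver is needed.

```python
import numpy as np


def cbf_safety_filter(x, u_des, p_obs, radius, alpha=1.0):
    """Return the velocity closest to u_des that satisfies the CBF condition.

    Assumed model: single integrator x_dot = u (hypothetical, for illustration).
    Barrier: h(x) = ||x - p_obs||^2 - radius^2, safe set is h(x) >= 0.
    CBF condition: grad_h(x) . u + alpha * h(x) >= 0.
    """
    x = np.asarray(x, dtype=float)
    u_des = np.asarray(u_des, dtype=float)
    diff = x - np.asarray(p_obs, dtype=float)

    h = diff @ diff - radius**2
    a = 2.0 * diff          # gradient of h with respect to x
    b = -alpha * h          # constraint reads a . u >= b

    slack = a @ u_des - b
    if slack >= 0.0:
        # The desired input already satisfies the constraint: no modification.
        return u_des

    norm_sq = a @ a
    if norm_sq < 1e-12:
        # Degenerate case (robot at the obstacle center): the gradient vanishes.
        raise ValueError("CBF gradient is zero; constraint cannot be enforced")

    # Closed-form solution of min ||u - u_des||^2 s.t. a . u >= b:
    # project u_des onto the boundary of the half-space.
    return u_des - (slack / norm_sq) * a


def simulate(x0, goal, p_obs, radius, alpha=1.0, dt=0.01, steps=2000):
    """Drive the point robot to the goal with a proportional law plus the filter."""
    x = np.asarray(x0, dtype=float)
    goal = np.asarray(goal, dtype=float)
    p_obs = np.asarray(p_obs, dtype=float)
    min_h = np.inf
    for _ in range(steps):
        u_des = goal - x                      # nominal go-to-goal velocity
        u = cbf_safety_filter(x, u_des, p_obs, radius, alpha)
        x = x + dt * u                        # explicit Euler integration
        diff = x - p_obs
        min_h = min(min_h, diff @ diff - radius**2)
    return x, min_h


if __name__ == "__main__":
    x_final, min_h = simulate([-2.0, 0.2], [2.0, 0.0], [0.0, 0.0], 1.0)
    print("final position:", x_final, "smallest barrier value:", min_h)
```

In this toy setting the filter leaves the nominal command untouched whenever it is already safe and otherwise removes only the component that would violate the barrier condition. A start, obstacle center and goal that are exactly collinear would stall the robot in front of the obstacle, which is a known limitation of this kind of purely reactive filter.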

7.1.10 Whole-body predictive control of Humanoid Robots

Participant: Tommaso Belvedere.

While interest in humanoid robots is on the rise and the first industrial deployments are emerging, there are still many challenges related to their complexity that hinder the use of modern optimization-based control methods. Historically, in fact, the most popular approaches used simplified models to reduce the number of optimization variables. This has several disadvantages and limitations that can only be overcome through the use of full models that reflect the dynamic and kinematic capabilities of such robots. In 37, we proposed an efficient scheme based on Model Predictive Control that is capable of exploiting the full capabilities of a humanoid robot. It leverages an ad-hoc formulation of the dynamics that allows the real-time solution of the related optimization problem and the study of its feasibility region. This region is then exploited to actively improve the robustness of the system against disturbances. The proposed method is shown to outperform the baseline (utilizing a simplified model) in robustness and dynamic locomotion capabilities, while maintaining the ability to run in real-time at frequencies larger than 100 Hz.

7.2 Haptic Cueing for Robotic Applications and Virtual Reality (VR)

We coordinated a special issue on this topic 42.

7.2.1 Wearable haptics for human-centered robotics, Virtual Reality (VR), and Augmented Reality (AR)

Participants: Claudio Pacchierotti, Maud Marchal, Eric Marchand, Lisheng Kuang.

We have been working on wearable haptics for several years now, both from the hardware (design of interfaces) and software (rendering and interaction techniques) points of view. This line of research continued this year.

In 21, we present a versatile 4-DoF hand wearable haptic device tailored for VR. Its adaptable design accommodates various end-effectors, facilitating a wide spectrum of tactile experiences. Comprising a fixed upper body attached to the hand's back and interchangeable end-effectors on the palm, the device employs articulated arms actuated by four servo motors. The work outlines its design, kinematics, and a positional control strategy enabling diverse end-effector functionality. Through three distinct end-effector demonstrations mimicking interactions with rigid, curved, and soft surfaces, we showcase its capabilities. Human trials in immersive VR confirm its efficacy in delivering immersive interactions with varied virtual objects, prompting discussions on additional end-effector designs.

In 22, we present a 4-DoF wearable haptic device for the palm, able to provide the sensation of interacting with slanted surfaces and edges. It is composed of a static upper body, secured to the back of the hand, and a mobile end-effector, placed in contact with the palm. They are connected by two articulated arms, actuated by four servo motors housed on the upper body and along the arms. The end-effector is a foldable flat surface that can make/break contact with the palm to provide pressure feedback, move sideways to provide skin stretch and tangential motion feedback, and fold to elicit the sensation of interacting with different curvatures. We also present a position control scheme for the device, which is then quantitatively evaluated.

In 46, we introduced a 7-DoF hand-mounted haptic device. It is composed of a parallel mechanism characterised by eight legs with an articulated diamond-shaped structure, in turn connected to an origami-like shape-changing end-effector. The device can render surface and edge touch simulations as well as apply normal, shear, and twist forces to the palm. The paper presented the device's mechanical structure, a summary of its kinematic model, actuation control, and preliminary device evaluation, characterizing its workspace and force output.

In 27, we addressed key challenges in virtual object manipulation in AR, including limited visual occlusion and the absence of haptic feedback. We investigated the role of visuo-haptic rendering of the hand as sensory feedback through two experiments. The first examined six visual hand renderings, showing the user's hand via an AR avatar. The second evaluated visuo-haptic feedback, comparing two vibrotactile techniques applied at four delocalized hand positions, combined with the two most effective visual renderings from the first experiment. The results revealed that vibrotactile feedback near the contact point enhanced perceived effectiveness, realism, and usefulness, while contralateral hand rendering, though disliked, achieved the best performance.

In 56, we investigated whether wearable haptic texture augmentations are perceived differently in AR vs. VR and when touching with a virtual hand instead of one's own hand. We first designed a system for real-time rendering of vibrotactile virtual textures without constraints on hand movements, integrated with an immersive visual AR/VR headset. We then conducted a psychophysical study with 20 participants to evaluate the haptic perception of virtual roughness textures on a real surface touched directly with the finger (1) without visual augmentation, (2) with a realistic virtual hand rendered in AR, and (3) with the same virtual hand in VR. On average, participants overestimated the roughness of haptic textures when touching with their real hand alone and underestimated it when touching with a virtual hand in AR, with VR in between. Exploration behaviour was also slower in VR than with the real hand alone, although subjective evaluation of the texture was not affected.

In 55, we investigated the perception of simultaneous visual and haptic texture augmentation of real tangible surfaces touched directly with the fingertip in AR, using a wearable vibrotactile haptic device worn on the middle phalanx. When sliding on a tangible surface with an AR visual texture overlay, vibrations are generated based on data-driven texture models and finger speed to augment the haptic roughness perception of the surface. In a user study with twenty participants, we investigated the perception of the combination of nine representative pairs of visuo-haptic texture augmentations. Participants integrated roughness sensations from both visual and haptic modalities well, with haptics predominating the perception, and consistently identified and matched clusters of visual and haptic textures with similar perceived roughness.
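
To give an intuition of how a vibration signal can depend on finger speed, the sketch below renders a vibrotactile waveform for a hypothetical periodic texture. It is a deliberately simplified stand-in and not the data-driven texture models used in 55: the sinusoidal grating, its `spatial_period`, the `sample_rate`, and the function name `render_vibration` are assumptions made for this example. The instantaneous frequency is taken as speed divided by spatial period, and the phase is accumulated over time so that the waveform stays continuous when the speed changes.

```python
import numpy as np


def render_vibration(finger_speed, spatial_period, sample_rate, amplitude=1.0):
    """Render a speed-dependent vibration for a periodic (grating-like) texture.

    finger_speed   : array of finger speeds in m/s, one value per output sample
    spatial_period : distance between texture ridges in m (hypothetical value)
    sample_rate    : output sample rate in Hz
    """
    speed = np.abs(np.asarray(finger_speed, dtype=float))

    # Sliding at speed v over ridges spaced by spatial_period excites the
    # skin at a temporal frequency f = v / spatial_period.
    freq = speed / spatial_period

    # Integrate the frequency to obtain a continuous phase (radians).
    phase = 2.0 * np.pi * np.cumsum(freq) / sample_rate

    # Mute the output while the finger is not moving.
    moving = speed > 0.0
    return amplitude * np.sin(phase) * moving


if __name__ == "__main__":
    fs = 1000.0                               # samples per second
    speed = np.full(1000, 0.1)                # 0.1 m/s for one second
    signal = render_vibration(speed, spatial_period=0.002, sample_rate=fs)
    spectrum = np.abs(np.fft.rfft(signal))
    print("dominant frequency (Hz):", np.argmax(spectrum) * fs / len(signal))
```

A data-driven model would replace the sine with a waveform synthesized from recordings of a real texture, but the dependence on finger speed enters in a comparable way.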

In 59, we reported preliminary results in the design and implementation of an integrated system that includes dynamic simulation of the interaction with deformable objects and tissues, a VR environment with finger motion tracking, and haptic feedback provided by a wearable device. In addition, it explores the challenges and advances in integrating such technologies with a focus on creating realistic tactile experiences. It also addresses the complexity of combining hardware and software components, proposing some solutions to overcome integration problems.

We have also written a survey on the topic of cutaneous haptic feedback for human-centered robotic teleoperation 29. The article presents an overview on cutaneous haptic interaction followed by a review of the literature on cutaneous/tactile feedback systems for robotic teleoperation, categorizing the considered systems according to the type of cutaneous stimuli they can provide to the human operator. It ends with a discussion on the role of cutaneous haptics in robotics and the perspectives of the field.

7.2.2 Affective and persuasive haptics for Virtual Reality (VR)

Participants: Claudio Pacchierotti, Daniele Troisi.

Affective and persuasive haptics in Virtual Reality (VR) constitute an evolving frontier that explores the integration of tactile feedback to evoke emotional responses and influence user behavior within virtual environments. By leveraging haptic technologies, these systems aim to create immersive experiences that go beyond visual and auditory stimuli, introducing touch as a compelling tool for emotional engagement and persuasion.

In 61, we investigated the influence of contact force applied to the human's fingertip on the perception of hot and cold temperatures, studying how variations in contact force may affect the sensitivity of cutaneous thermoreceptors or their interpretation. A psychophysical experiment involved 18 participants exposed to cold (20 °C) and hot (38 °C) thermal stimuli at varying contact forces, ranging from gentle (0.5 N) to firm (3.5 N) touch. Results show a tendency to overestimate hot temperatures (hot feels hotter than it really is) and underestimate cold temperatures (cold feels colder than it really is) as the contact force increases. This result might be linked to the increase in the fingertip contact area that occurs as the contact force between the fingertip and the plate delivering the stimuli grows.

In 57, we investigated the influence of thermal haptic feedback on stress during a cognitive task in virtual reality. We hypothesized that cool feedback would help reduce stress in such a task where users are actively engaged. We designed a haptic system using Peltier cells to deliver thermal feedback to the left and right trapezius muscles. A user study was conducted on 36 participants to investigate the influence of different temperatures (cool, warm, neutral) on users' stress during mental arithmetic tasks. Results show that the impact of the thermal feedback depends on the participant's temperature preference. Interestingly, a subset of participants (36%) felt less stressed with cool feedback than with neutral feedback, but had similar performance levels, and expressed a preference for the cool condition. Emotional arousal also tended to be lower with cool feedback for these participants.

In 43, we conducted a study on thermal feedback during simulated social interactions with a virtual agent. We tested three conditions: warm, cool, and neutral. Results showed that warm feedback positively influenced users' perception of the agent and significantly enhanced persuasion and thermal comfort. Multiple users reported the agent feeling less 'robotic' and more 'human' during the warm condition. Moreover, multiple studies have previously shown the potential of vibrotactile feedback for social interactions. A second study thus evaluated the combination of warmth and vibrations for social interactions. The study included the same protocol and three similar conditions: warmth, vibrations, and warm vibrations. Warmth was perceived as more friendly, while warm vibrations heightened the agent's virtual presence and persuasion. These results encourage the study of thermal haptics to support positive social interactions.

7.2.3 Mid-Air Haptic Feedback

Participants: Claudio Pacchierotti, Maud Marchal, Thomas Howard, Guillaume Gicquel, Lendy Mulot.

In the framework of H2020 projects H-Reality and E-TEXTURE, we have been working to develop novel mid-air haptics paradigms that can convey the information spectrum of touch sensations in the real world, motivating the need to develop new, natural interaction techniques. Both projects ended in 2022, but we have continued to work on this exciting subject.

In 25, we propose the use of non-coplanar ultrasound mid-air haptic (UMH) devices for providing simultaneous tactile feedback to both hands during bimanual VR manipulation. We discuss coupling schemes and haptic rendering algorithms for providing haptic feedback in bimanual interactions with virtual environments. We then present two human participant studies, assessing the benefits of bimanual ultrasound haptic feedback in a two-handed grasping and holding task and in a shape exploration task. Results suggest that the use of multiple non-coplanar UMH devices could be an interesting approach for enriching unencumbered haptic manipulation in virtual environments.