Section:

New Results

Interaction Techniques

Participants:

Caroline Appert, Michel Beaudouin-Lafon, David Bonnet, Anastasia Bezerianos, Olivier Chapuis, Emilien Ghomi, Stéphane Huot, Can Liu, Wendy Mackay [correspondent], Mathieu Nancel, Cyprien Pindat, Emmanuel Pietriga, Theophanis Tsandilas, Julie Wagner.

We explore interaction techniques in a variety of contexts, including individual interaction techniques on mobile devices, the desktop, and very large wall-sized displays, using one or both hands. We also explore interaction with physical objects and across multiple devices, to create mixed or augmented reality systems. This year, we explored interaction techniques based on time (EWE and Dwell-and-Spring), bimanual interaction on mobile devices (BiPad) and interaction on very large wall displays (Jelly Lenses, Looking behind Bezels). We developed interactive paper systems to support early, creative design (Pen-based Mobile Assistants, Paper Tonnetz, Paper Substrates). Finally, we explored augmented reality systems, using tactile feedback (Tactile Snowboard Instructions) and tangible interaction (Mobile AR, Combinatorix) to support learning.

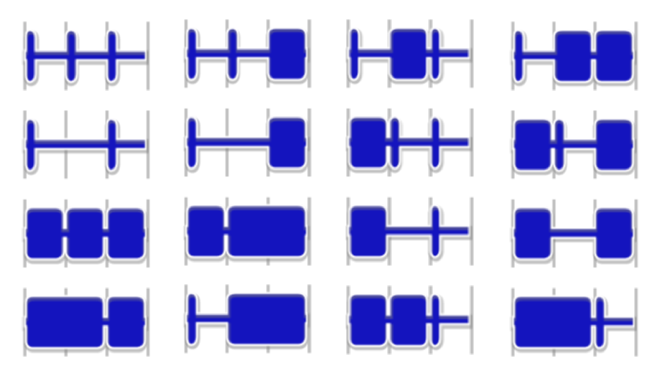

EWE – Although basic interaction techniques, such as multiple clicks or spring-loaded widgets, take advantage of the temporal dimension, more advanced uses of rhythmic patterns have received little attention in HCI. Using temporal structures to convey information can be particularly useful in situations where the visual channel is overloaded or not available at all. We introduce Rhythmic Interaction [24], which uses rhythm as an input technique (Figure 10). Two experiments demonstrate that (i) rhythmic patterns can be efficiently reproduced by novice users and recognized by computer algorithms, and (ii) rhythmic patterns can be memorized as efficiently as traditional shortcuts when associated with visual commands. Overall, these results demonstrate the potential of Rhythmic Interaction and open the way to a richer repertoire of interaction techniques. (Best Paper award, CHI'12)

Figure

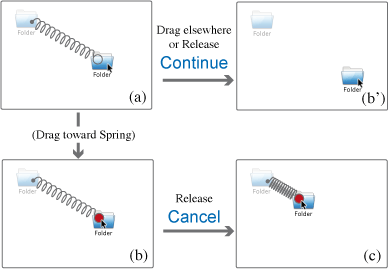

10. We defined 16 three-beat patterns: Each rectangle represents a tap, the thin gray lines show beats. |
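To make the idea of tapping a pattern on a beat grid concrete, here is a minimal sketch that snaps recorded taps to beats and looks the result up in a pattern vocabulary. It is not the recognizer evaluated in [24]: the half-second beat, the function names `quantize` and `recognize`, and the example command names are all assumptions made for illustration.

```python
from typing import Dict, List, Optional, Tuple

Tap = Tuple[float, float]        # (press time, release time), in seconds
Pattern = Tuple[Tuple[int, int], ...]  # ((onset beat, length in beats), ...)


def quantize(taps: List[Tap], beat: float = 0.5) -> Pattern:
    """Snap each tap to the beat grid, relative to the first press.

    The 0.5 s beat is an assumed value, not taken from the source.
    Very short taps are clamped to a length of one beat.
    """
    if not taps:
        return ()
    origin = taps[0][0]
    return tuple(
        (round((press - origin) / beat), max(1, round((release - press) / beat)))
        for press, release in taps
    )


def recognize(taps: List[Tap], vocabulary: Dict[Pattern, str],
              beat: float = 0.5) -> Optional[str]:
    """Return the command bound to the quantized pattern, or None."""
    return vocabulary.get(quantize(taps, beat))


# Hypothetical vocabulary: two patterns bound to made-up command names.
VOCABULARY: Dict[Pattern, str] = {
    ((0, 1), (1, 1), (4, 1)): "copy",
    ((0, 2), (3, 1)): "paste",
}

if __name__ == "__main__":
    taps = [(0.00, 0.08), (0.52, 1.01), (1.98, 2.10)]
    print(quantize(taps), recognize(taps, VOCABULARY))
```

Exact-match lookup is the simplest possible design choice; a practical recognizer would also need to tolerate tempo drift and timing errors larger than half a beat.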

Dwell-and-Spring – Direct manipulation interfaces consist of incremental actions that should be reversible. The challenge is how to provide users with an effective “undo”. Actions such as manipulating geometrical shapes in a vector graphics editor, navigating a document using a scrollbar, or moving and resizing windows on the desktop rarely offer an undo mechanism. Users must manually revert to the previous state by recreating a similar sequence of direct manipulation actions, at a high motor and cognitive cost. We need a consistent mechanism that supports undo in multiple contexts. Dwell-and-Spring [19] uses a spring metaphor that lets users undo a variety of direct manipulations of the interface. A spring widget pops up whenever the user dwells during a press-drag-release interaction, giving her the opportunity to either cancel the current manipulation (Figure 11) or undo the last one. The technique is generic and can easily be implemented on top of existing applications to complement the traditional undo command. A controlled experiment demonstrated that users can easily discover the technique and adopt it quickly once they have discovered it.

Figure 11. Cancel scenario: The user dwells while dragging an icon (a), which pops up a spring. She either (b) catches the spring's handle and releases the mouse button to cancel the current drag and drop, causing the spring to shrink smoothly (c) and returning the cursor and icon to their original locations, or she continues dragging the spring's handle in any direction (b').
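The trigger for the spring widget is a dwell during a drag. As a rough illustration of how such a dwell could be detected from pointer samples, consider the sketch below. The 4-pixel radius, the 0.5-second threshold and the function name `first_dwell` are assumptions for this example and are not taken from [19].

```python
from math import hypot
from typing import Iterable, Optional, Tuple

Sample = Tuple[float, float, float]  # (time in seconds, x, y) during a drag


def first_dwell(samples: Iterable[Sample],
                radius: float = 4.0,
                min_duration: float = 0.5) -> Optional[float]:
    """Return the time at which a dwell is first detected, or None.

    A dwell is assumed to mean: the pointer stayed within `radius` pixels
    of an anchor position for at least `min_duration` seconds.
    Both thresholds are illustrative values.
    """
    anchor: Optional[Sample] = None
    for t, x, y in samples:
        if anchor is None or hypot(x - anchor[1], y - anchor[2]) > radius:
            # The pointer moved too far: restart the dwell timer here.
            anchor = (t, x, y)
        elif t - anchor[0] >= min_duration:
            return t
    return None


if __name__ == "__main__":
    drag = [(0.0, 0, 0), (0.1, 10, 0), (0.2, 20, 0),
            (0.3, 21, 0), (0.5, 21, 1), (0.8, 20, 1)]
    print(first_dwell(drag))  # time at which the spring would pop up
```

An application would call such a check on every pointer-move event of a press-drag-release interaction and show the spring when it first returns a time.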

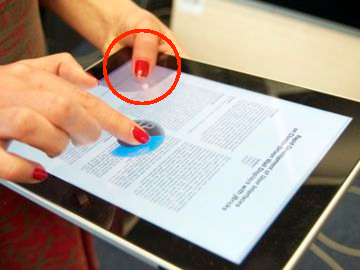

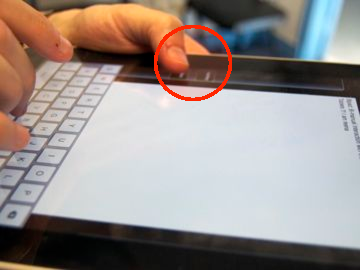

BiPad – Although bimanual interaction is common on large tabletops, it is rare on hand-held devices. We take advantage of the advanced multitouch input capabilities available on today's tablets to introduce new bimanual interaction techniques, under a variety of mobility conditions. We found that, when users hold a tablet, the primary function of the non-dominant hand is to provide support, which limits its potential movement. We studied how users “naturally” hold multi-touch tablets to identify comfortable holds, and then developed a set of 10 two-handed interaction techniques that account for the need to support the device while interacting with it. We introduced the BiTouch design space that extends Guiard's “Kinematic Chain Theory” [49] to account for the support function in bimanual interaction. We also designed and implemented the BiPad toolkit and set of interactions, which enables us to implement bimanual interaction on multitouch tablets (Figure 12). Finally, a controlled experiment demonstrated the benefits and trade-offs among specific techniques and offered insights for designing bimanual interaction on hand-held devices [31].

Figure 12. Bimanual interaction on a multitouch tablet with BiPad: (left) navigating in a document; (center) switching to uppercase while typing on a virtual keyboard; (right) zooming a map. The non-dominant hand holds the device and can perform “taps”, “gestures” or “chords” to augment the dominant hand's interactions.

Jelly Lenses – Focus+context lens-based techniques smoothly integrate two levels of detail using spatial distortion to connect the magnified region and the context. Distortion guarantees visual continuity, but causes problems of interpretation and focus targeting, partly because most techniques are based on statically-defined, regular lens shapes that result in far-from-optimal magnification and distortion (Figure 13, left and center). JellyLenses [27] dynamically adapt to the shape of the objects of interest, providing detail-in-context visualizations of higher relevance by optimizing what regions fall into the focus, context and spatially-distorted transition regions (Figure 13, right). A multi-scale visual search task experiment demonstrated that JellyLenses consistently perform better than regular fisheye lenses.

Figure 13. Magnifying the Lido in Venice. (left) a small fisheye magnifies one part of the island (Adriatic sea to Laguna Veneta), but requires extensive navigation to see the whole island in detail; (center) a large fisheye magnifies a bigger part of the island, but severely distorts almost the entire image, hiding other islands; (right) a JellyLens automatically adapts its shape to the region of interest, with as much relevant information in the focus as in (center) while better preserving context: surrounding islands are almost untouched compared to (left).

Looking behind Bezels – Using tiled monitors to build wall-sized displays has multiple advantages: higher pixel density, simpler setup and easier calibration. However, the resulting display walls suffer from the visual discontinuity caused by the bezels that frame each monitor. To avoid introducing distortion, the image has to be rendered as if some pixels were drawn behind the bezels. In turn, this raises the issue that a non-negligible part of the rendered image, which might contain important information, is visually occluded. We drew upon the analogy to French windows that is often used to describe this approach, and made the display really behave as if the visualization were observed through a French window [21]. We designed and evaluated two interaction techniques that let users reveal content hidden behind bezels. One enables users to offset the entire image through explicit touch gestures. The other adopts a more implicit approach: it makes the grid formed by bezels act like a true French window, using head tracking to simulate motion parallax and adapting to users' physical movements in front of the display. The two techniques work in both single- and multiple-user contexts.
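The motion-parallax idea can be illustrated with simple window geometry. The sketch below assumes the content lies on a virtual plane a small distance behind the bezel plane and uses similar triangles to find which content point is seen through a given point of the wall. This is one plausible model and not necessarily the one used in [21]; the `virtual_depth` parameter and the function name are hypothetical.

```python
def content_point(window_x: float, head_x: float,
                  head_distance: float, virtual_depth: float) -> float:
    """Horizontal position on the content plane seen through `window_x`.

    Assumed geometry (all lengths in the same unit, for example metres):
    the wall (bezel plane) is at depth 0, the viewer's head is
    `head_distance` in front of it, and the content plane is
    `virtual_depth` behind it. By similar triangles, the line of sight
    through `window_x` reaches the content plane at the returned position.
    """
    if head_distance <= 0:
        raise ValueError("the head must be in front of the wall")
    return window_x + (window_x - head_x) * virtual_depth / head_distance


if __name__ == "__main__":
    # A viewer 2 m from the wall looks at a point 1 m along the wall,
    # with content assumed to lie 0.1 m behind the bezels.
    for head_x in (0.0, 1.0, 2.0):
        print(head_x, content_point(1.0, head_x, 2.0, 0.1))
```

Under this model, moving the head to the right shifts the visible content to the left, so content that was hidden behind a bezel edge comes into view, much like looking around the frame of a real window.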

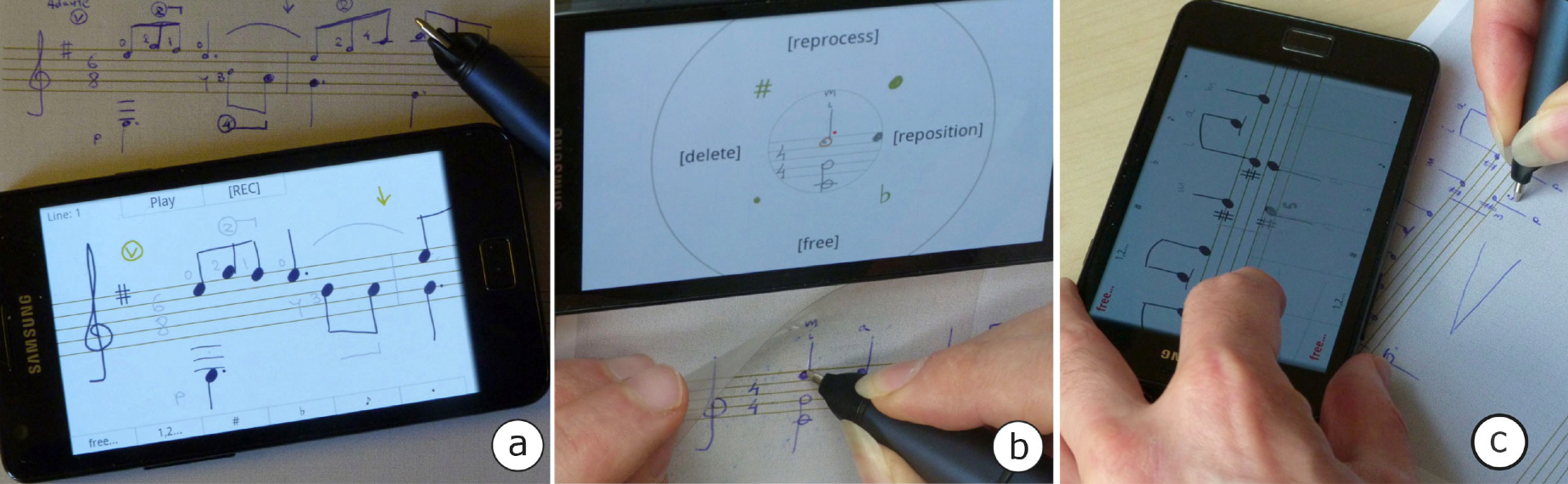

Pen-based Mobile Assistants – Digital pen technology allows easy transfer of pen data from paper to the computer. However, linking handwritten content with the digital world remains difficult, as it requires translating unstructured and highly personal vocabularies into data structured so that a computer can understand and process it. Automatic recognition can help, but is not always reliable: it requires active cooperation between users and recognition algorithms. We examined [30] the use of portable touch-screen devices in connection with pen and paper to help users direct and refine the interpretation of their strokes on paper. We explored four bimanual interaction techniques that combine touch and pen-writing, where user attention is divided between the original strokes on paper and their interpretation by the electronic device. We demonstrated these techniques through a mobile interface for writing music (Figure 14) that complements the automatic recognition with interactive user-driven interpretation. An experiment evaluated the four techniques and provided insights as to their strengths and limitations.

Figure 14. Writing music with a pen and a smartphone. (a) Handwritten score translated by the device. (b) Correcting the recognition of a note over a plastic sheet. (c) Guiding the interpretation of strokes with the left hand.

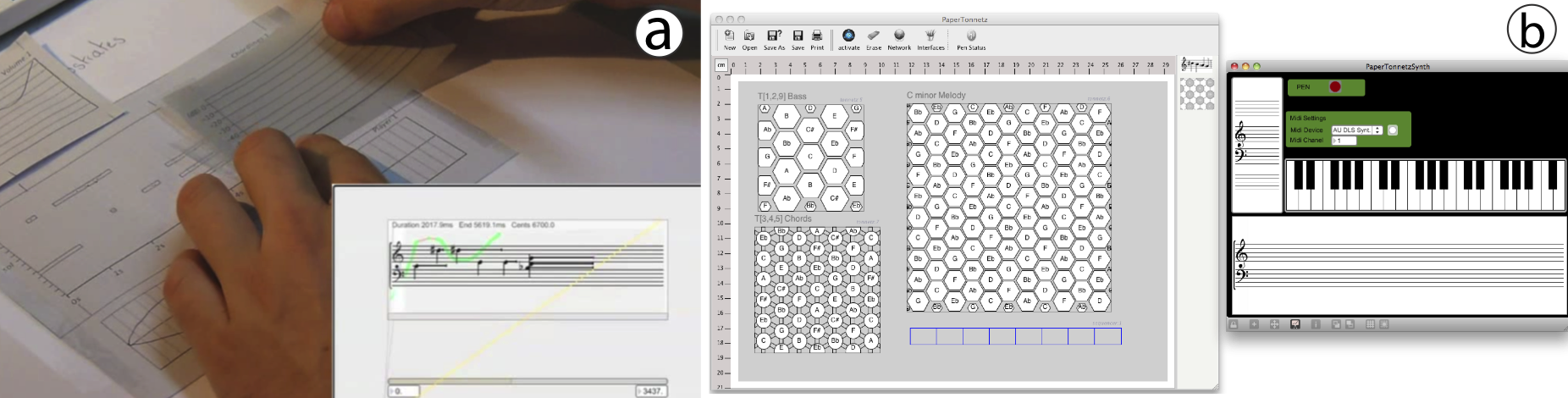

Paper Tonnetz – Tonnetz are space-based musical representations that lay out individual pitches in a regular structure. We investigated how properties of Tonnetz can be applied in the composition process, including how to represent pitch based on chords or scales and lay them out in a two-dimensional space (Figure 15). PaperTonnetz [20] is a tool that lets musicians explore and compose music with Tonnetz representations by making gestures on interactive paper, creating replayable patterns that represent pitch sequences and/or chords. An initial test in a public setting demonstrated significant differences between novice and experienced musicians and led to a revised version that explicitly supports discovering, improvising and assembling musical sequences in a Tonnetz.
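As an illustration of laying out pitches in a regular two-dimensional structure, the sketch below builds the classical Tonnetz lattice, in which one axis moves by perfect fifths (7 semitones) and the other by major thirds (4 semitones). The layouts actually offered by PaperTonnetz may differ; the function names and the default steps are choices made for this example.

```python
from typing import List

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F",
              "F#", "G", "G#", "A", "A#", "B"]


def pitch_class(col: int, row: int,
                col_step: int = 7, row_step: int = 4) -> int:
    """Pitch class (0 = C ... 11 = B) at lattice position (col, row).

    Defaults follow the classical Tonnetz: perfect fifths along columns,
    major thirds along rows. Other step sizes give other layouts.
    """
    return (col * col_step + row * row_step) % 12


def tonnetz_grid(cols: int, rows: int,
                 col_step: int = 7, row_step: int = 4) -> List[List[str]]:
    """Return a rows x cols grid of note names for the lattice."""
    return [
        [NOTE_NAMES[pitch_class(c, r, col_step, row_step)] for c in range(cols)]
        for r in range(rows)
    ]


if __name__ == "__main__":
    for line in tonnetz_grid(4, 2):
        print(" ".join(f"{name:<2}" for name in line))
```

With these steps, the notes of a major triad sit next to each other: C at (0, 0), G one step along the fifths axis and E one step along the thirds axis. This adjacency is what makes gestures over the lattice map naturally onto chords and pitch sequences.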

Paper Substrates – Our goal is to design novel interactive paper interfaces that support the creative process. We ran a series of participatory design sessions with music composers to explore the concept of “paper substrates” [23]. Substrates are ordinary pieces of paper, printed with an Anoto dot pattern, that support a variety of advanced forms of interaction (Figure 15). Each substrate is strongly typed, such as a musical score or a graph, which facilitates interpretation by the computer. The composers were able to create, manipulate and combine layers of data, rearranging them in time and space as an integral part of the creative process. Moreover, the substrates approach fully supported an iterative process in which templates can evolve and be reused, resulting in highly personal and powerful interfaces. We found that paper substrates take on different roles, serving as data containers, data filters, and selectors. The design sessions resulted in several pen interactions and tangible manipulations of paper components to support these roles: drawing and modifying specialized data over formatted paper, exploring variations by superimposing handwritten data, defining programmable modules, aligning movable substrates, linking them together, overlaying them, and archiving them into physical folders.

Figure 15. Paper-based interfaces for musical creation. (a) Paper substrates are interactive paper components for working with musical data. (b) PaperTonnetz main interface representing the virtual page with three Tonnetz and one sequencer (left). The Max/MSP patch to play and visualize created sequences (right).

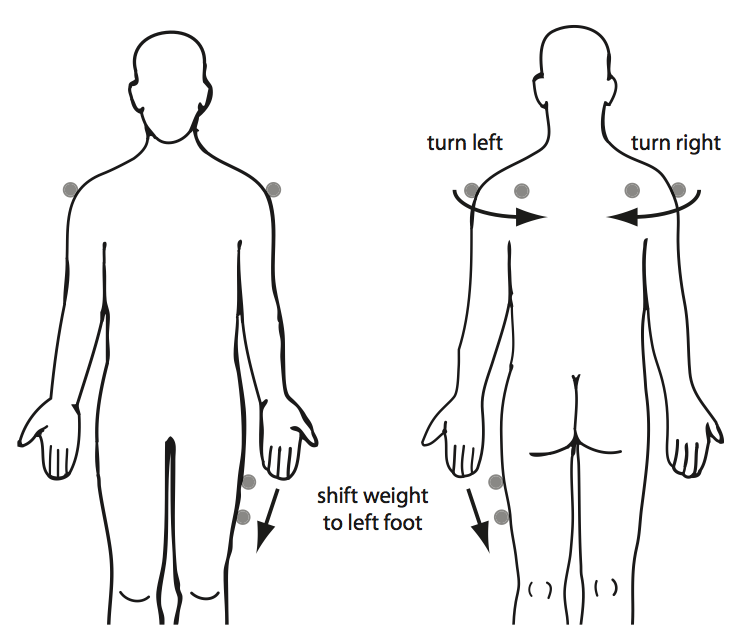

Tactile Snowboard Instructions – Beginning snowboarders have difficulty getting instructions and feedback on their performance if they are separated spatially from their coach. Snowboarders can learn correct technique by wearing a system with actuators (vibration motors) attached to the thighs and shoulders, which reminds them to shift their weight and to turn their upper body in the correct direction (Figure 16). A field study with amateur snowboarders demonstrated that these “tactile instructions” are effective for learning basic turns and offered recommendations for incorporating tactile instructions into sports training. (Best Paper award, MobileHCI'12)

Figure 16. Two vibration motors are placed at each shoulder and laterally at the thigh that points forward during the ride. Arrows illustrate the direction of the stimuli on the skin, labels show the corresponding messages.

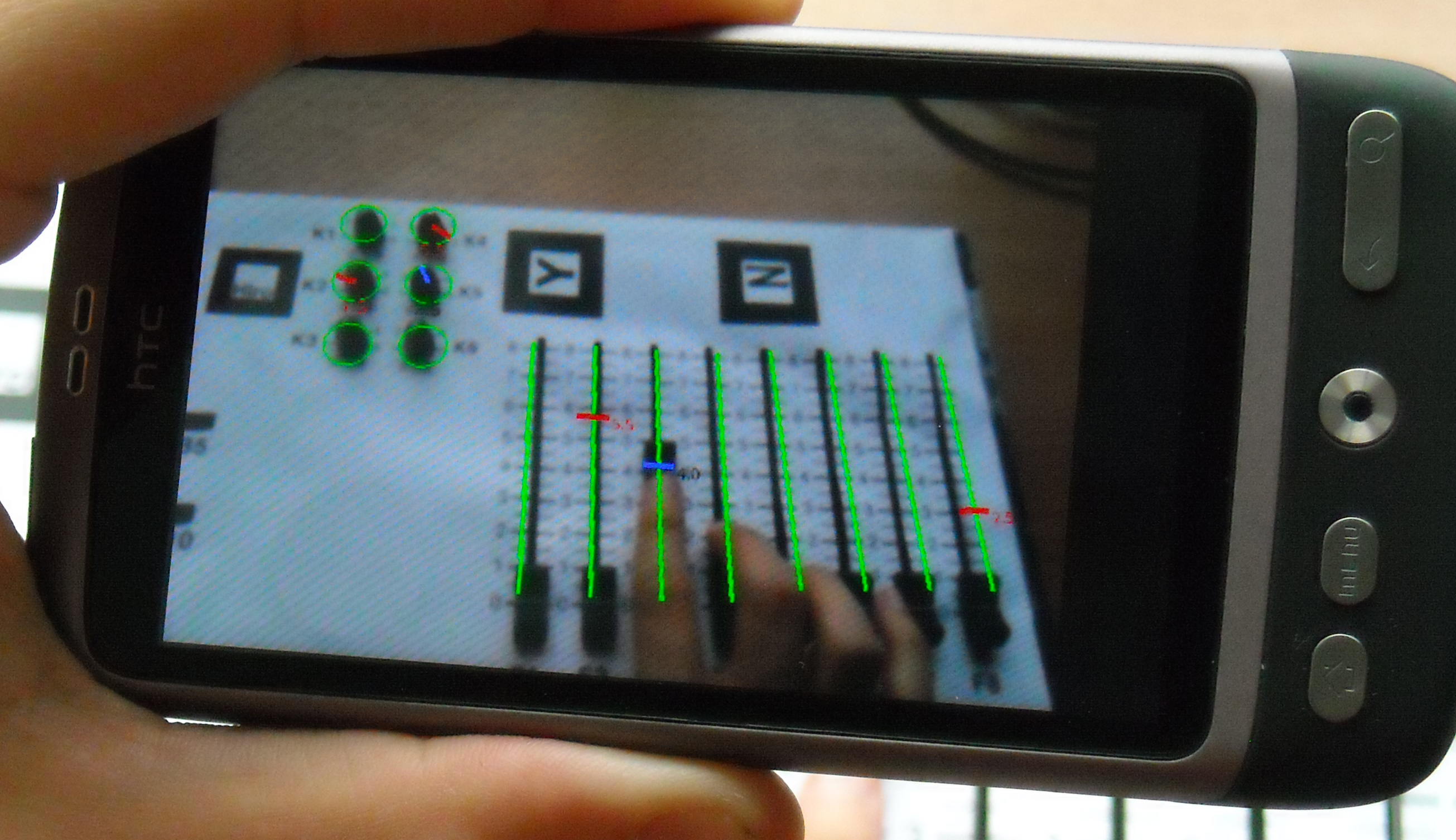

Mobile AR – We examined how new capabilities of hand-held devices, specifically higher resolution screens, camera and localization, can be used to create mobile Augmented Reality (AR) to help users learn and manage their interactions with everyday physical objects, such as door codes and home appliances. We explored AR-based mobile note-taking [50] to provide real-time on-screen feedback about physical objects that the user must manipulate, for example when entering a door code. Here, the user uses the device to identify the required values of sliders and buttons (Figure 17). A controlled experiment showed that mobile AR improved both speed and accuracy over traditional text or picture-based instructions. We also demonstrated that adding real-time feedback in the AR layer that shows the user's actions with respect to the physical controls further increases performance [25]. (Honorable Mention award, CHI 2012)

Figure 17. Mobile augmented reality for setting physical controls. Required values are displayed in red and turn blue when set correctly.

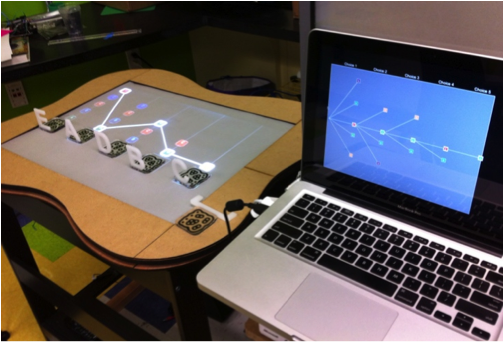

Combinatorix – We developed Combinatorix [28], a mixed tabletop system to help groups of students work collaboratively to solve probability problems. Users combine tangible objects in different orders and watch the effects of various constraints on the problem space (Figure 18). A second screen displays an abstract representation, such as a probability tree, to show how their actions influence the total number of combinations. We followed an iterative participatory design process with college students taking a combinatorics class and demonstrated the benefits of using a tangible approach to facilitate learning abstract concepts.

Figure 18. Combinatorix uses tangible objects on an interactive tabletop to control the tabletop display and associated screen, to help users explore and understand complex problems in combinatorial statistics.
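The kind of problem students explore with Combinatorix can be illustrated by brute-force enumeration: list every ordering of a few objects and count those that satisfy a constraint. The sketch below is only an illustration of that idea, not part of the Combinatorix system; the function name and the example constraints are made up for this purpose.

```python
from itertools import permutations
from typing import Callable, Sequence, Tuple

Constraint = Callable[[Tuple[str, ...]], bool]


def count_orderings(items: Sequence[str],
                    constraint: Constraint = lambda order: True) -> int:
    """Count the orderings of `items` that satisfy `constraint`.

    Brute-force enumeration, suitable only for a handful of objects.
    """
    return sum(1 for order in permutations(items) if constraint(order))


if __name__ == "__main__":
    items = ["A", "B", "C", "D"]
    total = count_orderings(items)
    a_first = count_orderings(items, lambda o: o[0] == "A")
    a_before_b = count_orderings(items, lambda o: o.index("A") < o.index("B"))
    print(total, a_first, a_before_b)
    # Probability that A comes before B in a random ordering.
    print(a_before_b / total)
```

Comparing the constrained count with the total shows how each constraint shrinks the problem space, which is the relationship that an abstract representation such as a probability tree makes visible.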