Section: Overall Objectives

Highlights of the Year


The European project HUMAVIPS – Humanoids with Auditory and Visual Abilities in Populated Spaces

HUMAVIPS (http://humavips.inrialpes.fr) is a 36-month FP7 STREP project, coordinated by Radu Horaud, that started in 2010. The project addresses the multimodal perception and cognitive issues associated with the computational development of a social robot. The ambition is to endow humanoid robots with audiovisual (AV) abilities (exploration, recognition, and interaction) so that they behave appropriately when dealing with a group of people. Research and technological developments emphasize the role played by multimodal perception within principled models of human-robot interaction and of humanoid behavior.

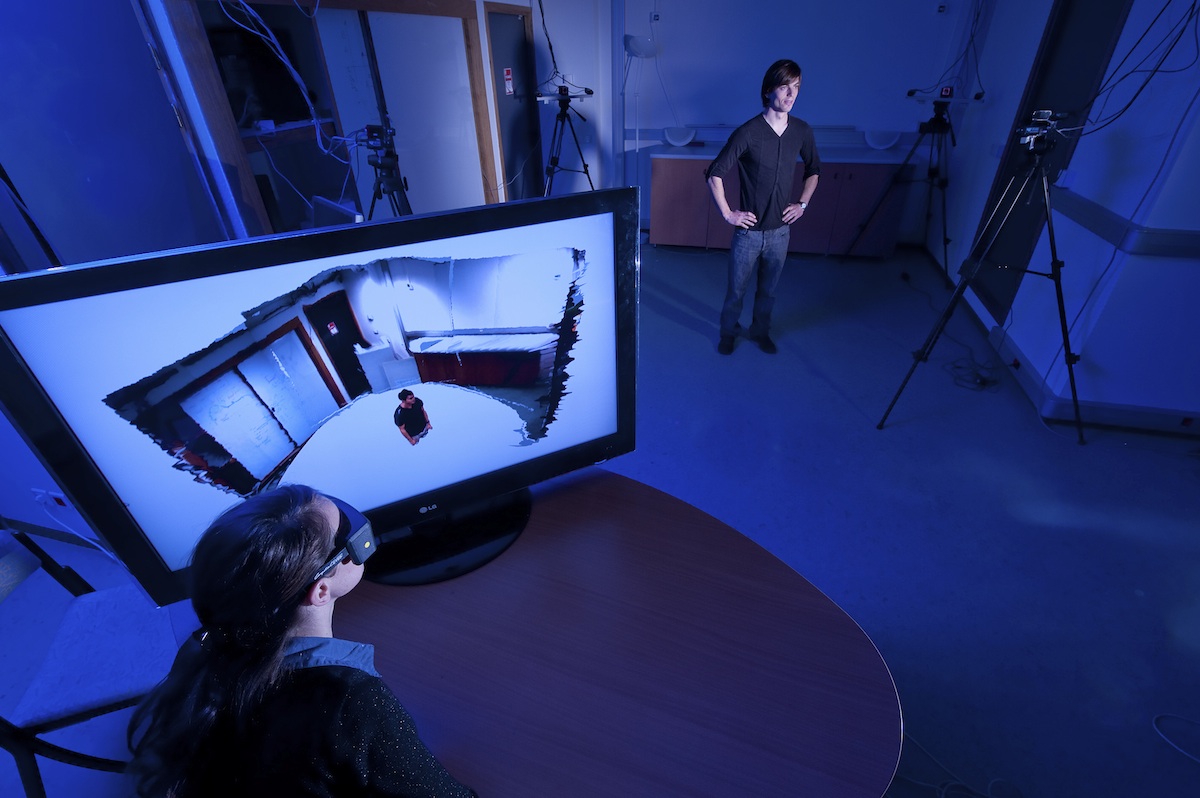

Collaboration with SAMSUNG – 3D Capturing and Modeling from Scalable Camera Configurations

A multi-year collaboration with the Samsung Advanced Institute of Technology (SAIT), Seoul, Korea, started in 2010. Within this project we are developing a methodology that combines data from several types of visual sensors (2D high-definition color cameras and 3D range cameras) in order to reconstruct an indoor scene in real time, without any constraints on background, illumination conditions, etc. In 2012 we developed a novel TOF-stereo algorithm.

Book on Time-of-Flight Cameras

A book on Time-of-Flight Cameras was published in 2012 in the collection Springer Briefs in Computer Science. The book stems from the scientific collaboration between the PERCEPTION team and SAIT, and describes a variety of recent research into time-of-flight imaging. Time-of-flight cameras are used to estimate 3D scene structure directly, in a way that complements traditional multiple-view reconstruction methods. The first two chapters of the book explain the underlying measurement principle and examine the associated sources of error and ambiguity. Chapters three and four are concerned with the geometric calibration of time-of-flight cameras, particularly when used in combination with ordinary colour cameras. The final chapter shows how to use time-of-flight data in conjunction with traditional stereo matching techniques. Together, the five chapters describe a complete depth and colour 3D reconstruction pipeline. This book will be useful to new researchers in the field of depth imaging, as well as to those who are working on systems that combine colour and time-of-flight cameras. The publisher's URL for the book is http://www.springer.com/computer/image+processing/book/978-1-4471-4657-5# .